We recently wrote a very detailed blog post about Kubernetes Ingress. It discusses the various ways to route traffic from external sources to internal services deployed in a Kubernetes cluster. It mostly covers the basic ingress options in Kubernetes, but briefly mentions Istio as a different approach.

In this post we examine Istio’s gateway functionality more thoroughly. We discuss the ingress gateway itself, which acts as the common entry point for external traffic in the cluster, we take an in-depth look at the configuration model, and we finish by talking about the advantages of using Backyards (now Cisco Service Mesh Manager), Banzai Cloud’s production-ready Istio distribution.

Doing ingress with Istio

The Kubernetes Ingress resource is relatively easy to use for a wide variety of use cases with simple HTTP traffic, but it falls short in complex scenarios, mostly because of its limited capabilities around routing rules. When doing ingress with Istio, the most obvious advantage is that you get the same level of configuration options that Istio provides for east-west traffic. Rewrites, redirects, or routes can easily be configured for various matching rules via custom resources, along with TLS termination, monitoring, tracing, and a few other handy features.

Istio can also understand Ingress resources, but using that mechanism takes away the advantages and config options that the native Istio resources provide.
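For comparison, here is a minimal sketch of what handing a plain Ingress resource to Istio might look like. It assumes a cluster that serves the networking.k8s.io/v1 API and relies on the kubernetes.io/ingress.class annotation with the value istio; the resource name, the backend service name, and the port are hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: frontpage-ingress            # hypothetical name
  namespace: backyards-demo
  annotations:
    kubernetes.io/ingress.class: istio   # asks Istio to handle this Ingress
spec:
  rules:
    - host: frontpage.demo.banzaicloud.io
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: frontpage      # assumed service name
                port:
                  number: 8080       # assumed service port
```

Note how little the Ingress schema can express: a host, a path, and a backend. Retries, rewrites, or traffic splitting have no place in it, which is why the rest of this post sticks to the native Istio resources.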

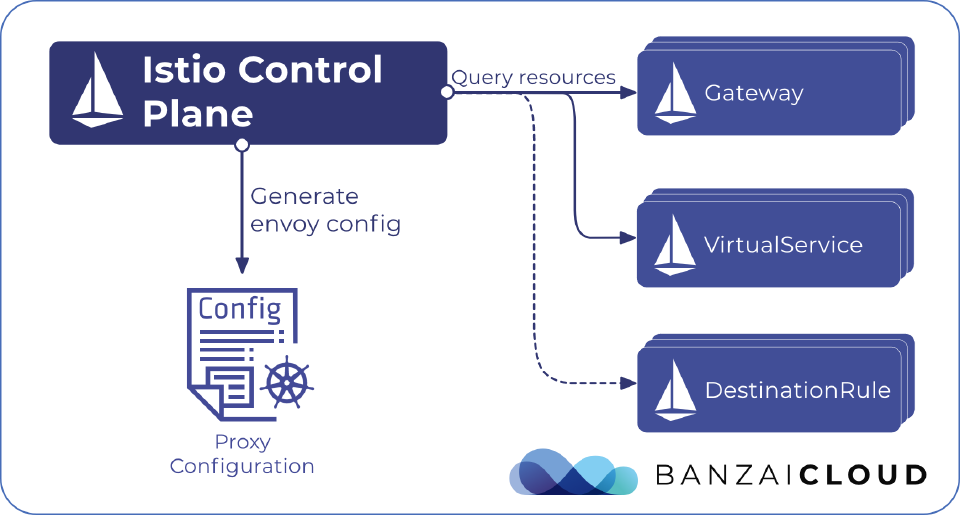

Istio offers its own configuration model, using the Gateway, VirtualService and DestinationRule custom resources.

The Istio ingress gateway

In Kubernetes Ingress, the ingress controller is responsible for watching Ingress resources and for configuring the ingress proxy. In Istio, the “controller” is basically the control plane, namely istiod. It watches the above-mentioned Kubernetes custom resources, and configures the Istio ingress proxy accordingly. Unsurprisingly, the Istio ingress proxy that handles all incoming traffic is an Envoy proxy, running in a separate deployment.

Usually it has a corresponding LoadBalancer type service, which exposes the ingress proxy through a cloud load balancer (e.g. an AWS ELB).

All external traffic gets into the cluster through this cloud load balancer that routes traffic to the Envoy proxy pods. It acts as the gateway, and traffic is routed to the corresponding internal services through the Istio rules that are configured in the CRs.

    $ kubectl get deploy -n istio-system -o wide
    NAME                   READY   UP-TO-DATE   AVAILABLE   AGE     CONTAINERS    IMAGES
    istio-ingressgateway   1/1     1            1           2d22h   istio-proxy   banzaicloud/istio-proxyv2:1.6.0-bzc

    $ kubectl get svc -n istio-system istio-ingressgateway
    NAME                   TYPE           CLUSTER-IP    EXTERNAL-IP
    istio-ingressgateway   LoadBalancer   10.10.14.96   a93a0c0f3d4b741c792a230f8ead8776-2072369349.eu-west-3.elb.amazonaws.com

The ingress service can be configured like any other service in Kubernetes. It can expose multiple ports (that’s configured in the cloud load balancer’s settings by Kubernetes), it can have a custom externalTrafficPolicy, and it isn’t even necessarily a LoadBalancer type service.
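As a rough illustration of those knobs, the service in front of the gateway could look something like the sketch below. The pod label in the selector and the targetPort values are assumptions about how the gateway deployment is set up, not values taken from the output above.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: istio-ingressgateway
  namespace: istio-system
spec:
  type: LoadBalancer                 # NodePort or ClusterIP are equally valid service types
  externalTrafficPolicy: Local       # keeps the client source IP; only nodes running a gateway pod receive traffic
  selector:
    app: istio-ingressgateway        # assumed label on the Envoy gateway pods
  ports:
    - name: http2
      port: 80
      targetPort: 8080               # assumed container port
    - name: https
      port: 443
      targetPort: 8443               # assumed container port
```

Every entry under ports becomes a listener on the cloud load balancer, so opening a new port on the gateway means touching both this service and the Gateway resource described later.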

While it’s a special use case, sometimes it makes sense to create an internal gateway, either as a common entry point for a set of services inside the cluster, or to be able to proxy a set of services together from the cluster. That’s what Backyards (now Cisco Service Mesh Manager) does when you run the backyards dashboard command: it makes Backyards, Prometheus, and Grafana all available on localhost by proxying the internal Backyards ingress gateway, which has routing rules set for these particular services.

Multiple gateways in a cluster

Even if you only have external services, sometimes it’s useful to create multiple ingress gateways. For example, in a very large cluster with thousands of services, you probably don’t want to drive all external traffic through one cloud load balancer and one deployment, but would rather shard the load horizontally. The same need can arise in smaller clusters, as in the above example of an internal ingress gateway, or when you just want a separate entry point for a separate set of services.

It can also make sense to create multiple egress gateways. Egress gateways are very similar, but instead of accepting incoming traffic, they handle traffic flowing out from the cluster. But they still consist of a Kubernetes service, and an Envoy proxy deployment.

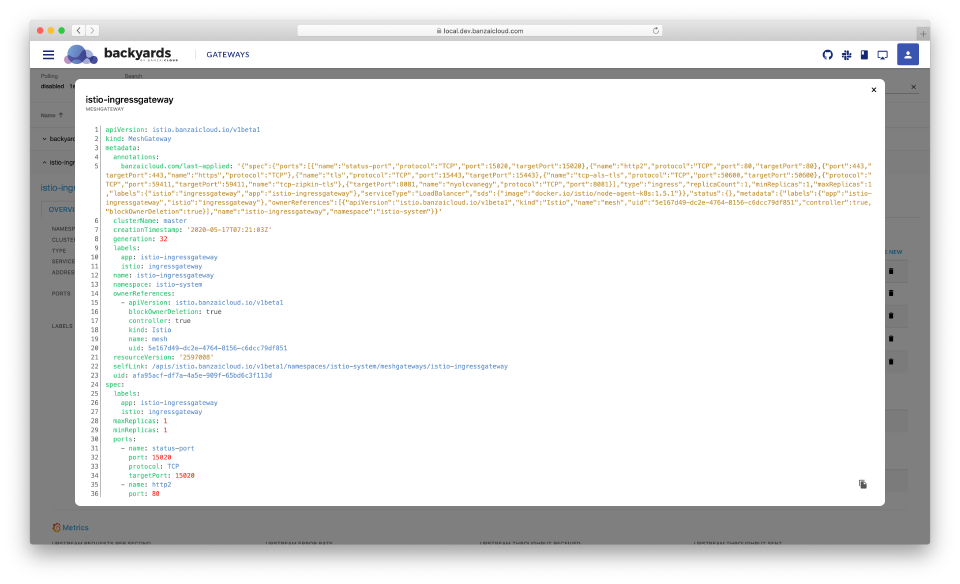

Creating multiple ingress gateways can be a daunting task with Istio. You’ll need to create the service and the deployment, and properly configure the images, environment variables and entrypoints of the Envoy containers. We’ve simplified this part by introducing a new Kubernetes custom resource, the MeshGateway. This is not a totally new concept; we wrote a post about configuring multiple Istio gateways a while back. A very simple MeshGateway looks like this:

    apiVersion: istio.banzaicloud.io/v1beta1
    kind: MeshGateway
    metadata:
      name: echo-ingress
      namespace: default
    spec:
      maxReplicas: 1
      minReplicas: 1
      ports:
        - name: http
          port: 8000
          protocol: TCP
          targetPort: 8000
      serviceType: LoadBalancer
      type: ingress

When applied, the Banzai Cloud Istio operator reconciles and configures the corresponding service and Envoy deployment.
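The internal gateway mentioned earlier could plausibly be described the same way. The sketch below reuses the fields from the example above and only swaps the service type; the resource name and port are hypothetical, and that serviceType accepts ClusterIP is an assumption based on it mirroring the standard Kubernetes service types.

```yaml
apiVersion: istio.banzaicloud.io/v1beta1
kind: MeshGateway
metadata:
  name: internal-ingress             # hypothetical name
  namespace: default
spec:
  maxReplicas: 1
  minReplicas: 1
  ports:
    - name: http
      port: 8080                     # hypothetical port
      protocol: TCP
      targetPort: 8080
  serviceType: ClusterIP             # assumed to be accepted; no cloud load balancer is created
  type: ingress
```

With a ClusterIP service the gateway is only reachable from inside the cluster (or through a port-forward), which is the property an internal entry point needs.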

Configuration model - Gateway

Like everything else in Istio, the gateway configuration is declarative and based on Kubernetes custom resources.

The Gateway resource describes a load balancer operating at the edge of the mesh. It contains the ports where the Envoy proxy should listen and their configuration: the protocol that’s used, the hosts that are accepted, and the TLS configuration. Along with this config, there’s also a label selector in the gateway that specifies which particular proxy (deployment) this configuration belongs to (see multiple gateways above).

This is what a typical gateway configuration looks like for a host with simple TLS and HTTPS redirect enabled:

    apiVersion: networking.istio.io/v1beta1
    kind: Gateway
    metadata:
      name: example-gateway
      namespace: example
    spec:
      selector:
        app: example-gw-deployment
      servers:
        - port:
            number: 80
            name: http
            protocol: HTTP
          hosts:
            - frontpage.demo.banzaicloud.io
          tls:
            httpsRedirect: true
        - port:
            number: 443
            name: https-443
            protocol: HTTPS
          hosts:
            - frontpage.demo.banzaicloud.io
          tls:
            mode: SIMPLE
            credentialName: example-secret

The above example is quite straightforward, but contains a few interesting details. TLS mode SIMPLE means that it’s a plain old TLS connection, and the related credentialName refers to a Kubernetes secret (preferably, though not necessarily, of type kubernetes.io/tls). It’s the simplest way of setting up TLS, but Istio gives a lot more options. Mode can be SIMPLE, MUTUAL, PASSTHROUGH, AUTO_PASSTHROUGH or ISTIO_MUTUAL.

For example PASSTHROUGH can be used when you don’t want to terminate the TLS connection at the gateway, but at the internal service in the cluster. In that case the SNI string presented by the client will be used as the match criterion in a VirtualService TLS route to determine the destination service. You can learn more about these options and their configuration in the docs.
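A minimal sketch of the PASSTHROUGH case might look like the pair of resources below. The host secure.demo.banzaicloud.io, the backend service, its port, and both resource names are hypothetical; the selector is borrowed from the earlier Gateway example.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: passthrough-gateway          # hypothetical name
  namespace: example
spec:
  selector:
    app: example-gw-deployment
  servers:
    - port:
        number: 443
        name: https-passthrough
        protocol: HTTPS
      hosts:
        - secure.demo.banzaicloud.io # hypothetical host
      tls:
        mode: PASSTHROUGH            # the gateway does not terminate TLS
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: secure-route                 # hypothetical name
  namespace: example
spec:
  gateways:
    - example/passthrough-gateway
  hosts:
    - secure.demo.banzaicloud.io
  tls:
    - match:
        - port: 443
          sniHosts:                  # matched against the SNI sent by the client
            - secure.demo.banzaicloud.io
      route:
        - destination:
            host: secure-backend.example.svc.cluster.local   # hypothetical service that terminates TLS itself
            port:
              number: 8443           # hypothetical port
```

Because the gateway never decrypts the traffic, only the tls section of the VirtualService applies here; HTTP-level features such as path matching or header rewrites are not available for this host.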

Another interesting thing to know is that multiple Gateway resources can be used to configure the same ingress gateway. If these Gateway resources hold different port configs, or the same ports, but without overlapping hosts, these are merged by Istio.
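To illustrate the merge, here is a sketch of a second Gateway that targets the same proxy as example-gateway. The resource name, host, and secret name are hypothetical; the selector and port number are taken from the earlier example.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: catalog-gateway              # hypothetical name
  namespace: example
spec:
  selector:
    app: example-gw-deployment       # same proxy as example-gateway
  servers:
    - port:
        number: 443                  # same port as in example-gateway
        name: https-catalog
        protocol: HTTPS
      hosts:
        - catalog.demo.banzaicloud.io    # hypothetical host, does not overlap with frontpage
      tls:
        mode: SIMPLE
        credentialName: catalog-secret   # hypothetical secret
```

Splitting the configuration like this lets different teams own the Gateway resource for their own hosts while still sharing a single Envoy deployment and load balancer.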

Terminology: it can be confusing that lots of different things are called gateway, or have gateway in their names.

- When talking about the ingress gateway itself, it usually means a LoadBalancer type service, and a corresponding Envoy proxy deployment

- A MeshGateway is the custom resource that declaratively describes an ingress gateway

- A Gateway is a custom resource that describes the port configuration of a particular ingress gateway

- The same terminology applies to egress gateways as well

Configuration model - VirtualService

VirtualService defines a set of traffic routing rules to apply when a host is addressed on a particular gateway. It’s one of the most complicated custom resources in terms of configuration knobs, so we won’t describe the complete reference now (that would fill its own post - maybe someday we’ll write The Complete Guide to Istio Virtual Services). But let’s see what a VirtualService describes, along with a basic example of how to use it with Gateways.

While the Gateway resource implements the first part of exposing an internal service through an ingress gateway (port, host, TLS), a VirtualService is responsible for the second part: it describes the routing rules of requests flowing through a specific Gateway. More specifically, a VirtualService rule is built up from three parts (at least when we talk about HTTP):

- which requests are matched?

- where should these requests be routed?

- what other actions are applied for these requests?

Let’s take a look at an example VirtualService, that’s connected to our Gateway example:

    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: frontpage-route
      namespace: backyards-demo
    spec:
      gateways:
        - example/example-gateway
      hosts:
        - frontpage.demo.banzaicloud.io
      http:
        - route:
            - destination:
                host: frontpage.backyards-demo.svc.cluster.local

The above declaration is pretty easy to follow. It says that requests to the example-gateway Gateway (in the example namespace) with the host frontpage.demo.banzaicloud.io should be routed to the frontpage service in the backyards-demo namespace. Or if we want to answer the above questions:

- all requests are matched (the match section is empty)

- to the frontpage service in the backyards-demo namespace

- no other actions are specified

This is a very basic example, but what makes a VirtualService config pretty hard to comprehend is the vast number of options for setting up routing rules. Just to name a few:

- Multiple hosts can be defined in one VirtualService, and they can overlap with other VirtualServices.

- Hosts can be wildcards, or can contain wildcard prefixes.

- Multiple gateways can be defined in one VirtualService, and they can overlap with other VirtualServices.

- When the magic word mesh is included in the gateways section, it means that the configuration is valid for every sidecar in the mesh, not only for gateways.

- There are different sections for http, tcp and tls rules, and all of these are lists of routing rules.

- Every routing rule has a match section (except when it doesn’t - it means match any request), that is again a list where the elements have an OR relation, but every element can contain several filters (like uri, or headers) that have AND relations.

- Every routing rule has a route section (except when it doesn’t - then it needs a redirect or a delegate section), that is again a list of destinations from the Istio service registry.

- The order of routing rules is important, because these are evaluated from top to bottom: it’s pretty easy to shadow specific rules with a broader match section.

- There are lots of actions that can be applied to a route along with routes or redirects: rewrite, retries, timeout, fault, mirror, corsPolicy, headers. All of these have different configuration options, and not all can be used with both route and redirect.

- Multiple VirtualServices can be defined for the same (or overlapping) gateway/host pair. In other words, rules for a particular gateway and host can be split in two different CRs. In that case Istio is doing a merge when building the Envoy config, but cross-resource order of evaluation is undefined.
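A few of the options listed above can be seen together in the sketch below. It is only an illustration: the resource name, the paths, the x-canary header, and the timeout and retry values are hypothetical, while the gateway, host, and destination come from the earlier example.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: frontpage-advanced-route     # hypothetical name
  namespace: backyards-demo
spec:
  gateways:
    - example/example-gateway
  hosts:
    - frontpage.demo.banzaicloud.io
  http:
    # Rule 1: a specific rule, listed first so the catch-all at the bottom cannot shadow it.
    - match:
        # First element: uri AND headers must both match ...
        - uri:
            prefix: /api/v2          # hypothetical path
          headers:
            x-canary:                # hypothetical header
              exact: "true"
        # ... OR second element: this uri prefix on its own.
        - uri:
            prefix: /beta            # hypothetical path
      rewrite:
        uri: /                       # replaces the matched prefix
      timeout: 5s
      retries:
        attempts: 3
        perTryTimeout: 2s
      route:
        - destination:
            host: frontpage.backyards-demo.svc.cluster.local
    # Rule 2: a rule with a redirect instead of a route.
    - match:
        - uri:
            exact: /old              # hypothetical path
      redirect:
        uri: /
    # Rule 3: no match section, so it matches everything that reached this point.
    - route:
        - destination:
            host: frontpage.backyards-demo.svc.cluster.local
```

Moving rule 3 to the top would silently disable the other two, which is the shadowing problem mentioned above. Keeping all rules for a gateway/host pair in one resource, as here, also avoids the undefined cross-resource ordering.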

To route traffic through an Istio ingress gateway’s port to an internal service, you’ll need at least one Gateway and one VirtualService in your cluster.

Configuration model - DestinationRule

A DestinationRule defines policies that apply to traffic intended for a service, after routing has occurred. These rules specify load balancing configurations, connection pool sizes from the sidecar, and outlier detection settings to detect and evict unhealthy hosts from the load balancing pool.

Version specific policies can be specified by defining a named subset and overriding the settings specified at the service level.

It’s not necessary to create a DestinationRule to expose an internal service through an Istio ingress gateway, but it contains useful configuration options when working in a production environment.

    apiVersion: networking.istio.io/v1beta1
    kind: DestinationRule
    metadata:
      name: frontpage-dr
    spec:
      host: frontpage.backyards-demo.svc.cluster.local
      trafficPolicy:
        loadBalancer:
          simple: LEAST_CONN
        connectionPool:
          tcp:
            maxConnections: 100
          http:
            http2MaxRequests: 1000
            maxRequestsPerConnection: 10
      subsets:
        - name: v2
          labels:
            version: v2
          trafficPolicy:
            loadBalancer:
              simple: ROUND_ROBIN
        - name: v1
          labels:
            version: v1

The above example sets up two different subsets based on label selectors, configures a global loadBalancer policy for the frontpage service, but overrides it for the v2 version. It also sets up a connection pool for circuit breaking.
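Subsets only take effect once a routing rule refers to them. As a sketch, a VirtualService could split gateway traffic between the two subsets like this; the resource name and the 90/10 weights are hypothetical, and in practice such a rule would more likely replace the earlier frontpage-route than live next to it.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: frontpage-canary-route       # hypothetical name
  namespace: backyards-demo
spec:
  gateways:
    - example/example-gateway
  hosts:
    - frontpage.demo.banzaicloud.io
  http:
    - route:
        - destination:
            host: frontpage.backyards-demo.svc.cluster.local
            subset: v1               # subset name defined in the DestinationRule
          weight: 90                 # hypothetical split
        - destination:
            host: frontpage.backyards-demo.svc.cluster.local
            subset: v2
          weight: 10
```

Requests routed to the v2 subset would be balanced with ROUND_ROBIN, while those going to v1 fall back to the service-level LEAST_CONN policy.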

A production setup of a service exposed through an Istio ingress gateway consists of a Gateway with TLS settings, one or more VirtualServices with a complex set of routing rules, and one or more DestinationRules with fine-tuned circuit breaking and outlier detection configs.
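For completeness, outlier detection could be sketched as follows. All thresholds and the resource name are hypothetical and would need tuning for a real workload; in a real setup these settings would more likely be added to the existing rule for the host than kept in a second resource.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: frontpage-outlier-dr         # hypothetical name
  namespace: backyards-demo
spec:
  host: frontpage.backyards-demo.svc.cluster.local
  trafficPolicy:
    connectionPool:
      http:
        http1MaxPendingRequests: 50  # hypothetical limit on queued requests
    outlierDetection:
      consecutive5xxErrors: 5        # eject a host after 5 consecutive 5xx responses
      interval: 10s                  # how often hosts are analyzed
      baseEjectionTime: 30s          # minimum time a host stays ejected
      maxEjectionPercent: 50         # never eject more than half of the pool
```

Capping maxEjectionPercent keeps part of the pool in rotation even when many hosts are misbehaving, which avoids turning a partial outage into a complete one.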

What does Backyards (now Cisco Service Mesh Manager) add to the mix?

Istio has a very powerful and flexible model for setting up ingress gateways, but it’s like a Lego set: you need to put the pieces together manually to get a production-ready setup. You need to set up Istio, then install and configure monitoring tools like Prometheus and Grafana to at least be able to follow what’s happening with your gateways. It’s also not easy to follow or debug complex VirtualService rules with hundreds of lines of YAML. Things get even more complicated if you want proper certificate management for your gateways.

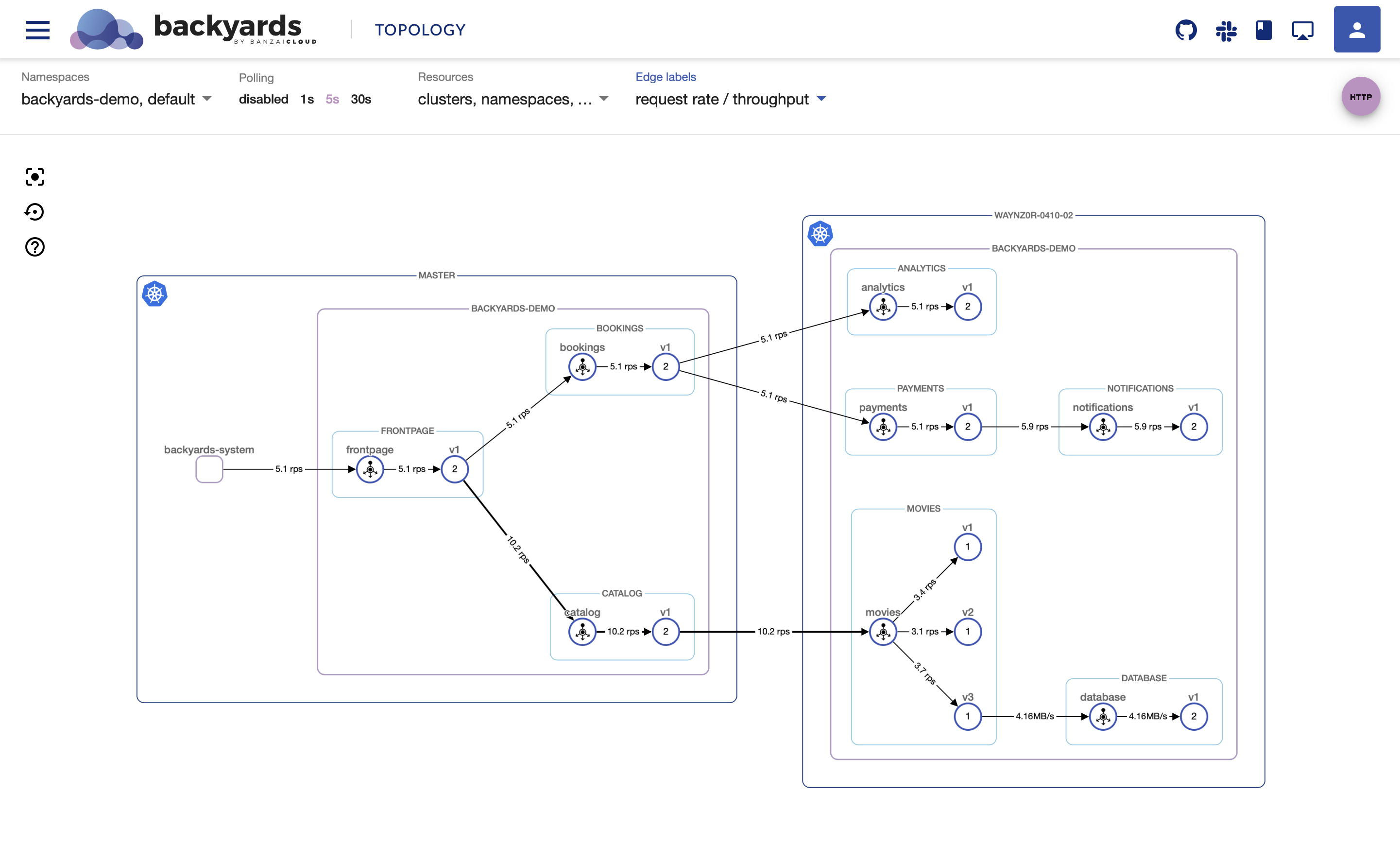

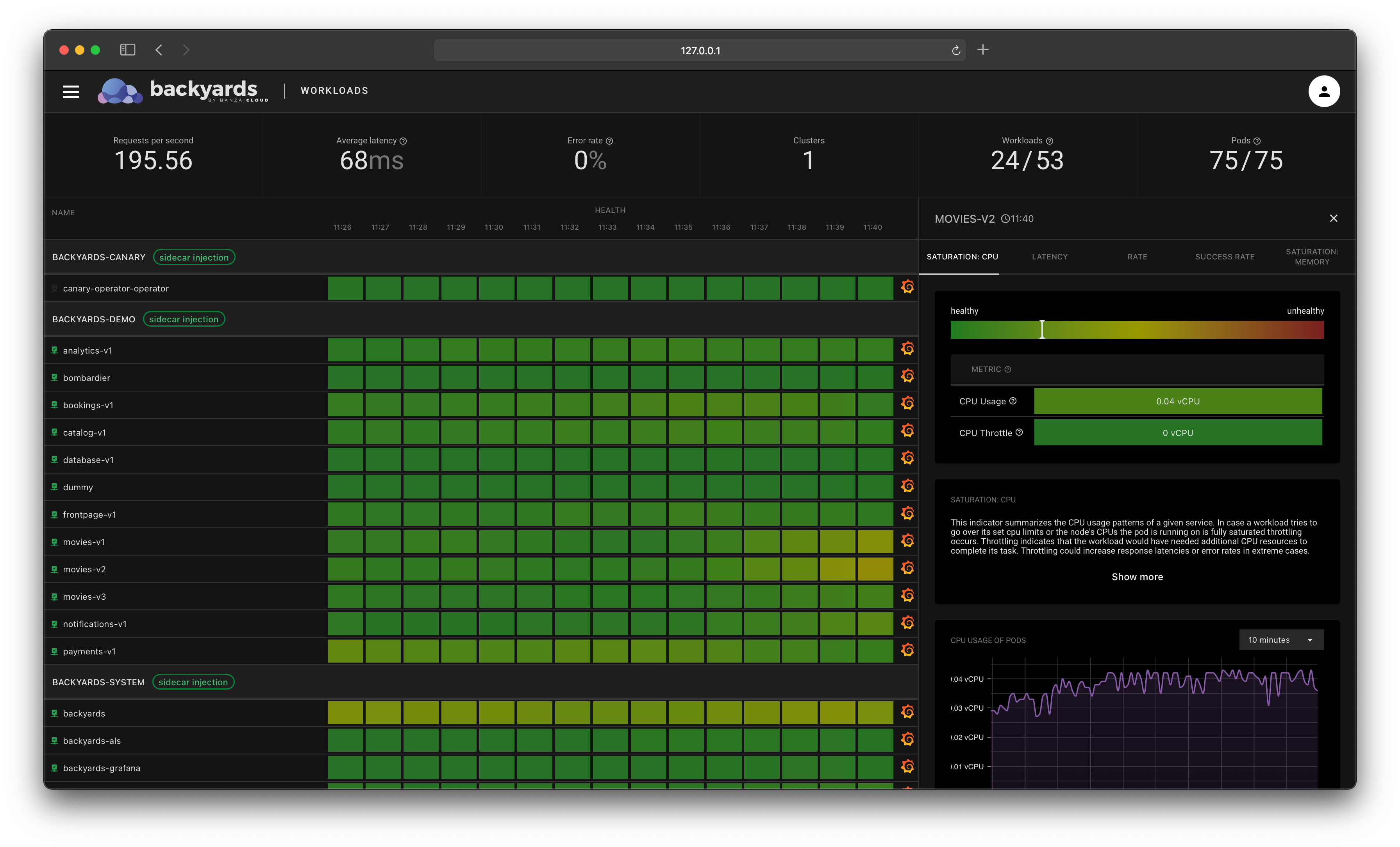

Backyards (now Cisco Service Mesh Manager) tries to tackle these challenges by giving you a complete, but slightly opinionated distribution of Istio. It will build and manage an environment of Istio, Prometheus, Grafana, Cert-manager and a nice dashboard to follow what’s happening within your mesh, and to ease daily work with Istio.

Istio doesn’t lag too far behind API Gateway solutions in terms of feature completeness, but lacks most of their convenience features. If you want to avoid having yet another product alongside Istio to handle north-south traffic in your cluster, Istio could work just as well. And if the complexity scares you off, Backyards (now Cisco Service Mesh Manager) could be a great fit. It’s entirely compatible with upstream Istio, but packages some of the Lego blocks together to deliver a better user experience.

You can think of it as a lightweight API gateway, built purely on Istio primitives. It doesn’t bring convenience features like JWT authentication or rate limiting for now, but with the help of Envoy WASM extensions, it remains fully customizable, and we’re already working on some of these features to be included in the near future.

To get started with Backyards, follow the quickstart docs.

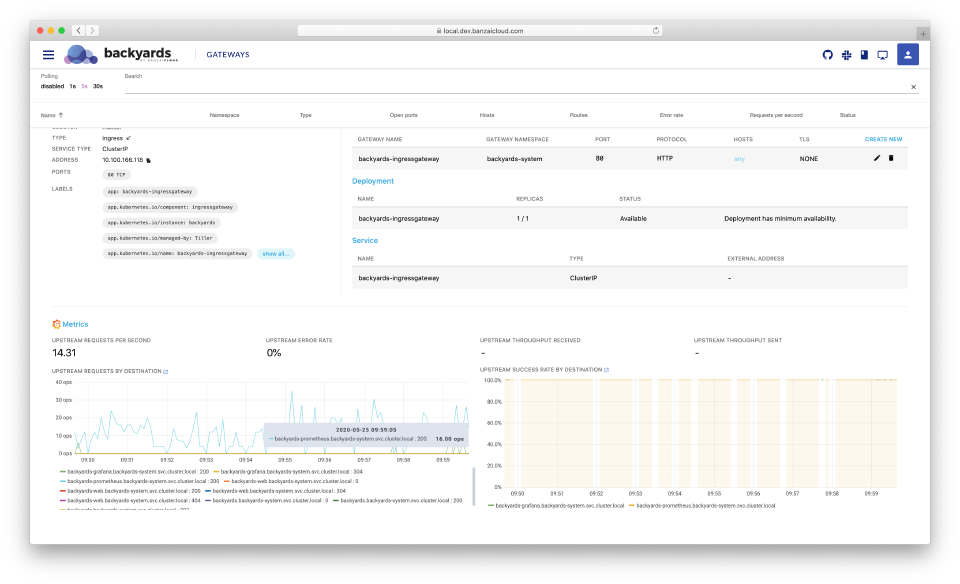

Out-of-the-box monitoring of upstream traffic

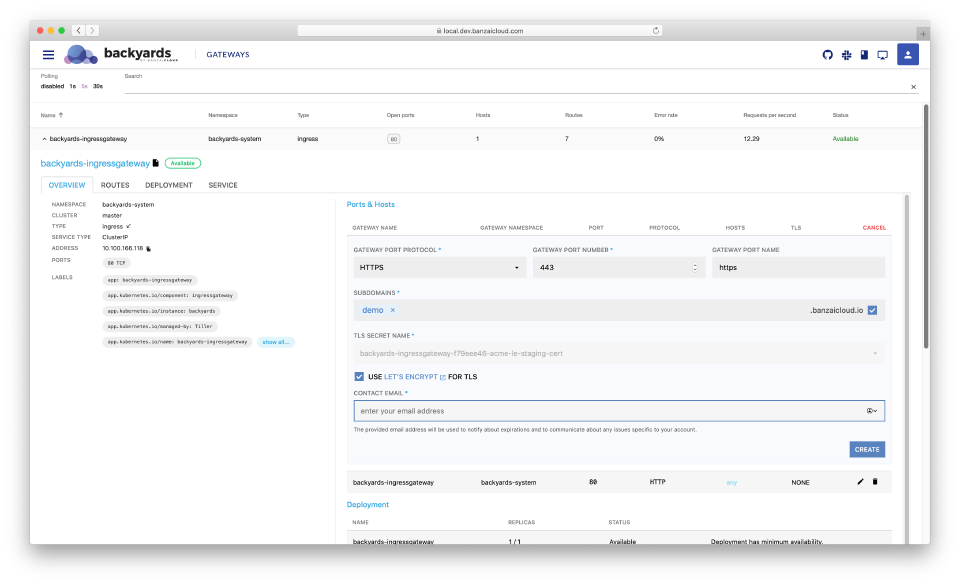

Backyards (now Cisco Service Mesh Manager) collects upstream metrics like latencies, throughput, RPS, or error rate from Prometheus, and provides a summary for each gateway. It also sets up a Grafana dashboard and displays appropriate charts in-place.

Manage port and host configurations

Backyards (now Cisco Service Mesh Manager) understands Istio’s Gateway resources and the gateway’s service configuration in Kubernetes, so it can display information about ports, hosts and protocols that are configured on a specific gateway. It’s basically a human-readable visual representation of the Istio configuration. You can also set up new ports, and Backyards will translate your configuration to custom resources. The YAML representation is also easily accessible from the UI.

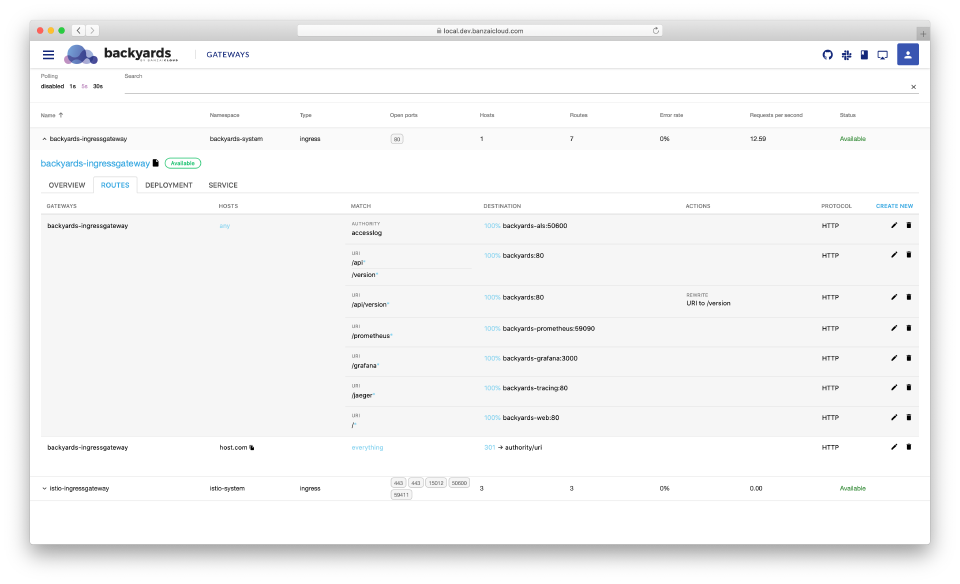

Routing configuration

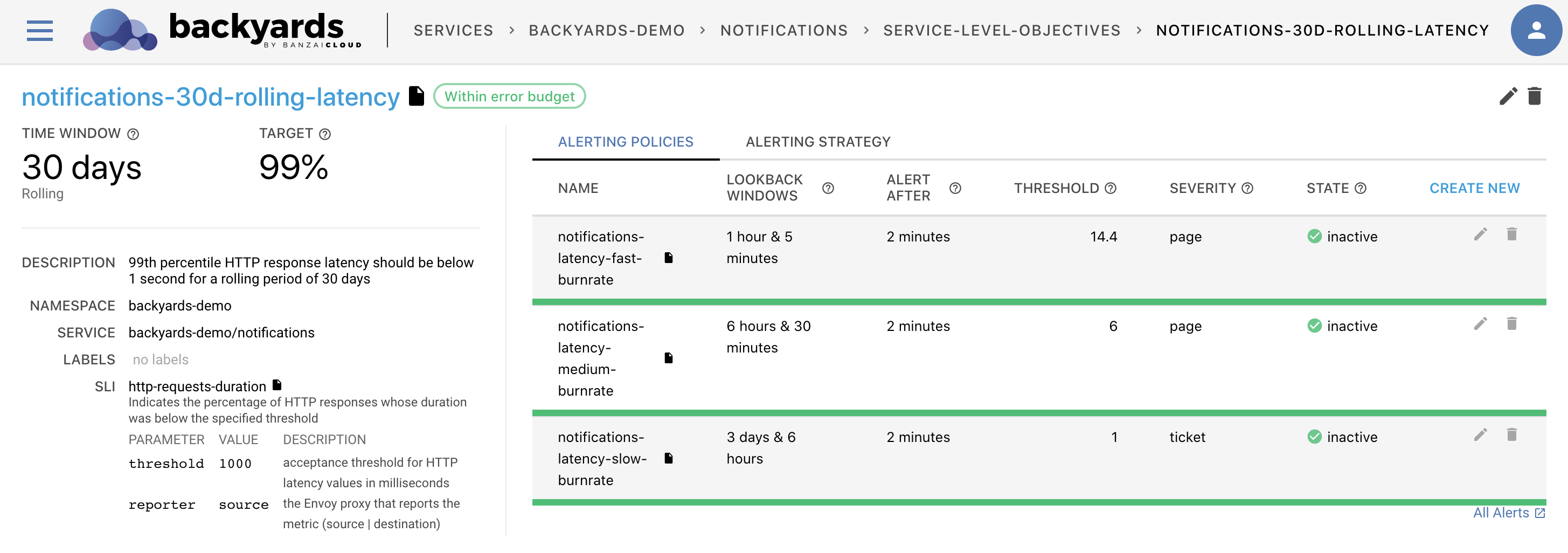

Routing of incoming traffic is done through Istio VirtualServices. We’ve already talked a lot about the powerful feature set that they bring to the table, and also the complexity that comes along with it.

Backyards (now Cisco Service Mesh Manager) displays routes and their related configuration on the gateway management page. It is able to understand complex scenarios, displays them in an easily processable format, and does validations. It makes it easy to overview complicated setups, and to find misconfigurations.

It also gives you the ability to configure routing rules. As with port configurations, it translates the inputs to Istio custom resources (mainly VirtualServices), then validates and applies them to the cluster. Because there is no additional abstraction layer on top of these resources, it’s easy to reuse the generated YAML in CI/CD or GitOps scenarios as well.

TLS configuration

When setting up a service on a gateway with TLS, you need to configure a certificate for the host(s). You can do that by bringing your own certificate, putting it in a Kubernetes secret, and configuring it for a gateway server. This works for simple use cases, but involves lots of manual steps when obtaining or renewing a certificate. The Automatic Certificate Management Environment (ACME) protocol automates these kinds of interactions with the certificate provider.

ACME is most widely used with Let’s Encrypt and - when in a Kubernetes environment - cert-manager. Backyards (now Cisco Service Mesh Manager) helps you set up cert-manager, and you can quickly obtain a valid Let’s Encrypt certificate through the dashboard with a few clicks - even with an automatically generated banzaicloud.io domain if you’d like!
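Done by hand, the cert-manager side of this could look roughly like the Certificate below. It assumes cert-manager is installed with the cert-manager.io/v1 API and that an ACME ClusterIssuer already exists; the issuer name, the resource name, and the namespace are hypothetical, while the secret name matches the credentialName used in the Gateway example.

```yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: frontpage-cert               # hypothetical name
  namespace: istio-system            # assumed: the namespace where the ingress gateway proxy runs
spec:
  secretName: example-secret         # the secret referenced by credentialName in the Gateway
  dnsNames:
    - frontpage.demo.banzaicloud.io
  issuerRef:
    name: letsencrypt-prod           # hypothetical ClusterIssuer configured for Let's Encrypt
    kind: ClusterIssuer
```

cert-manager then requests the certificate, stores it in the named secret, and renews it before expiry, so the Gateway picks up fresh credentials without manual steps.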

Note: Backyards (now Cisco Service Mesh Manager) runs an additional controller to work around cert-manager problems with Istio. There is a community request to support VirtualService resources for HTTP01 solving, which would give cert-manager better Istio support, and we plan to push our work upstream.

tl;dr

A service mesh is mainly responsible for handling east-west traffic in a cluster, but Istio extends the basic service mesh functionality with ingress and egress capabilities. It gives the user powerful options for setting up routing and traffic control on the edge of the mesh, but does so in a complex fashion that may not suit the needs of all users. Backyards (now Cisco Service Mesh Manager) combines Istio’s strong feature set with an API Gateway’s user experience, which makes it a viable option if you don’t want to have yet another product for handling north-south traffic in your clusters.

Check out Backyards in action on your own clusters!

Want to know more? Get in touch with us, or delve into the details of the latest release.

Or just take a look at some of the Istio features that Backyards automates and simplifies for you, and which we’ve already blogged about.