The content of this page hasn't been updated for years and might refer to discontinued products and projects.

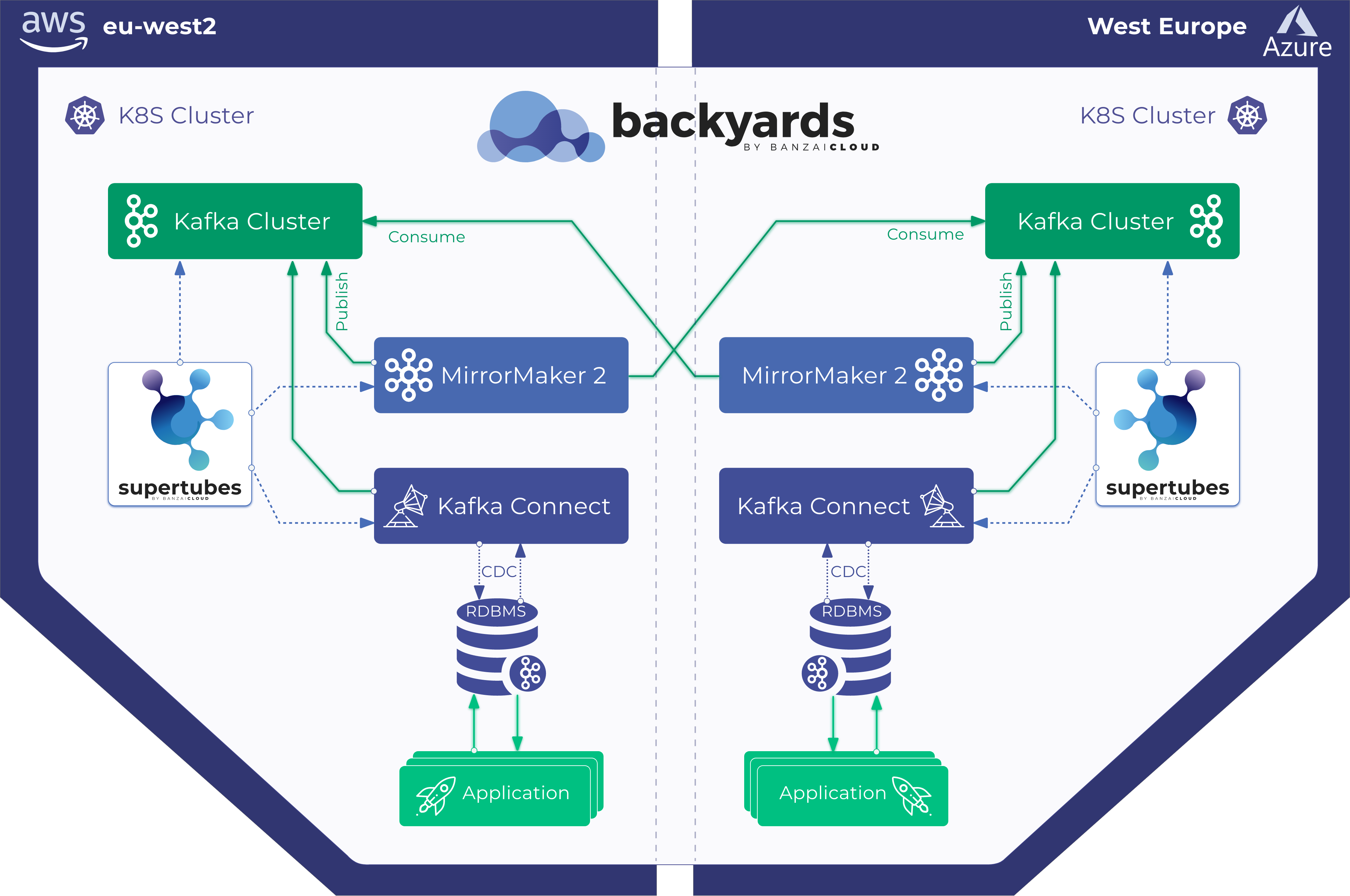

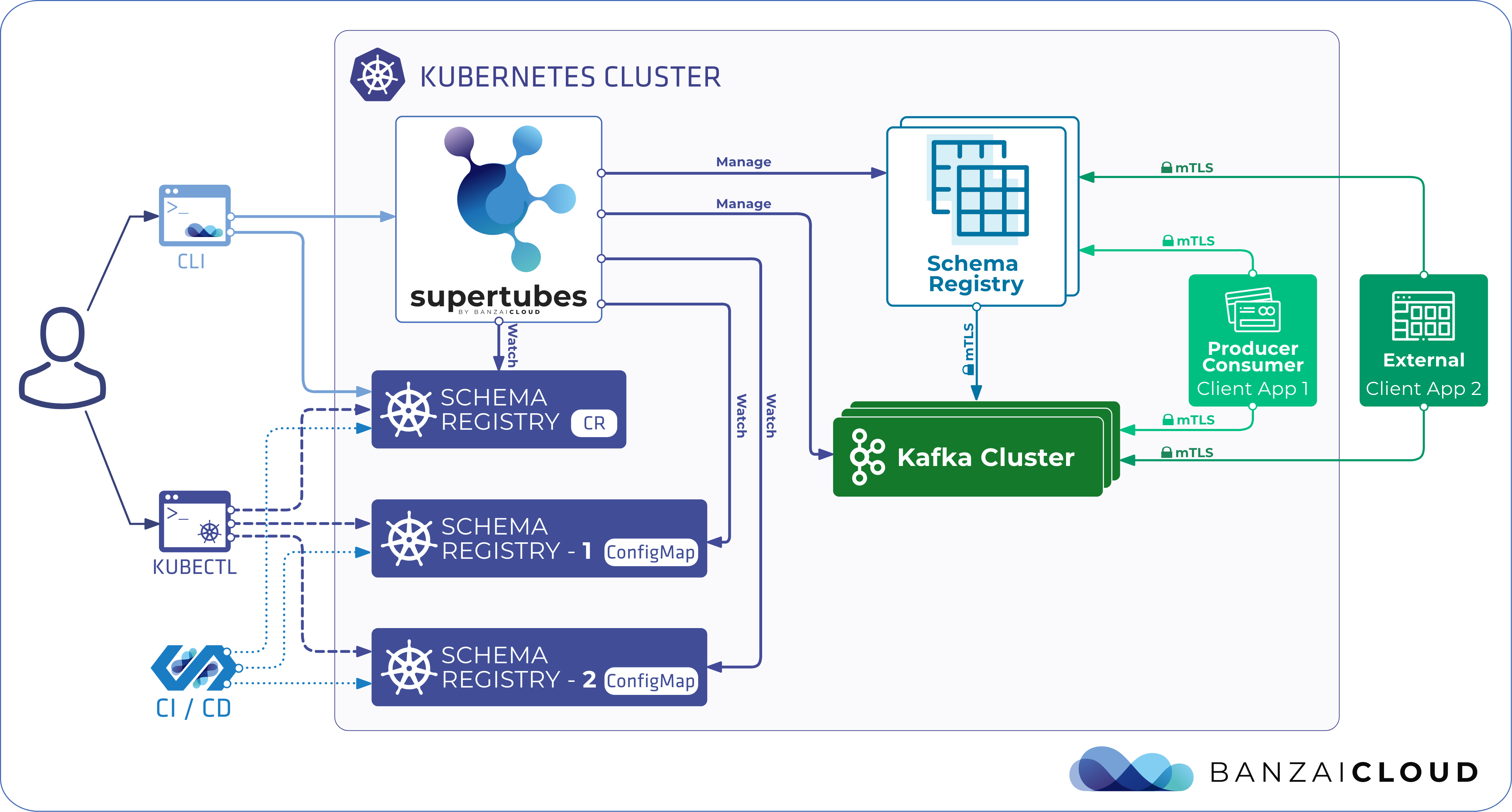

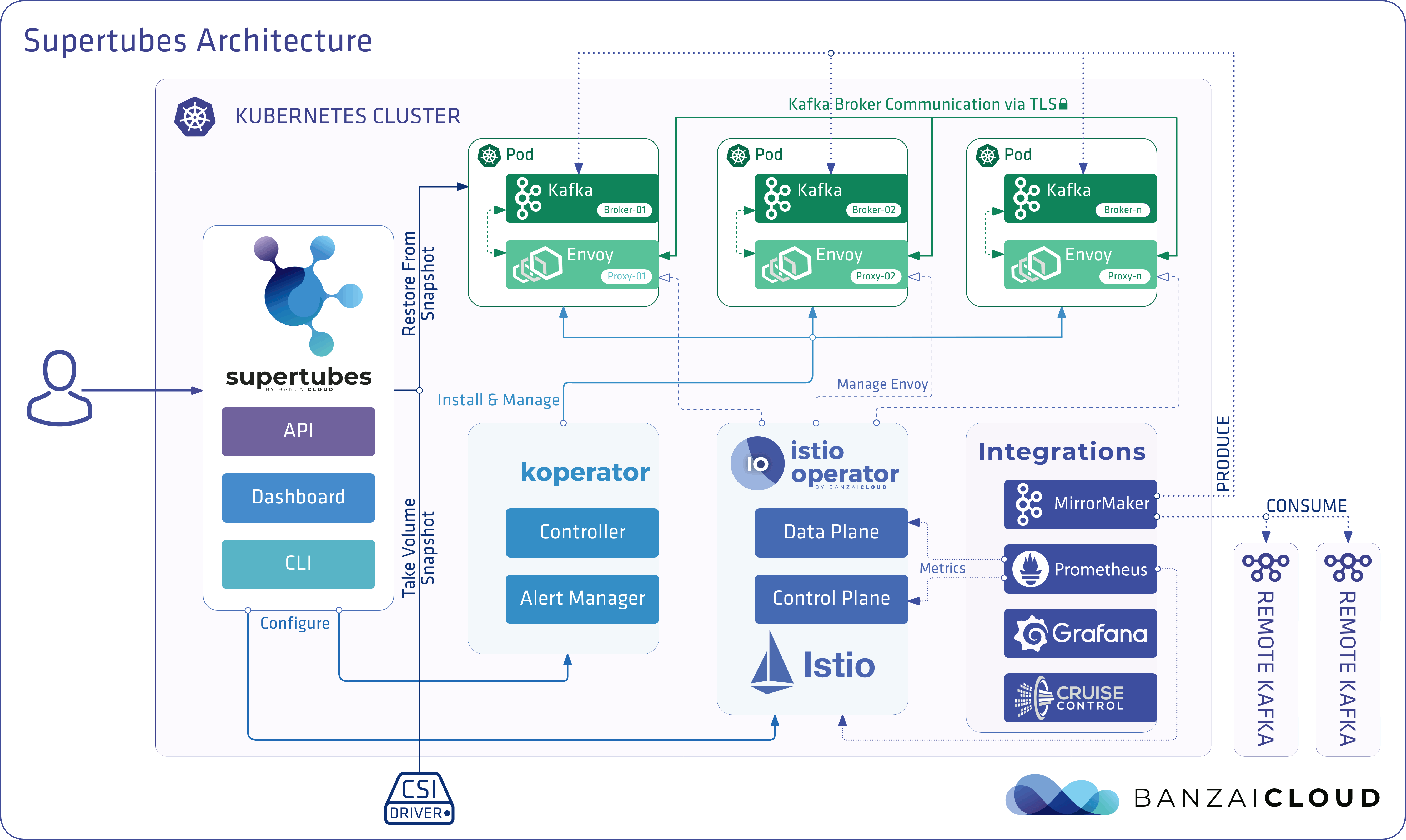

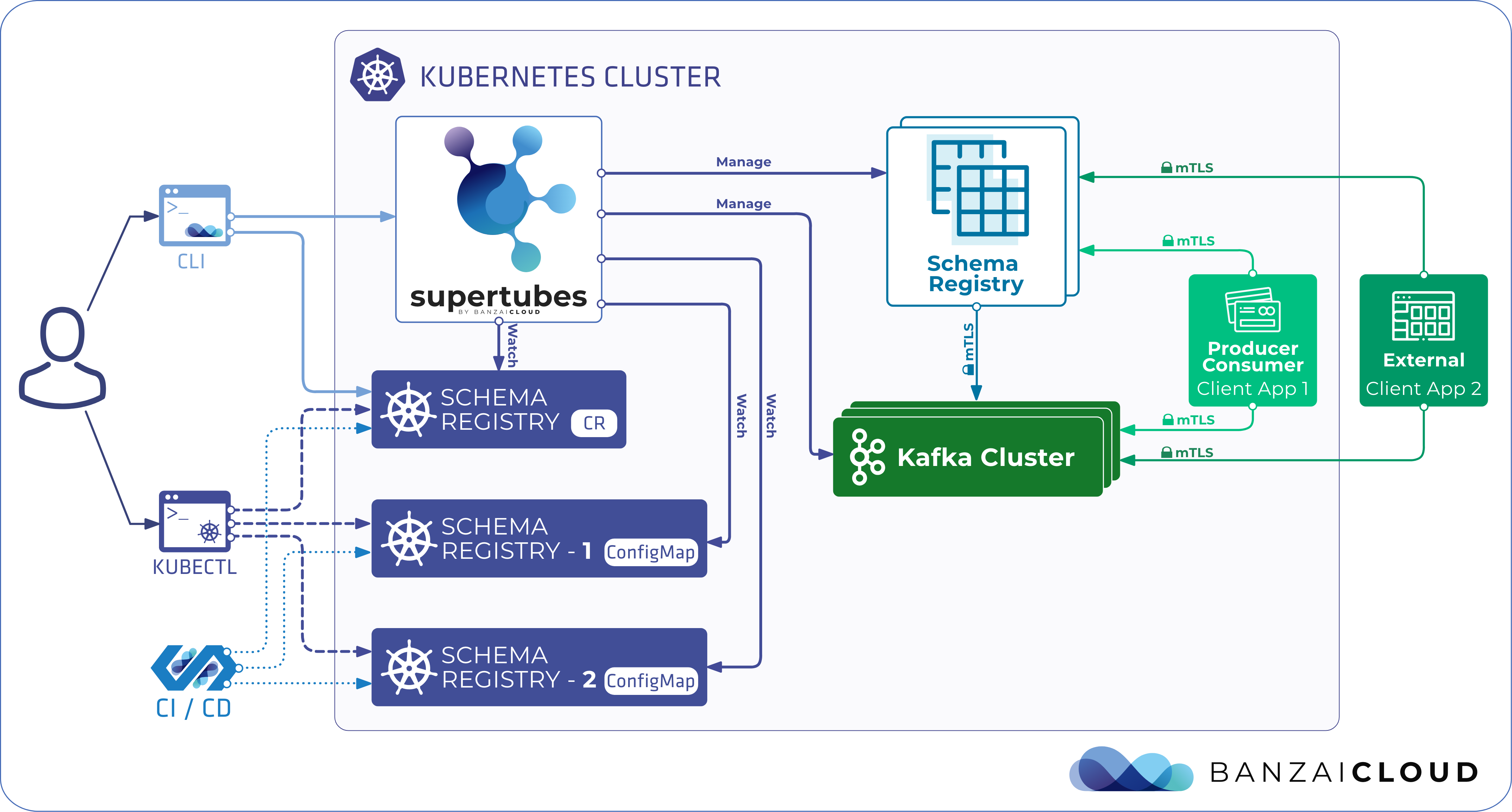

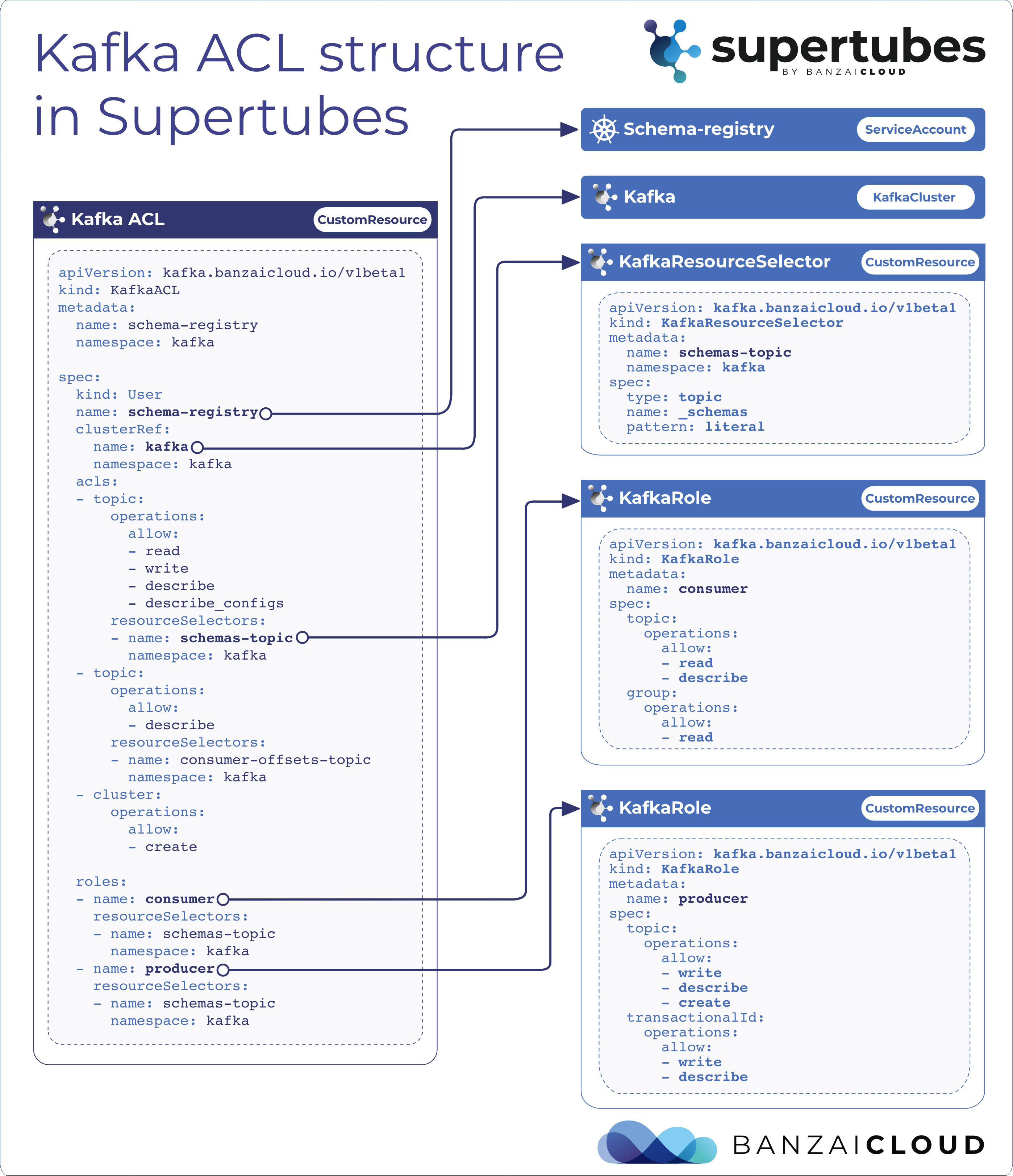

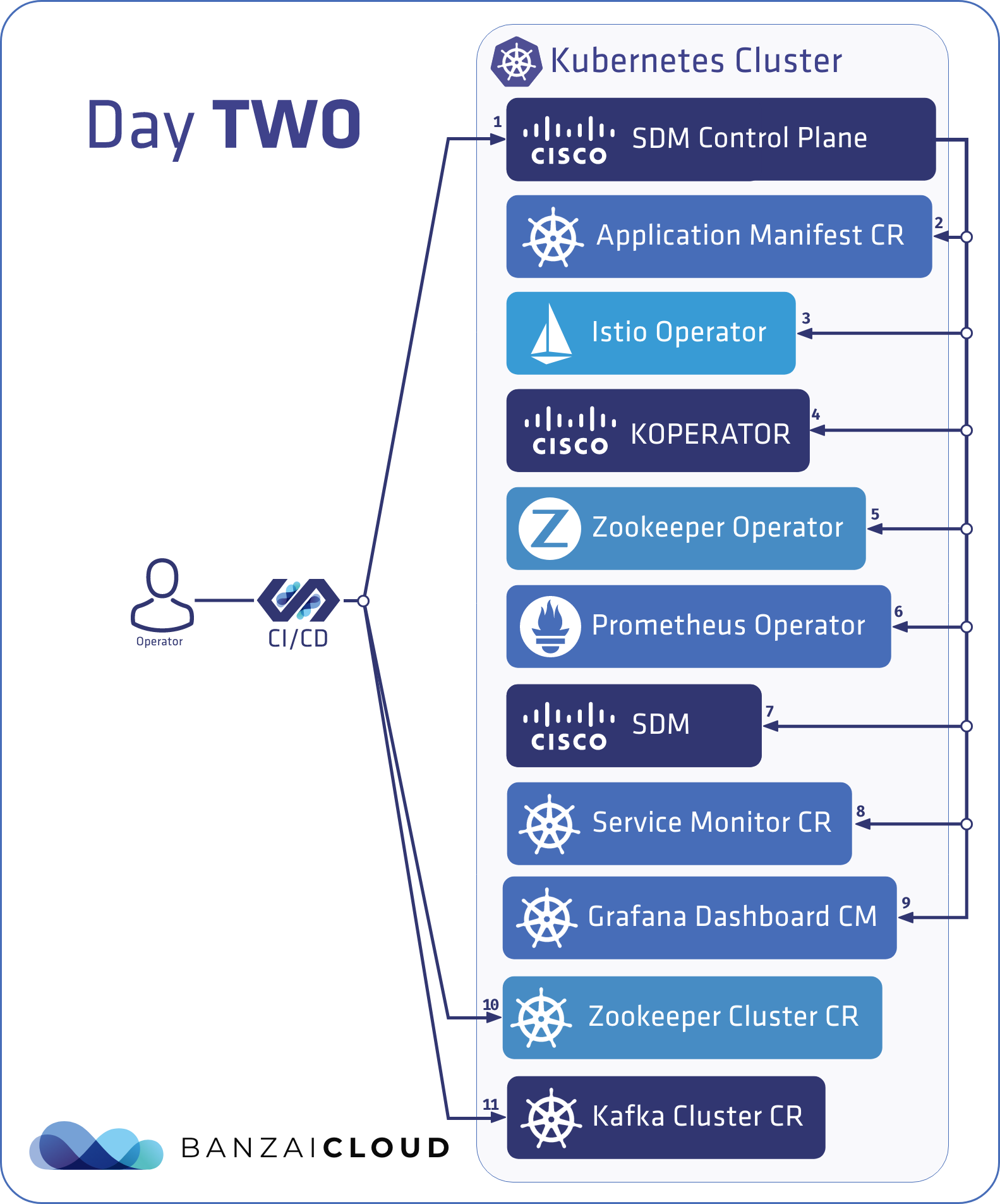

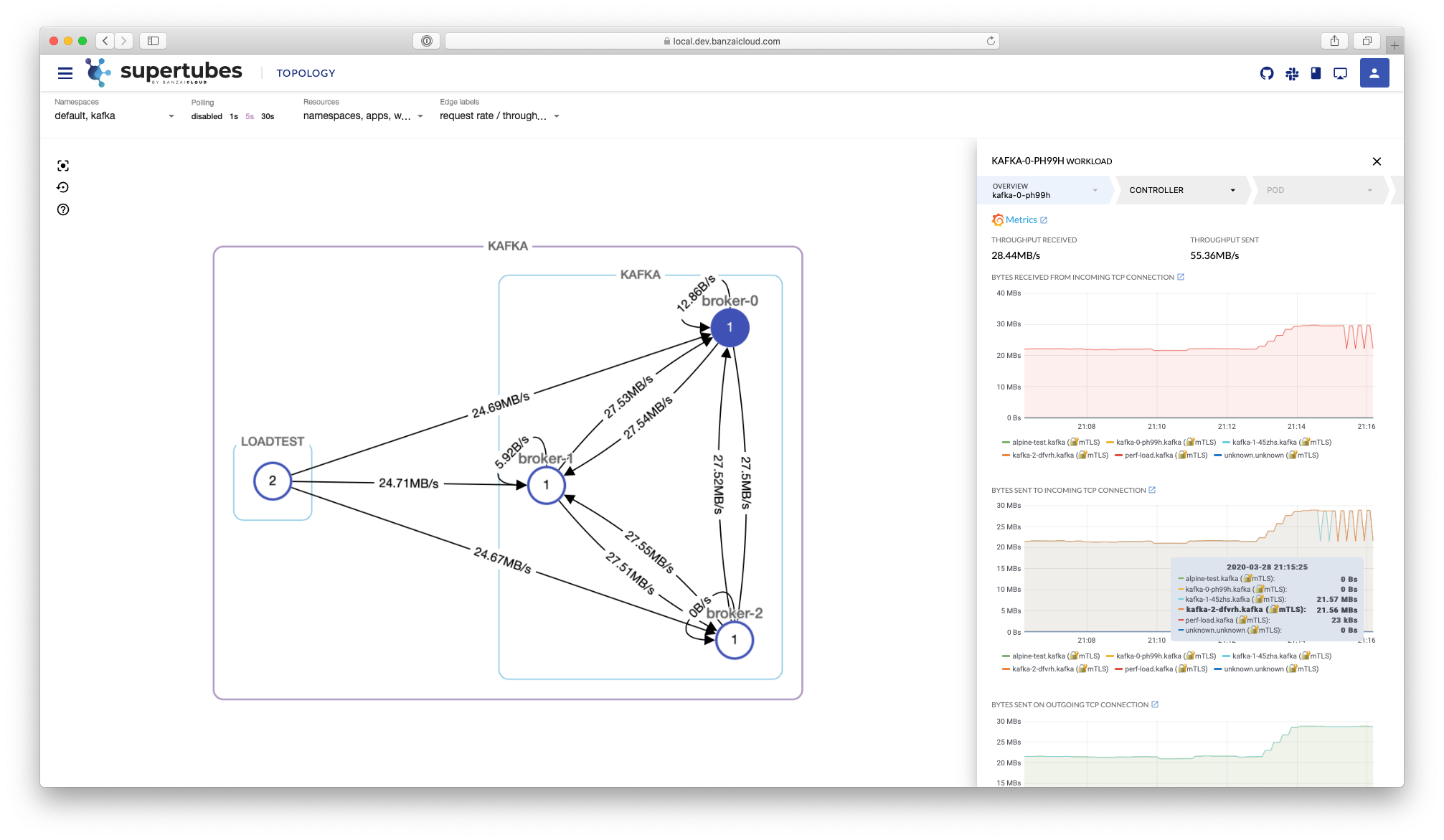

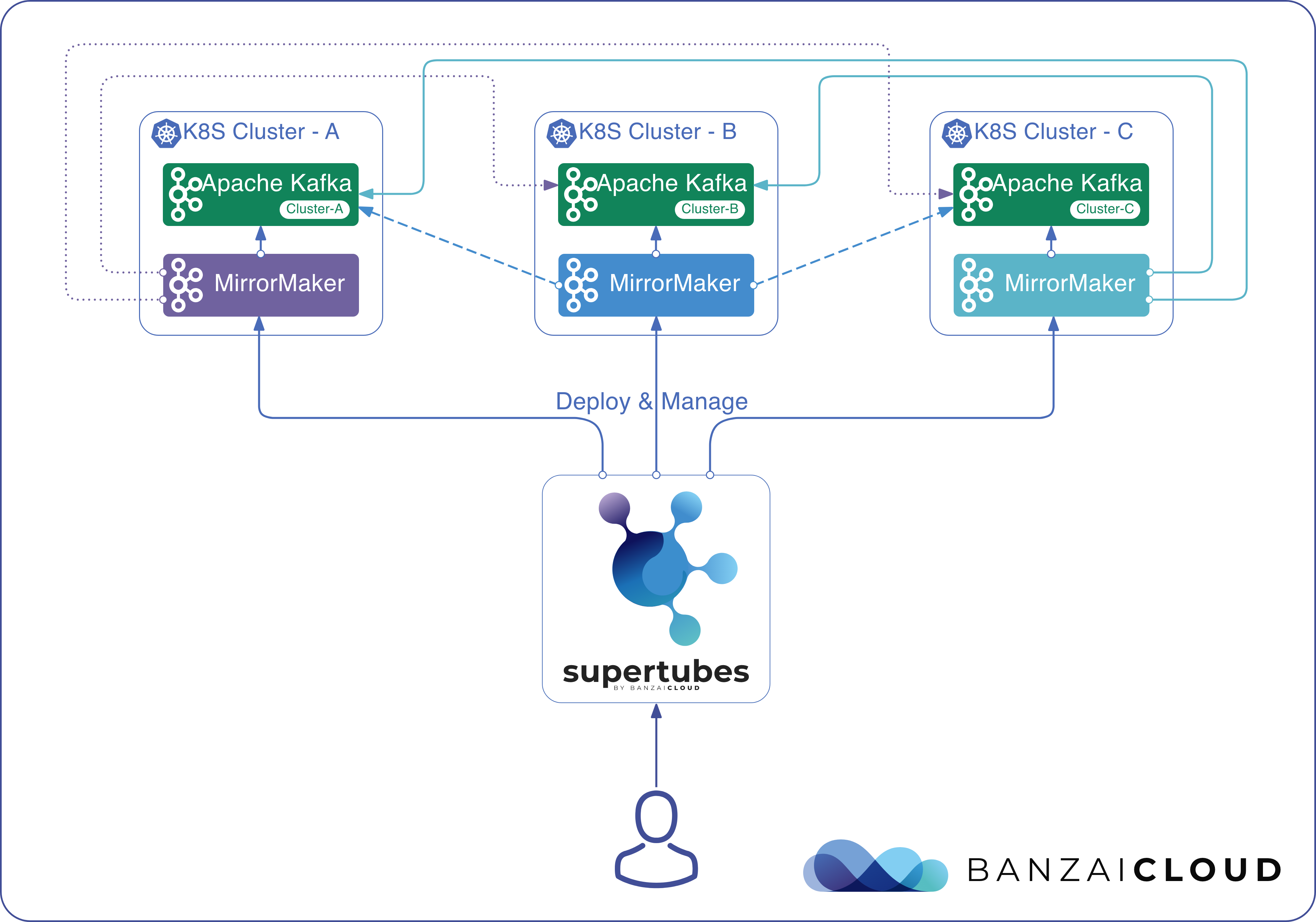

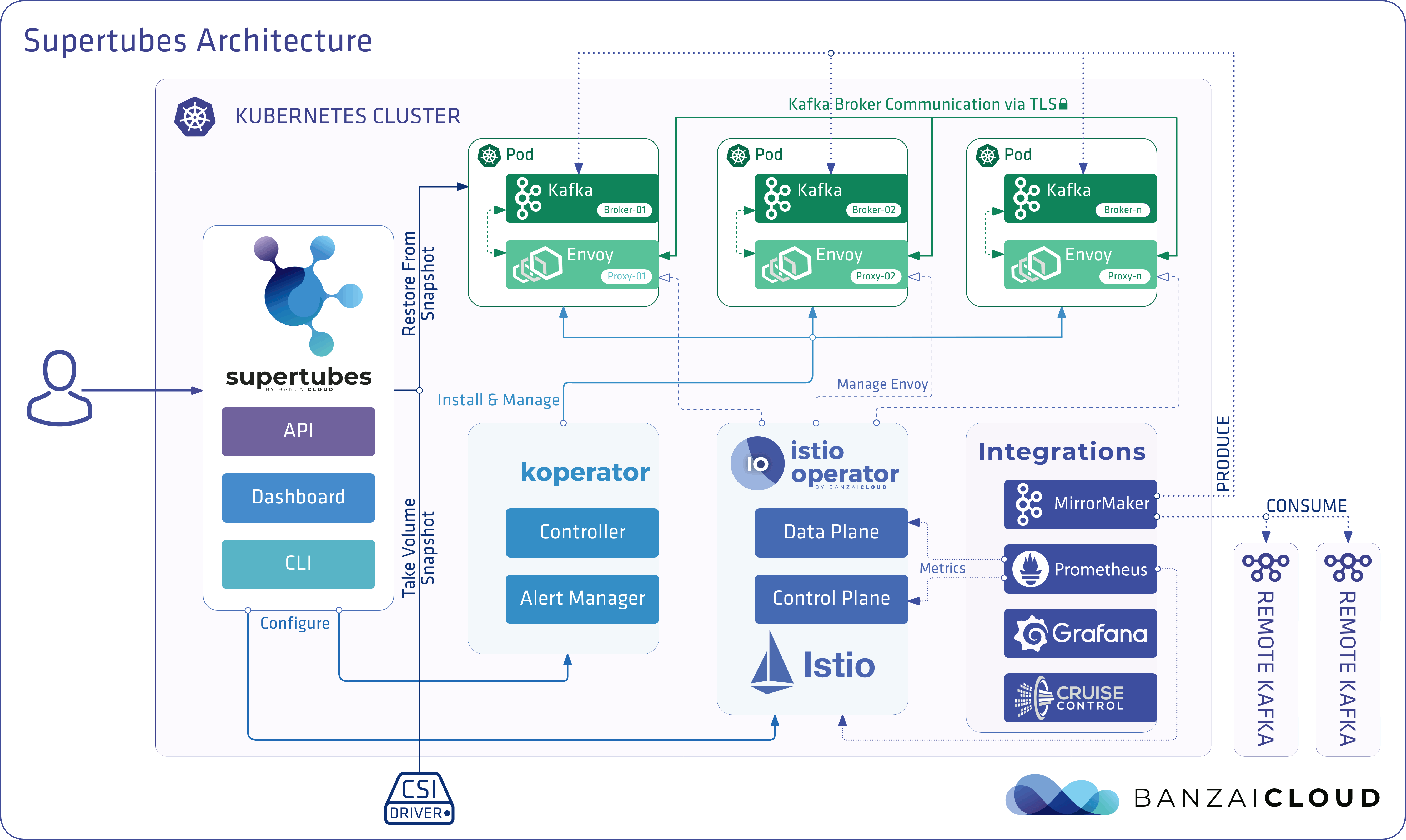

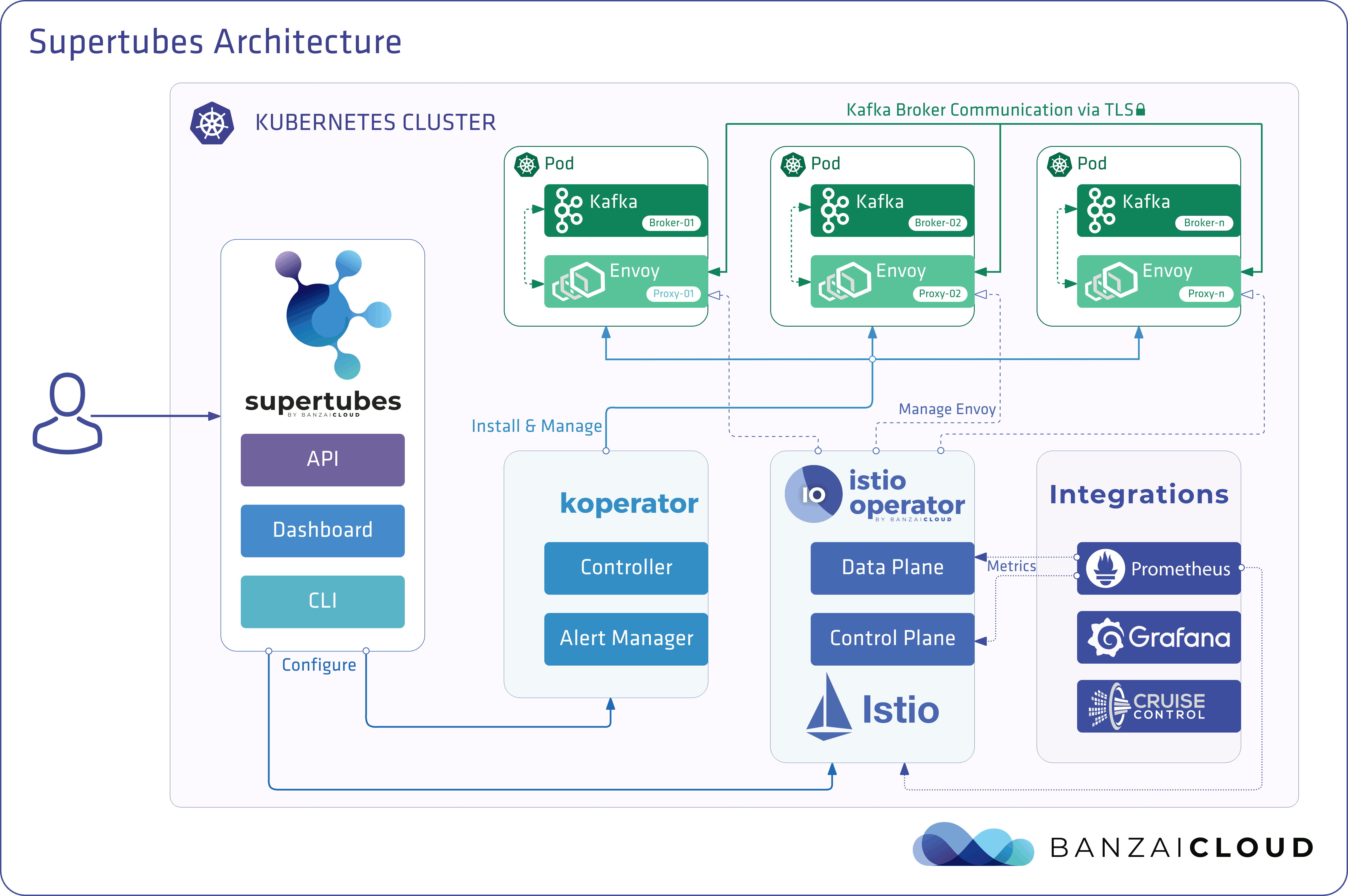

Today, we’re happy to announce the 1.0 release of Supertubes, Banzai Cloud’s tool for setting up and operating production-ready Kafka clusters on Kubernetes by leveraging a cloud-native technology stack.

If you are a frequent reader of our blog, or if you’ve been using the open source Koperator, you might already be familiar with Supertubes, our product that delivers Apache Kafka as a service on Kubernetes.

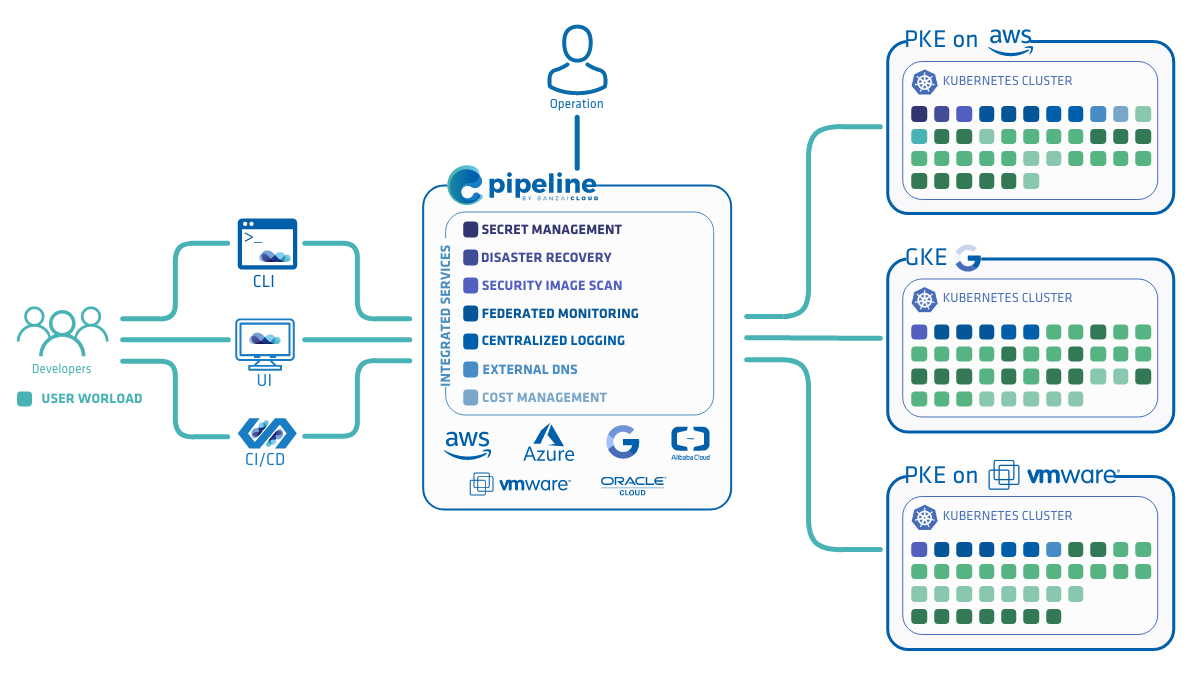

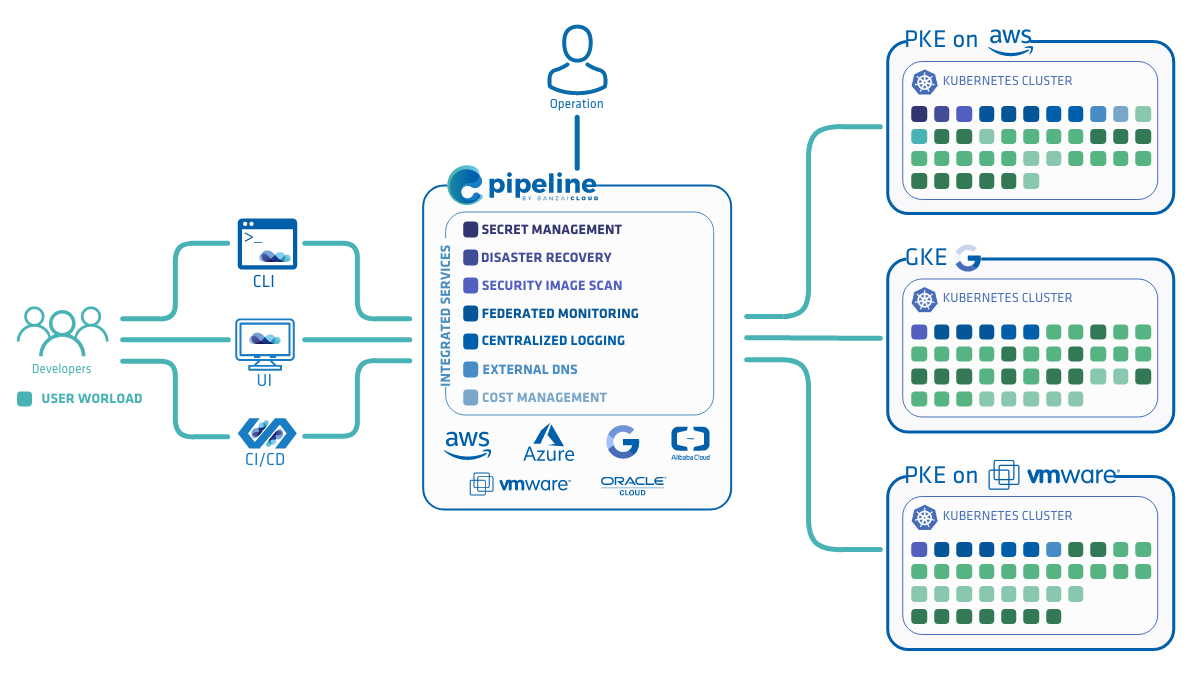

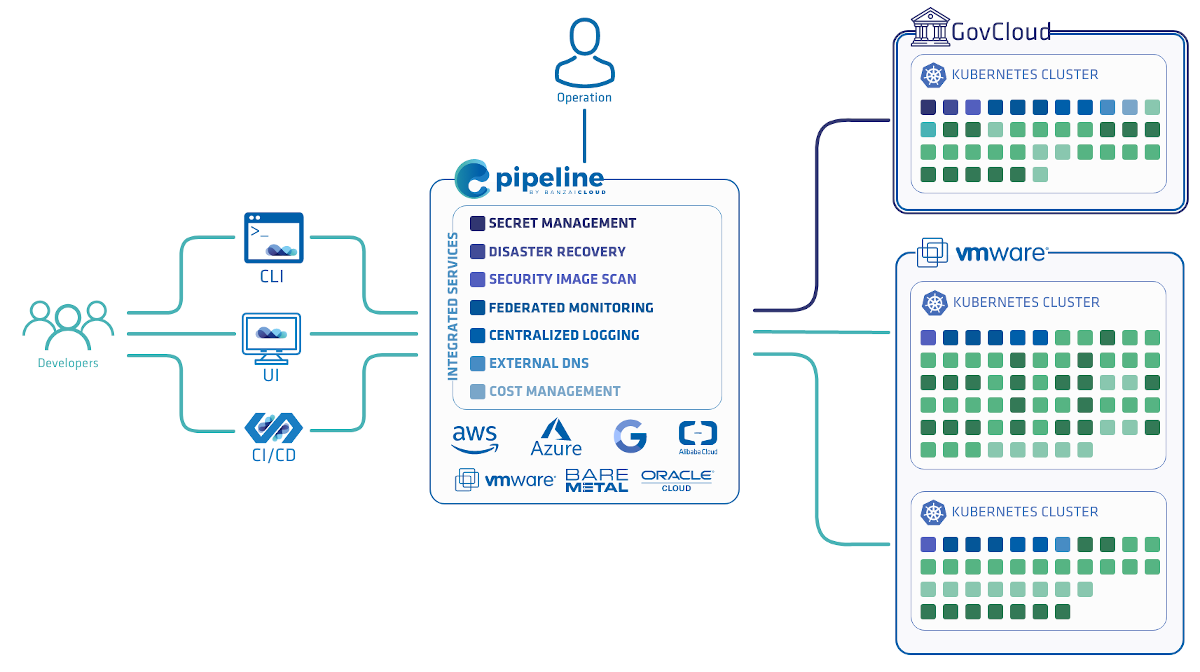

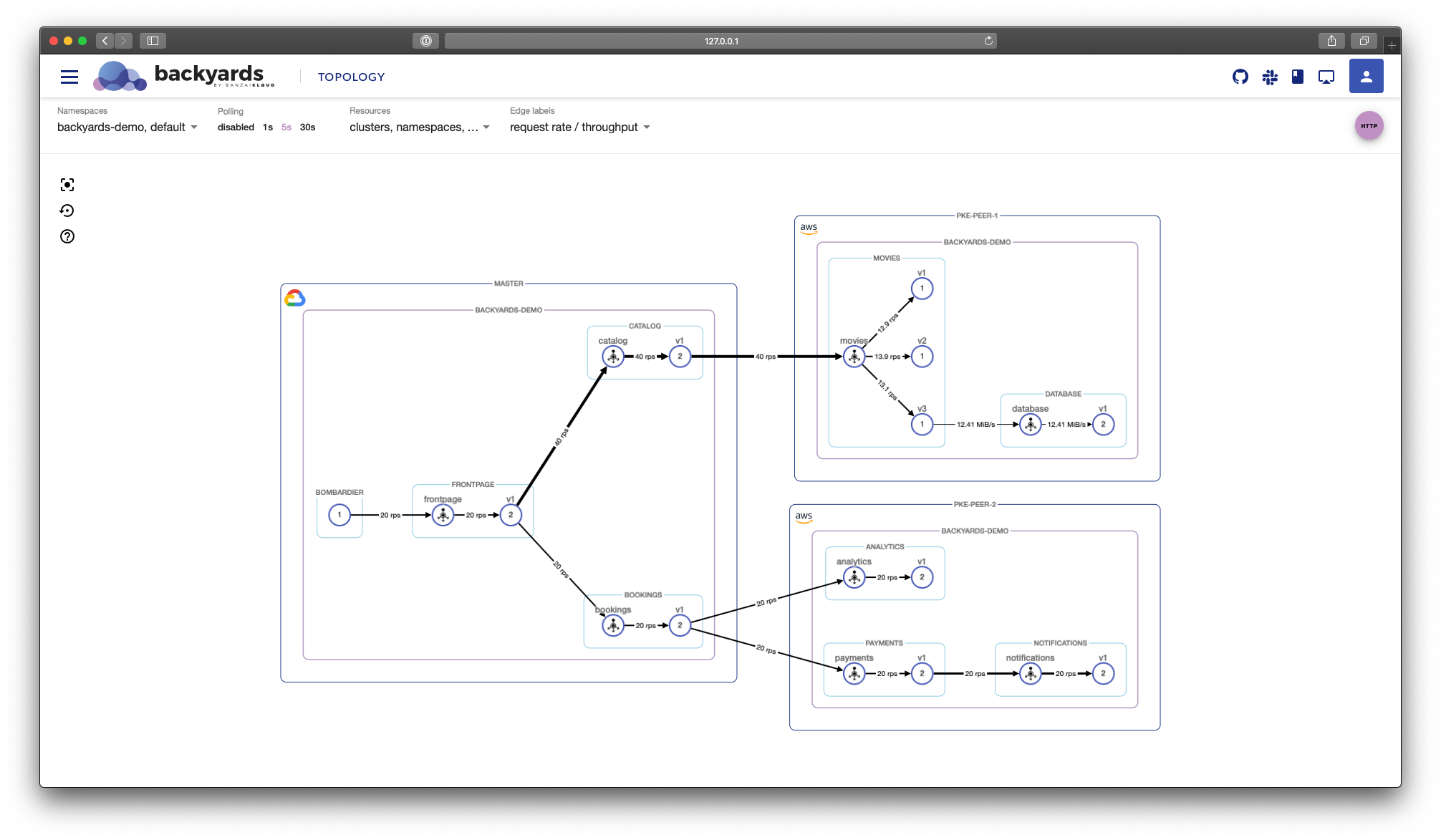

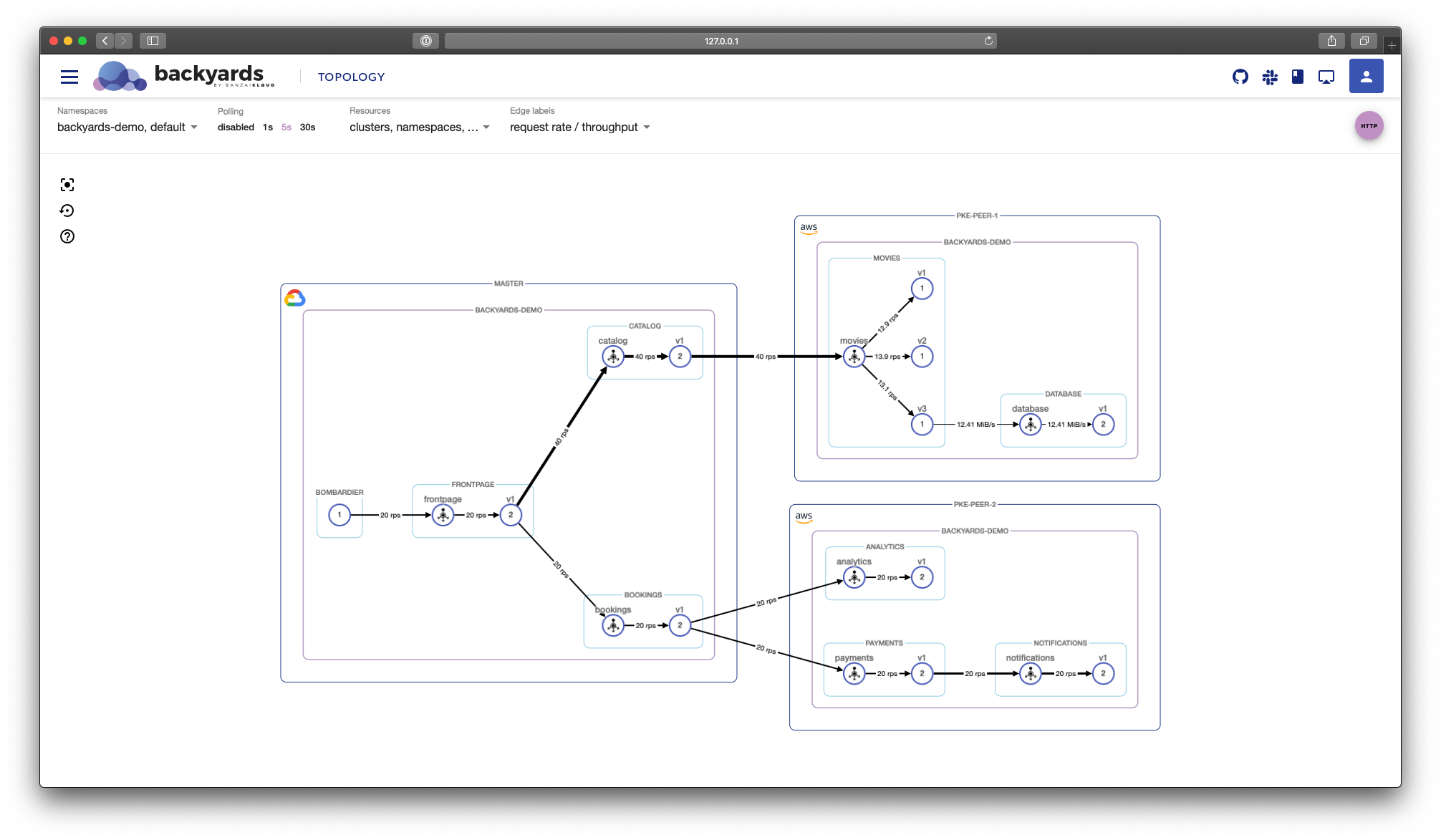

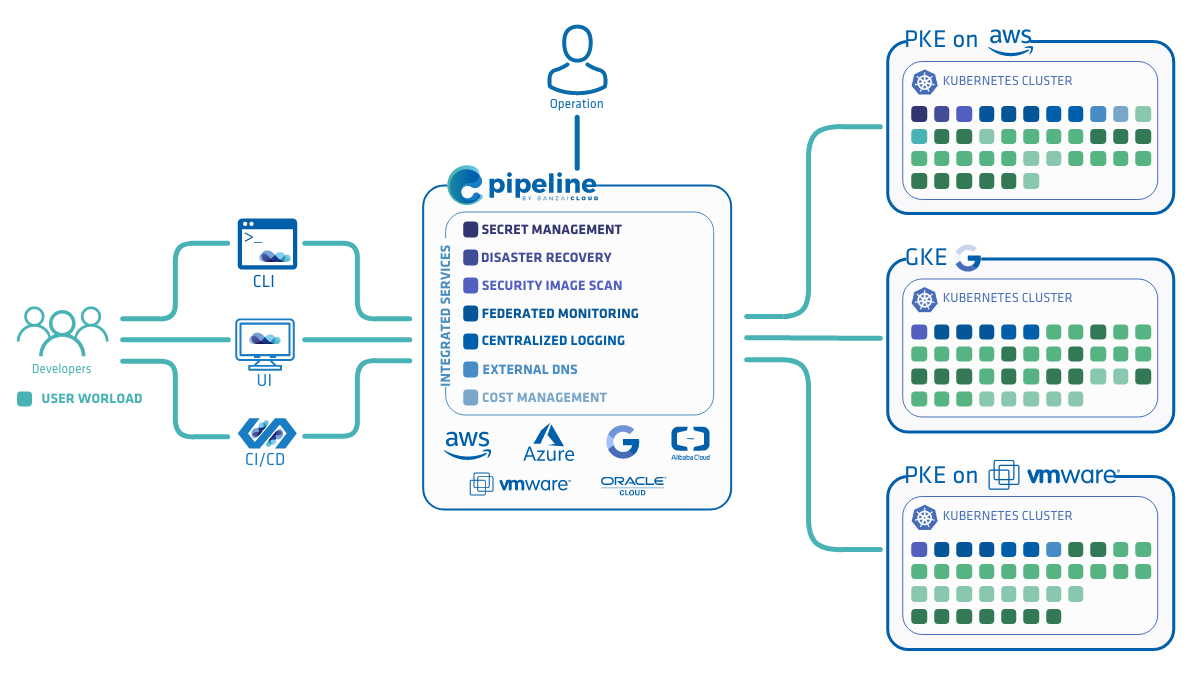

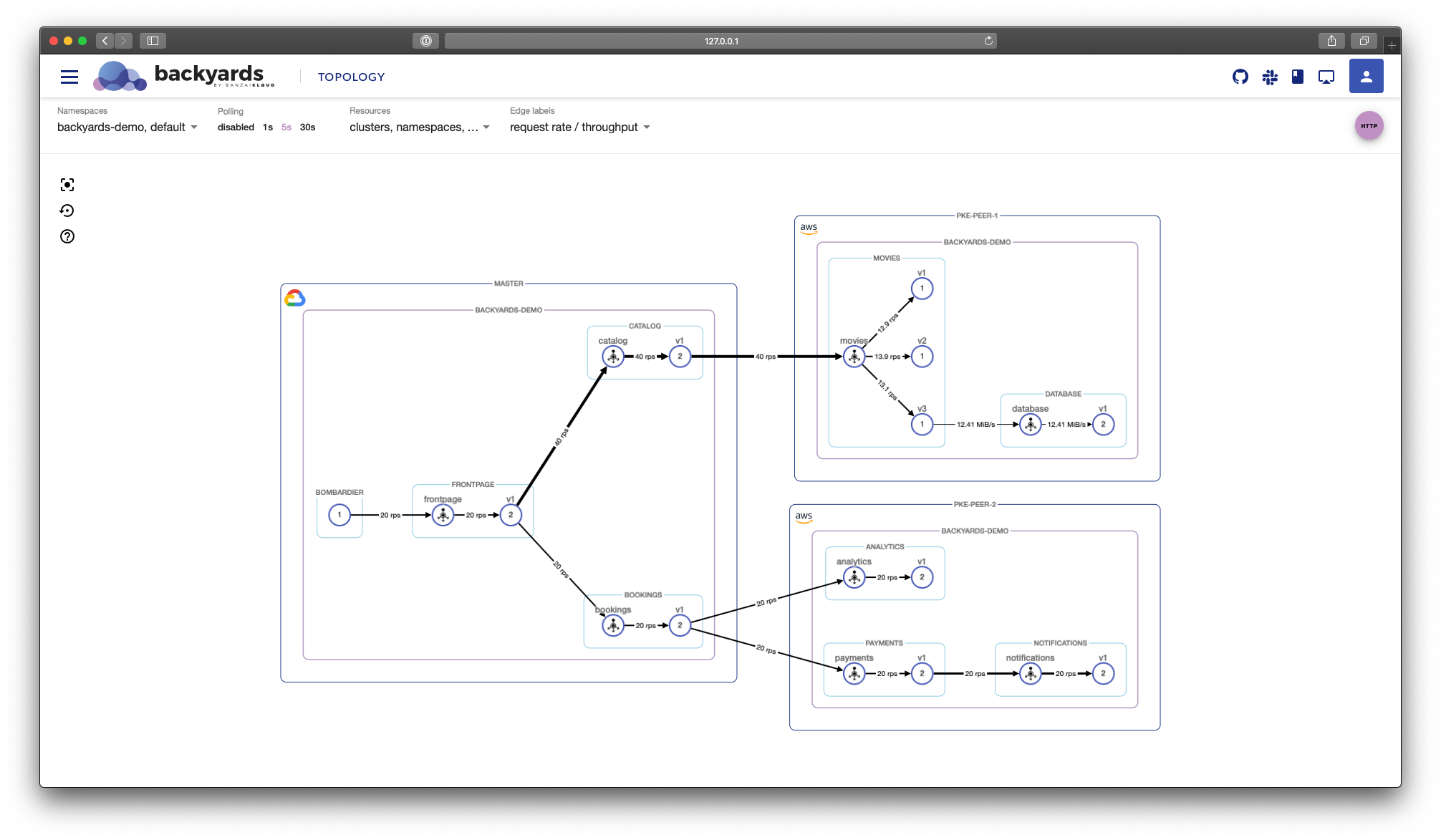

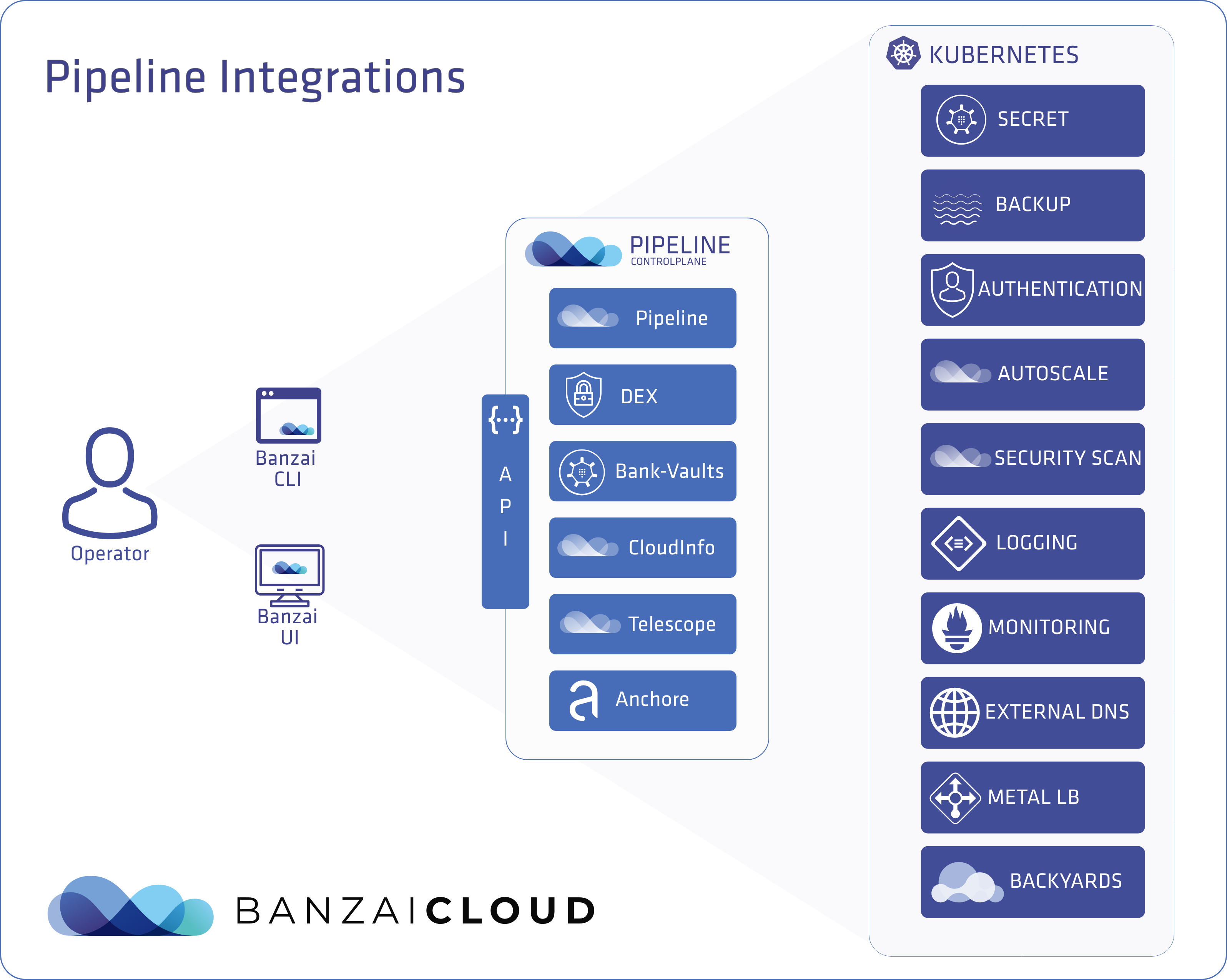

A few weeks ago we discussed how we integrated Kubernetes federation v2 into Pipeline, and took a deep dive into how it works. This is the next post in our multi-cloud/multi-cluster federation series, in which we’ll dig into some real-world use cases involving one of Kubefed’s most interesting features: Replica Scheduling Preference.