Istio 1.7 has just been released, and it focuses mostly on improving the operational experience of an Istio service mesh. In this post we’ll review what’s new in Istio 1.7 and highlight a few gotchas to look out for when upgrading to this new version.

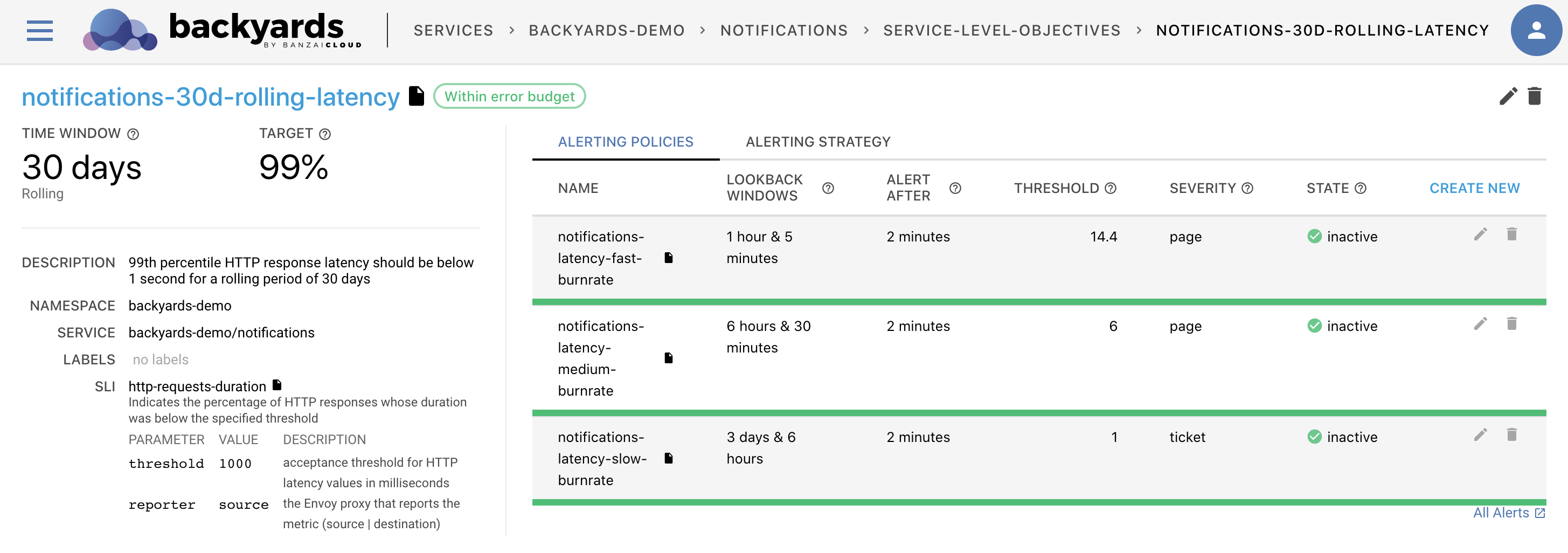

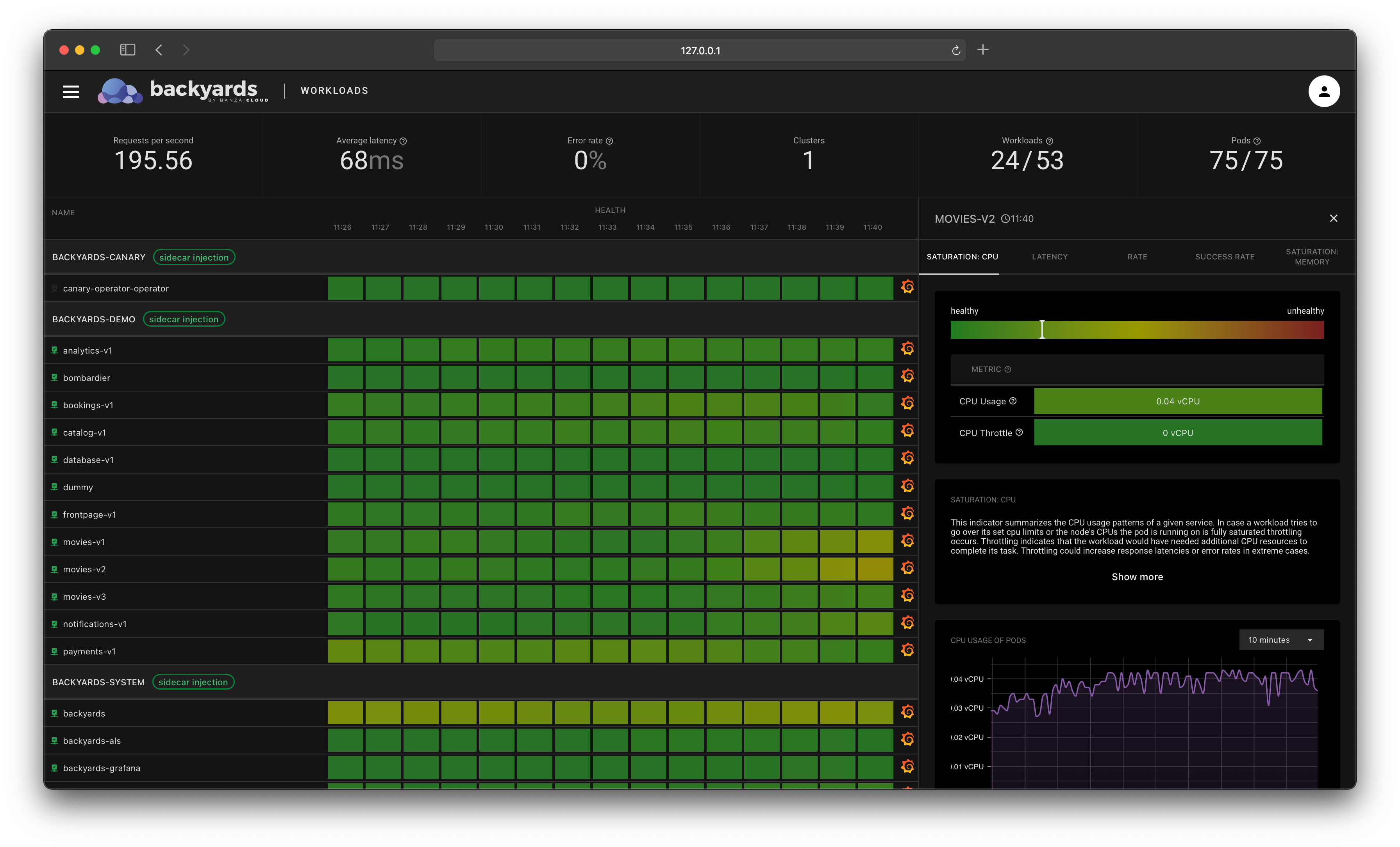

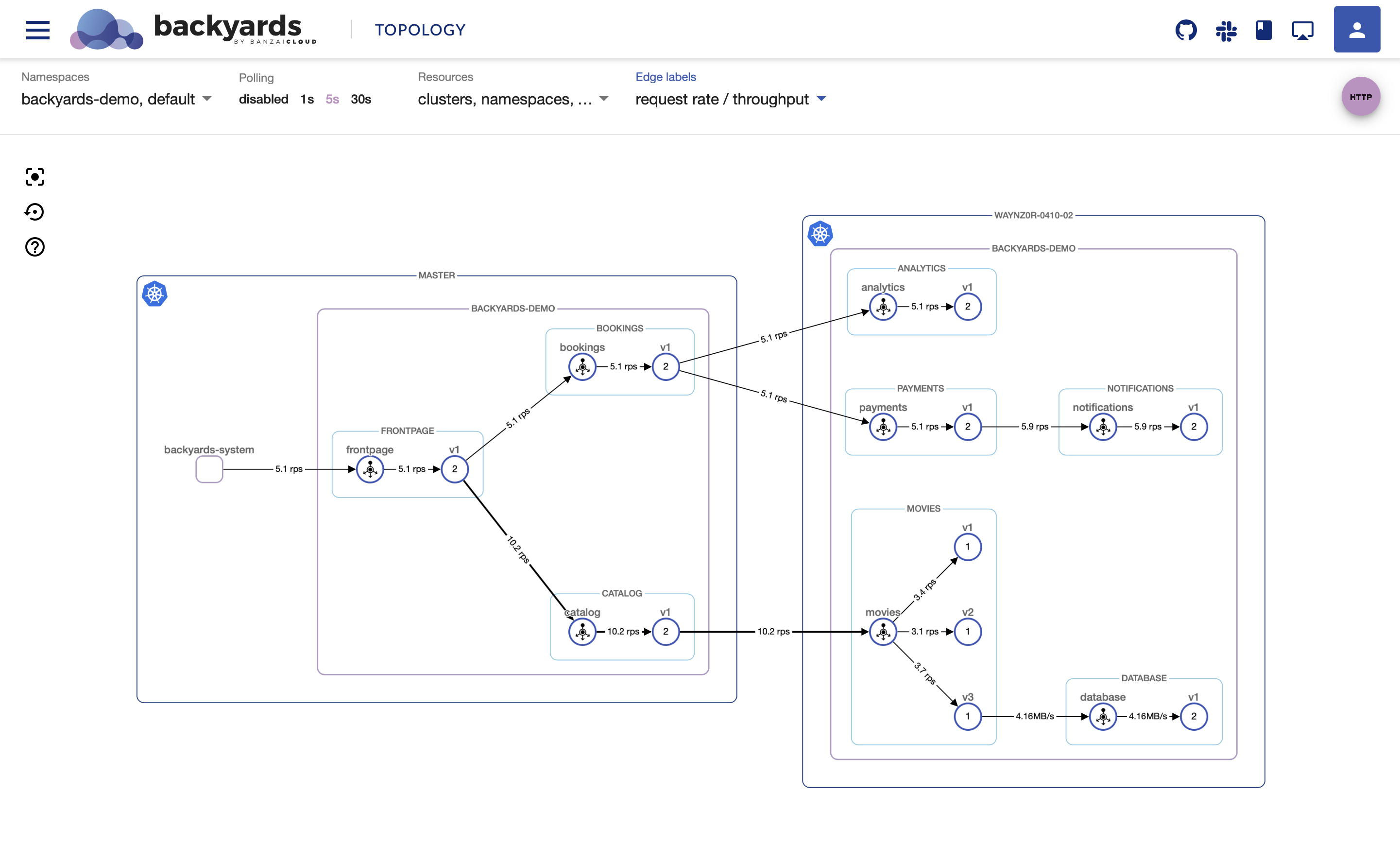

If you’re looking for a production-ready Istio distribution based on the new Istio 1.7 release, with support for a safe and automated canary upgrade flow, check out the new Backyards 1.4 release.

Istio 1.7 changes

High impact changes (these affect the everyday operation of the mesh):

- Multiple control plane upgrade support enhanced

- EnvoyFilter syntax for Lua filter is changed

- Gateway deployments are run without root privileges by default

Under the radar changes (smaller changes that we believe are interesting or useful):

- Option added to only start application containers after sidecar proxy has started

- Source principal based authorization added for Istio Gateways

- SDS added for egress gateways

High impact changes

Multiple control plane upgrade support enhanced

Upgrading the Istio control plane to a newer version used to be far from straightforward. For a while now, in-place control plane upgrades have been automated to ease this pain. However, this upgrade model is not acceptable for some production use cases: it moves the whole mesh to the new control plane version in a single step, so any issue that arises can disrupt traffic across the mesh.

To solve this, Istio 1.6 introduced the multiple control plane upgrade model, in which control planes of different versions can run side by side in a cluster and applications can be moved gradually from one control plane to the other. This lets mesh operators upgrade the control plane more safely and with much more confidence, and it makes it easier to roll back to the older version if something goes wrong.

In Istio 1.7 this upgrade model became more stable, and it is now the recommended way to upgrade Istio.
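As a rough sketch of what this looks like, assuming an IstioOperator-based installation: the new control plane is installed under a revision name, and a namespace is moved to it by labeling it with istio.io/rev. The revision name 1-7-0, the resource name istio-1-7-0 and the namespace backyards-demo are illustrative choices, not required values.

```yaml
# Hypothetical example: a second, revisioned control plane next to the old one.
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
metadata:
  name: istio-1-7-0            # illustrative name
  namespace: istio-system
spec:
  revision: 1-7-0              # no dots: the revision ends up in resource names
---
# Move a namespace to the new control plane: pods (re)started in this
# namespace get their sidecars from the 1-7-0 revision.
apiVersion: v1
kind: Namespace
metadata:
  name: backyards-demo
  labels:
    istio.io/rev: 1-7-0        # remove istio-injection=enabled, which takes precedence
```

Existing pods keep their old sidecars until they are restarted, which is what allows workloads to be moved over one at a time, and moved back if needed.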

In our open-source Banzai Cloud Istio operator we’ve been working on this feature as well. You can read more on Istio control plane canary upgrades in this blog post.

EnvoyFilter syntax for Lua filter is changed

EnvoyFilters let you customize the Envoy configuration that istiod generates.

Among other things, an EnvoyFilter can add a Lua filter, for example to manipulate the headers of requests and responses.

The syntax of the Lua filter config changed in Istio 1.7.

If you run Istio 1.7 with an EnvoyFilter that uses the old Lua filter syntax and does not specify a proxy version, your istio-proxy containers won't start!

The old config looks like this:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: ef-1.6
  namespace: istio-system
spec:
  configPatches:
  - applyTo: HTTP_FILTER
    match:
      context: ANY
      listener:
        filterChain:
          filter:
            name: envoy.http_connection_manager
      # Only apply this patch to 1.6 proxies
      proxy:
        proxyVersion: ^1\.6.*
    patch:
      operation: INSERT_BEFORE
      value:
        name: envoy.lua
        # Pre-1.7 syntax: untyped "config" field
        config:
          inlineCode: |
            function envoy_on_request(request_handle)
              request_handle:headers():add("foo", "bar")
            end
```

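The new config should look like this. Notice that the config field is replaced by typed_config, which carries an explicit '@type', and that the filter is now named envoy.filters.http.lua instead of envoy.lua: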

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: ef-1.7
  namespace: istio-system
spec:
  configPatches:
  - applyTo: HTTP_FILTER
    match:
      context: ANY
      listener:
        filterChain:
          filter:
            name: envoy.http_connection_manager
      # Only apply this patch to 1.7 proxies
      proxy:
        proxyVersion: ^1\.7.*
    patch:
      operation: INSERT_BEFORE
      value:
        name: envoy.filters.http.lua
        # 1.7 syntax: typed_config with an explicit proto type
        typed_config:
          '@type': type.googleapis.com/envoy.extensions.filters.http.lua.v3.Lua
          inlineCode: |
            function envoy_on_request(request_handle)
              request_handle:headers():add("foo", "bar")
            end
```

It is a good idea to set the proxy.proxyVersion field so that an EnvoyFilter is applied only to proxies of a specific version. This prevents such failures when a newer Istio proxy version introduces incompatible changes to the filter syntax.

Gateway deployments are run without root privileges by default

In Istio 1.6, an option was added to run the ingress and egress gateway proxy containers without root privileges, for security reasons. With this addition, it became possible to run the Istio control plane components without root privileges and to enforce this with restrictive Pod Security Policies.

This feature was not enabled by default in 1.6, but it is enabled in Istio 1.7.

It is important to know that the gateway deployments must use unprivileged target port numbers (1024 and above). Otherwise the gateways won't be able to start, because without root privileges the containers cannot bind to privileged ports.
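A minimal sketch, assuming the ingress gateway is installed through an IstioOperator resource: the Service keeps exposing the well-known ports 80 and 443 to clients, while the container-side targetPorts are unprivileged. The port names and the 8080/8443 values are assumptions chosen for illustration.

```yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  components:
    ingressGateways:
    - name: istio-ingressgateway
      enabled: true
      k8s:
        service:
          ports:
          # Clients still connect to ports 80/443 on the Service...
          - name: http2
            port: 80
            targetPort: 8080   # ...but the non-root Envoy listens on ports >= 1024
          - name: https
            port: 443
            targetPort: 8443
```

A custom ports list like this may replace the default list rather than merge with it, so it is worth checking the rendered manifest (for example with istioctl manifest generate) to make sure no other gateway ports were dropped.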

Under the radar changes

Option added to only start application containers after sidecar proxy has started

In Istio, the sidecar proxy container runs alongside your application container in the same pod. Until the sidecar proxy is running, the application container may not be able to make network calls, which can lead to issues that are hard to debug. The usual workaround was to make the application container sleep for a few seconds before running the application logic, giving the istio-proxy container time to start up.

This is not a very sophisticated solution, and this is where the new proxy.holdApplicationUntilProxyStarts flag comes into the picture. It was introduced in Istio 1.7 as an experimental feature and is turned off by default.
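In Istio 1.7 the flag is exposed through the global proxy values (values.global.proxy.holdApplicationUntilProxyStarts), so with an IstioOperator-based install it can be switched on mesh-wide roughly as follows. This is a sketch; other installation methods set the same value differently.

```yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  values:
    global:
      proxy:
        # Experimental in 1.7 and off by default: inject the sidecar first
        # and hold the other containers until the proxy is ready.
        holdApplicationUntilProxyStarts: true
```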

An even better solution will be available once containers can be marked as sidecars and Kubernetes manages their lifecycle natively. You can follow the GitHub issue on this feature here.

If proxy.holdApplicationUntilProxyStarts is set to true, the sidecar injector injects the sidecar in front of all other containers and adds a post-start lifecycle hook to the sidecar container.

The hook blocks the start of the other containers until the proxy is fully running.
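Roughly, the injected pod spec then looks like the simplified sketch below. It is an illustration, not the exact injector output: the container names and images come from the example pod later in this post, and the hook command pilot-agent wait is our reading of the 1.7 injection template, which is worth checking against the template in your installation.

```yaml
# Simplified sketch of an injected pod spec with holdApplicationUntilProxyStarts=true
spec:
  containers:
  - name: istio-proxy                # injected first, so the kubelet starts it first
    image: istio/proxyv2:1.7.0
    lifecycle:
      postStart:
        exec:
          # The kubelet does not start the next container until this
          # post-start hook returns, i.e. until the proxy is ready.
          command: ["pilot-agent", "wait"]
  - name: analytics                  # the application container
    image: banzaicloud/allspark:0.1.2
```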

To learn more, you can read this blog post from the author of this feature.

Let’s see how a pod starts when this feature is turned off:

```
Events:
  Type    Reason     Age   From                                                    Message
  ----    ------     ----  ----                                                    -------
  Normal  Scheduled  26s   default-scheduler                                       Successfully assigned backyards-demo/analytics-v1-85454fbdd4-s2cm9 to ip-192-168-11-248.eu-north-1.compute.internal
  Normal  Pulling    25s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Pulling image "docker.io/istio/proxyv2:1.7.0"
  Normal  Pulled     23s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Successfully pulled image "docker.io/istio/proxyv2:1.7.0"
  Normal  Created    23s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Created container istio-init
  Normal  Started    23s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Started container istio-init
  Normal  Pulling    21s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Pulling image "banzaicloud/allspark:0.1.2"
  Normal  Pulled     13s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Successfully pulled image "banzaicloud/allspark:0.1.2"
  Normal  Created    13s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Created container analytics
  Normal  Started    13s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Started container analytics
  Normal  Pulling    13s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Pulling image "istio/proxyv2:1.7.0"
  Normal  Pulled     5s    kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Successfully pulled image "istio/proxyv2:1.7.0"
  Normal  Created    5s    kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Created container istio-proxy
  Normal  Started    5s    kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Started container istio-proxy
```

Notice that the application container (banzaicloud/allspark:0.1.2) was started before the sidecar proxy container (istio/proxyv2:1.7.0).

Now, let’s set proxy.holdApplicationUntilProxyStarts to true and restart the pod:

```
Events:
  Type    Reason     Age   From                                                    Message
  ----    ------     ----  ----                                                    -------
  Normal  Scheduled  48s   default-scheduler                                       Successfully assigned backyards-demo/analytics-v1-85454fbdd4-w9ptx to ip-192-168-11-248.eu-north-1.compute.internal
  Normal  Pulling    46s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Pulling image "docker.io/istio/proxyv2:1.7.0"
  Normal  Pulled     36s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Successfully pulled image "docker.io/istio/proxyv2:1.7.0"
  Normal  Created    36s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Created container istio-init
  Normal  Started    36s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Started container istio-init
  Normal  Pulling    34s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Pulling image "istio/proxyv2:1.7.0"
  Normal  Pulled     26s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Successfully pulled image "istio/proxyv2:1.7.0"
  Normal  Created    26s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Created container istio-proxy
  Normal  Started    26s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Started container istio-proxy
  Normal  Pulling    25s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Pulling image "banzaicloud/allspark:0.1.2"
  Normal  Pulled     16s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Successfully pulled image "banzaicloud/allspark:0.1.2"
  Normal  Created    16s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Created container analytics
  Normal  Started    16s   kubelet, ip-192-168-11-248.eu-north-1.compute.internal  Started container analytics
```

This time the sidecar proxy container was started before the application container, as expected.

One thing to consider when using this feature is that commands which default to the first container of a pod will behave differently when no container is specified explicitly.

The most prominent example is kubectl exec, which will now default to the istio-proxy container. To target the application container, pass its name explicitly with the -c flag.

Keep this in mind if you want to use this feature and have kubectl exec in your scripts.

Even though this feature makes sure that the sidecar proxy container starts before the application container, it does not guarantee that the sidecar container is stopped after the app container. This is because the containers are started sequentially by the kubelet, but they are stopped in parallel.

Source principal based authorization added for Istio Gateways

In Istio, authorization policies are used for access control.

Using the principals field of the AuthorizationPolicy resource, you can control which services can reach other services, based on their service accounts.

Until now, this field worked only for sidecar proxies, not for gateways.

In Istio 1.7, principal-based authorization works for gateways as well.

This can be used, for example, to control which source workloads can access which external services from a cluster.

We previously recommended a different solution for this use case based on the Sidecar resource, but with this change, authorization policies can be used to control egress traffic as well.
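A minimal sketch of such a policy, assuming an egress gateway labeled app: istio-egressgateway in the istio-system namespace, and an application running under the default service account of the backyards-demo namespace. The policy name and the service account are hypothetical.

```yaml
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: egress-allow-backyards-demo   # hypothetical name
  namespace: istio-system
spec:
  # Select the egress gateway proxies rather than sidecars.
  selector:
    matchLabels:
      app: istio-egressgateway
  action: ALLOW
  rules:
  - from:
    - source:
        # Only workloads running as this service account may use the gateway.
        principals: ["cluster.local/ns/backyards-demo/sa/default"]
```

Source principals are derived from the client's mutual TLS certificate, so this only works if traffic from the sidecars to the egress gateway uses Istio mutual TLS. Also note that once an ALLOW policy selects the gateway, requests that match none of its rules are denied.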

SDS added for egress gateways

Secret Discovery Service (SDS) is an Envoy feature that Istio uses to load certificates into the proxies dynamically, without requiring a pod restart. Until now it was implemented only for ingress gateways; in Istio 1.7, SDS works for egress gateways as well.
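As an illustrative sketch of how this is typically used, assuming TLS origination at the egress gateway: a DestinationRule refers to a Kubernetes secret by credentialName, and the egress gateway receives the client certificate over SDS. The host external.example.com, the rule name and the secret name client-credential are placeholders, and the secret is assumed to live in the egress gateway's namespace.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: originate-mtls-for-external   # hypothetical name
  namespace: istio-system
spec:
  host: external.example.com          # placeholder external host
  trafficPolicy:
    portLevelSettings:
    - port:
        number: 443
      tls:
        mode: MUTUAL
        # Secret (in the egress gateway's namespace) holding the client key,
        # certificate and CA; it is delivered to the gateway via SDS, so
        # rotating it does not require restarting the gateway pod.
        credentialName: client-credential
        sni: external.example.com
```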

Takeaway

Istio 1.7 takes another step toward a simpler operational experience, but there are a few gotchas to pay attention to when upgrading.

If you’d like to kickstart your Istio experience, try out Backyards (now Cisco Service Mesh Manager), our Istio distribution. Backyards (now Cisco Service Mesh Manager) operationalizes the service mesh to bring deep observability, convenient management, and policy-based security to modern container-based applications.

Check out Backyards in action on your own clusters!

Want to know more? Get in touch with us, or delve into the details of the latest release.

Or just take a look at some of the Istio features that Backyards automates and simplifies for you, and which we’ve already blogged about.