Last week we released our open source Istio operator, designed to help ease the sometimes difficult task of managing Istio. One of the main features of the operator is its ability to manage a single mesh multi-cluster Istio.

Multi-cluster scenarios

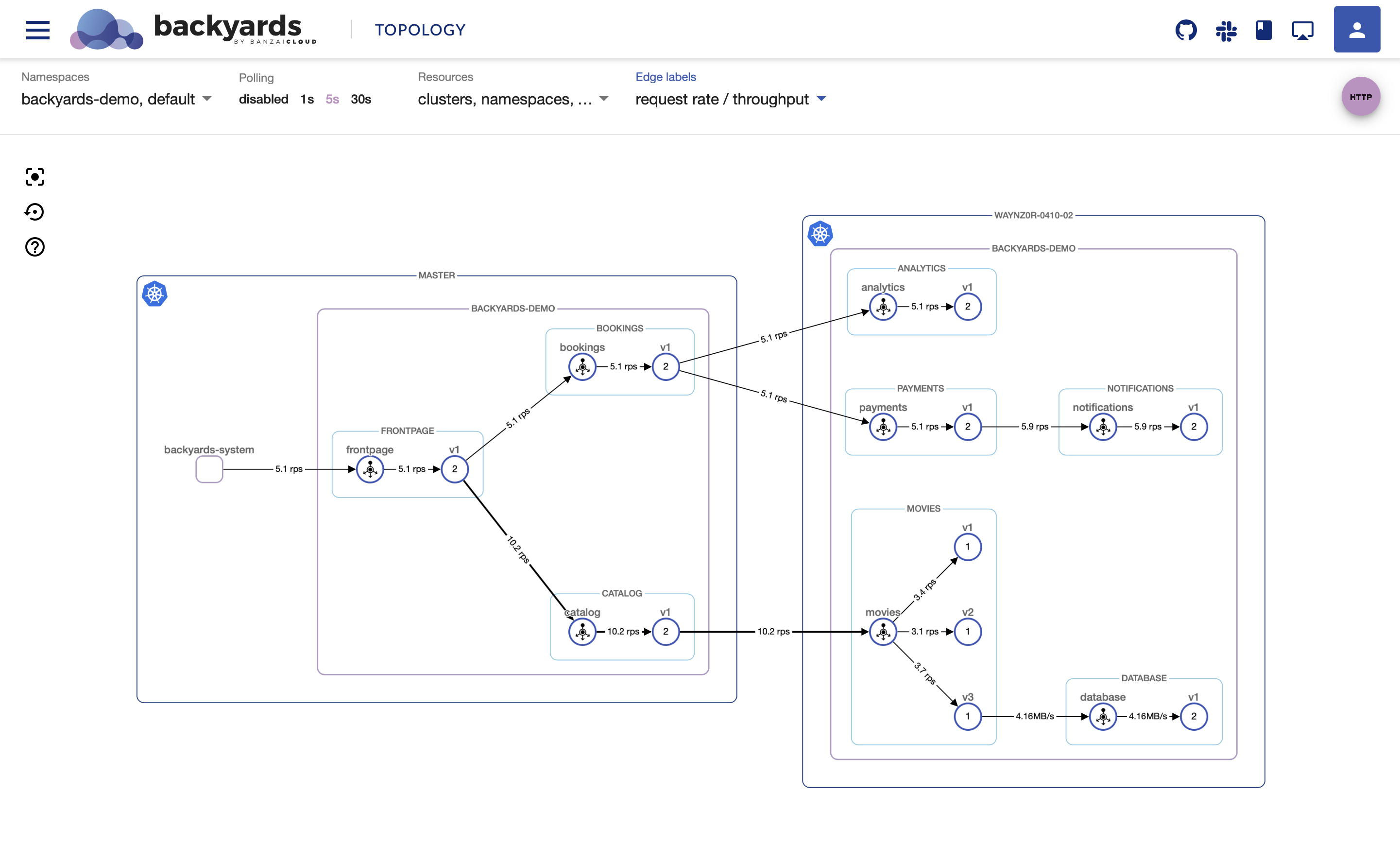

Typical multi-cluster-based patterns are single mesh - combining multiple clusters into one unit managed by one Istio control plane - and mesh federation, wherein multiple clusters act as individual management domains and the service exposure between those domains is done selectively.

Single mesh multi-cluster

The single mesh scenario is most suitable in those use cases where clusters are configured together, sharing resources and typically being treated as one infrastructural component within an organization.

Because the first release of the Istio operator supports Istio 1.0, our single mesh multi-cluster implementation is based on the features that version provides. It allows multiple clusters to be joined into a mesh, but all those clusters must be on a single shared network, all pod CIDRs must be unique and routable to each other in every cluster, and all API servers must also be routable to each other.

These requirements will be relaxed in Istio 1.1.
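The unique pod CIDR requirement can be checked before any cluster is joined. The following is a minimal sketch in plain bash and is not part of the operator; the function names are hypothetical, and the /14 ranges in the usage note are assumed values picked to match the pod IPs that appear later in this post.

```shell
#!/usr/bin/env bash
# Sketch only: helper names are hypothetical, not operator tooling.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print the first and last address (as integers) covered by a CIDR block.
cidr_range() {
  local ip=${1%/*} prefix=${1#*/}
  local base mask start
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  start=$(( base & mask ))
  echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"
}

# Exit 0 when the two CIDR blocks share at least one address, non-zero otherwise.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}
```

For example, `cidrs_overlap 10.24.0.0/14 10.28.0.0/14` exits non-zero, so those two pod ranges could coexist in one mesh, while `cidrs_overlap 10.24.0.0/14 10.25.0.0/16` succeeds and signals a conflict.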

A single mesh multi-cluster is formed by enabling any number of Kubernetes control planes running a remote Istio configuration to connect to a single Istio control plane. Once one or more Kubernetes clusters are connected to the Istio control plane in that way, Envoy communicates with the Istio control plane in order to form a mesh network across those clusters.

See the roadmap section towards the end of this post to learn about single mesh multi-cluster with fewer networking constraints and about mesh federation across clusters.

How the Istio operator works

The Istio control plane must get information about service and pod states from remote clusters. To achieve this, the kubeconfig of each remote cluster must be added to the cluster where the control plane is running in the form of a k8s secret.

The Istio operator uses a CRD called RemoteIstio to store the desired state of a given remote Istio configuration. Adding a new remote cluster to the mesh is as simple as defining a RemoteIstio resource with the same name as the secret which contains the kubeconfig for the cluster.
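Since the secret and the RemoteIstio resource must share a name, it can help to confirm that the secret exists before creating the resource. A small sketch, assuming `kubectl` already points at the central cluster; `preflight_remoteistio` is a hypothetical helper, not an operator command.

```shell
#!/usr/bin/env bash
# Sketch only: preflight_remoteistio is a hypothetical helper.

# Check that a secret with the planned RemoteIstio name exists.
# $1: name shared by the secret and the RemoteIstio resource
# $2: namespace (defaults to istio-system, as used throughout this post)
preflight_remoteistio() {
  local name=$1 namespace=${2:-istio-system}
  if kubectl get secret "$name" -n "$namespace" >/dev/null 2>&1; then
    echo "secret '$name' found in namespace '$namespace'"
  else
    echo "secret '$name' not found in namespace '$namespace'" >&2
    return 1
  fi
}
```

Calling `preflight_remoteistio remoteistio-sample` before applying the resource catches a name mismatch early instead of leaving it to surface during reconciliation.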

The operator handles deploying Istio components to clusters and implements a sync mechanism, which provides constant reachability to the Istio central components in remote clusters. DNS entries are automatically updated whenever a pod restarts or any other failure occurs in the Istio control plane.

Try it out

For demo purposes, let’s say there are two GKE clusters with flat networking, so their networking prerequisites are met. Let’s also suppose there exists a running Istio control plane on the central cluster, deployed by our Istio operator.

❯ gcloud container clusters list
NAME          LOCATION        MASTER_VERSION  NODE_VERSION  NUM_NODES  STATUS
k8s-central   europe-west1-b  1.11.7-gke.4    1.11.7-gke.4  1          RUNNING
k8s-remote-1  us-central1-a   1.11.7-gke.4    1.11.7-gke.4  1          RUNNING

There are some prerequisites each remote cluster must meet before it can be joined into the mesh.

Remote cluster prerequisites

Create istio-system namespace

[context:remote-cluster] ❯ kubectl create namespace istio-system

namespace/istio-system created

Create service account, cluster role and cluster role binding

[context:remote-cluster] ❯ kubectl create -f https://raw.githubusercontent.com/banzaicloud/istio-operator/master/docs/federation/example/rbac.yml

serviceaccount/istio-operator created

clusterrole.rbac.authorization.k8s.io/istio-operator created

clusterrolebinding.rbac.authorization.k8s.io/istio-operator created

Generate kubeconfig for the created service account

[context:remote-cluster] ❯ REMOTE_KUBECONFIG_FILE=$(curl -s https://raw.githubusercontent.com/banzaicloud/istio-operator/master/docs/federation/example/generate-kubeconfig.sh | bash)

Add kubeconfig for the remote cluster as a secret to the central cluster

[context:central-cluster] ❯ kubectl create secret generic remoteistio-sample --from-file ${REMOTE_KUBECONFIG_FILE} -n istio-system

Add remote cluster to the mesh

To add a remote cluster to the mesh, all you have to do is create a RemoteIstio resource on the central cluster where the operator resides.

Once you’ve done this, the operator will begin the process of reconciling your changes, deploying the necessary components to the remote cluster and adding that cluster to the mesh.

Note that the name of the secret that contains the kubeconfig must be the same as the name of the RemoteIstio resource.

[context:central-cluster] ❯ cat <<EOF | kubectl apply -n istio-system -f -
apiVersion: istio.banzaicloud.io/v1beta1
kind: RemoteIstio
metadata:
  labels:
    controller-tools.k8s.io: "1.0"
  name: remoteistio-sample
spec:
  autoInjectionNamespaces:
    - "default"
  includeIPRanges: "*"
  excludeIPRanges: ""
  enabledServices:
    - name: "istio-pilot"
      labelSelector: "istio=pilot"
    - name: "istio-policy"
      labelSelector: "istio-mixer-type=policy"
    - name: "istio-statsd"
      labelSelector: "statsd-prom-bridge"
    - name: "istio-telemetry"
      labelSelector: "istio-mixer-type=telemetry"
    - name: "zipkin"
      labelSelector: "app=jaeger"
  controlPlaneSecurityEnabled: false
  citadel:
    replicaCount: 1
  sidecarInjector:
    replicaCount: 1
EOF

The operator detects the creation of the resource and begins the reconciliation process, creating the necessary Istio remote resources.

[context:central-cluster] ❯ kubectl logs -f istio-operator-controller-manager-0 -c manager

{"msg":"remoteconfig status updated","status":"Created"}

{"msg":"remoteconfig status updated","status":"Reconciling"}

{"msg":"reconciling remote istio","clusterName":"remote-cluster"}

{"msg":"reconciling","clusterName":"remote-cluster","component":"common"}

{"msg":"reconciled","clusterName":"remote-cluster","component":"common"}

...

...

{"msg":"reconciling","clusterName":"remote-cluster","component":"sidecarinjector"}

{"msg":"reconciled","clusterName":"remote-cluster","component":"sidecarinjector"}

{"msg":"remoteconfig status updated","status":"Available"}

{"msg":"remote istio reconciled","cluster":"remote-cluster"}

[context:remote-cluster] ❯ kubectl get pods
NAME                                      READY   STATUS    RESTARTS   AGE
istio-citadel-6cb84f4c47-86jp6            1/1     Running   0          2m
istio-sidecar-injector-68fdf88c87-69pr4   1/1     Running   0          2m

The operator keeps the DNS entries of Istio services in sync by managing services without selectors and corresponding endpoints on the remote cluster that hold the pod IPs of Istio services. These endpoints are automatically updated by the operator upon any failure or pod restart.

[context:remote-cluster] ❯ kubectl get svc -n istio-system
NAME                     TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)             AGE
istio-citadel            ClusterIP   10.90.1.166    <none>        8060/TCP,9093/TCP   2m
istio-pilot              ClusterIP   None           <none>        <none>              2m
istio-policy             ClusterIP   None           <none>        <none>              2m
istio-sidecar-injector   ClusterIP   10.90.10.177   <none>        443/TCP             2m
istio-statsd             ClusterIP   None           <none>        <none>              2m
istio-telemetry          ClusterIP   None           <none>        <none>              2m
zipkin                   ClusterIP   None           <none>        <none>              2m

[context:remote-cluster] ❯ kubectl get endpoints
NAME                     ENDPOINTS                         AGE
istio-citadel            10.28.0.13:9093,10.28.0.13:8060   2m
istio-pilot              10.24.0.24:65000                  2m
istio-policy             10.24.0.29:65000                  2m
istio-sidecar-injector   10.28.0.14:443                    2m
istio-telemetry          10.24.0.32:65000                  2m
zipkin                   10.24.0.8:65000                   2m
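The output above is consistent with each central service being mirrored as a headless Service without a selector, paired with an Endpoints object that carries the central pod's IP. The sketch below renders such a pair for illustration; the exact manifests the operator generates are not shown in this post, so the field layout and the `render_synced_service` helper are assumptions, and only the names, the `None` cluster IP and port 65000 come from the output.

```shell
#!/usr/bin/env bash
# Sketch only: render_synced_service is a hypothetical helper that prints
# an illustrative manifest pair; it is not taken from the operator source.

# $1: service name, $2: pod IP on the central cluster,
# $3: port (defaults to 65000), $4: namespace (defaults to istio-system)
render_synced_service() {
  local name=$1 pod_ip=$2 port=${3:-65000} namespace=${4:-istio-system}
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${name}
  namespace: ${namespace}
spec:
  clusterIP: None
---
apiVersion: v1
kind: Endpoints
metadata:
  name: ${name}
  namespace: ${namespace}
subsets:
- addresses:
  - ip: ${pod_ip}
  ports:
  - port: ${port}
EOF
}
```

Running `render_synced_service istio-pilot 10.24.0.24` prints a pair matching the `istio-pilot` rows above. Because the Service has no selector, Kubernetes does not manage the Endpoints object itself, which leaves an external controller free to keep it pointed at the right pod IP.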

Let’s see what happens when the telemetry pod is removed from the central cluster.

[context:central-cluster] ❯ kubectl delete pods/istio-telemetry-77476d58c7-pmnnq

pod "istio-telemetry-77476d58c7-pmnnq" deleted

[context:central-cluster] ❯ kubectl logs -f istio-operator-controller-manager-0 -c manager

{"msg":"pod event detected","podName":"istio-telemetry-77476d58c7-pmnnq","podIP":"10.24.0.32","podStatus":"Running"}

{"msg":"updating endpoints","cluster":"remote-cluster"}

[context:remote-cluster] ❯ kubectl get endpoints/istio-telemetry
NAME              ENDPOINTS          AGE
istio-telemetry   10.24.0.32:65000   4m

The operator detected the pod event on the central cluster, propagated the change to the remote cluster, and updated the service endpoint to the pod's current IP.
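To observe the sync from the remote side, one option is to poll the endpoint until its address differs from the old one. This is a rough sketch that assumes the replacement pod receives a new IP and that `kubectl` points at the remote cluster; `endpoint_ips` and `wait_for_endpoint_change` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch only: both helpers are hypothetical, not operator tooling.

# Print the IPs currently listed in an Endpoints object.
endpoint_ips() {
  kubectl get endpoints "$1" -n "${2:-istio-system}" \
    -o jsonpath='{.subsets[*].addresses[*].ip}'
}

# Poll until the endpoint reports an address other than the old IP.
# $1: endpoint name, $2: old IP, $3: attempts (default 30), $4: delay in seconds (default 2)
wait_for_endpoint_change() {
  local name=$1 old_ip=$2 attempts=${3:-30} delay=${4:-2}
  local current i
  for (( i = 0; i < attempts; i++ )); do
    current=$(endpoint_ips "$name")
    if [[ -n $current && $current != "$old_ip" ]]; then
      echo "$current"
      return 0
    fi
    sleep "$delay"
  done
  return 1
}
```

For instance, `wait_for_endpoint_change istio-telemetry 10.24.0.32` prints the new address once the endpoint changes, or returns non-zero if nothing changes within the allotted attempts.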

Setting the autoInjectionNamespaces configuration in the RemoteIstio spec will give us automatic namespace labeling for namespaces on the remote side that need auto-sidecar injection.

[context:remote-cluster] ❯ kubectl get namespaces/default --show-labels
NAME      STATUS   AGE   LABELS
default   Active   1h    istio-injection=enabled,istio-operator-managed-injection=enabled
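For comparison, labeling namespaces by hand would look roughly like the sketch below. It applies only the standard `istio-injection=enabled` label; the operator's own `istio-operator-managed-injection` label is left out because its exact use is not described here. `label_for_injection` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch only: label_for_injection is a hypothetical helper.

# Enable automatic sidecar injection for every namespace passed as an argument.
label_for_injection() {
  local ns
  for ns in "$@"; do
    # --overwrite keeps the call idempotent if the label is already set
    kubectl label namespace "$ns" istio-injection=enabled --overwrite
  done
}
```

With the operator, listing a namespace under `autoInjectionNamespaces` replaces a manual call such as `label_for_injection default` on each remote cluster.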

The complete example is available here.

Roadmap

As was mentioned at the beginning of this post, there are several multi-cluster scenarios. Our intention is that our operator should support those scenarios, as well as several mixed cluster scenarios.

1. Single mesh multi-cluster with fewer networking constraints

Istio 1.1 is going to introduce Split Horizon EDS and SNI-aware routing. Once these are available, it will no longer be necessary for a single mesh Istio setup to have a flat network. We plan to add that feature after re-releasing our operator with Istio 1.1 support.

2. Mesh federation multi-cluster

Our operator – with upcoming Istio 1.1 support – will also provide support for full mesh federation multi-clusters by connecting two or more k8s clusters running Istio control planes together via their Istio gateways. The alternative, setting up a mesh federation and simultaneously managing inter-connecting services between clusters without an operator, would require a significant amount of manual work.

About Banzai Cloud Pipeline

Banzai Cloud’s Pipeline provides a platform for enterprises to develop, deploy, and scale container-based applications. It leverages best-of-breed cloud components, such as Kubernetes, to create a highly productive, yet flexible environment for developers and operations teams alike. Strong security measures — multiple authentication backends, fine-grained authorization, dynamic secret management, automated secure communications between components using TLS, vulnerability scans, static code analysis, CI/CD, and so on — are default features of the Pipeline platform.