
Service mesh has, without question, been one of the most vigorously debated and obsessed-over topics in recent memory. Whichever way you turn, you seem to run into heated arguments between developers who are convinced that service mesh will outgrow even Kubernetes and naysayers who are convinced that, outside of a few large companies, service mesh is impractical to the point of uselessness. As always, the truth probably lies somewhere in between, but that doesn't mean you can avoid forming an opinion, especially if you're a Kubernetes distribution and platform provider like us.

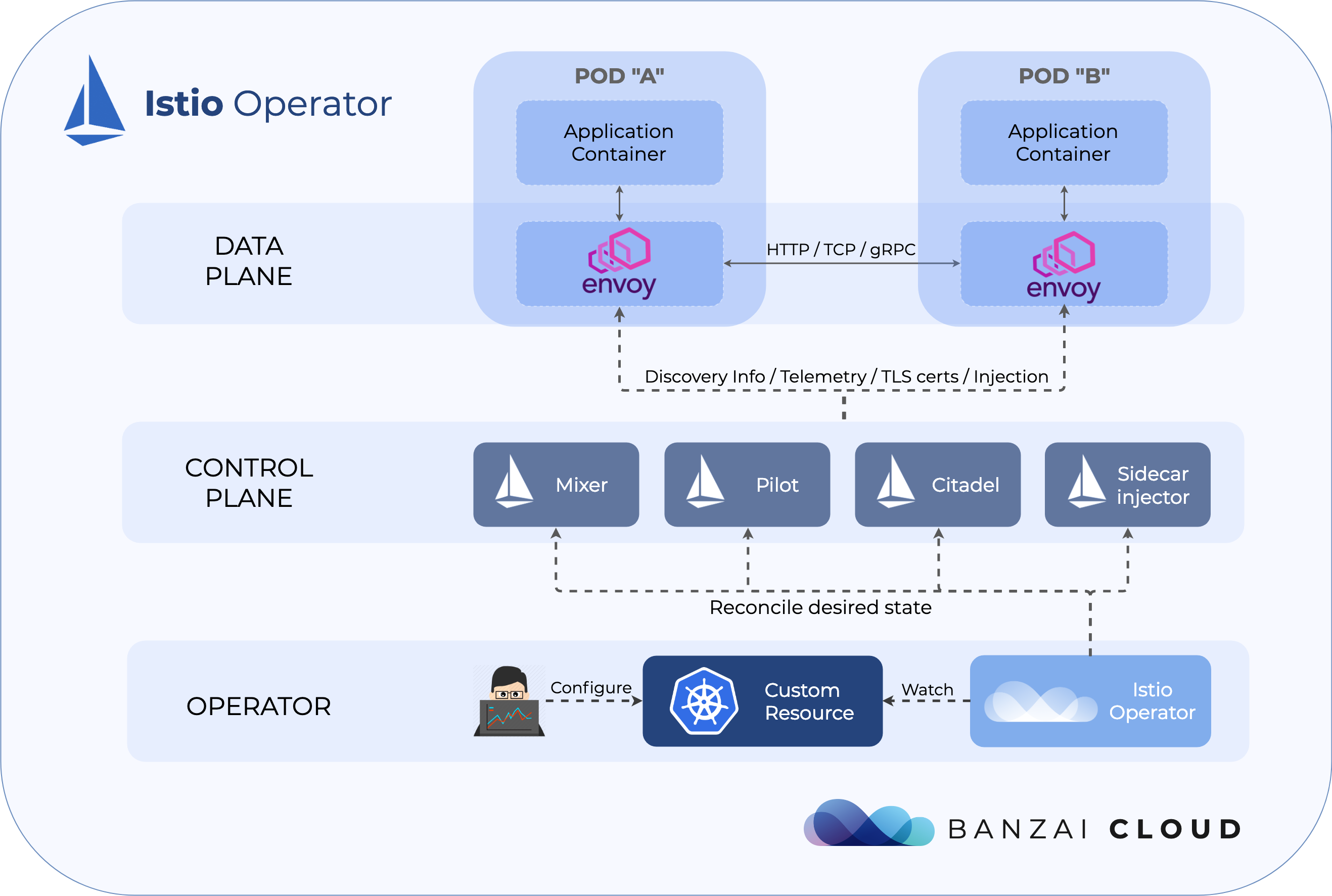

The inclusion of Istio in the Pipeline platform has been one of our most frequently requested features over the last few months, so whether we would put in the effort to support it was a foregone conclusion. While working hard to provide customers with a great user experience, we realized that even the seemingly simple task of managing Istio on a Kubernetes cluster can be difficult. To meet and overcome the challenges involved, we've built an operator that encapsulates the management of the various components of the Istio service mesh.

We realize that we're not the only ones struggling with these kinds of problems (see, for example, #9333 on GitHub), so we've decided to open source our work.

Now, we’re very excited to announce the alpha release of our Istio operator, and we hope that a great community will emerge around it and help drive innovation in the future.

Motivation

Istio is currently far and away the most mature service mesh solution on Kubernetes (we really like the concept of Linkerd2, and hope that it will grow, but it’s just not there yet), so it was clear that Istio would be the first service mesh that we would support in Pipeline.

In creating the operator, our main goal was to simplify the deployment and management of Istio's components. Its first release effectively replaces the Helm chart as the preferred means of installing Istio, while providing a few additional features.

Istio has a great Helm chart, but we could think of several reasons why an operator would better suit our purposes:

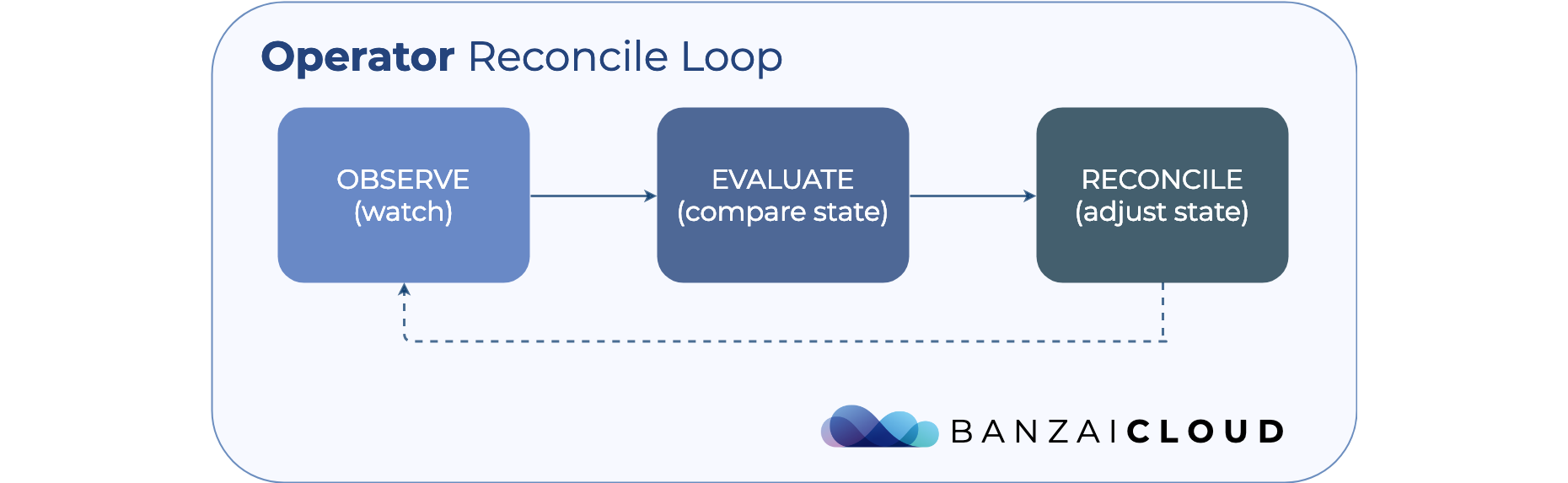

- Helm is “only” a package manager, so it only concerns itself with Istio's components until they are first deployed to a cluster. An operator continuously reconciles the state of those components to keep them healthy.

- The Helm chart is very complex and hard to read. We prefer to describe everything in code instead of in YAML files.

- Several small convenience features can be implemented that would otherwise have to be done manually (like labelling the namespaces in which we want sidecar auto-injection).

- Complex Istio features, like multi-cluster or canary releases, can be simplified via higher level concepts.

Current features

This is only an alpha release, so the feature set is quite limited. Nonetheless, our operator can do a few handy things already:

1. Manage Istio’s components - no Helm needed

This project follows the conventions of a standard operator, and has a corresponding custom resource definition that describes the desired state of its Istio deployment. The custom resource contains all the necessary configuration values, and, if one or more of these change, the operator automatically reconciles the state of the components to match the new desired state. The same reconciliation occurs if the components' state changes for any other reason, be it the accidental deletion of a necessary resource or a change in the cluster infrastructure.
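The reconciliation idea can be illustrated with a minimal Go sketch. It is not the operator's code. The `Component` and `Action` types and the `reconcile` function are hypothetical, and a real Kubebuilder controller compares Kubernetes objects fetched from the API server rather than in-memory maps. The sketch diffs desired state against observed state and returns the steps needed to converge them. Each component is reduced to a name and a spec hash.

```go
package main

import (
	"fmt"
	"sort"
)

// Component is a hypothetical, simplified stand-in for an Istio component
// (for example a Deployment): a name plus a hash of its spec.
type Component struct {
	Name     string
	SpecHash string
}

// Action is one step needed to move the observed state toward the desired state.
type Action struct {
	Verb string // "create", "update" or "delete"
	Name string
}

// reconcile compares desired and observed components and returns the actions
// that would converge them. Once those actions are applied, running it again
// yields no actions, which is what makes a reconcile loop safe to repeat.
func reconcile(desired, observed map[string]Component) []Action {
	var actions []Action
	for name, want := range desired {
		have, ok := observed[name]
		switch {
		case !ok:
			actions = append(actions, Action{"create", name})
		case have.SpecHash != want.SpecHash:
			actions = append(actions, Action{"update", name})
		}
	}
	for name := range observed {
		if _, ok := desired[name]; !ok {
			actions = append(actions, Action{"delete", name})
		}
	}
	// Sort for deterministic output; Go map iteration order is random.
	sort.Slice(actions, func(i, j int) bool { return actions[i].Name < actions[j].Name })
	return actions
}

func main() {
	desired := map[string]Component{
		"istio-pilot":   {"istio-pilot", "v2"},
		"istio-citadel": {"istio-citadel", "v1"},
	}
	// istio-citadel was accidentally deleted, istio-pilot has a stale spec,
	// and istio-old is no longer wanted.
	observed := map[string]Component{
		"istio-pilot": {"istio-pilot", "v1"},
		"istio-old":   {"istio-old", "v1"},
	}
	for _, a := range reconcile(desired, observed) {
		fmt.Println(a.Verb, a.Name)
	}
	// Prints:
	// create istio-citadel
	// delete istio-old
	// update istio-pilot
}
```

The same function handles both triggers described above. A configuration change alters `desired`, and drift in the cluster alters `observed`.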

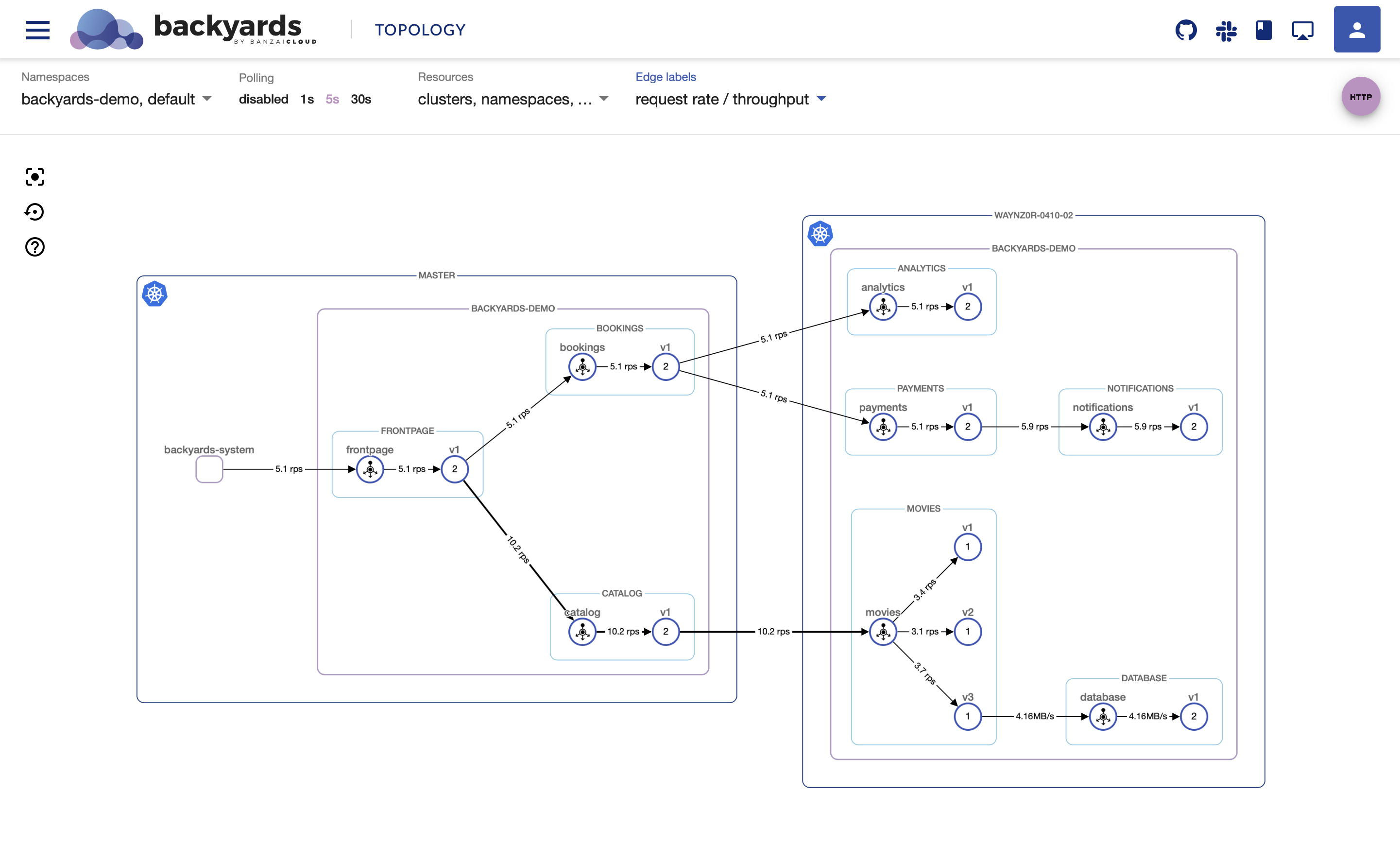

2. Multi-cluster federation

Multi-cluster federation functions by enabling Kubernetes control planes running a remote configuration to connect to a single Istio control plane. Once one or more remote Kubernetes clusters are connected to the Istio control plane, Envoy communicates with the Istio control plane to form a mesh network across multiple Kubernetes clusters.

The operator handles deploying Istio components to the remote clusters and provides a sync mechanism that keeps Istio's central components constantly reachable from the remote clusters. DNS entries are automatically updated if a pod restarts or if some other failure occurs on the Istio control plane.

A working multi-cluster federation has two main requirements. First, the pod CIDRs of all clusters must be unique and routable to each other. Second, the API servers must also be routable to each other. We plan on further enhancing this feature in future releases, and we'll be writing a detailed blog post on the topic soon. Until then, if you're interested, you can find an example of how this works here.
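The first requirement can be partly checked before federating clusters. The Go sketch below is only illustrative. The `cluster` type and the cluster names are hypothetical, and it verifies that the pod CIDRs are unique (non-overlapping), not that they are actually routable, which depends on the network setup. It uses only the standard `net` package.

```go
package main

import (
	"fmt"
	"net"
)

// cluster pairs a (hypothetical) cluster name with its pod CIDR.
type cluster struct {
	Name, PodCIDR string
}

// overlaps reports whether two CIDR blocks share any address. CIDR blocks are
// aligned prefixes, so they either nest or are disjoint; checking whether
// either contains the other's network address is therefore sufficient.
func overlaps(a, b *net.IPNet) bool {
	return a.Contains(b.IP) || b.Contains(a.IP)
}

// checkPodCIDRs returns an error if any pod CIDR fails to parse or if the pod
// CIDRs of any two clusters overlap. It does not check routability.
func checkPodCIDRs(clusters []cluster) error {
	nets := make([]*net.IPNet, len(clusters))
	for i, c := range clusters {
		_, n, err := net.ParseCIDR(c.PodCIDR)
		if err != nil {
			return fmt.Errorf("cluster %s: %w", c.Name, err)
		}
		nets[i] = n
	}
	for i := range nets {
		for j := i + 1; j < len(nets); j++ {
			if overlaps(nets[i], nets[j]) {
				return fmt.Errorf("pod CIDRs of %s (%s) and %s (%s) overlap",
					clusters[i].Name, nets[i], clusters[j].Name, nets[j])
			}
		}
	}
	return nil
}

func main() {
	clusters := []cluster{
		{"central", "10.0.0.0/16"},
		{"remote-1", "10.1.0.0/16"},
		{"remote-2", "10.0.128.0/17"}, // collides with central
	}
	if err := checkPodCIDRs(clusters); err != nil {
		fmt.Println("invalid federation:", err)
	}
}
```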

Read more about multi-cluster federation:

- Automate single mesh multi-clusters with the Istio operator

3. Manage automatic sidecar-injection

The Istio custom resource keeps track of the namespaces where automatic sidecar injection is enabled, so you can manage that feature from one central location instead of labelling namespaces one by one. The injection label is also reconciled, so if you remove a namespace from that list, auto-injection will be turned off for it.
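A reconciled namespace list boils down to a set difference. The Go sketch below is illustrative only. The `istio-injection=enabled` namespace label is the one Istio's sidecar injector selects on, but `labelChanges` is a hypothetical helper, not the operator's code. It assumes the list in the custom resource is the single source of truth, and it ignores listed namespaces that don't exist yet.

```go
package main

import (
	"fmt"
	"sort"
)

// injectionLabel is the namespace label that Istio's sidecar injector
// webhook selects on when automatic injection is enabled.
const injectionLabel = "istio-injection"

// labelChanges compares the namespaces listed in the custom resource with the
// current labels of existing namespaces. It returns which namespaces need the
// label set to "enabled" and which need it removed.
func labelChanges(wanted []string, nsLabels map[string]map[string]string) (enable, disable []string) {
	want := make(map[string]bool, len(wanted))
	for _, ns := range wanted {
		want[ns] = true
	}
	for ns, labels := range nsLabels {
		enabled := labels[injectionLabel] == "enabled" // nil maps read as ""
		switch {
		case want[ns] && !enabled:
			enable = append(enable, ns)
		case !want[ns] && enabled:
			disable = append(disable, ns)
		}
	}
	sort.Strings(enable)
	sort.Strings(disable)
	return enable, disable
}

func main() {
	nsLabels := map[string]map[string]string{
		"default":     {},
		"payments":    {"istio-injection": "enabled"}, // removed from the list
		"kube-system": nil,
	}
	enable, disable := labelChanges([]string{"default"}, nsLabels)
	fmt.Println("enable:", enable, "disable:", disable)
	// enable: [default] disable: [payments]
}
```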

4. mTLS and control plane security

You can easily turn these on or off in the configuration while your mesh is already running. This type of configuration change is one of the best features of an operator: there's no need to reinstall charts or to manually delete and reconfigure internal Istio custom resources like MeshPolicies or default DestinationRules. Just change the configuration and the operator will take care of the rest.
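A toggle like `mtls` works well with an operator because the affected mesh-wide objects can be derived from the flag alone. The Go sketch below shows that idea. It is an assumption, modeled loosely on how Istio 1.0's Helm chart configures the "default" MeshPolicy (STRICT vs. PERMISSIVE peer mTLS) and a mesh-wide DestinationRule with the `ISTIO_MUTUAL` TLS mode. The `meshSecurity` type is hypothetical, and the operator's actual resources may differ.

```go
package main

import "fmt"

// meshSecurity is a hypothetical summary of the mesh-wide objects an operator
// would keep in sync with the `mtls` flag.
type meshSecurity struct {
	MeshPolicyPeerMode           string // peer mTLS mode of the "default" MeshPolicy
	CreateDefaultDestinationRule bool   // whether a mesh-wide "default" DestinationRule should exist
	DestinationRuleTLSMode       string // TLS mode of that DestinationRule, if it exists
}

// desiredMeshSecurity derives the desired objects from the flag alone. When
// the flag flips, a reconcile loop would update the MeshPolicy and create or
// delete the DestinationRule to match, so nothing has to be reinstalled.
func desiredMeshSecurity(mtls bool) meshSecurity {
	if mtls {
		return meshSecurity{
			MeshPolicyPeerMode:           "STRICT",
			CreateDefaultDestinationRule: true,
			DestinationRuleTLSMode:       "ISTIO_MUTUAL",
		}
	}
	return meshSecurity{MeshPolicyPeerMode: "PERMISSIVE"}
}

func main() {
	for _, enabled := range []bool{false, true} {
		fmt.Printf("mtls=%v -> %+v\n", enabled, desiredMeshSecurity(enabled))
	}
}
```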

Try it out!

The operator installs Istio version 1.0.5, and can run on Minikube v0.33.1+ and Kubernetes 1.10.0+.

Of course, you'll first need a Kubernetes cluster. You can create one by using Pipeline in your datacenter or on one of the six cloud providers we support.

To try out the operator, point KUBECONFIG to your cluster, clone the repository, and run the deploy make goal from the project root:

git clone git@github.com:banzaicloud/istio-operator.git
cd istio-operator
make deploy

This command will install a custom resource definition in the cluster, and will deploy the operator in the istio-system namespace.

Following a pattern typical of operators, this allows you to specify your Istio configuration in a Kubernetes custom resource.

Once you apply that to your cluster, the operator will start reconciling all of Istio’s components.

cat <<EOF | kubectl apply -n istio-system -f -
apiVersion: istio.banzaicloud.io/v1beta1
kind: Istio
metadata:
  labels:
    controller-tools.k8s.io: "1.0"
  name: istio
spec:
  mtls: false
  includeIPRanges: "*"
  excludeIPRanges: ""
  autoInjectionNamespaces:
  - "default"
  controlPlaneSecurityEnabled: false
EOF

After some time, you should see that the Istio pods are running:

kubectl get pods -n istio-system --watch
NAME                                      READY   STATUS    RESTARTS   AGE
istio-citadel-7fcc8fddbb-2jwjk            1/1     Running   0          4h
istio-egressgateway-77b7457955-pqzt8      1/1     Running   0          4h
istio-galley-94fc98cd9-wcl92              1/1     Running   0          4h
istio-ingressgateway-794976d866-mjqb7     1/1     Running   0          4h
istio-operator-controller-manager-0       2/2     Running   0          4h
istio-pilot-6f988ff756-4r6tg              2/2     Running   0          4h
istio-policy-6f947595c6-bz5zj             2/2     Running   0          4h
istio-sidecar-injector-68fdf88c87-zd5hq   1/1     Running   0          4h
istio-telemetry-7b774d4669-jrj68          2/2     Running   0          4h

And that the Istio custom resource is showing Available in its status field:

$ kubectl describe istio -n istio-system istio
Name:         istio
Namespace:    istio-system
Labels:       controller-tools.k8s.io=1.0
Annotations:  <none>
API Version:  istio.banzaicloud.io/v1beta1
Kind:         Istio
Metadata:
  Creation Timestamp:  2019-02-26T12:23:54Z
  Finalizers:
    istio-operator.finializer.banzaicloud.io
  Generation:        2
  Resource Version:  103778
  Self Link:         /apis/istio.banzaicloud.io/v1beta1/namespaces/istio-system/istios/istio
  UID:               62042df2-39c1-11e9-9464-42010a9a012e
Spec:
  Auto Injection Namespaces:
    default
  Control Plane Security Enabled:  true
  Include IP Ranges:               *
  Mtls:                            true
Status:
  Error Message:
  Status:         Available
Events:  <none>

Contributing

If you’re interested in this project, we’re happy to accept contributions:

- You can open an issue describing a feature request or bug

- You can send a pull request (we do our best to review and accept these ASAP)

- You can help new users with whatever issues they may encounter

- Or you can just star the repo to help support the development of this project

If you’re interested in contributing to the code, read on. Otherwise, feel free to skip to the Roadmap section.

Building and testing the project

Our operator was built using the Kubebuilder project. It follows the standards described in Kubebuilder’s documentation, the Kubebuilder book, but some default make goals and build commands have been changed.

To build the operator and run tests:

make vendor # runs `dep` to fetch the dependencies in the `vendor` folder
make # generates code if needed, builds the project, runs tests

If you'd like to try out your changes in a cluster, build your own image, push it to Docker Hub and deploy it to your cluster:

make docker-build IMG={YOUR_USERNAME}/istio-operator:v0.0.1
make docker-push IMG={YOUR_USERNAME}/istio-operator:v0.0.1
make deploy IMG={YOUR_USERNAME}/istio-operator:v0.0.1

To watch the operator’s logs, use:

kubectl logs -f -n istio-system $(kubectl get pod -l control-plane=controller-manager -n istio-system -o jsonpath='{.items..metadata.name}') manager

In order to allow the operator to set up Istio in your cluster, you should create a custom resource describing the desired configuration. There is a sample custom resource under config/samples:

kubectl create -n istio-system -f config/samples/istio_v1beta1_istio.yaml

Roadmap

Please note that the Istio operator is under heavy development and new releases might introduce breaking changes. We strive to maintain backward compatibility insofar as that is feasible, while rapidly adding new features. Issues, new features, or bugs are tracked on the project’s GitHub page - feel free to contribute yours!

Some significant features and future items on our roadmap:

1. Integration with Prometheus, Grafana and Jaeger

The Istio Helm chart can install and configure these components. However, we believe that it should not be the responsibility of Istio to manage them, but, instead, to provide easy ways to integrate them with external deployments. In the real world, developers often already have their own way of managing these components - like the Prometheus operator reconfigured for other rules and targets. In our case, Pipeline installs and automates the configuration of all these components during cluster creation or deployment.

2. Manage missing components like Servicegraph or Kiali

The alpha version only installs the core components of Istio, but we want to support add-ons like Servicegraph and Kiali. Are there any other components you might suggest and would like to see us support? Let us know!

3. Support for Istio 1.1 and easy upgrades

Istio 1.1 is coming soon, and will contain some major changes. We want to support the new version as soon as possible, and to make it easy to upgrade from the current 1.0.5 and 1.0.6 versions. Upgrading to a new Istio version currently involves manual steps, such as replacing old sidecars by re-injecting them. We are working to add support for automatic and seamless Istio version upgrades.
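One manual step in such an upgrade is finding the pods whose injected sidecar still runs the old proxy version. The Go sketch below shows that check under stated assumptions. The pod-to-image mapping is hypothetical input that would have to be collected from the cluster. The image names follow the `docker.io/istio/proxyv2:<version>` convention used by Istio 1.0. The helpers are illustrative, not part of the operator.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// imageTag returns the tag of a container image reference, or "" if it has
// none. A colon followed by a slash belongs to a registry port, not a tag.
// Digest references (image@sha256:...) are not handled in this sketch.
func imageTag(image string) string {
	i := strings.LastIndex(image, ":")
	if i < 0 || strings.Contains(image[i+1:], "/") {
		return ""
	}
	return image[i+1:]
}

// staleSidecars returns, sorted, the pods whose istio-proxy image tag differs
// from the target Istio version. These pods would need to be restarted so the
// injector can re-inject an up-to-date sidecar.
func staleSidecars(proxyImages map[string]string, target string) []string {
	var stale []string
	for pod, image := range proxyImages {
		if imageTag(image) != target {
			stale = append(stale, pod)
		}
	}
	sort.Strings(stale)
	return stale
}

func main() {
	// Hypothetical pod name -> istio-proxy image mapping.
	pods := map[string]string{
		"default/reviews-v1-abc": "docker.io/istio/proxyv2:1.0.5",
		"default/ratings-v1-def": "docker.io/istio/proxyv2:1.0.6",
	}
	fmt.Println(staleSidecars(pods, "1.0.6"))
}
```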

4. Adding a “Canary release” feature

Canary releases are one of the most common Istio use cases, but setting them up is complex and repetitive. Handling traffic routing in Istio custom resources and tracking errors in monitoring data are both things that can be automated. We'd like to create a higher level Canary resource that describes the configuration, so that the operator can manage a canary's whole lifecycle on that basis.
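The lifecycle such a resource would automate can be sketched in a few lines of Go. Everything here is hypothetical, since the Canary resource does not exist yet. The step size, the maximum weight, the error-rate threshold and the observed error rates are illustrative parameters, and `advance` and `routeWeights` are not part of any Istio or operator API. The sketch relies on one fact about Istio routing: a route's destination weights are expected to add up to 100.

```go
package main

import "fmt"

// advance returns the canary's next traffic weight (0-100). If the observed
// error rate exceeds the threshold, traffic is rolled back to 0 and ok is false.
func advance(current, step, max int, errorRate, threshold float64) (next int, ok bool) {
	if errorRate > threshold {
		return 0, false
	}
	next = current + step
	if next > max {
		next = max
	}
	return next, true
}

// routeWeights splits traffic between the stable and canary subsets so that
// the two weights of the route add up to 100.
func routeWeights(canary int) (stable, canaryWeight int) {
	return 100 - canary, canary
}

func main() {
	// Hypothetical error rates observed after each step, e.g. from Prometheus.
	errorRates := []float64{0.0, 0.01, 0.002, 0.0}
	weight := 0
	for _, rate := range errorRates {
		next, ok := advance(weight, 25, 100, rate, 0.05)
		if !ok {
			fmt.Println("error threshold exceeded, rolling back")
			break
		}
		weight = next
		stable, canary := routeWeights(weight)
		fmt.Printf("stable=%d canary=%d\n", stable, canary)
	}
}
```

Keeping the decision logic in small pure functions like these would let an operator recompute the next step on every reconcile, rather than storing progress outside the custom resource's status.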

5. Providing enhanced multi-cluster federation

Current multi-cluster support in the operator simplifies a lot compared with the “Helm method”, but it has some demanding requirements, such as a flat network in which pod IPs are routable from one cluster to another. That's why we are working to add gateway federation to the Istio operator.

6. Security improvements

Currently, the operator needs full admin permissions in a Kubernetes cluster to work properly; its corresponding role is generated by make deploy. The operator creates several additional roles for Istio, and because Kubernetes RBAC prevents privilege escalation, the operator must itself hold every permission contained in those roles, or, from Kubernetes 1.12 onwards, it must have the escalate permission on roles and clusterroles.
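The escalation rule described above can be modeled in a simplified form. The Go sketch below is a deliberately reduced model, not the Kubernetes authorizer. Each permission is one API group, resource and verb with `*` wildcards. It ignores resourceNames, non-resource URLs and namespace scoping, and it checks only escalation, not the separate requirement to hold the `create` verb on roles. The types and functions are hypothetical.

```go
package main

import "fmt"

// Permission is a simplified RBAC rule: one API group, resource and verb.
type Permission struct {
	APIGroup, Resource, Verb string
}

func match(held, wanted string) bool { return held == "*" || held == wanted }

// allowed reports whether any held permission covers the wanted one.
func allowed(held []Permission, wanted Permission) bool {
	for _, h := range held {
		if match(h.APIGroup, wanted.APIGroup) && match(h.Resource, wanted.Resource) && match(h.Verb, wanted.Verb) {
			return true
		}
	}
	return false
}

// canGrant models the escalation check for creating a role of the given kind
// ("roles" or "clusterroles"). The creator must already hold every permission
// in the role, or have the "escalate" verb on that kind.
func canGrant(held, role []Permission, kind string) bool {
	if allowed(held, Permission{"rbac.authorization.k8s.io", kind, "escalate"}) {
		return true
	}
	for _, p := range role {
		if !allowed(held, p) {
			return false
		}
	}
	return true
}

func main() {
	// "" is the core API group.
	operator := []Permission{{"", "pods", "get"}, {"", "pods", "list"}}
	istioRole := []Permission{{"", "pods", "get"}, {"", "secrets", "get"}}
	fmt.Println(canGrant(operator, istioRole, "clusterroles")) // false: secrets/get is missing

	operator = append(operator, Permission{"rbac.authorization.k8s.io", "clusterroles", "escalate"})
	fmt.Println(canGrant(operator, istioRole, "clusterroles")) // true: escalate bypasses the check
}
```

This is why an operator that creates arbitrary Istio roles ends up needing either broad permissions itself or the narrower `escalate` verb.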

Another security problem is that Istio proxies run in privileged mode. We’ll put some effort into getting rid of this requirement soon.

About Banzai Cloud Pipeline

Banzai Cloud’s Pipeline provides a platform for enterprises to develop, deploy, and scale container-based applications. It leverages best-of-breed cloud components, such as Kubernetes, to create a highly productive, yet flexible environment for developers and operations teams alike. Strong security measures — multiple authentication backends, fine-grained authorization, dynamic secret management, automated secure communications between components using TLS, vulnerability scans, static code analysis, CI/CD, and so on — are default features of the Pipeline platform.