In a perfect world, resources are infinite and readily available. But as we all know, things in the real world are never quite so simple. The struggle for resources is much the same in the world of computer programs, where CPU cycles, memory and storage space, or network bandwidth can easily become scarcities even when using the latest generation of devices and technology.

Time-sharing, virtual memory and paging, logical volumes… Most operating systems today use these techniques to make the limitations of hardware disappear, without the average user or software developer having to think about them. Even so, the bounds of the machine (or of a number of machines, in the case of grid computing) become apparent eventually.

Just as we can hide internal parts of the machine behind a layer of abstraction to make them appear unlimited, we can apply the same technique to machines themselves. Cloud computing and other cloud services, like cloud storage, make it possible to provision virtual hardware on demand, seemingly without limits. Container orchestrators like Kubernetes, usually in tandem with cloud platforms, do the same thing on a yet higher level, scaling services inside and across that virtual hardware as required.

These technologies allow developers to pay less attention to the limits of infrastructure and hardware, and concentrate on creating business value. But with great limitlessness come great bills from your cloud provider… 🧾💰

Keeping costs down

When provisioning resources for a task, you’re working toward two opposing goals:

- the resources must be sufficient for solving the task and

- their cost should be kept to a minimum.

This leads to an optimization problem of which resources to use and for how long.
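To make the trade-off concrete, here is a minimal sketch of the optimization problem in Python. It is only an illustration: the instance types, their sizes and their prices are made up, `cheapest_plan` is a hypothetical helper, and the search is deliberately naive in that it only compares plans built from a single instance type. Real recommendation tools take many more factors into account.

```python
from dataclasses import dataclass
from math import ceil


@dataclass(frozen=True)
class InstanceType:
    name: str            # hypothetical instance type name
    vcpus: int
    memory_gib: float
    hourly_price: float  # made-up price in USD per hour


def cheapest_plan(types, need_vcpus, need_memory_gib, hours):
    """Return (instance type, node count, total cost) with the lowest total cost.

    Only homogeneous plans are considered: N nodes of a single type.
    Returns None if no instance types are given.
    """
    best = None
    for t in types:
        # Enough nodes of this type to cover both the CPU and the memory need.
        count = max(ceil(need_vcpus / t.vcpus), ceil(need_memory_gib / t.memory_gib))
        cost = count * t.hourly_price * hours
        if best is None or cost < best[2]:
            best = (t, count, cost)
    return best


# Illustrative catalog; none of these values come from a real provider.
CATALOG = [
    InstanceType("small", vcpus=2, memory_gib=4, hourly_price=0.05),
    InstanceType("medium", vcpus=4, memory_gib=16, hourly_price=0.12),
    InstanceType("large", vcpus=8, memory_gib=32, hourly_price=0.22),
]

plan = cheapest_plan(CATALOG, need_vcpus=16, need_memory_gib=48, hours=8)
```

With this made-up catalog, the sufficiency requirement sets the node count for each type, and the cost goal then picks between the resulting plans. Changing `hours` scales every plan's cost equally, which is why the "for how long" part of the problem matters just as much as the choice of instance type.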

First, you have to figure out what kinds of resources you’ll need. Next, you’ll have to select a service model for accessing a given resource or group of resources: IaaS, PaaS or SaaS. To give the best service to their customers (and, incidentally, to make selecting the right resources much harder), cloud providers offer a plethora of options to choose from, both for resources and for the properties of those resources, each with different pricing. There might also be periods of time when some resources are cheaper or more expensive based on expected or actual demand, and even geography can play a role in determining prices.

Cloud experts around the world make a living just by learning and staying up to date with the ins and outs of one or more of these platforms and their service offerings, and by helping businesses apply them cost-effectively. There are also tools that try to assist with these decisions, either built into the services by the cloud providers themselves or developed by third parties. A good example is our own Pipeline platform: it uses Telescopes to make recommendations about what cloud infrastructure to deploy for your Kubernetes clusters. Telescopes, in turn, queries Cloudinfo for reliable data on instance type attributes and product pricing of the most prominent cloud providers.

So, at last, you’ve figured out which resources you want to use. Awesome! Just don’t forget to turn the lights off before you leave… 💡💸

Don’t keep the meter running!

Today’s cloud platforms provide users with an almost carefree experience of provisioning and using their virtual resources. Just fill out some forms, click a few buttons, and 5 minutes later there’s a whole army of virtual machines with practically infinite storage space at your disposal. Upload your scripts, binaries, configuration and data files, check a few options, and watch your service come alive and scale automagically with user demand. These levels of automation make you forget about the hurdles of buying hardware, installing software and keeping it up to date, and hiring a maintenance staff when you just can’t do it all yourself anymore. What’s also easy to forget is that these resources can cost you a lot when left unchecked.

Manual resource management is tiresome for a small number of resources and impractical for a large one. Automating resource management is therefore an essential part of service operations. Automatic scaling of services based on demand is commonplace in production, and with continuous deployment even deployment happens without human intervention, but shutting down services is kept manual as a safeguard. But what about development? Developers’ needs are different from those of production. They test their code automatically with CI, test manually, and demo features to colleagues and customers when needed. The latter two require services to be deployed and shut down manually, but while you cannot perform a test or a demo without the deployment, it’s all too easy to forget about cleaning up after yourself.

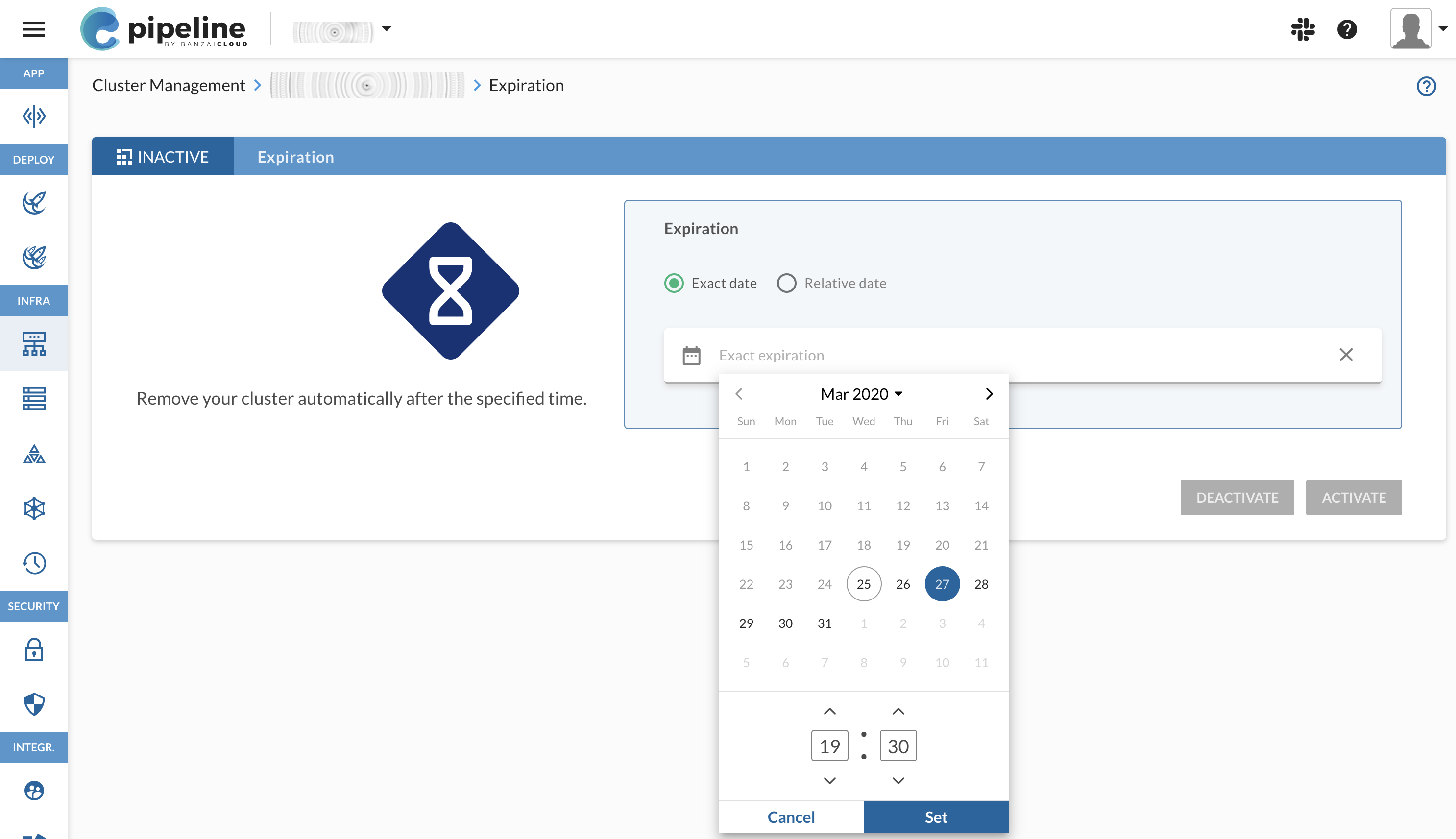

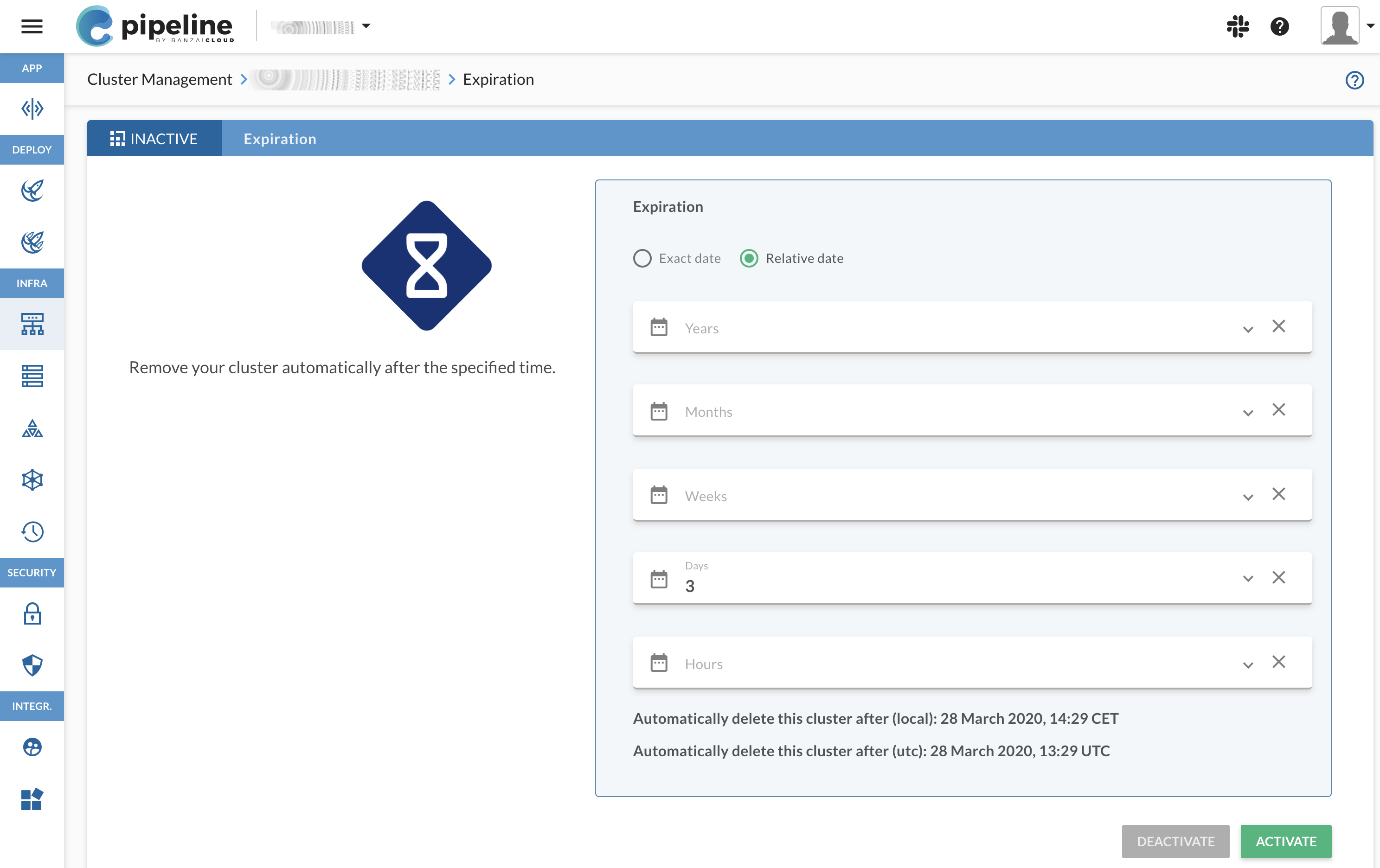

We had the same issue in-house with Pipeline. Pipeline makes it very easy to provision Kubernetes clusters, but even easier to leave them running after a demo or testing session. While Pipeline gives a great overview of your clusters running on any of the 5 supported cloud providers, people get distracted easily and forget to check on their leftover clusters. And even with the cheapest spot instances, a few clusters left running over a weekend or a vacation can quickly add up and consume a hefty chunk of company funds.

That’s why we developed the Expiration integrated service. This service lets you shut down a cluster automatically at a specified due date. When activating the service, you specify either a future date or a time offset (e.g. 2 weeks from now), and the service will make sure the cluster is deleted along with its underlying resources [1], to keep it from wasting money unnecessarily. If you ever change your mind (before the due date, obviously 😀), you can simply update the deadline or turn off the service altogether.

The best part is that this works on all 5 cloud providers that Pipeline supports, both for the managed Kubernetes solution of these providers and when you are running our own Kubernetes distribution, PKE. In a future version we plan on adding the option to automatically activate the service with a default offset on newly created clusters, but until then you can Do It Yourself with an automation script using Pipeline’s CLI tool.
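The date-or-offset choice is easy to picture in code. The following Python sketch is not Pipeline’s implementation and does not use its API or CLI; `resolve_deadline` and `is_expired` are hypothetical names, and the sketch only assumes the semantics described above: exactly one of an absolute date or an offset is given, the deadline must lie in the future, and the cluster becomes due for deletion once the deadline has passed.

```python
from datetime import datetime, timedelta, timezone


def resolve_deadline(now, date=None, offset=None):
    """Turn either an absolute date or a time offset into a concrete deadline."""
    if (date is None) == (offset is None):
        raise ValueError("specify exactly one of date or offset")
    deadline = date if date is not None else now + offset
    if deadline <= now:
        raise ValueError("the deadline must be in the future")
    return deadline


def is_expired(deadline, now):
    """True once the deadline has passed, i.e. the cluster is due for deletion."""
    return now >= deadline


# Activate with an offset of 2 weeks, counted from an arbitrary example time.
now = datetime(2020, 3, 2, 9, 0, tzinfo=timezone.utc)
deadline = resolve_deadline(now, offset=timedelta(weeks=2))

# Changing your mind before the due date: replace the deadline with a later date.
extended = resolve_deadline(now, date=datetime(2020, 4, 1, tzinfo=timezone.utc))
```

Using timezone-aware timestamps throughout avoids ambiguity about when exactly a deadline falls, which matters when the people creating clusters and the service deleting them are in different time zones.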

About Banzai Cloud Pipeline

Banzai Cloud’s Pipeline provides a platform for enterprises to develop, deploy, and scale container-based applications. It leverages best-of-breed cloud components, such as Kubernetes, to create a highly productive, yet flexible environment for developers and operations teams alike. Strong security measures — multiple authentication backends, fine-grained authorization, dynamic secret management, automated secure communications between components using TLS, vulnerability scans, static code analysis, CI/CD, and so on — are default features of the Pipeline platform.

[1] Except for imported clusters, where it only removes the cluster from the Pipeline system.