Check out Backyards in action on your own clusters!

Want to know more? Get in touch with us, or delve into the details of the latest release.

Or just take a look at some of the Istio features that Backyards automates and simplifies for you, and which we’ve already blogged about.

Istio has been rightfully praised for ushering in free observability and secure service to service communication. Other, more significant features, however, are what truly make Istio the Swiss army knife of service mesh operators; when it comes to meeting SLOs like uptime, latency and error rates, the ability to manage traffic between services is absolutely critical.

When we released the Istio operator earlier this year, our goal (besides managing Istio installation and upgrades) was to provide support for these excellent traffic routing features, while making everything more usable and UX friendly. We ended up creating a simple and automated service mesh, Backyards (now Cisco Service Mesh Manager), which features a management UI, CLI and GraphQL API on top of our Istio operator. Backyards is integrated into Banzai Cloud’s container management platform, Pipeline; however, it also works, and is available, as a standalone product. Naturally, using Backyards with Pipeline provides users with a variety of specific benefits (like managing applications in a multi-cloud and hybrid cloud world), but Backyards works on any Kubernetes installation.

Some of the related Backyards features we have already blogged about:

Circuit breaking: failure is an option

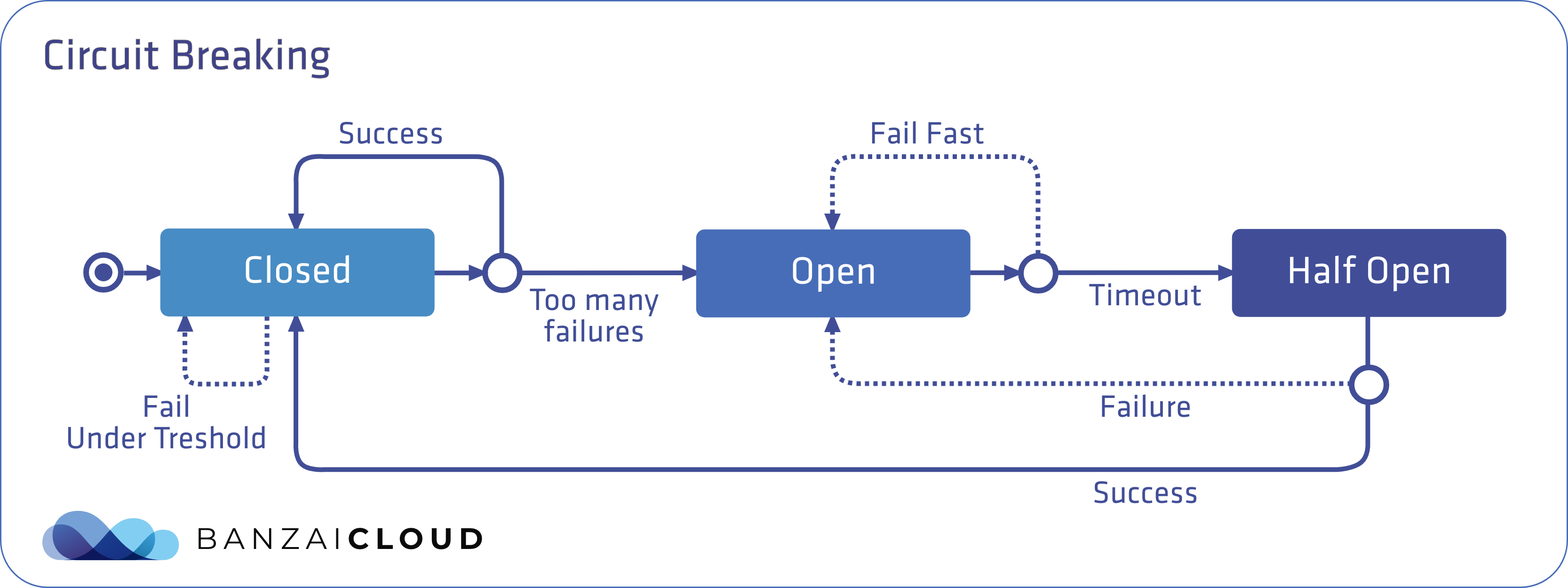

In microservices architecture, services are written in different languages, deployed across multiple nodes or clusters and have different response times or failure rates. Usually, if a service responds to requests successfully (and in a timely manner), it has performed satisfactorily. However, this is often not the case, and downstream clients need to be protected from excessive slowness of upstream services. Upstream services, in turn, must be protected from being overloaded by a backlog of requests. This becomes more complicated with multiple clients, and can lead to a cascading series of failures throughout the whole infrastructure. The solution to this problem is the time-tested circuit breaker pattern.

A circuit breaker can have three states: closed, open and half open, and by default exists in a closed state. In the closed state, requests succeed or fail with no interference from the breaker until the number of failures reaches a predetermined threshold. When the threshold is reached, the circuit breaker opens. When a service is called while the breaker is open, the circuit breaker trips the requests, which means that it returns an error without attempting to execute the call. In this way, by tripping the request downstream at the client, cascading failures can be prevented in a production system. After a configurable timeout, the circuit breaker enters a half open state, in which requests are let through again to check whether the failing service has recovered from its broken behavior. If requests continue to fail in this state, then the circuit breaker is opened again and keeps tripping requests. Otherwise, if the requests succeed in the half open state, then the circuit breaker will close and the service will be allowed to handle requests again.
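The three states described above can be sketched in a few lines of Python. This is a minimal, generic illustration of the pattern, not how Istio or Envoy implement it; the class name, the `failure_threshold` and `reset_timeout` parameters, and the injectable `clock` are all assumptions made for the example.

```python
import time
from enum import Enum


class State(Enum):
    CLOSED = "closed"
    OPEN = "open"
    HALF_OPEN = "half_open"


class CircuitOpenError(Exception):
    """Raised when a call is tripped without being attempted."""


class CircuitBreaker:
    """Generic circuit breaker sketch (hypothetical names, illustration only)."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout  # seconds to stay open
        self.clock = clock                  # injectable so tests can control time
        self.state = State.CLOSED
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.state is State.OPEN:
            if self.clock() - self.opened_at >= self.reset_timeout:
                # Timeout elapsed: let a trial request through.
                self.state = State.HALF_OPEN
            else:
                # Trip the request: fail fast without calling the service.
                raise CircuitOpenError("circuit is open")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._on_failure()
            raise
        self._on_success()
        return result

    def _on_failure(self):
        self.failures += 1
        # A failed trial request, or too many failures, opens the circuit.
        if self.state is State.HALF_OPEN or self.failures >= self.failure_threshold:
            self.state = State.OPEN
            self.opened_at = self.clock()

    def _on_success(self):
        # A success closes the circuit and resets the failure count.
        self.failures = 0
        self.state = State.CLOSED
```

Wrapping a client call as `breaker.call(send_request)` is enough to get fail-fast behavior; `send_request` here stands for whatever function performs the actual call.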

Circuit breaking in Istio

Istio’s circuit breaking can be configured in the TrafficPolicy field within the Destination Rule Istio Custom Resource. There are two fields under TrafficPolicy which are relevant to circuit breaking: ConnectionPoolSettings and OutlierDetection.

In ConnectionPoolSettings, the volume of connections can be configured for a service. OutlierDetection is for controlling the eviction of unhealthy services from the load balancing pool.

I.e. ConnectionPoolSettings controls the maximum number of requests, pending requests, retries or timeouts, while OutlierDetection controls the number of errors before a service is ejected from the connection pool, and is where minimum ejection duration and maximum ejection percentage can be set. For a full list of fields, check the documentation.

Istio utilizes the circuit breaking feature of Envoy in the background.

Let’s take a look at a Destination Rule with circuit breaking configured:

apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: notifications
spec:
  host: notifications
  trafficPolicy:
    connectionPool:
      tcp:
        maxConnections: 1
      http:
        http1MaxPendingRequests: 1
        maxRequestsPerConnection: 1
    outlierDetection:
      consecutiveErrors: 1
      interval: 1s
      baseEjectionTime: 3m
      maxEjectionPercent: 100

With these settings in the ConnectionPoolSettings field, only one connection can be open to the notifications service at any given time, with at most one pending request and at most one request per connection. Once a threshold is reached, the circuit breaker starts tripping requests.

The OutlierDetection section is set so that it checks whether there is an error calling the service every second. If there is, the service is ejected from the load balancing pool for at least three minutes (the 100% maximum ejection percent indicates that all services can be ejected from the pool at the same time, if necessary).
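To make the ejection rules more concrete, here is a simplified Python model of the behavior described above. It is a sketch under stated assumptions, not Envoy's actual algorithm: the class and method names are hypothetical, time is passed in explicitly, and the ejection duration is kept constant at the base ejection time.

```python
class OutlierDetector:
    """Simplified model of consecutive-error ejection (not Envoy's exact algorithm)."""

    def __init__(self, hosts, consecutive_errors=1, base_ejection_time=180.0,
                 max_ejection_percent=100):
        self.hosts = list(hosts)
        self.consecutive_errors = consecutive_errors
        self.base_ejection_time = base_ejection_time  # seconds (3m in the example)
        self.max_ejection_percent = max_ejection_percent
        self.error_streak = {host: 0 for host in self.hosts}
        self.ejected_until = {}  # host -> time at which it may return to the pool

    def record(self, host, ok):
        """Record the outcome of one call; a success resets the error streak."""
        self.error_streak[host] = 0 if ok else self.error_streak[host] + 1

    def sweep(self, now):
        """Run once per interval: readmit expired hosts, then eject failing ones."""
        for host, until in list(self.ejected_until.items()):
            if now >= until:
                del self.ejected_until[host]
                self.error_streak[host] = 0
        max_ejected = len(self.hosts) * self.max_ejection_percent // 100
        for host in self.hosts:
            if host in self.ejected_until:
                continue
            over_limit = len(self.ejected_until) >= max_ejected
            if self.error_streak[host] >= self.consecutive_errors and not over_limit:
                self.ejected_until[host] = now + self.base_ejection_time

    def healthy_hosts(self):
        """Hosts that are currently part of the load balancing pool."""
        return [h for h in self.hosts if h not in self.ejected_until]
```

With the defaults above (one error, 180 seconds, 100 percent), a single failed call is enough to remove a host from `healthy_hosts()` at the next sweep, and every host may be ejected at once.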

There’s one thing which you need to pay special attention to when manually creating the Destination Rule resource, which is whether or not you have mutual TLS enabled for this service. If you do, you’ll also need to set the field below inside your Destination Rule, otherwise your caller services will probably receive 503 responses when calling the notifications service:

trafficPolicy:
  tls:
    mode: ISTIO_MUTUAL

Mutual TLS can be enabled globally, for a specific namespace, or for a specific service. You should be aware of these settings in order to determine whether you should set trafficPolicy.tls.mode to ISTIO_MUTUAL or not. More importantly, it is very easy to forget to set this field when you are trying to configure a completely different feature (e.g. circuit breaking).

Tip: Always think about mutual TLS before creating a Destination Rule!

To trigger circuit breaker tripping, let’s call the notifications service from two connections simultaneously. Remember, the maxConnections field is set to one. When we do, we should see 503 responses arriving alongside 200s.

When a service receives a greater load from a client than it is believed to be able to handle (as configured in the circuit breaker), it starts returning 503 errors before attempting to make a call. This is a way of preventing an error cascade.
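The admission logic behind those 503s can be approximated with a small Python sketch. It is a deliberately simplified model, assuming one request per connection and a single pending queue; the class and its fields are hypothetical and do not mirror Envoy's internals.

```python
class ConnectionPoolLimiter:
    """Toy model of a connection pool with a pending-request limit."""

    def __init__(self, max_connections=1, max_pending=1):
        self.max_connections = max_connections
        self.max_pending = max_pending
        self.active = 0    # requests currently holding a connection
        self.pending = 0   # requests queued, waiting for a connection
        self.overflow = 0  # requests rejected because both limits were hit

    def admit(self):
        """Return 'active', 'pending', or 503 for a newly arriving request."""
        if self.active < self.max_connections:
            self.active += 1
            return "active"
        if self.pending < self.max_pending:
            self.pending += 1
            return "pending"
        # Both limits exhausted: fail fast instead of calling the service.
        self.overflow += 1
        return 503

    def finish(self):
        """An active request completed; a pending one takes over its connection."""
        if self.pending > 0:
            self.pending -= 1
        elif self.active > 0:
            self.active -= 1
```

With both limits set to one, as in the example Destination Rule, the third request that arrives while the first is still in flight is rejected immediately.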

Monitoring circuit breakers

It is an absolute must that you monitor your services in a production environment, and that you are notified and able to investigate when errors occur in the system. It stands to reason, then, that if you’ve configured a circuit breaker for your service, you’ll want to know when that breaker is tripped, what percentage of your requests were tripped by the circuit breaker, how many requests were tripped and when, and from which downstream client. If you can answer these questions, you can determine how well your circuit breaker is working, fine tune the circuit breaker configurations as needed, or optimize your service to handle additional concurrent requests.

Pro tip: you can see and configure all these (and more) on the Backyards UI if you keep reading.

Let’s see how to determine the trips caused by the circuit breaker in Istio:

The response code in the event of a circuit breaker trip is 503, so you won’t be able to differentiate it from other 503 errors based merely on that response. In Envoy, there is a counter called upstream_rq_pending_overflow, which is the total number of requests that overflowed the connection pool circuit breaker and were failed. If you dig into Envoy’s statistics for your service, you can acquire this information, but it’s not particularly easy to reach.

Envoy also returns response flags in addition to response codes, and there is a dedicated response flag to indicate circuit breaker trips: UO. This wouldn’t be particularly helpful if the flag could only be obtained through Envoy logs but, fortunately, it was implemented in Istio, so response flags are available in Istio metrics and can be fetched by Prometheus.

Circuit breaker trips can be queried like this:

sum(istio_requests_total{response_code="503", response_flags="UO"}) by (source_workload, destination_workload, response_code)
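The same metric can also be used to express trips as a share of all requests. The Python sketch below builds such a PromQL expression and the URL for Prometheus' instant query endpoint (`/api/v1/query`). The helper names, the `destination_workload` filter value, the one minute rate window, and the Prometheus address are assumptions for illustration; adjust them to your own setup.

```python
from urllib.parse import urlencode


def trip_ratio_query(destination_workload, window="1m"):
    """PromQL: fraction of requests to a workload that were tripped (flag UO)."""
    selector = f'destination_workload="{destination_workload}"'
    tripped = (
        f'sum(rate(istio_requests_total{{{selector}, response_flags="UO"}}[{window}]))'
    )
    total = f"sum(rate(istio_requests_total{{{selector}}}[{window}]))"
    return f"{tripped} / {total}"


def query_url(prometheus_base_url, promql):
    """URL for Prometheus' instant query API, with the expression URL-encoded."""
    params = urlencode({"query": promql})
    return f"{prometheus_base_url.rstrip('/')}/api/v1/query?{params}"


if __name__ == "__main__":
    # Hypothetical address; replace with wherever your Prometheus is reachable.
    print(query_url("http://localhost:9090", trip_ratio_query("notifications")))
```

Multiplying the result by 100 gives a percentage that can be graphed or alerted on.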

Circuit breaking with Backyards (now Cisco Service Mesh Manager), the easy way!

When using Backyards, you don’t need to manually edit the Destination Rules to set circuit breaking configurations. Instead, you can achieve the same result via a convenient UI, or, if you prefer, through the Backyards command line tool.

You don’t need to worry about misconfiguring your Destination Rules by forgetting to set trafficPolicy.tls.mode to ISTIO_MUTUAL. Backyards takes care of this for you; it finds out whether your service has mutual TLS enabled or not and sets the aforementioned field accordingly.

The above is just one example of Backyards’ validation features, which can help protect you from potential misconfigurations. There are lots more!

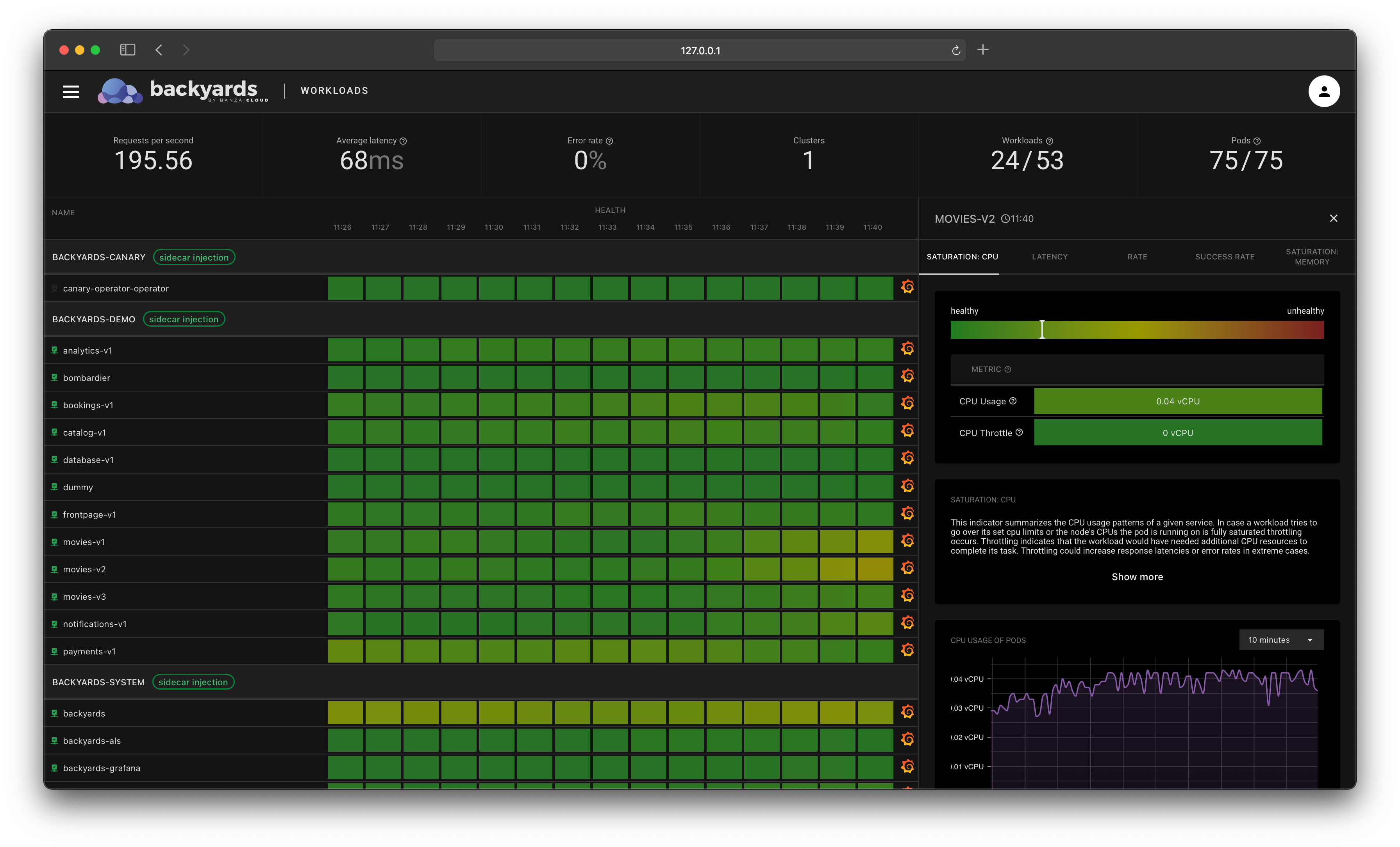

On top of this, you can see visualizations of and live dashboards for your services and requests, so you can easily determine how many of your requests were tripped by the circuit breaker, and from which caller and when.

Circuit breaking in action!

Create a cluster

First of all, we’ll need a Kubernetes cluster.

I created a Kubernetes cluster on GKE via the free developer version of the Pipeline platform. If you’d like to do likewise, go ahead and create your cluster on any of the several cloud providers we support or on-premise using Pipeline. Otherwise bring your own Kubernetes cluster.

Install Backyards

The easiest way by far of installing Istio, Backyards, and a demo application on a brand new cluster is to use the Backyards CLI.

You just need to issue one command (KUBECONFIG must be set for your cluster):

$ backyards install -a --run-demo

This command first installs Istio with our open-source Istio operator, then installs Backyards itself as well as a demo application for demonstration purposes. After the installation of each component has finished, the Backyards UI will automatically open and send some traffic to the demo application. By issuing this one simple command you can watch as Backyards starts a brand new Istio cluster in just a few minutes! Give it a try!

You can do all these steps in sequential order as well. Backyards requires an Istio cluster - if you don’t have one, you can install Istio with $ backyards istio install. Once you have Istio installed, you can install Backyards with $ backyards install. Finally, you can deploy the demo application with $ backyards demoapp install.

Tip: Backyards is a core component of the Pipeline platform - you can try the hosted developer version here: /products/pipeline/ (Service Mesh tab).

Circuit breaking using the Backyards UI

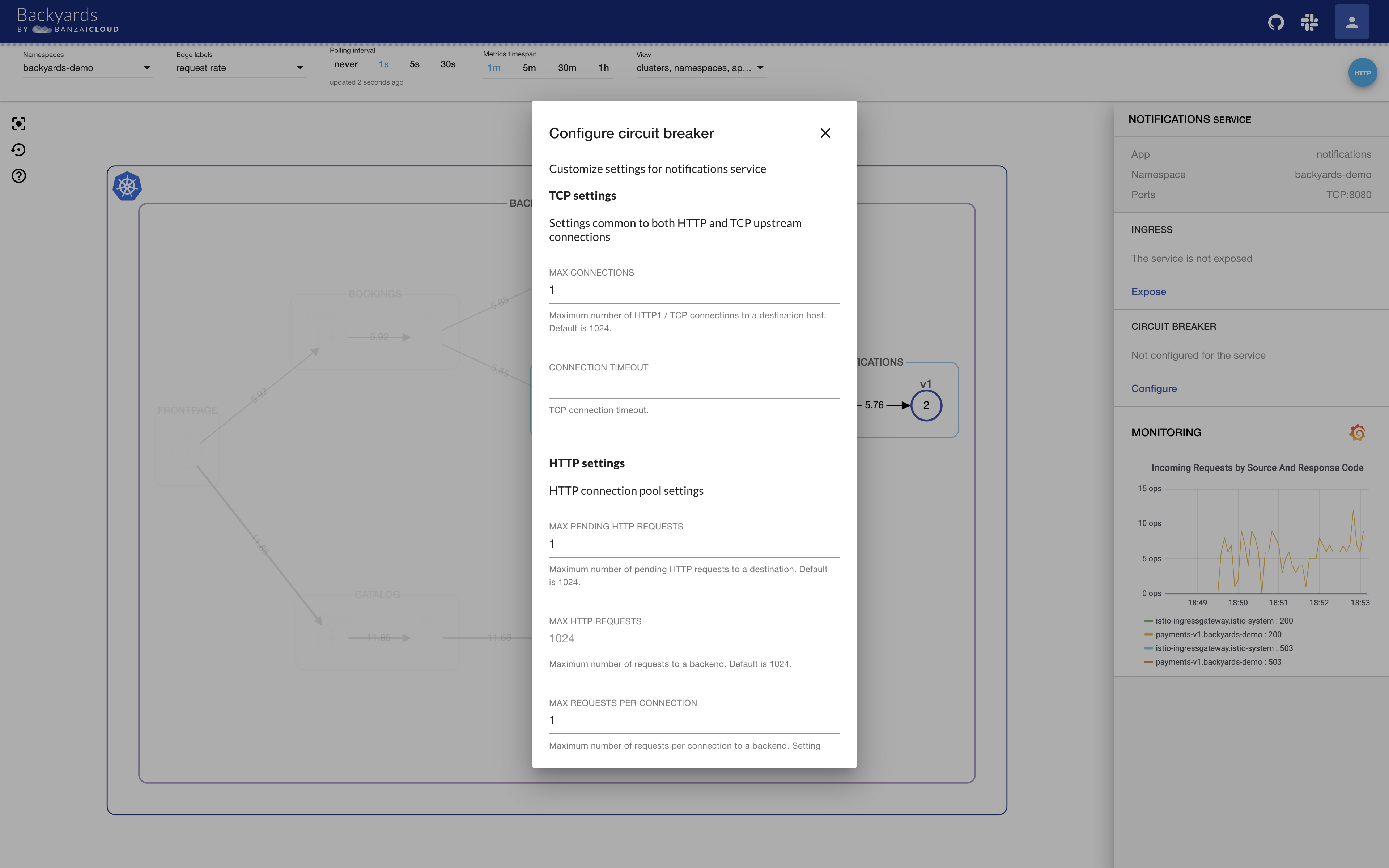

Set circuit breaking configurations

You don’t need to create or edit a Destination Rule resource manually; you can easily change the circuit breaker configurations from the UI. Let’s first create a demo circuit breaker.

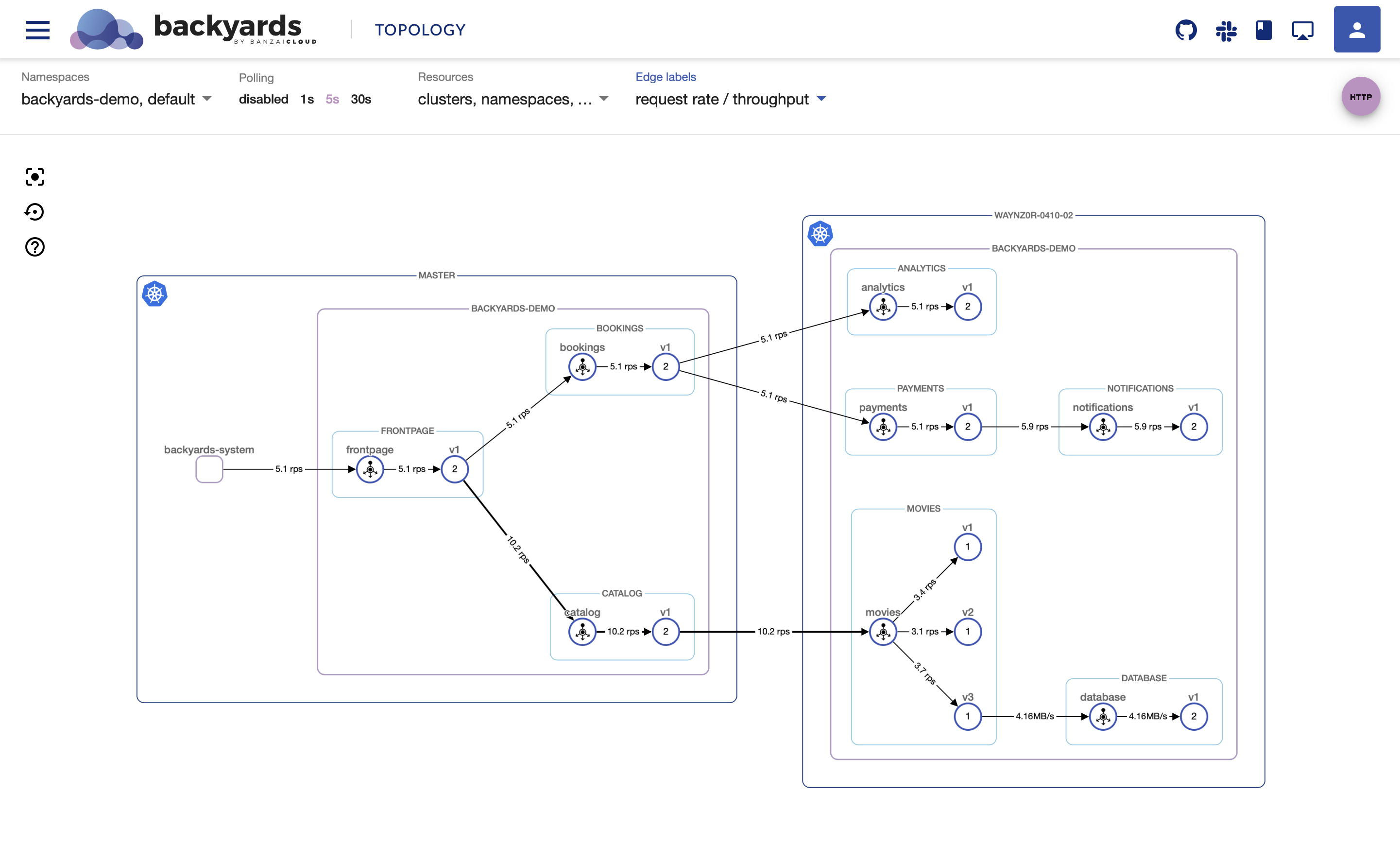

As you will see, Backyards (in contrast to, say, Kiali) is not just a web-based UI built for observability, but a feature-rich management tool for your service mesh that is single- and multi-cluster compatible and has a powerful CLI and GraphQL API.

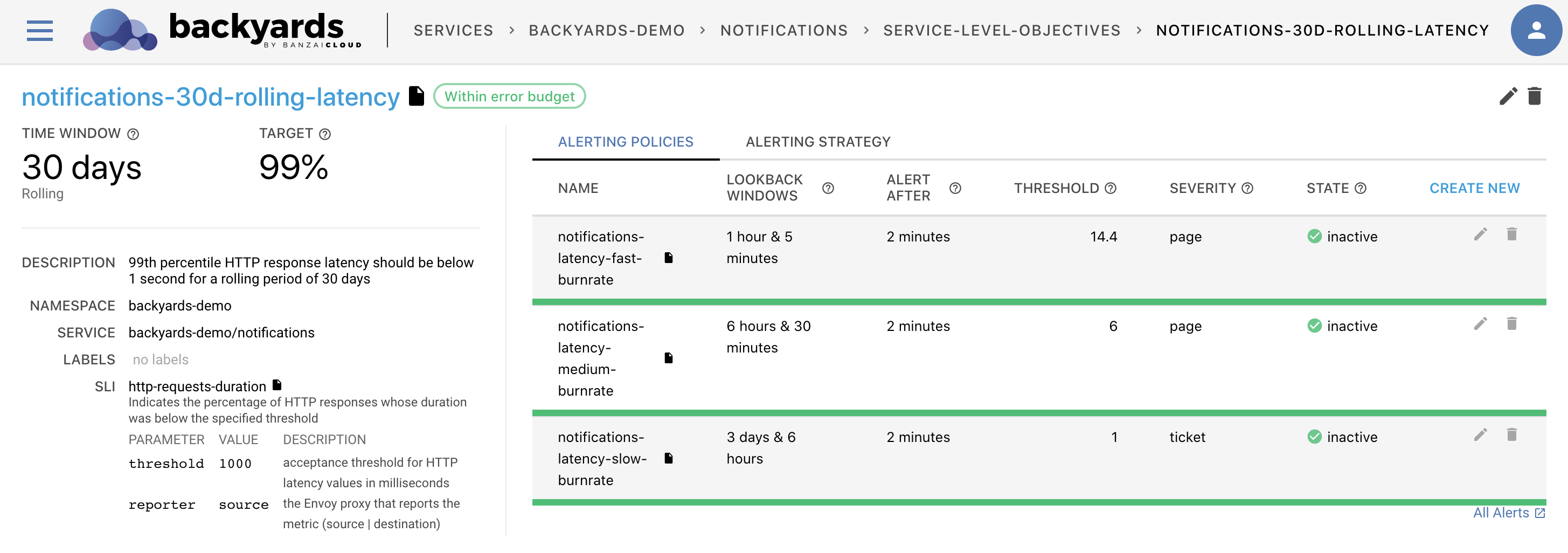

View circuit breaking configurations

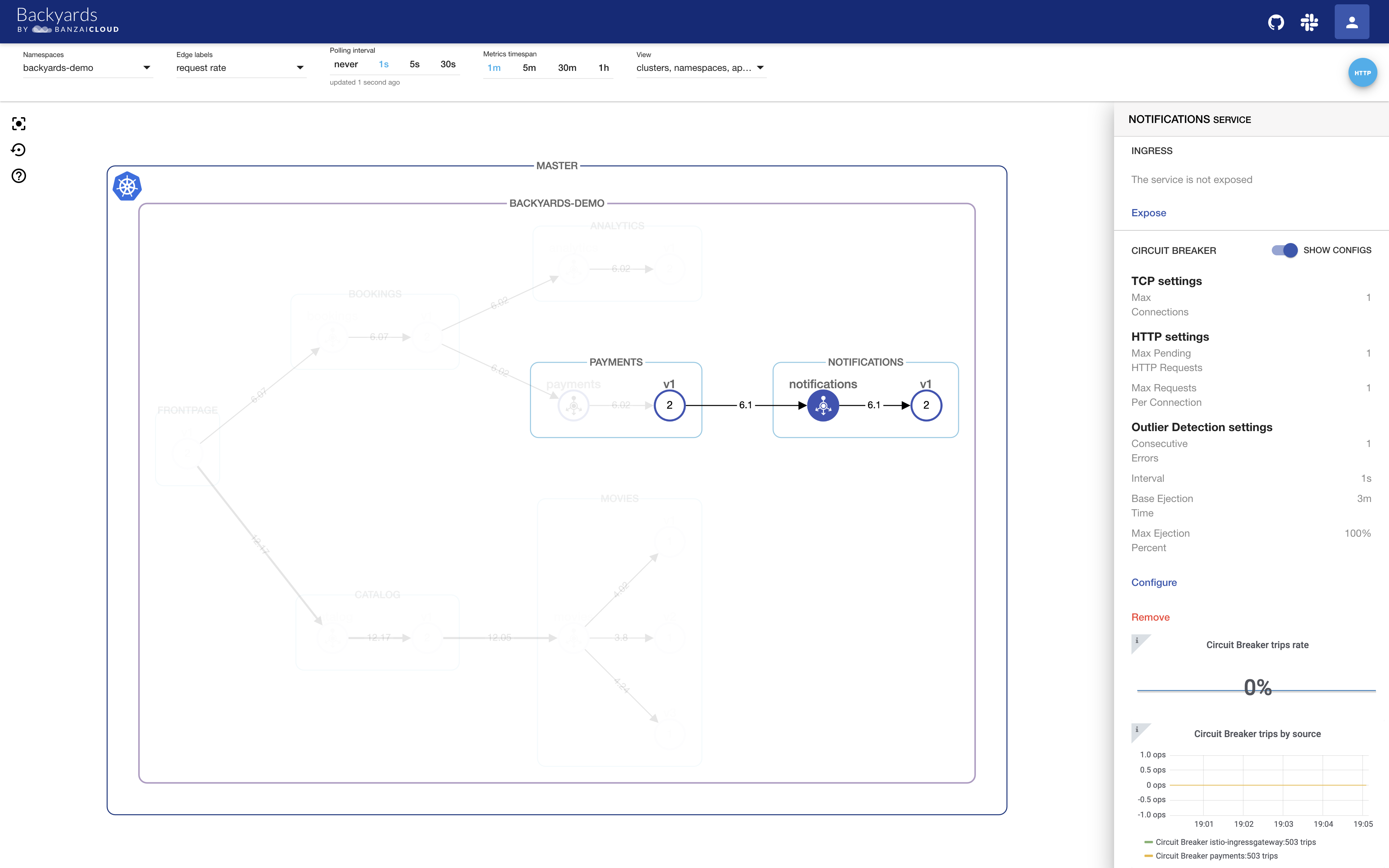

You don’t have to fetch the Destination Rule (e.g. with kubectl) to see the circuit breaker’s configurations; you can see them on the right side of the Backyards UI when you click on the notifications service icon and then toggle the SHOW CONFIGS slider.

Monitor circuit breaking

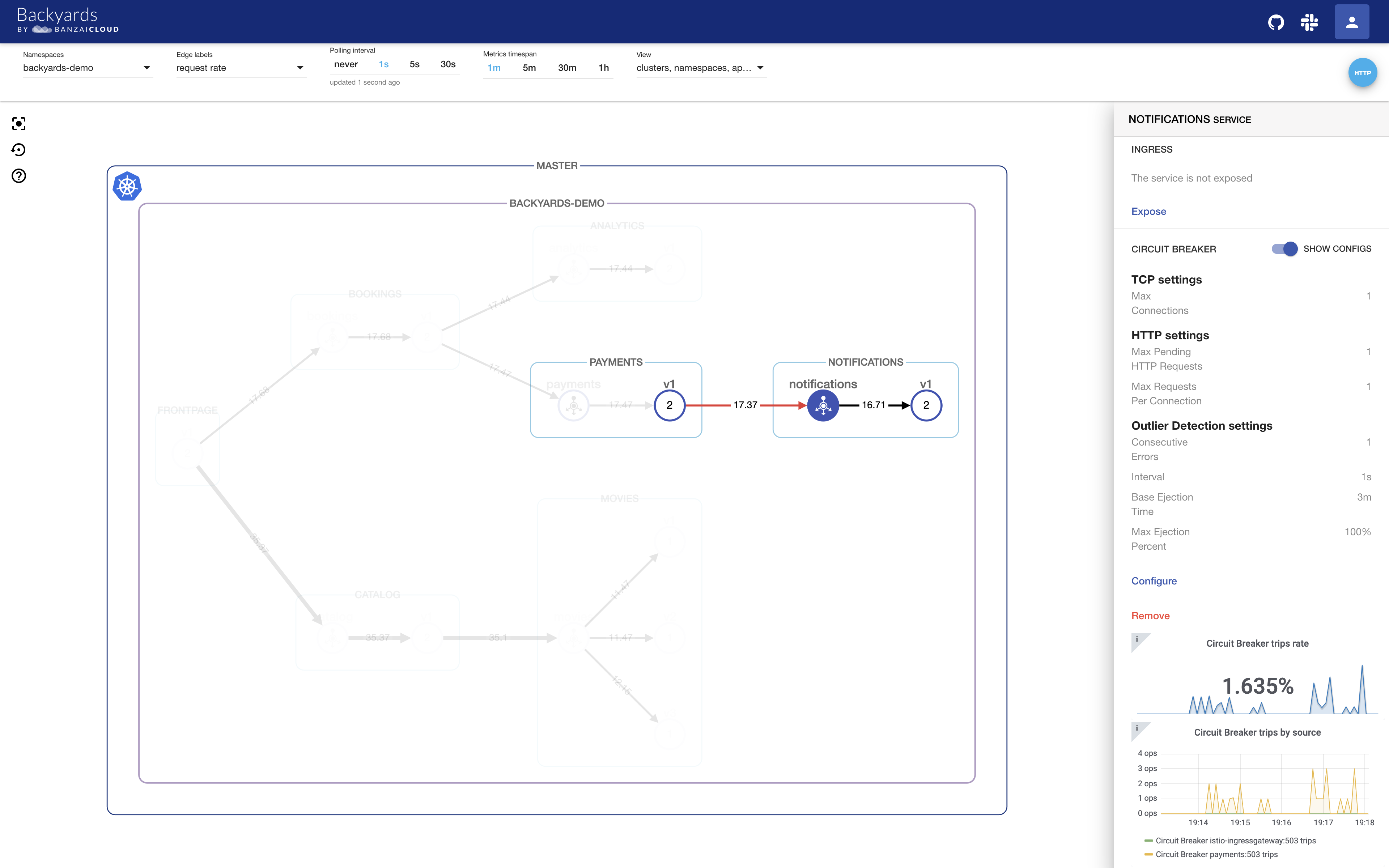

With the configuration I’ve just set, when traffic begins to flow from two connections simultaneously, the circuit breaker will start to trip requests. In the Backyards UI, you will see this visualized via the graph’s red edges. If you click on the service, you’ll learn more about the errors involved, and will see two live Grafana dashboards which specifically show the circuit breaker trips.

The first dashboard details the percentage of total requests that were tripped by the circuit breaker. When there are no circuit breaker errors, and your service works as expected, this graph will show 0%. Otherwise, you’ll be able to see what percentage of the requests were tripped by the circuit breaker right away.

The second dashboard provides a breakdown of the trips caused by the circuit breaker by source. If no circuit breaker trips occurred, there will be no spikes in this graph. Otherwise, you’ll see which service caused the circuit breaker to trip, when, and how many times. Malicious clients can be tracked by checking this graph.
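The two figures these dashboards show (the share of tripped requests and the trips per source) come down to a simple aggregation. The Python sketch below computes both from a list of request records; the record shape and the workload names are hypothetical and stand in for data that, in Backyards, comes from Prometheus.

```python
from collections import Counter


def trip_stats(requests):
    """Return (percent of requests tripped, trips per source workload).

    Each record is a (source_workload, response_code, response_flags) tuple.
    A trip is a 503 response carrying the UO response flag.
    """
    total = 0
    trips_by_source = Counter()
    for source, code, flags in requests:
        total += 1
        if code == 503 and "UO" in flags.split(","):
            trips_by_source[source] += 1
    tripped = sum(trips_by_source.values())
    percent = 100.0 * tripped / total if total else 0.0
    return percent, dict(trips_by_source)


if __name__ == "__main__":
    sample = [
        ("frontpage", 200, "-"),
        ("frontpage", 503, "UO"),
        ("payments", 503, "UO"),
        ("payments", 503, "UF"),  # a 503 that is not a circuit breaker trip
    ]
    print(trip_stats(sample))
```

Note that the last record is a 503 without the UO flag, so it counts toward the total but not toward the trips.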

These are live Grafana dashboards customized in order to display circuit breaker-related information. Grafana and Prometheus are installed with Backyards by default - and lots more dashboards exist to help you dig deep into your service’s metrics.

Remove circuit breaking configurations

You can easily remove circuit breaking configurations with the Remove button.

Circuit breaking on Backyards UI in action

To summarize all these UI actions let’s take a look at the following video:

Circuit breaking using the backyards-cli

As a rule of thumb, everything that can be done through the UI can also be done with the Backyards CLI tool.

Set circuit breaking configurations

Let’s put this to the test by creating the Circuit Breaker again, but this time through the CLI.

You can do this in interactive mode:

$ backyards r cb set backyards-demo/notifications
? Maximum number of HTTP1/TCP connections 1
? TCP connection timeout 3s
? Maximum number of pending HTTP requests 1
? Maximum number of requests 1024
? Maximum number of requests per connection 1
? Maximum number of retries 1024
? Number of errors before a host is ejected 1
? Time interval between ejection sweep analysis 1s
? Minimum ejection duration 3m
? Maximum ejection percentage 100
INFO[0043] circuit breaker rules successfully applied to 'backyards-demo/notifications'
Connections  Timeout  Pending Requests  Requests  RPC  Retries  Errors  Interval  Ejection time  percentage
1            3s       1                 1024      1    1024     1       1s        3m             100

Or, alternatively, in a non-interactive mode, by explicitly setting the values:

$ backyards r cb set backyards-demo/notifications --non-interactive --max-connections=1 --max-pending-requests=1 --max-requests-per-connection=1 --consecutiveErrors=1 --interval=1s --baseEjectionTime=3m --maxEjectionPercent=100
Connections  Timeout  Pending Requests  Requests  RPC  Retries  Errors  Interval  Ejection time  percentage
1            3s       1                 1024      1    1024     5       1s        3m             100

After the command is issued, the circuit breaking settings are fetched and displayed right away.

View circuit breaking configurations

You can list the circuit breaking configurations of a service in a given namespace with the following command:

$ backyards r cb get backyards-demo/notifications
Connections  Timeout  Pending Requests  Requests  RPC  Retries  Errors  Interval  Ejection time  percentage
1            3s       1                 1024      1    1024     5       1s        3m             100

By default, the results are displayed in a table view, but it’s also possible to list the configurations in JSON or YAML format:

$ backyards r cb get backyards-demo/notifications -o json
{
  "maxConnections": 1,
  "connectTimeout": "3s",
  "http1MaxPendingRequests": 1,
  "http2MaxRequests": 1024,
  "maxRequestsPerConnection": 1,
  "maxRetries": 1024,
  "consecutiveErrors": 5,
  "interval": "1s",
  "baseEjectionTime": "3m",
  "maxEjectionPercent": 100
}

$ backyards r cb get backyards-demo/notifications -o yaml
maxConnections: 1
connectTimeout: 3s
http1MaxPendingRequests: 1
http2MaxRequests: 1024
maxRequestsPerConnection: 1
maxRetries: 1024
consecutiveErrors: 5
interval: 1s
baseEjectionTime: 3m
maxEjectionPercent: 100
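For readers wondering how these flat settings relate to the Destination Rule shown earlier, the Python sketch below nests them under the corresponding Istio fields. It only illustrates the mapping and is not how Backyards generates the resource; the function name and the `mutual_tls` switch are assumptions, and the host is simply set to the service name.

```python
import json


def to_destination_rule(name, namespace, settings, mutual_tls=False):
    """Nest flat circuit breaker settings into a DestinationRule structure."""
    traffic_policy = {
        "connectionPool": {
            "tcp": {
                "maxConnections": settings["maxConnections"],
                "connectTimeout": settings["connectTimeout"],
            },
            "http": {
                "http1MaxPendingRequests": settings["http1MaxPendingRequests"],
                "http2MaxRequests": settings["http2MaxRequests"],
                "maxRequestsPerConnection": settings["maxRequestsPerConnection"],
                "maxRetries": settings["maxRetries"],
            },
        },
        "outlierDetection": {
            "consecutiveErrors": settings["consecutiveErrors"],
            "interval": settings["interval"],
            "baseEjectionTime": settings["baseEjectionTime"],
            "maxEjectionPercent": settings["maxEjectionPercent"],
        },
    }
    if mutual_tls:
        # Needed when mutual TLS is enabled for the service (see above).
        traffic_policy["tls"] = {"mode": "ISTIO_MUTUAL"}
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "DestinationRule",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"host": name, "trafficPolicy": traffic_policy},
    }


if __name__ == "__main__":
    settings = {
        "maxConnections": 1, "connectTimeout": "3s",
        "http1MaxPendingRequests": 1, "http2MaxRequests": 1024,
        "maxRequestsPerConnection": 1, "maxRetries": 1024,
        "consecutiveErrors": 5, "interval": "1s",
        "baseEjectionTime": "3m", "maxEjectionPercent": 100,
    }
    rule = to_destination_rule("notifications", "backyards-demo", settings)
    print(json.dumps(rule, indent=2))
```

The printed JSON can be compared field by field with the YAML Destination Rule from the beginning of the post.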

Monitor circuit breaking

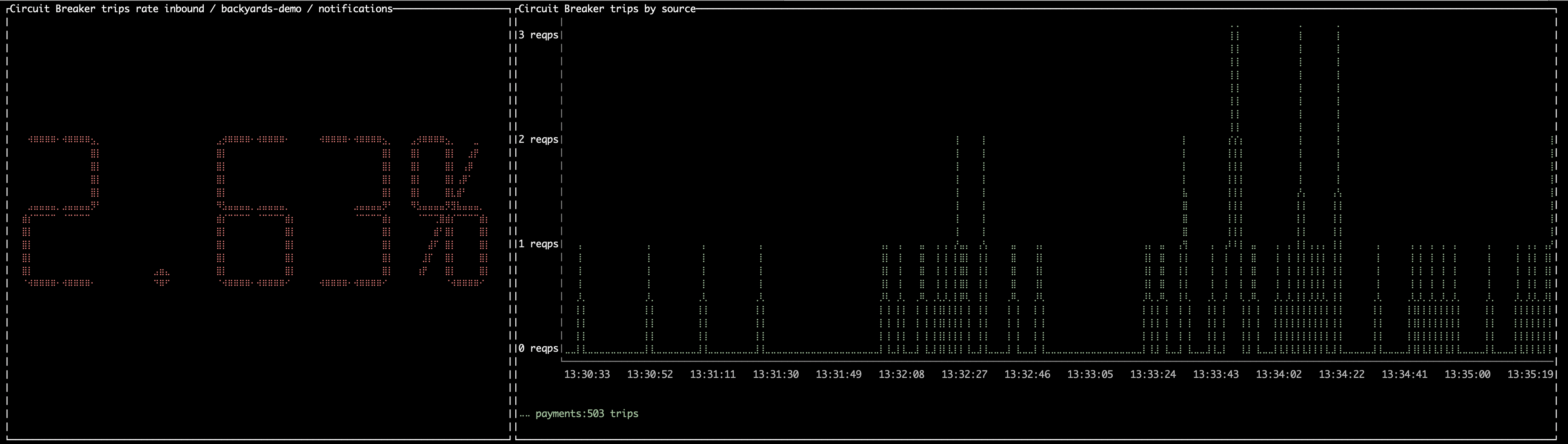

To see dashboards in the CLI similar to the Grafana dashboards you saw earlier in the UI, trigger circuit breaker trips by calling the service from multiple connections, then issue the following command:

$ backyards r cb graph backyards-demo/notifications

You should see something like this:

Remove circuit breaking configurations

To remove circuit breaking configurations:

$ backyards r cb delete backyards-demo/notifications
INFO[0000] current settings
Connections  Timeout  Pending Requests  Requests  RPC  Retries  Errors  Interval  Ejection time  percentage
1            3s       1                 1024      1    1024     5       1s        3m             100
? Do you want to DELETE the circuit breaker rules? Yes
INFO[0008] circuit breaker rules set to backyards-demo/notifications successfully deleted

To verify that the command was successful:

$ backyards r cb get backyards-demo/notifications
INFO[0001] no circuit breaker rules set for backyards-demo/notifications

Circuit breaking using the Backyards GraphQL API

Backyards is composed of several components, like Istio, Banzai Cloud’s Istio operator, our multi-cluster Canary release operator, as well as several backends. However, all of these are behind Backyards’ GraphQL API.

The Backyards UI and CLI both use Backyards’ GraphQL API, which will be released with the GA version at the end of September! Users will soon be able to use our tools to manage Istio and build their own clients!

Cleanup

To remove the demo application, Backyards, and Istio from your cluster, you need only to apply one command, which takes care of removing these components in the correct order:

$ backyards uninstall -a

Takeaway

With Backyards, you can easily configure circuit breaker settings from a convenient UI or with the Backyards CLI tool. Then you can monitor the circuit breaker from the Backyards UI with live embedded Grafana dashboards customized to show circuit breaker trip rates and the number of trips by source.

Next up, we’ll be covering fault injection, so stay tuned!

About Backyards

Banzai Cloud’s Backyards (now Cisco Service Mesh Manager) is a multi and hybrid-cloud enabled service mesh platform for constructing modern applications. Built on Kubernetes, our Istio operator and the Banzai Cloud Pipeline platform, it gives you flexibility, portability, and consistency across on-premise datacenters and five cloud environments. Use our simple, yet extremely powerful UI and CLI, and experience automated canary releases, traffic shifting, routing, secure service communication, in-depth observability and more, for yourself.

About Banzai Cloud

Banzai Cloud is changing how private clouds are built: simplifying the development, deployment, and scaling of complex applications, and putting the power of Kubernetes and Cloud Native technologies in the hands of developers and enterprises, everywhere.
