Over the last few months we have written a number of blog posts about the Istio service mesh. We started with a simple Istio operator, then moved on to different multi-cluster service mesh topologies, Istio CNI and a telemetry deep dive.

The posts were built around our open source Istio operator, which helps with installing and managing an Istio service mesh in single-cluster, multi-cluster and hybrid-cluster setups. But one thing was missing from these posts: how all of this connects to Banzai Cloud’s container management platform, Pipeline, and how it can help you on your service mesh journey. Today, that wait is over as we officially announce Backyards, our product built around the Istio service mesh.

If you’d like to read more about the multi-cluster features, see this post: Backyards - automated service mesh for multi and hybrid cloud deployments

Motivation for Backyards

The inclusion of Istio in the Pipeline platform has been one of our most frequently requested features over the last few months, so, whether we would put in the effort to enable it was a bit of a foregone conclusion. As a first step, we’ve created an open source operator that encapsulates the management of various components of the Istio service mesh, and added a feature in Pipeline to initialize a mesh when starting a cluster.

But it wasn’t enough, because we realized that working with Istio is hard. We talked with a lot of companies, and the vast majority told us that they wanted the benefits a service mesh can offer, but were stuck in the evaluation phase because of the complexity of Istio. So we started to focus on a complete solution that not only helps with installing the mesh, but also provides a rich feature set for monitoring and configuration during the operation phase. The following sections detail the features and walk through the setup, configuration and monitoring of a mesh.

Creating a service mesh

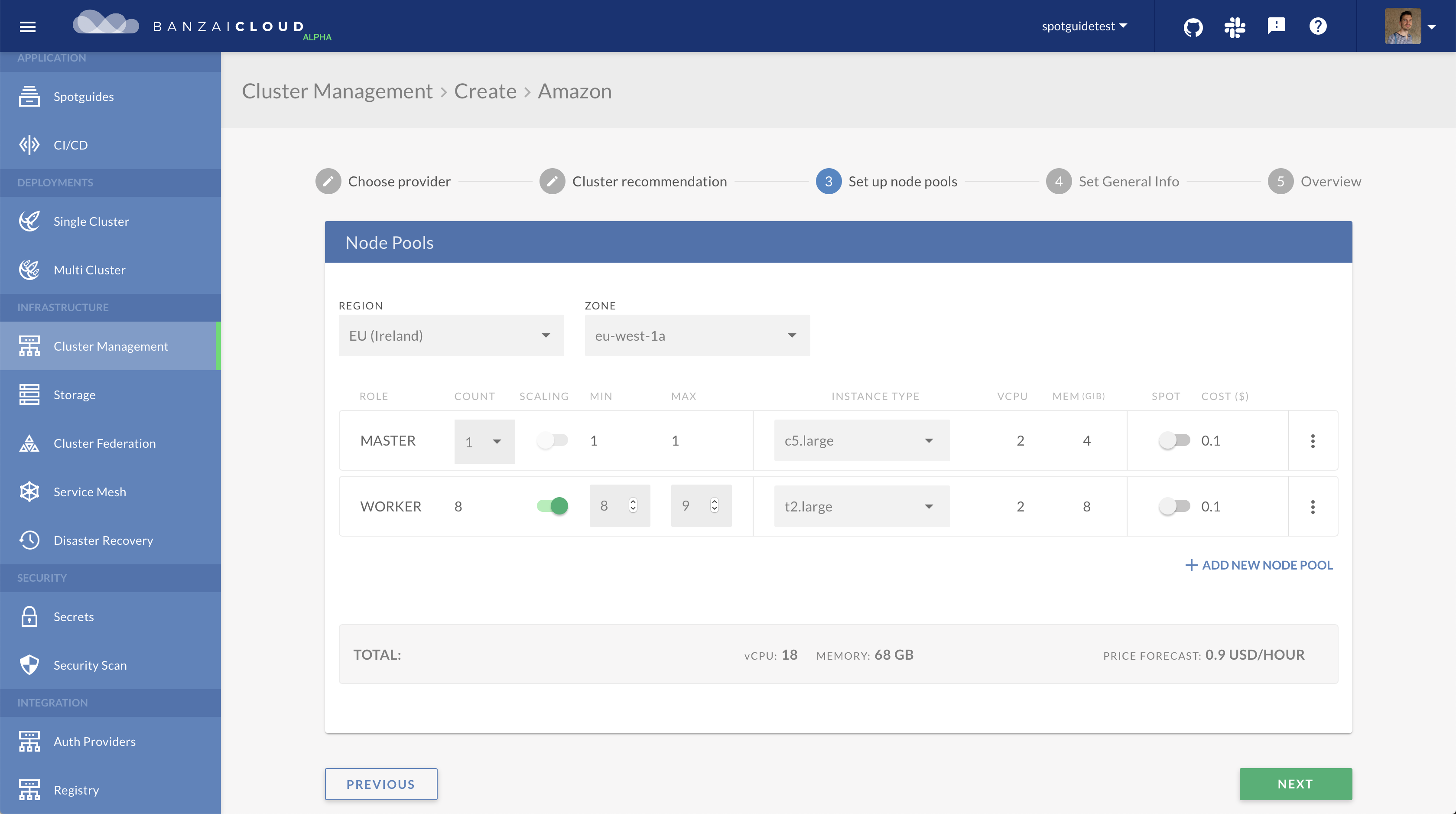

First of all, you’ll need an Istio service mesh. This can easily be done in Pipeline with the help of the Istio operator. Pipeline is a complete Kubernetes platform that can be used to provision and manage Kubernetes clusters on five different cloud providers, or on-prem. Let’s start by creating a cluster on AWS, using Banzai Cloud’s lightweight and CNCF certified Kubernetes distribution, PKE:

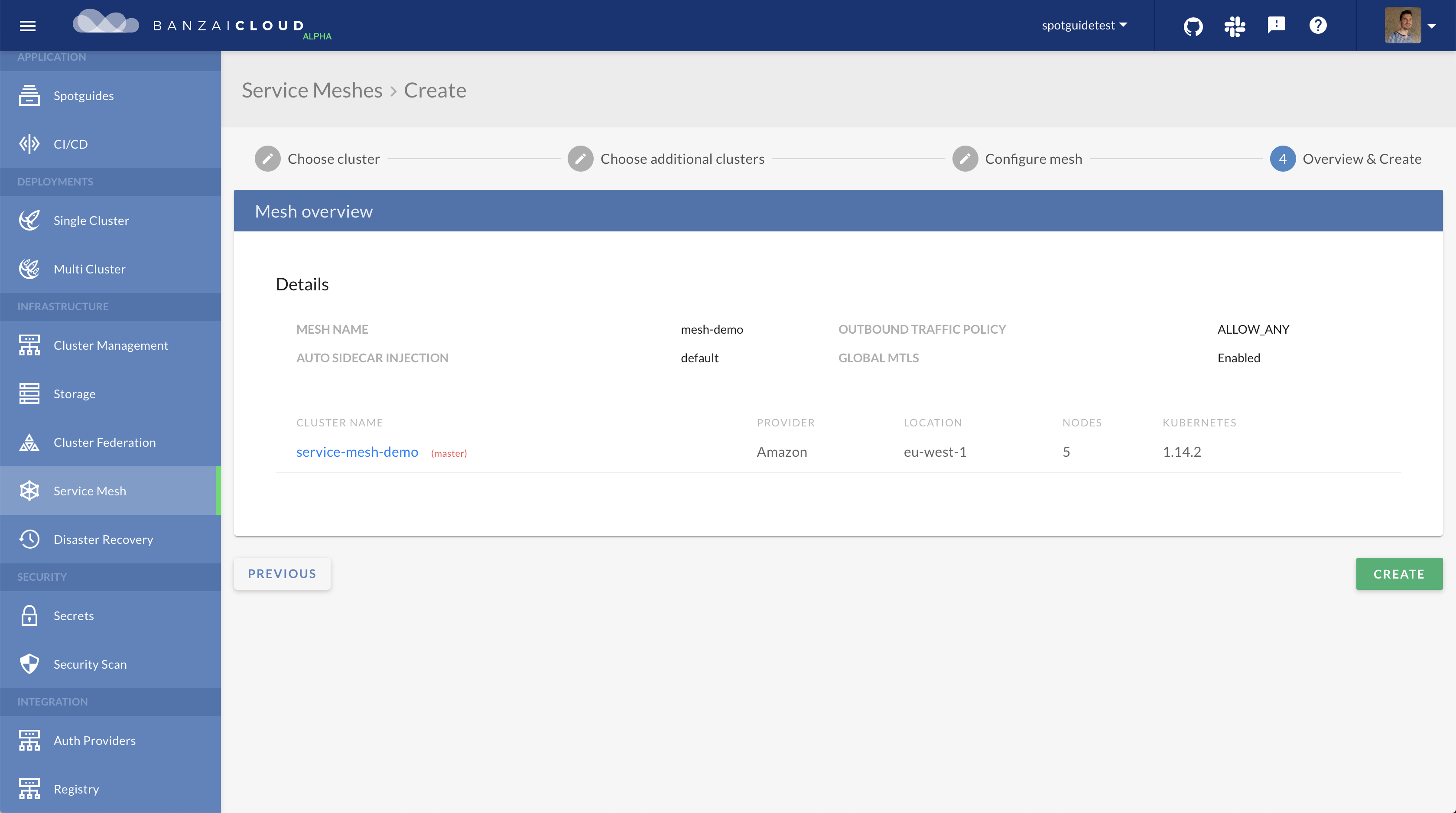

When the cluster is up and running, we can add it to a new mesh. Behind the scenes this means installing the Istio operator on the cluster and creating a custom resource with the proper configuration. To learn more about how the operator works, read this blog post. The operator then installs the Istio components in the cluster and enables automatic sidecar injection in the selected namespaces. Prometheus is also deployed (if it is not there yet) and configured to scrape the Envoy and Mixer metrics. Earlier, this step was part of the create cluster flow, but we’ve decided that it’s better to separate the concerns. This way the Pipeline components are more loosely coupled, you can use the service mesh feature with clusters that were imported to the platform, and it is easier to set up a mesh that spans multiple clusters.
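To make the sidecar injection step a little more concrete: in stock Istio, automatic injection is switched on per namespace with the `istio-injection: enabled` label. The sketch below only builds the Namespace manifests that carry that label. It is an illustration, not the operator's actual code, and the function name `injection_label_manifests` and the namespace names are hypothetical.

```python
import json


def injection_label_manifests(namespaces):
    """Build one Namespace manifest per name, labelled for sidecar injection.

    The label key/value is Istio's standard opt-in for automatic injection;
    everything else here (function name, namespace names) is illustrative.
    """
    manifests = []
    for name in namespaces:
        manifests.append({
            "apiVersion": "v1",
            "kind": "Namespace",
            "metadata": {
                "name": name,
                "labels": {"istio-injection": "enabled"},
            },
        })
    return manifests


if __name__ == "__main__":
    # Print the manifests as JSON, which kubectl also accepts.
    print(json.dumps(injection_label_manifests(["default", "demo"]), indent=2))
```

Pods created in a labelled namespace afterwards get the Envoy sidecar added at admission time; pods that were already running have to be restarted to pick it up.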

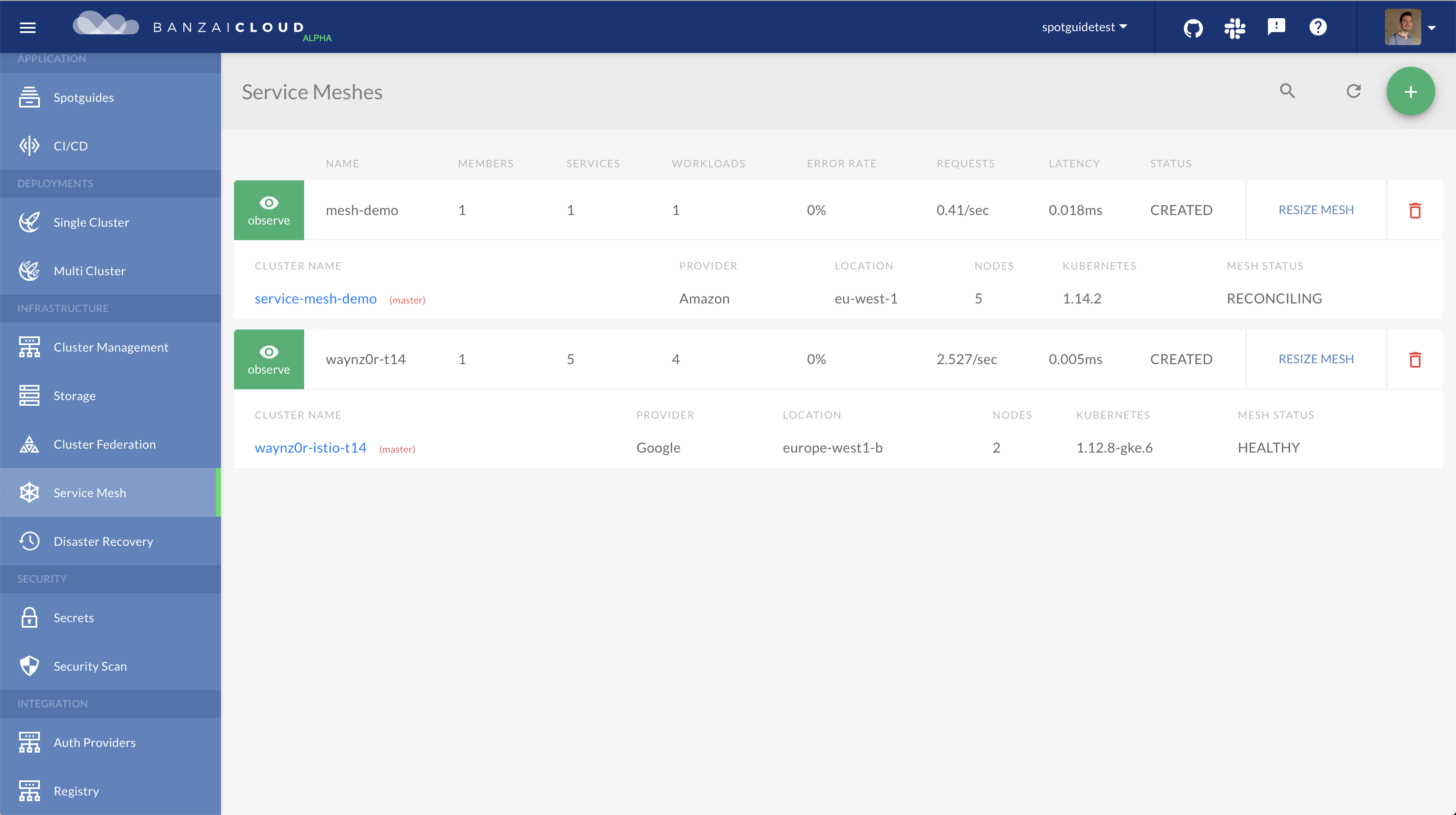

After a few seconds, the service mesh overview page appears and you’ll immediately see some aggregated metrics, like the number of services in the mesh, or the global average latency.

Deploying a test app through Pipeline

Now that we have a mesh, we’ll need a test microservice application to showcase the product’s capabilities.

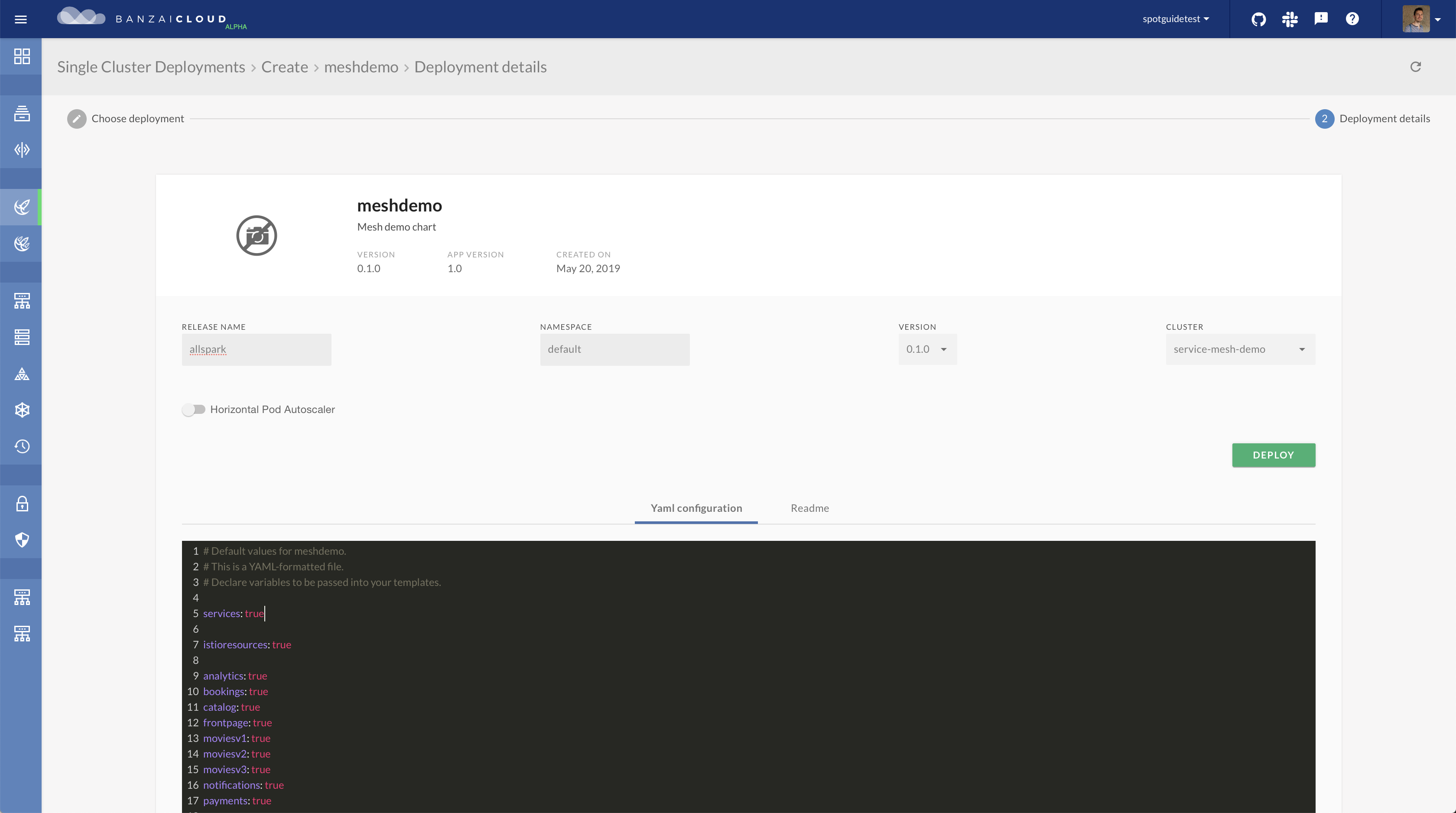

Again, we’ll use the Pipeline platform to deploy something on the cluster.

While it’s only a very simple Helm install that we could easily have done with Helm’s CLI, it’s a good way to showcase Pipeline’s deployment feature, which can be used in a very similar fashion in a multi-cluster environment as well.

We’ve created a very simple project that can be used to simulate a microservice application.

This project is called AllSpark and we’ll use this as a demo application.

We’ve built a Helm chart that contains a pre-configured AllSpark deployment that simulates a cinema booking system.

So let’s add the https://charts.banzaicloud.io/gh/spotguidetest repo to Pipeline, select the meshdemo chart and run the install (make sure to set values to true to install all components):

Service mesh overview

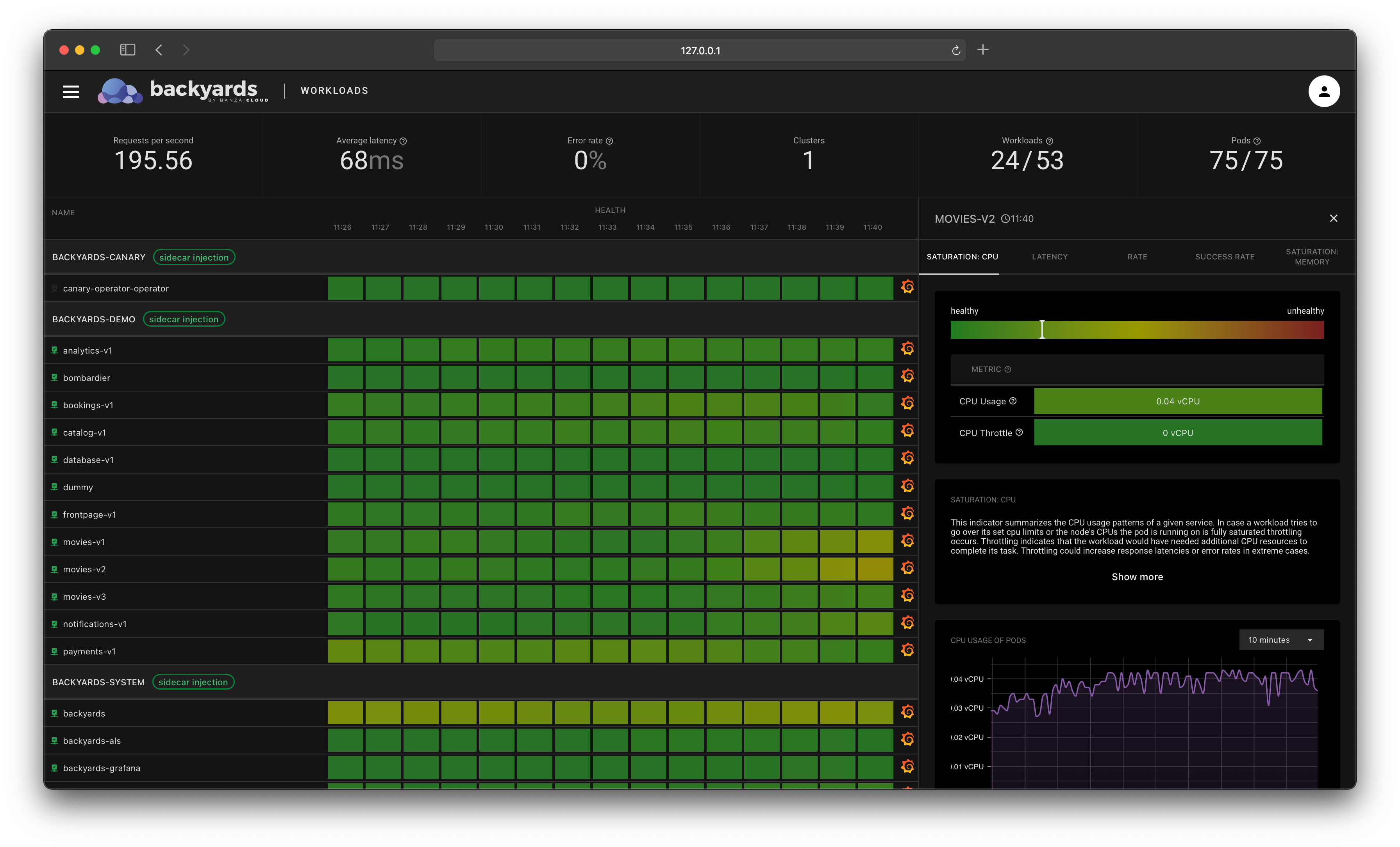

Until this point, we’ve provisioned a cluster using Banzai Cloud’s lightweight distribution, added it to a new Istio service mesh and deployed a test application on top - all with a few clicks. But the most interesting part is still ahead: visualizing and controlling the mesh.

If you go back to the service mesh list and click the observe button, you’ll be presented with the service mesh control panel.

Because our test application hasn’t received any traffic yet, there are no metrics in the system, so you won’t see any visualization yet.

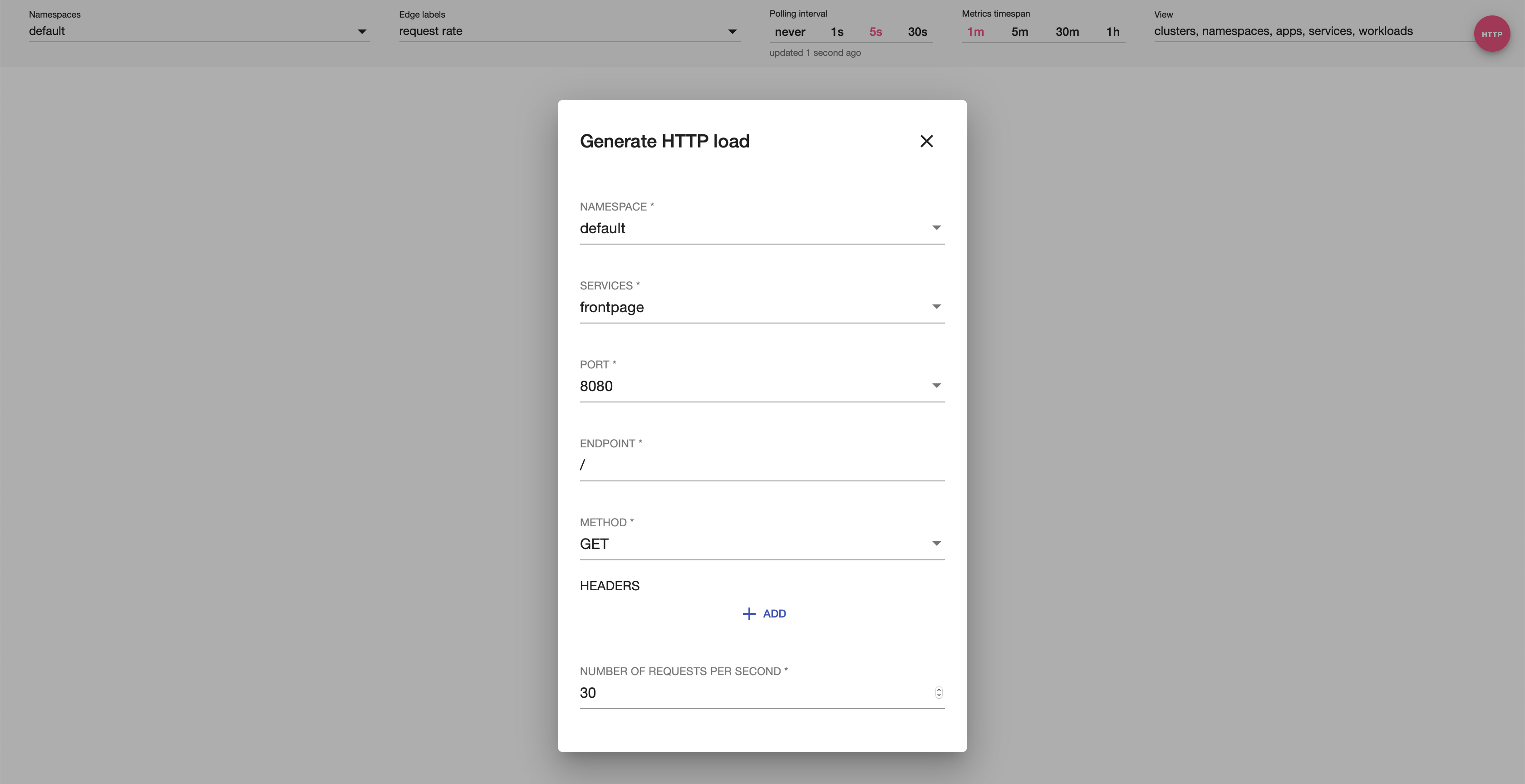

The UI has a test flight feature that sends some traffic to a selected endpoint. If you click the HTTP button in the top right corner of the screen, you’ll be able to fill out a form with the endpoint details and send some test traffic to your services.

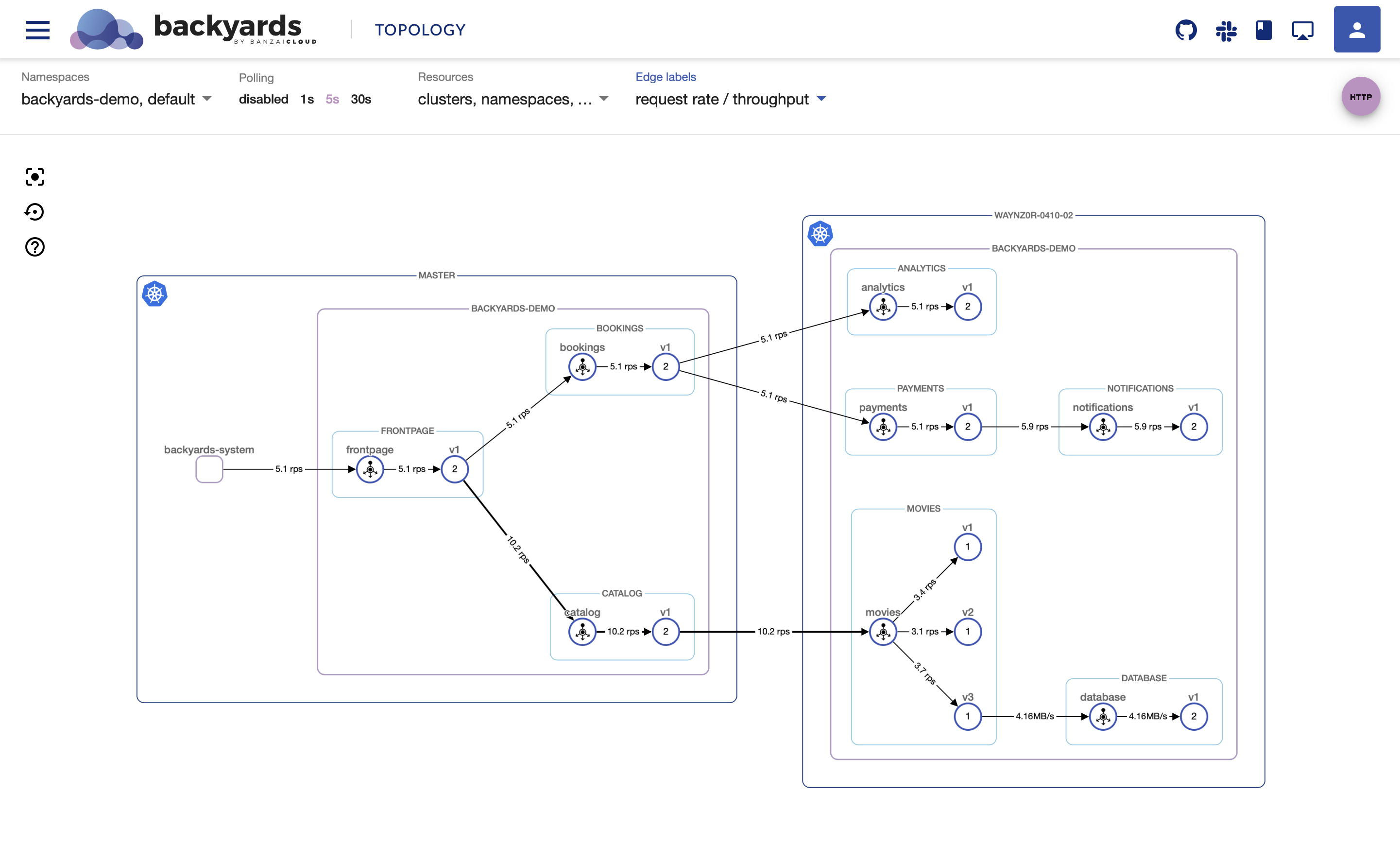

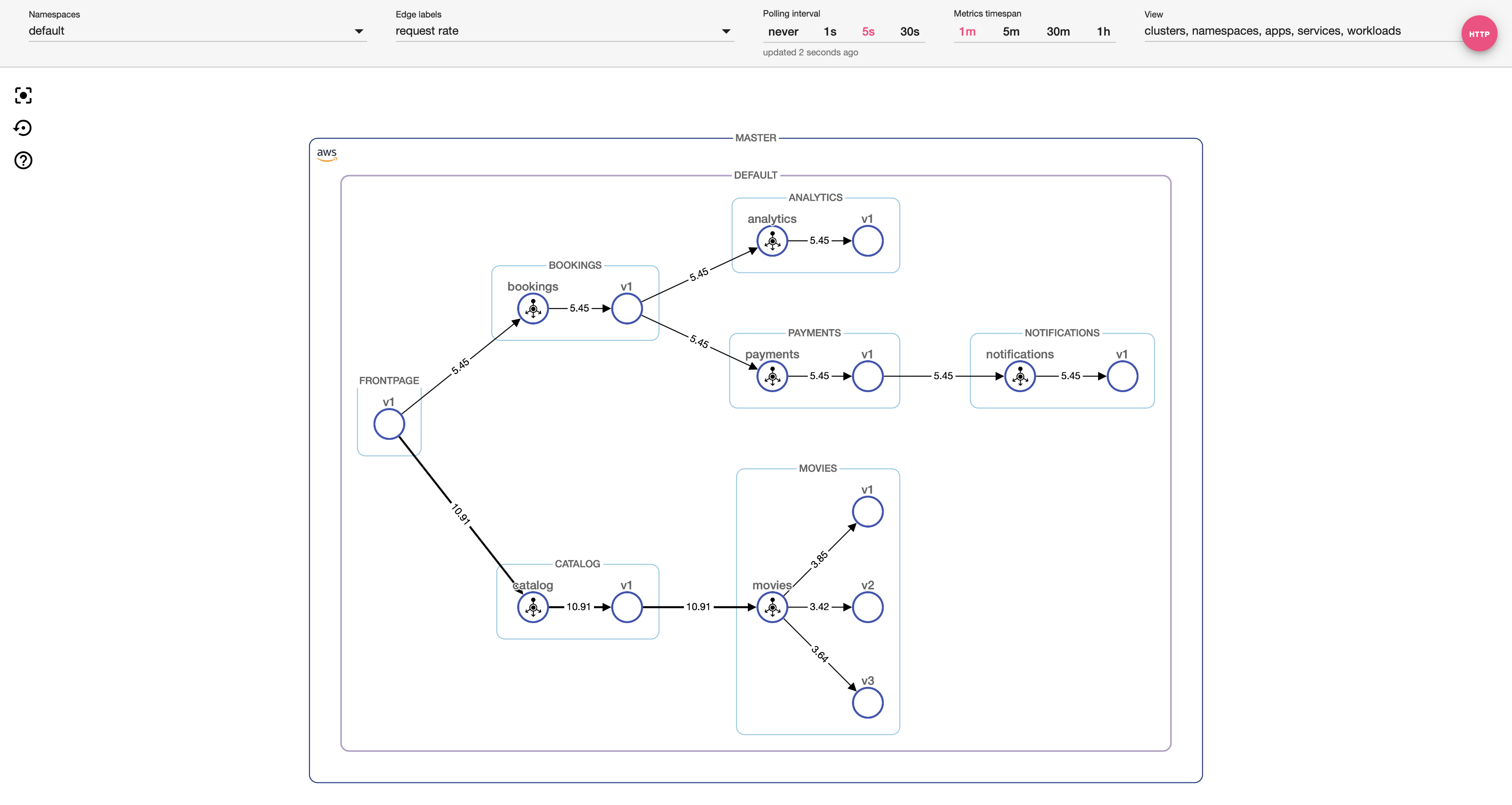

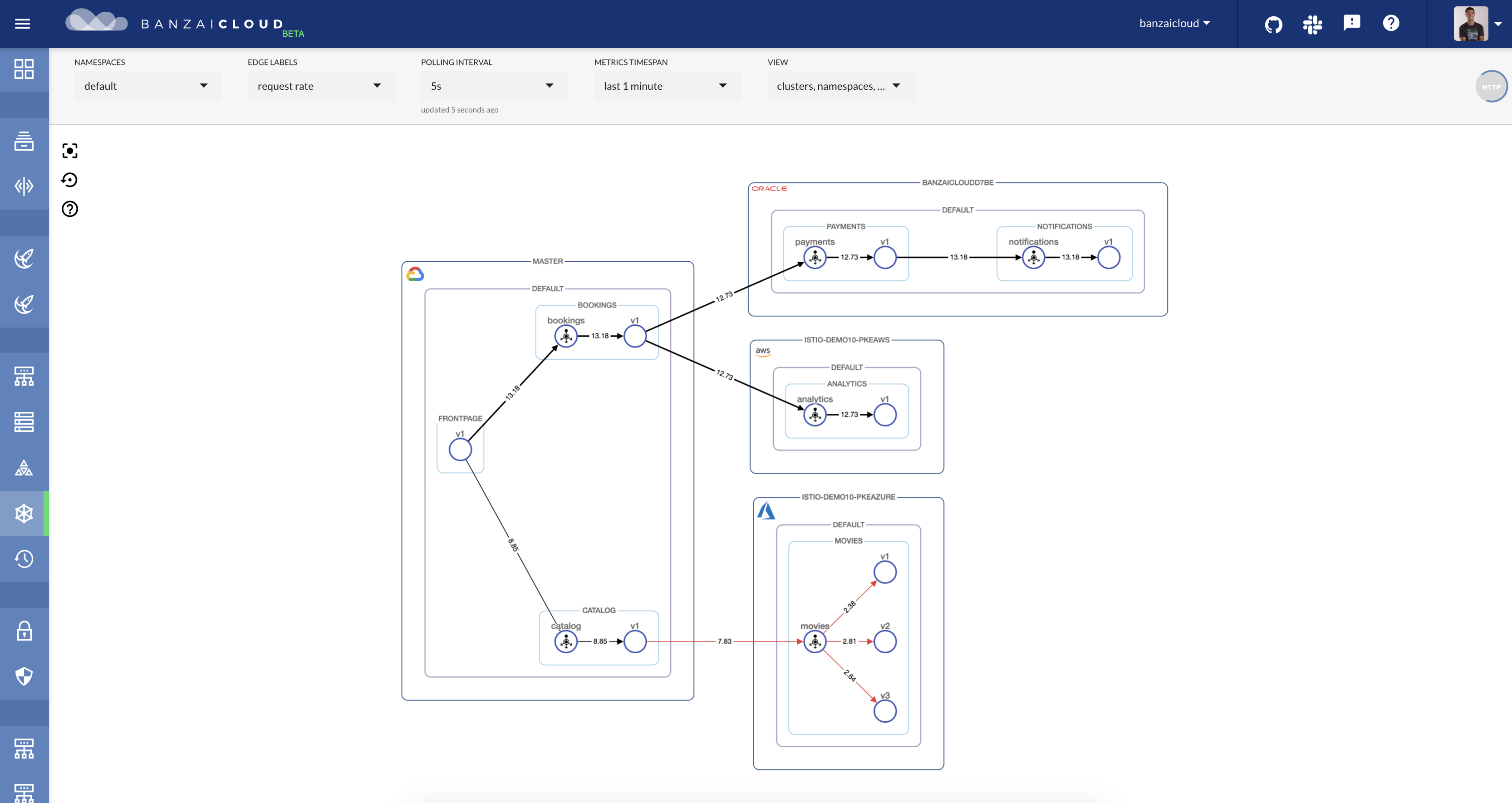

In a few seconds a graph of your services will appear. The nodes in the graph are services or workloads, while the arrows represent network connections between different services. The graph is based on Istio metrics coming from Prometheus.
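As a rough idea of how such a graph can be derived from metrics, the sketch below aggregates request-rate samples into edges between workloads. It assumes the samples come from a query over Istio's standard `istio_requests_total` counter, grouped by the `source_workload`, `destination_workload` and `response_code` labels. The query string, the `build_edges` helper and the workload names are illustrative assumptions, not the product's implementation.

```python
from collections import defaultdict

# Illustrative PromQL; the metric and label names are Istio's standard ones,
# the exact query used by the UI is not known here.
EDGE_QUERY = (
    "sum(rate(istio_requests_total[1m])) "
    "by (source_workload, destination_workload, response_code)"
)


def build_edges(samples):
    """Aggregate (labels, rate) samples into graph edges.

    Returns {(source, destination): {"rps": total_rate, "errors": 5xx_rate}}.
    """
    edges = defaultdict(lambda: {"rps": 0.0, "errors": 0.0})
    for labels, rate in samples:
        key = (labels["source_workload"], labels["destination_workload"])
        edges[key]["rps"] += rate
        # Count only server-side failures (5xx) as errors on the edge.
        if labels.get("response_code", "").startswith("5"):
            edges[key]["errors"] += rate
    return dict(edges)


if __name__ == "__main__":
    # Hypothetical samples, shaped like the result of EDGE_QUERY.
    demo = [
        ({"source_workload": "frontend", "destination_workload": "bookings",
          "response_code": "200"}, 9.0),
        ({"source_workload": "frontend", "destination_workload": "bookings",
          "response_code": "500"}, 1.0),
    ]
    for (src, dst), stats in build_edges(demo).items():
        print(f"{src} -> {dst}: {stats}")
```

Each key becomes an arrow in the graph, and the per-edge error rate is what lets a UI colour a failing connection differently from a healthy one.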

While this is a good representation of the layout of a microservice architecture, it doesn’t mean too much in itself. Instead the UI serves three purposes:

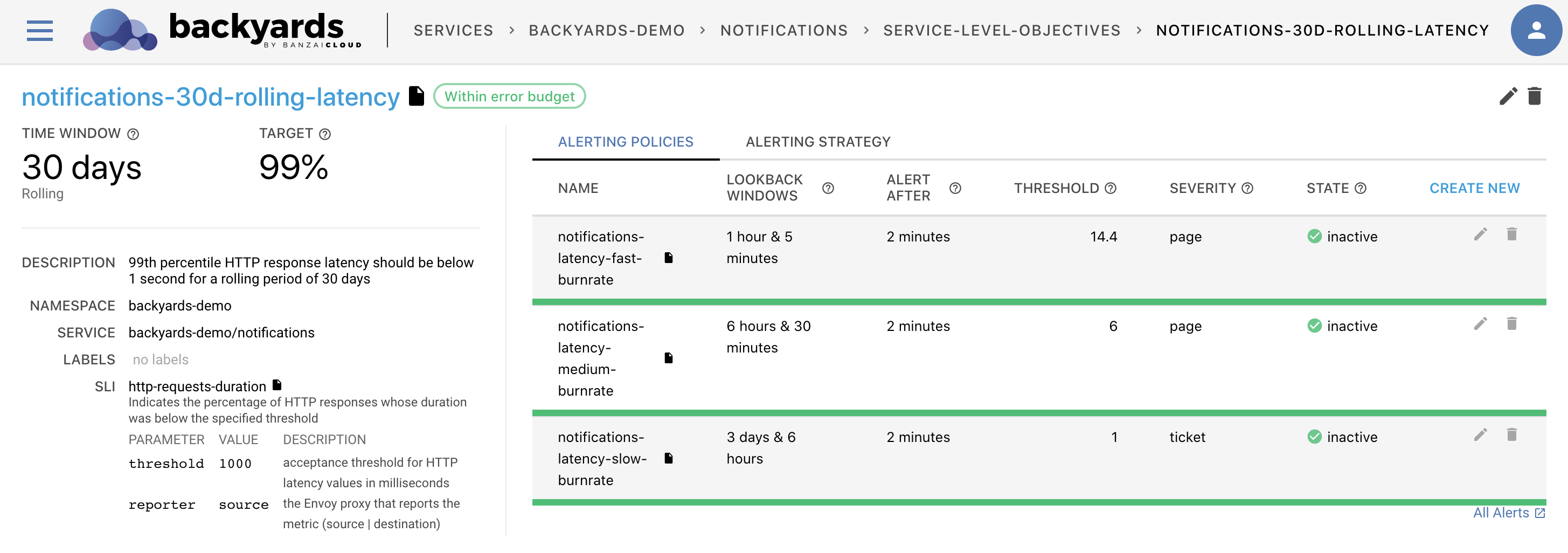

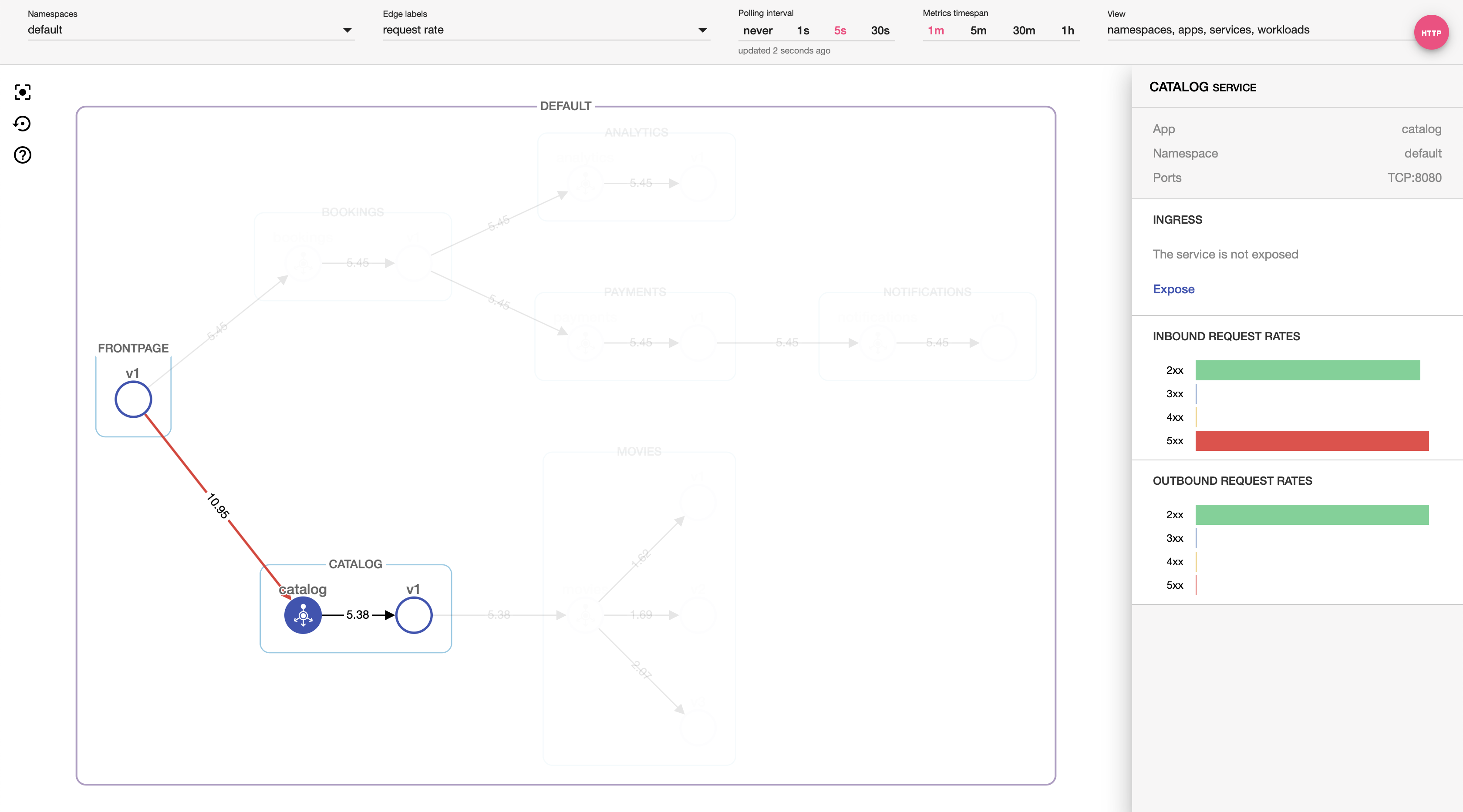

- It serves as a visual monitoring tool, as it displays various errors and metrics in the system.

- It acts as a control panel for Istio and makes it easy to control parts of the mesh that would otherwise be configurable through extensive custom resource machinery only.

- It makes these tasks seamless in a multi or hybrid-cluster environment as well.

Monitoring the mesh

When communication fails between some services in your cluster, and monitoring and alerting are properly configured, you’ll be notified of the error immediately. But a visual representation can come in handy as the second line of investigation. Take the following scenario, for example.

If you have a lot of inter-service communication in your cluster - and you probably will if you’re using a service mesh - and something fails, it usually means that other parts of your application networking can also fail or change. Because of improper handling of 5xx errors, or increasing timeouts, some other services may also start misbehaving and report errors. This means that you’ll receive alerts from a bunch of different services at once, and you’ll have a hard time figuring out what caused the issue in the first place. When you look at the graph, the source of the issue is instantly clear, and it is also really easy to identify second-order problems.

It can also be informative to see an overview of the services - even if the original error didn’t cause any problems between other services - and easily check how request rate dropped, latency increased, or where traffic was shifted in the cluster.

Control features

Our main goal with Pipeline’s service mesh extension is to ease the pain of managing Istio.

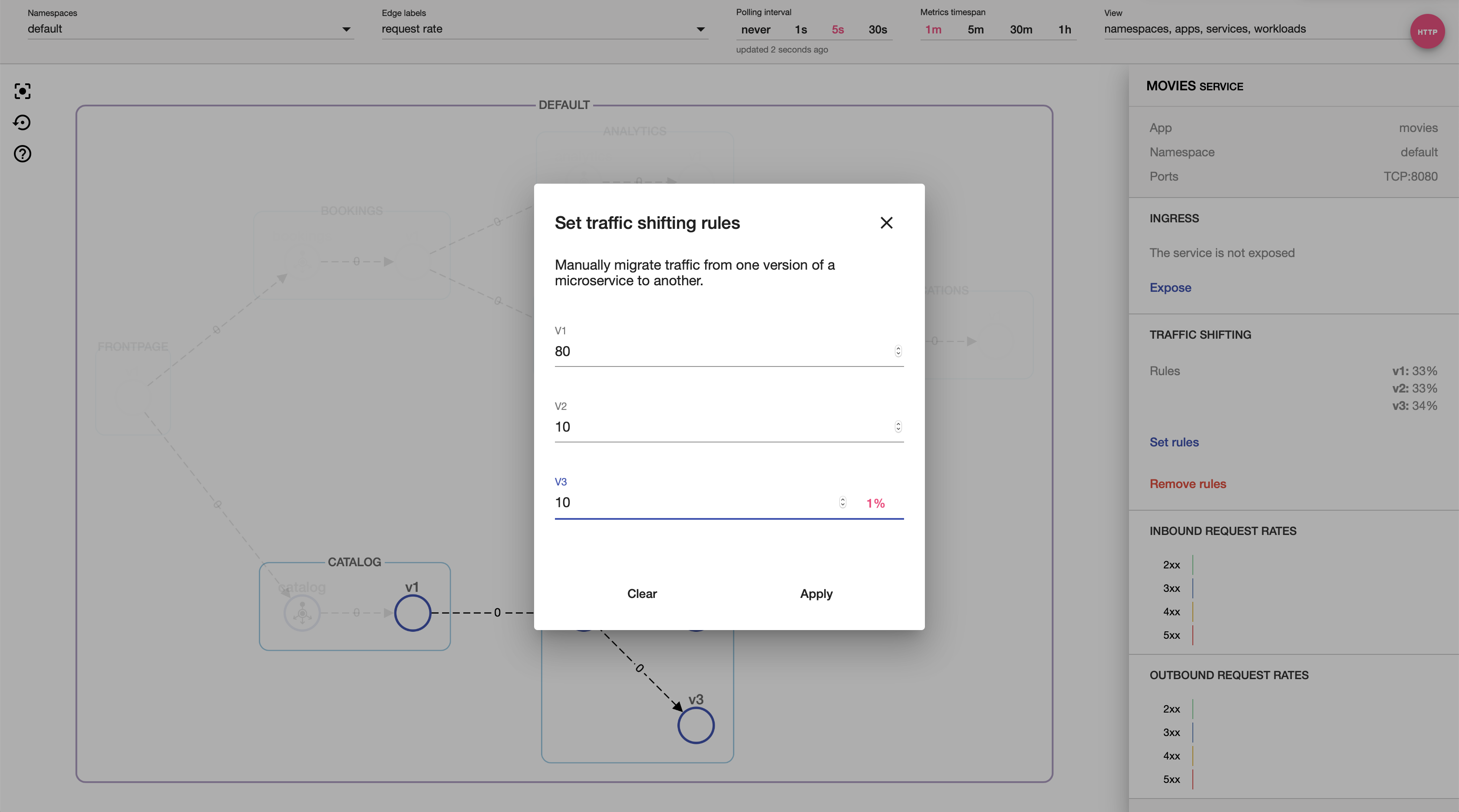

While the benefits offered by Istio are hard to overlook, the main criticism of it stems from its complexity. Even if you’re familiar with the networking concepts, you’ll need deep Istio domain knowledge to be productive.

Even to do simple things, like shifting traffic from one service to another, you’ll need to understand what a VirtualService and a DestinationRule are, and how to properly configure them through Istio custom resources.
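For a sense of what that hand-written configuration involves, here is a minimal sketch that builds the two resources for a weighted split between two versions of one service. The resource kinds and field names follow Istio's `networking.istio.io/v1alpha3` API. The helper functions, the `bookings` host and the `v1`/`v2` version labels are hypothetical, chosen only for illustration.

```python
import json


def destination_rule(name, host, subsets):
    """Build a DestinationRule; `subsets` maps subset name -> version label."""
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "DestinationRule",
        "metadata": {"name": name},
        "spec": {
            "host": host,
            "subsets": [
                {"name": subset, "labels": {"version": version}}
                for subset, version in subsets.items()
            ],
        },
    }


def weighted_virtual_service(name, host, weights):
    """Build a VirtualService; `weights` maps subset name -> percentage."""
    total = sum(weights.values())
    if total != 100:
        # Route weights within one HTTP route are expected to add up to 100.
        raise ValueError(f"weights must sum to 100, got {total}")
    routes = [
        {"destination": {"host": host, "subset": subset}, "weight": weight}
        for subset, weight in weights.items()
    ]
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "VirtualService",
        "metadata": {"name": name},
        "spec": {"hosts": [host], "http": [{"route": routes}]},
    }


if __name__ == "__main__":
    # Send 90% of traffic to v1 and 10% to v2 of a hypothetical service.
    rule = destination_rule("bookings", "bookings", {"v1": "v1", "v2": "v2"})
    service = weighted_virtual_service("bookings", "bookings", {"v1": 90, "v2": 10})
    print(json.dumps([rule, service], indent=2))
```

Changing the split means editing the weights and re-applying the VirtualService, and the subsets it references have to exist in the DestinationRule first.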

By using our product, all you’ll need to focus on is how your services should communicate, and we’ll do the heavy lifting with Istio. Take for example traffic shifting - you can drive traffic from one service to another with a few clicks:

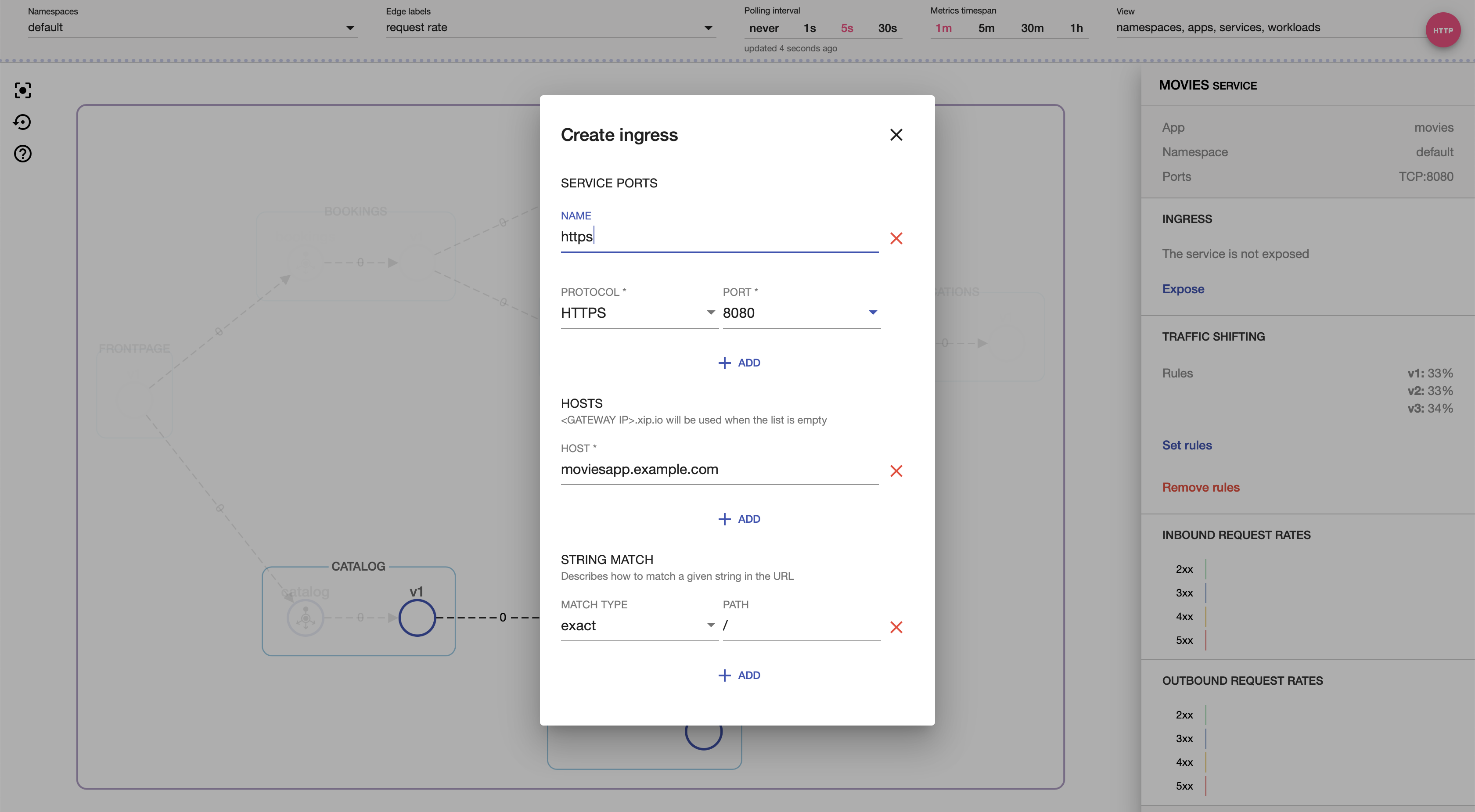

Or exposing a service through the Istio ingressgateway:
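Done by hand, exposing a service usually takes a Gateway bound to the ingress gateway pods plus a VirtualService attached to that Gateway. The sketch below builds both resources using Istio's `networking.istio.io/v1alpha3` field names. The helpers, the `demo.example.com` hostname and the `frontend` service on port 8080 are hypothetical, and the `istio: ingressgateway` selector assumes the default ingress gateway labels.

```python
import json


def ingress_gateway(name, hosts, port=80):
    """Build a Gateway that accepts plain HTTP for `hosts` on `port`."""
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "Gateway",
        "metadata": {"name": name},
        "spec": {
            # Assumes the default label on the Istio ingress gateway pods.
            "selector": {"istio": "ingressgateway"},
            "servers": [{
                "port": {"number": port, "name": "http", "protocol": "HTTP"},
                "hosts": list(hosts),
            }],
        },
    }


def exposed_route(name, gateway, hosts, service_host, service_port):
    """Build a VirtualService that sends gateway traffic to one service."""
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "VirtualService",
        "metadata": {"name": name},
        "spec": {
            "hosts": list(hosts),
            "gateways": [gateway],
            "http": [{
                "route": [{
                    "destination": {
                        "host": service_host,
                        "port": {"number": service_port},
                    },
                }],
            }],
        },
    }


if __name__ == "__main__":
    hosts = ["demo.example.com"]  # hypothetical external hostname
    gateway = ingress_gateway("demo-gateway", hosts)
    route = exposed_route("demo-route", "demo-gateway", hosts, "frontend", 8080)
    print(json.dumps([gateway, route], indent=2))
```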

Multi cluster

When planning our service mesh solution, one of the most important goals was to eliminate the barriers to multi and hybrid-cloud deployments. Almost all of the companies we had discussions with have some kind of multi or hybrid cloud requirement. Some choose to run most of their workloads in-house in their own data centers, only scaling out to public clouds when peak demand cannot be met. Others continually look for the cheapest available machines across cloud vendors, only spinning up workloads when their cost criteria are met. Still others simply need a convenient way to move into a different public cloud when a customer requests it.

One of the base building blocks to achieve this goal is our Istio operator that supports different multi-cluster service mesh scenarios out of the box. The other is Pipeline that provides a unified way to manage clusters on different cloud providers and on-prem.

Pipeline connects selected clusters automatically using the operator, and you’ll get an inter-cluster view of services. From that point on you can work with a multi-cluster mesh almost as if it were a single cluster.

Alpha features

To build a complete service mesh product, we have a lot of work ahead of us. Most importantly we’ll need to cover the most widely used features of Istio - expect most of that to come in the following weeks. But we have a bunch of other ideas that we’ve already started to work on, or are planning to start in the very near future:

- Canary and blue-green deployments: while Istio has lower level building blocks, it is often used for higher level tasks, like canary deployments. We’ll include some of these higher level concepts in the UI to make them easier.

- Tracing integration: tracing and service meshes go hand-in-hand, so it feels like a natural thing to connect traces and services on the UI.

- Audit trail: we understand that configuring network behaviour in a service mesh is a sensitive topic. We’ll add a full audit trail to track changes made.

- CLI: not everyone likes to click buttons on a UI - us included - so we’ll soon release a CLI that covers the same feature set as the UI.

- Validation and diagnostics: another big issue with Istio is that if something fails or is misconfigured, you’ll only get a generic error like `upstream connect error or disconnect/reset before headers`, and it’s not easy to figure out what went wrong. We’re working on a validation and diagnostics tool that will be built into the UI and that can help with these kinds of issues.

Let us know in the comments if you have other feature requests, or which of the above would be the most interesting for you.

About Backyards

Banzai Cloud’s Backyards (now Cisco Service Mesh Manager) is a multi and hybrid-cloud enabled service mesh platform for constructing modern applications. Built on Kubernetes, our Istio operator and the Banzai Cloud Pipeline platform, it gives you flexibility, portability, and consistency across on-premise datacenters and five cloud environments. Use our simple, yet extremely powerful UI and CLI, and experience automated canary releases, traffic shifting, routing, secure service communication, in-depth observability and more, for yourself.

About Banzai Cloud Pipeline

Banzai Cloud’s Pipeline provides a platform for enterprises to develop, deploy, and scale container-based applications. It leverages best-of-breed cloud components, such as Kubernetes, to create a highly productive, yet flexible environment for developers and operations teams alike. Strong security measures — multiple authentication backends, fine-grained authorization, dynamic secret management, automated secure communications between components using TLS, vulnerability scans, static code analysis, CI/CD, and so on — are default features of the Pipeline platform.

About Banzai Cloud

Banzai Cloud is changing how private clouds are built: simplifying the development, deployment, and scaling of complex applications, and putting the power of Kubernetes and Cloud Native technologies in the hands of developers and enterprises, everywhere.