
Hybrid- and multi-cloud are quickly becoming the new norm for enterprises, just as service mesh is becoming essential to the cloud native computing environment. From the very beginning, the Pipeline platform has supported multiple cloud providers and wiring them together at multiple levels (cluster, deployments and services) was always one of the primary goals.

We have supported setting up multi-cluster service meshes since the first release of our open source Istio operator. That release was based on Istio 1.0, which imposed some network constraints on single-mesh multi-cluster setups: all pod CIDRs had to be unique and routable to each other across every cluster, and the API servers also had to be routable to one another. You can read about it in our previous blog post.

Since then, Istio 1.1 has been released, and we are proud to announce that the latest version of our Istio operator supports hybrid- and multi-cloud single mesh without a flat network or VPN.

tl;dr:

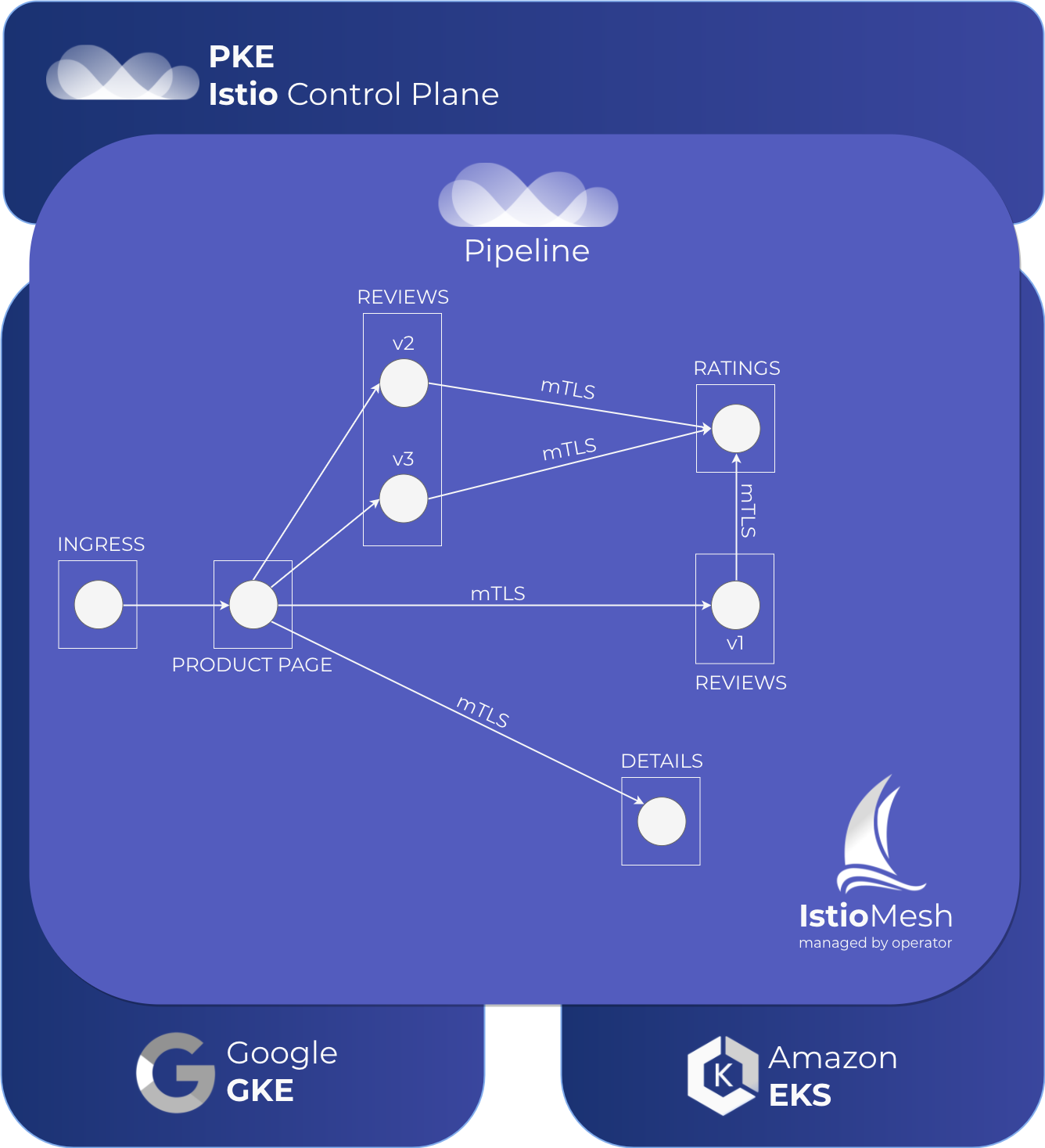

- the Pipeline platform deploys K8s clusters on 6 clouds, including provider-managed K8s (EKS, AKS, GKE, etc.) and our own CNCF certified Kubernetes distribution, Banzai Cloud PKE

- the Banzai Cloud Istio operator now supports creating multi- and hybrid-cloud service meshes

- service meshes can now span Kubernetes clusters created with Pipeline on any cloud provider, on-premise, or a mixture of these environments

- the Pipeline platform automates creating and managing these meshes with a few clicks on the UI or CLI

- read on to learn how you can use the Istio operator to create a service mesh across 3 different Kubernetes distributions and cloud environments (Banzai Cloud PKE, Amazon EKS and Google GKE)

Hybrid- and multi-cloud single mesh without flat network or VPN

Hybrid- and multi-cloud single mesh is best suited to use cases in which clusters are configured together, share resources, and are typically treated as a single infrastructure component within an organization.

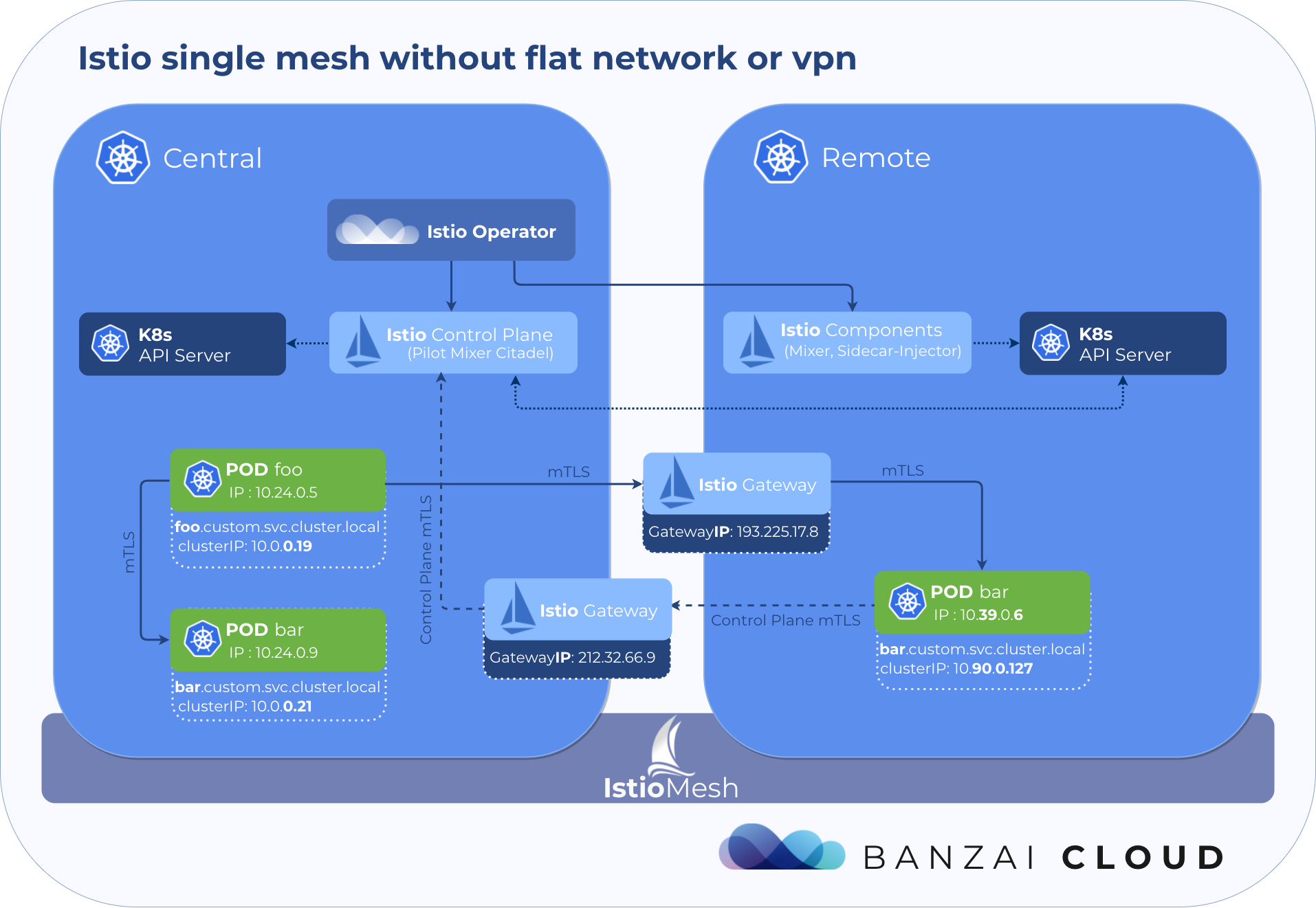

Two new features which were introduced in Istio v1.1 come in particularly handy for decreasing our reliance on flat networks or VPNs between clusters: Split Horizon EDS and SNI-based routing.

- EDS is short for Endpoint Discovery Service, a part of Envoy’s API that is used to fetch the members of an upstream cluster, which Envoy calls “endpoints”

- SNI-based routing leverages the “Server Name Indication” TLS extension to make routing decisions

- Split-horizon EDS enables Istio to route requests to different endpoints, depending on where the request originates. Istio Gateways intercept and parse TLS handshakes and use the SNI data to decide on the destination service endpoints.
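To make SNI-based routing more concrete, here is a small bash sketch of the kind of server name a sidecar can present to a remote gateway, and of how that name splits back into destination fields. The "outbound_.<port>_.<subset>_.<host>" layout reflects our understanding of Istio 1.1 and is an assumption to verify against your Istio version; istio_sni and parse_sni are hypothetical helpers, not part of Istio or the operator.

```bash
# Sketch of the SNI-based routing key. ASSUMPTION: Istio 1.1 encodes the
# destination as "<direction>_.<port>_.<subset>_.<host>" (an empty subset
# leaves an empty field); check this against your Istio version.
istio_sni() {
  local direction=$1 port=$2 subset=$3 host=$4
  printf '%s_.%s_.%s_.%s\n' "$direction" "$port" "$subset" "$host"
}

# The reverse split: conceptually what a gateway needs to pick a destination
# from the TLS handshake without terminating the connection.
parse_sni() {
  local sni=$1 rest direction port subset host
  direction=${sni%%_.*}; rest=${sni#*_.}
  port=${rest%%_.*};     rest=${rest#*_.}
  subset=${rest%%_.*};   host=${rest#*_.}
  printf 'direction=%s port=%s subset=%s host=%s\n' "$direction" "$port" "$subset" "$host"
}

istio_sni outbound 9080 v1 reviews.default.svc.cluster.local
# outbound_.9080_.v1_.reviews.default.svc.cluster.local
```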

A single mesh multi-cluster is formed by connecting any number of Kubernetes clusters running a remote Istio configuration to a single Istio control plane. Once one or more Kubernetes clusters are connected to the Istio control plane in this way, Envoy communicates with the Istio control plane to form a mesh network across those clusters.

In this configuration, a request from a sidecar in one cluster to a service in the same cluster is forwarded to the local service IP, as usual. If the destination workload is running in a different cluster, the remote cluster’s Gateway IP is used to connect to the service instead.
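The local-versus-remote decision can be sketched in bash as follows. This only illustrates split-horizon EDS under stated assumptions: the cluster names, pod IPs and gateway addresses are made up, and returning one gateway entry per remote cluster on port 443, weighted by the number of endpoints behind it, is our assumption about how Istio aggregates remote endpoints. split_horizon_eds is a hypothetical helper.

```bash
# Sketch of split-horizon EDS: the endpoint list a sidecar receives depends on
# which cluster it runs in. All names and addresses are hypothetical; the
# gateway port (443) and the per-cluster weighting are assumptions.
ENDPOINTS=(            # "<cluster>:<pod-ip>:<port>" for the reviews service
  "gke:10.8.1.12:9080"
  "eks:192.168.3.7:9080"
  "eks:192.168.5.9:9080"
)
declare -A GATEWAY=([gke]="203.0.113.10" [eks]="198.51.100.20")

split_horizon_eds() {
  local caller=$1 ep cluster addr port
  local -A remote_count=()
  for ep in "${ENDPOINTS[@]}"; do
    IFS=: read -r cluster addr port <<<"$ep"
    if [[ $cluster == "$caller" ]]; then
      echo "${addr}:${port}"            # same cluster: reach the pod directly
    else
      remote_count[$cluster]=$(( ${remote_count[$cluster]:-0} + 1 ))
    fi
  done
  for cluster in "${!remote_count[@]}"; do
    # other cluster: its pods are hidden behind that cluster's ingress gateway
    echo "${GATEWAY[$cluster]}:443 weight=${remote_count[$cluster]}"
  done
}

split_horizon_eds gke
# 10.8.1.12:9080
# 198.51.100.20:443 weight=2
```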

How the Istio operator forms a single mesh

In a single mesh scenario, there is one Istio control plane on one of the clusters that receives information about service and pod states from remote clusters. To achieve this, the kubeconfig of each remote cluster must be added to the cluster where the control plane is running in the form of a k8s secret.

The Istio operator uses a CRD called RemoteIstio to store the desired state of a given remote Istio configuration. Adding a new remote cluster to the mesh is as simple as defining a RemoteIstio resource with the same name as the secret which contains the kubeconfig for the cluster.
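Because the RemoteIstio resource and the kubeconfig secret are matched by name, a mismatch is an easy mistake to make. The following bash sketch lists RemoteIstio resources on the control plane cluster that have no secret of the same name in istio-system. missing_kubeconfig_secrets is a hypothetical helper; it only compares names and does not validate the kubeconfigs themselves.

```bash
# Sketch: list RemoteIstio resources that have no kubeconfig secret of the same
# name in istio-system on the control plane cluster. Only names are compared.
missing_kubeconfig_secrets() {
  local ctx=$1 name secrets
  secrets=$(kubectl --context="$ctx" -n istio-system get secrets \
    -o jsonpath='{.items[*].metadata.name}')
  for name in $(kubectl --context="$ctx" -n istio-system get remoteistios \
      -o jsonpath='{.items[*].metadata.name}'); do
    # pad with spaces so a name cannot match inside a longer secret name
    [[ " $secrets " == *" $name "* ]] || echo "$name"
  done
}

# Usage:
# missing_kubeconfig_secrets "$CTX_PKE"
```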

The operator handles deploying Istio components to clusters and – because inter-cluster communication goes through a cluster’s Istio gateways in the Split Horizon EDS setup – implements a sync mechanism, which provides constant reachability between the clusters by syncing ingress gateway addresses.

However, as of today, there is one important caveat: the control plane does not send the ingress gateway of the local cluster to the remote clusters as an endpoint, so connectivity from a remote cluster into the local cluster is currently impossible. Hopefully this will be fixed soon; you can track the progress of the corresponding issue.

Try it out

For demonstration purposes, create three clusters: a single-node Banzai Cloud PKE cluster on EC2, a GKE cluster with 2 nodes, and an EKS cluster that also has 2 nodes.

Get the latest version of the Istio operator

❯ git clone https://github.com/banzaicloud/istio-operator.git

❯ cd istio-operator

❯ git checkout release-1.1

The Pipeline platform is the easiest way to set up this environment, using Pipeline’s CLI tool, which is simply called banzai.

AWS_SECRET_ID="[[secretID from Pipeline]]"

GKE_SECRET_ID="[[secretID from Pipeline]]"

GKE_PROJECT_ID="[[your GCP project ID]]"

❯ cat docs/federation/gateway/samples/istio-pke-cluster.json | sed "s/{{secretID}}/${AWS_SECRET_ID}/" | banzai cluster create

INFO[0004] cluster is being created

INFO[0004] you can check its status with the command `banzai cluster get "istio-pke"`

Id Name

541 istio-pke

❯ cat docs/federation/gateway/samples/istio-gke-cluster.json | sed -e "s/{{secretID}}/${GKE_SECRET_ID}/" -e "s/{{projectID}}/${GKE_PROJECT_ID}/" | banzai cluster create

INFO[0004] cluster is being created

INFO[0004] you can check its status with the command `banzai cluster get "istio-gke"`

Id Name

542 istio-gke

❯ cat docs/federation/gateway/samples/istio-eks-cluster.json | sed "s/{{secretID}}/${AWS_SECRET_ID}/" | banzai cluster create

INFO[0004] cluster is being created

INFO[0004] you can check its status with the command `banzai cluster get "istio-eks"`

Id Name

543 istio-eks
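The three commands above differ only in the template file and the placeholder values. As a sketch, the substitution can be wrapped in a small bash function. render_cluster_template is a hypothetical name, and switching the sed delimiter to "|" is our own choice so that a value containing "/" cannot break the expression (a value containing "|" or "&" would still need escaping).

```bash
# Sketch: fill the {{secretID}} and {{projectID}} placeholders of a cluster
# template read from stdin, like the sed calls above. "|" is used as the sed
# delimiter (our choice) so values containing "/" are substituted safely.
render_cluster_template() {
  local secret_id=$1 project_id=${2:-}
  sed -e "s|{{secretID}}|${secret_id}|g" -e "s|{{projectID}}|${project_id}|g"
}

# Usage, equivalent to the GKE command above:
# render_cluster_template "$GKE_SECRET_ID" "$GKE_PROJECT_ID" \
#   < docs/federation/gateway/samples/istio-gke-cluster.json | banzai cluster create
```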

Wait for the clusters to be up and running

❯ banzai cluster list

Id Name Distribution Status CreatorName CreatedAt

543 istio-eks eks RUNNING waynz0r 2019-04-14T16:55:46Z

542 istio-gke gke RUNNING waynz0r 2019-04-14T16:54:15Z

541 istio-pke pke RUNNING waynz0r 2019-04-14T16:52:52Z
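Instead of re-running banzai cluster list by hand, you can poll it. The bash sketch below assumes the column layout shown above (Id Name Distribution Status ...) and that RUNNING is the state to wait for; all_running is a hypothetical helper.

```bash
# Sketch: succeed only when every named cluster shows RUNNING in a
# "banzai cluster list" table read from stdin. Assumes the column order
# shown above: Id Name Distribution Status ...
all_running() {
  local table name
  table=$(cat)
  for name in "$@"; do
    awk -v n="$name" '$2 == n && $4 == "RUNNING" { found = 1 } END { exit !found }' \
      <<<"$table" || return 1
  done
}

# Usage: poll every 30 seconds until all three clusters are up.
# until banzai cluster list | all_running istio-pke istio-gke istio-eks; do sleep 30; done
```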

Download the kubeconfigs from the Pipeline UI and set them as k8s contexts.

❯ export KUBECONFIG=~/Downloads/istio-pke.yaml:~/Downloads/istio-gke.yaml:~/Downloads/istio-eks.yaml

❯ kubectl config get-contexts -o name

istio-eks

istio-gke

kubernetes-admin@istio-pke

❯ export CTX_EKS=istio-eks

❯ export CTX_GKE=istio-gke

❯ export CTX_PKE=kubernetes-admin@istio-pke

Install the operator onto the PKE cluster

❯ kubectl config use-context ${CTX_PKE}

❯ make deploy

❯ kubectl --context=${CTX_PKE} -n istio-system create -f docs/federation/gateway/samples/istio-multicluster-cr.yaml

The make deploy command installs the operator’s custom resource definitions in the cluster and deploys the operator to the istio-system namespace.

Following a pattern typical of operators, you then specify your Istio configuration in a Kubernetes custom resource; the last command creates one from the istio-multicluster-cr.yaml sample.

Once that resource is applied to your cluster, the operator starts reconciling all of Istio’s components.

Wait for the status of the gateway-multicluster Istio resource to become Available and for the pods in the istio-system namespace to become ready.

❯ kubectl --context=${CTX_PKE} -n istio-system get istios

NAME STATUS ERROR AGE

gateway-multicluster Available 1m

❯ kubectl --context=${CTX_PKE} -n istio-system get pods

NAME READY STATUS RESTARTS AGE

istio-citadel-67f99b7f5f-lg859 1/1 Running 0 1m30s

istio-galley-665cf4d49d-qrm5s 1/1 Running 0 1m30s

istio-ingressgateway-64f9d4b75b-jh6jr 1/1 Running 0 1m30s

istio-operator-controller-manager-0 2/2 Running 0 2m27s

istio-pilot-df5d467c7-jmj77 2/2 Running 0 1m30s

istio-policy-57dd995b-fq4ss 2/2 Running 2 1m29s

istio-sidecar-injector-746f5cccd9-8mwdb 1/1 Running 0 1m18s

istio-telemetry-6b6b987c94-nmqpg 2/2 Running 2 1m29s

Add a GKE cluster to the service mesh

❯ kubectl --context=${CTX_GKE} apply -f docs/federation/gateway/cluster-add

❯ GKE_KUBECONFIG_FILE=$(docs/federation/gateway/cluster-add/generate-kubeconfig.sh ${CTX_GKE})

❯ kubectl --context $CTX_PKE -n istio-system create secret generic ${CTX_GKE} --from-file=${CTX_GKE}=${GKE_KUBECONFIG_FILE}

❯ rm -f ${GKE_KUBECONFIG_FILE}

❯ kubectl --context=${CTX_PKE} create -n istio-system -f docs/federation/gateway/samples/remoteistio-gke-cr.yaml

Add an EKS cluster to the service mesh

❯ kubectl --context=${CTX_EKS} apply -f docs/federation/gateway/cluster-add

❯ EKS_KUBECONFIG_FILE=$(docs/federation/gateway/cluster-add/generate-kubeconfig.sh ${CTX_EKS})

❯ kubectl --context $CTX_PKE -n istio-system create secret generic ${CTX_EKS} --from-file=${CTX_EKS}=${EKS_KUBECONFIG_FILE}

❯ rm -f ${EKS_KUBECONFIG_FILE}

❯ kubectl --context=${CTX_PKE} create -n istio-system -f docs/federation/gateway/samples/remoteistio-eks-cr.yaml

Wait for the statuses of the istio-eks and istio-gke RemoteIstio resources to become Available and for the pods in the istio-system namespace on those clusters to become ready.

This can take some time, and some reconciliation failures may occur along the way, since the reconciliation process must first determine the ingress gateway addresses of the clusters.
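Rather than re-running the status listings by hand, a polling loop can wait for the resources. This bash sketch assumes STATUS is the second column of the kubectl get output, as in the listings in this post; wait_available is a hypothetical helper, and its default timeout and interval are arbitrary.

```bash
# Sketch: poll until every resource of the given kind (istios, remoteistios)
# in istio-system reports Available. Assumes STATUS is the second column of
# "kubectl get" output; timeout and interval are in seconds.
wait_available() {
  local ctx=$1 kind=$2 timeout=${3:-600} interval=${4:-10} waited=0 rows
  while true; do
    rows=$(kubectl --context="$ctx" -n istio-system get "$kind" --no-headers 2>/dev/null)
    # done once at least one row exists and every row's STATUS is Available
    if [[ -n $rows ]] && awk '$2 != "Available" { bad = 1 } END { exit bad }' <<<"$rows"; then
      return 0
    fi
    if (( waited >= timeout )); then
      echo "timed out waiting for $kind to become Available" >&2
      return 1
    fi
    sleep "$interval"
    waited=$(( waited + interval ))
  done
}

# Usage:
# wait_available "$CTX_PKE" istios
# wait_available "$CTX_PKE" remoteistios
```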

❯ kubectl --context=${CTX_PKE} -n istio-system get remoteistios

NAME STATUS ERROR GATEWAYS AGE

istio-eks Available [35.177.214.60 3.8.50.24] 3m

istio-gke Available [35.204.1.52] 5m

❯ kubectl --context=${CTX_GKE} -n istio-system get pods

NAME READY STATUS RESTARTS AGE

istio-citadel-75648bdf6b-k8rfl 1/1 Running 0 6m9s

istio-ingressgateway-7f494bcf8-qb692 1/1 Running 0 6m8s

istio-sidecar-injector-746f5cccd9-7b55s 1/1 Running 0 6m8s

❯ kubectl --context=${CTX_EKS} -n istio-system get pods

NAME READY STATUS RESTARTS AGE

istio-citadel-78478cfb44-7h42v 1/1 Running 0 4m

istio-ingressgateway-7f75c479b8-w4qr9 1/1 Running 0 4m

istio-sidecar-injector-56dbb9587f-928h9 1/1 Running 0 4m

Deploy the bookinfo sample application in a distributed way

❯ kubectl --context=${CTX_GKE} apply -n default -f docs/federation/gateway/bookinfo/deployments/productpage-v1.yaml

❯ kubectl --context=${CTX_GKE} apply -n default -f docs/federation/gateway/bookinfo/deployments/reviews-v2.yaml

❯ kubectl --context=${CTX_GKE} apply -n default -f docs/federation/gateway/bookinfo/deployments/reviews-v3.yaml

deployment.extensions/productpage-v1 created

deployment.extensions/reviews-v2 created

deployment.extensions/reviews-v3 created

❯ kubectl --context=${CTX_EKS} apply -n default -f docs/federation/gateway/bookinfo/deployments/details-v1.yaml

❯ kubectl --context=${CTX_EKS} apply -n default -f docs/federation/gateway/bookinfo/deployments/ratings-v1.yaml

❯ kubectl --context=${CTX_EKS} apply -n default -f docs/federation/gateway/bookinfo/deployments/reviews-v1.yaml

deployment.extensions/details-v1 created

deployment.extensions/ratings-v1 created

deployment.extensions/reviews-v1 created

❯ kubectl --context=${CTX_PKE} apply -n default -f docs/federation/gateway/bookinfo/services/

service/details created

service/productpage created

service/ratings created

service/reviews created

❯ kubectl --context=${CTX_EKS} apply -n default -f docs/federation/gateway/bookinfo/services/

service/details created

service/productpage created

service/ratings created

service/reviews created

❯ kubectl --context=${CTX_GKE} apply -n default -f docs/federation/gateway/bookinfo/services/

service/details created

service/productpage created

service/ratings created

service/reviews created

Create the Istio resources (a gateway, destination rules and virtual services) on the PKE cluster, where the control plane runs:

❯ kubectl --context=${CTX_PKE} apply -n default -f docs/federation/gateway/bookinfo/istio/

gateway.networking.istio.io/bookinfo-gateway created

destinationrule.networking.istio.io/productpage created

destinationrule.networking.istio.io/reviews created

destinationrule.networking.istio.io/ratings created

destinationrule.networking.istio.io/details created

virtualservice.networking.istio.io/bookinfo created

virtualservice.networking.istio.io/reviews created

High level overview

Service mesh in action

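The components of the bookinfo app are distributed across the mesh. The reviews service has three deployed versions (reviews-v1 on EKS, reviews-v2 and reviews-v3 on GKE), and its virtual service is configured so that roughly one third of the traffic goes to each; requests carrying the end-user: banzai header are always routed to v2.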

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: reviews
  namespace: default
spec:
  hosts:
  - reviews
  http:
  - match:
    - headers:
        end-user:
          exact: banzai
    route:
    - destination:
        host: reviews
        subset: v2
  - route:
    - destination:
        host: reviews
        subset: v1
      weight: 33
    - destination:
        host: reviews
        subset: v2
      weight: 33
    - destination:
        host: reviews
        subset: v3
      weight: 34
```
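To see why the traffic should split into roughly equal thirds, here is a bash sketch of the decision the manifest above encodes: the header match is checked first, then one of the three subsets is picked by weight. It only illustrates the ratios; Envoy’s own weighted selection is implemented differently, and route_reviews is a hypothetical helper.

```bash
# Sketch of how the reviews VirtualService resolves a request: an exact
# "end-user: banzai" header match wins; otherwise the 33/33/34 weights decide.
# The optional second argument fixes the 0-99 roll (useful for testing).
route_reviews() {
  local end_user=${1:-} roll=${2:-$(( RANDOM % 100 ))}
  if [[ $end_user == banzai ]]; then
    echo v2
  elif (( roll < 33 )); then
    echo v1
  elif (( roll < 66 )); then
    echo v2
  else
    echo v3
  fi
}

# Usage: tally 100 simulated anonymous requests (counts vary per run).
# for i in $(seq 1 100); do route_reviews; done | sort | uniq -c
```

Because each request is an independent draw, any 100-request sample only approximates the 33/33/34 split.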

The bookinfo app’s product page is reachable through each cluster’s ingress gateway, since they are all part of a single mesh. Let’s determine the ingress gateway addresses of the clusters:

export PKE_INGRESS=$(kubectl --context=${CTX_PKE} -n istio-system get svc/istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')

export GKE_INGRESS=$(kubectl --context=${CTX_GKE} -n istio-system get svc/istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

export EKS_INGRESS=$(kubectl --context=${CTX_EKS} -n istio-system get svc/istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
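GKE publishes an IP for the load balancer, while the AWS-based PKE and EKS clusters publish a hostname, which is why the jsonpath expressions above differ. As a sketch, a single helper can cover both cases. ingress_address is a hypothetical name, and the approach relies on kubectl printing missing jsonpath fields as empty, which is its default behaviour.

```bash
# Sketch: print a cluster's ingress gateway address whether the load balancer
# publishes an IP (GKE here) or a DNS hostname (the AWS-based PKE and EKS
# clusters). Normally only one field is set, and by default kubectl renders a
# missing field as empty, so concatenating both yields the address.
ingress_address() {
  kubectl --context="$1" -n istio-system get svc/istio-ingressgateway \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}{.status.loadBalancer.ingress[0].hostname}'
}

# Usage:
# export PKE_INGRESS=$(ingress_address "$CTX_PKE")
# export GKE_INGRESS=$(ingress_address "$CTX_GKE")
# export EKS_INGRESS=$(ingress_address "$CTX_EKS")
```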

Hit the PKE cluster’s ingress gateway with some traffic to see how requests are spread across the versions of the reviews service:

for i in `seq 1 100`; do curl -s "http://${PKE_INGRESS}/productpage" |grep -i -e "</html>" -e color=\"; done | sort | uniq -c

62 <font color="black">

72 <font color="red">

100 </html>

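This may look a bit cryptic at first. Each product page shows two reviews: reviews-v2 renders them with black stars, reviews-v3 with red stars, and reviews-v1 with no stars at all, so every v2 or v3 response contributes two matching lines. The output therefore means that all 100 requests were successful (100 </html> lines), 31 hit reviews-v2 (62 / 2), 36 hit reviews-v3 (72 / 2), and the remaining 33 hit reviews-v1. The results will vary from run to run, but should remain divided into approximate thirds.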
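The same arithmetic can be scripted. This bash sketch reads the sort | uniq -c tally from stdin and converts line counts into request counts per version. It relies on each product page listing two reviews, so a v2 (black stars) or v3 (red stars) response yields two matching lines while v1 shows no stars; reviews_split is a hypothetical helper.

```bash
# Sketch: turn the "sort | uniq -c" tally into per-version request counts.
# v2 (black) and v3 (red) pages contribute two matching lines each; every
# successful page contributes one </html> line; v1 is what remains.
reviews_split() {
  awk '
    /color="black"/ { black = $1 }
    /color="red"/   { red = $1 }
    /<\/html>/      { total = $1 }
    END {
      v2 = black / 2; v3 = red / 2
      printf "v1=%d v2=%d v3=%d\n", total - v2 - v3, v2, v3
    }'
}

# Usage:
# for i in `seq 1 100`; do curl -s "http://${PKE_INGRESS}/productpage" |grep -i -e "</html>" -e color=\"; done | sort | uniq -c | reviews_split
```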

Test it through the other clusters’ ingress gateways:

# GKE

for i in `seq 1 100`; do curl -s "http://${GKE_INGRESS}/productpage" |grep -i -e "</html>" -e color=\"; done | sort | uniq -c

66 <font color="black">

72 <font color="red">

100 </html>

# EKS

for i in `seq 1 100`; do curl -s "http://${EKS_INGRESS}/productpage" |grep -i -e "</html>" -e color=\"; done | sort | uniq -c

74 <font color="black">

56 <font color="red">

100 </html>

Cleanup

Execute the following commands to clean up the clusters:

❯ kubectl --context=${CTX_PKE} delete namespace istio-system

❯ kubectl --context=${CTX_GKE} delete namespace istio-system

❯ kubectl --context=${CTX_EKS} delete namespace istio-system

❯ banzai cluster delete istio-pke --no-interactive

❯ banzai cluster delete istio-gke --no-interactive

❯ banzai cluster delete istio-eks --no-interactive

The Istio operator - contributing and development

Our Istio operator is still under heavy development. If you’re interested in this project, we’re happy to accept contributions and/or fix any issues or feature requests you might have. You can support its development by starring the repo, or, if you would like to help with development, read our contribution and development guidelines in the first blog post about our Istio operator.

About Banzai Cloud Pipeline

Banzai Cloud’s Pipeline provides a platform for enterprises to develop, deploy, and scale container-based applications. It leverages best-of-breed cloud components, such as Kubernetes, to create a highly productive, yet flexible environment for developers and operations teams alike. Strong security measures — multiple authentication backends, fine-grained authorization, dynamic secret management, automated secure communications between components using TLS, vulnerability scans, static code analysis, CI/CD, and so on — are default features of the Pipeline platform.