If you are a frequent reader of this blog or familiar with our products, you may already know that the control plane of Pipeline, our multi- and hybrid-cloud container management platform, is available not just as a free developer service but can also run in any environment you prefer, whether cloud or on-prem. The control plane’s only requirement is Kubernetes, and its installation is wholly automated by the banzai CLI tool, alongside our own CNCF-certified Kubernetes distribution, PKE.

This is especially practical for production setups, but we also want to support multiple kickstart options for quick tests, as well as a better user and developer experience. To improve the experience for first-time users, we went so far as to implement a single-command installer for our platform’s control plane. We wanted the installer to be as flexible as possible, and we are now ready to start using it in ad-hoc test scenarios, whether that means a local installation on a laptop or a production-ready environment.

This post focuses on the easiest possible installation method for the control plane, running on kind.

We tested the installer against Docker for Mac Kubernetes, Minikube, kind, and, of course, our own PKE distribution.

Why kind?

So you’d like to try running the Pipeline control plane on your laptop? Don’t worry; the CLI automatically provisions a kind (Kubernetes IN Docker) cluster for local installations. This means your prerequisites are reduced to a Docker daemon - easier than installing K8s, right?

During the building phase of our installer, we chose kind because it was an official Kubernetes Special Interest Groups project, and it was extremely easy to install and use. It also supported multi-node testing. Additionally, it was a relatively small project and leveraged existing tooling to great effect. It made minimal assumptions about our environment, and started up extremely quickly.

In this blog post we’ll be helping you use kind, and demonstrating specifically how it can be used as a fully functional Kubernetes cluster for testing purposes. Our control-plane runs lots of services - service meshes, disaster recovery, security scans, autoscaling, single/multi cluster deployments (plus roughly twenty others) - and we often find it necessary to access these in-browser (even on Docker for Mac and Docker for Windows). Thus, we’ve assembled a tutorial that teaches you how to expose ports when deploying to kind.

Using K8s Ingress on kind and exposing ports

Set up kind

Install kind with go get:

GO111MODULE="on" go get sigs.k8s.io/kind@v0.3.0
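Before creating a cluster, it can help to confirm that the required tools are actually on your PATH (go get places binaries in the bin directory of your GOPATH). The following is a minimal sketch; check_tools is a hypothetical helper name of ours, not part of kind or the banzai CLI.

```shell
# Hypothetical helper: report which of the given tools are on PATH.
# Returns 1 if at least one of them is missing, 0 otherwise.
check_tools() {
  local tool missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found: $tool"
    else
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For the steps in this post you would call it as check_tools docker kind kubectl and fix anything it reports as missing before continuing.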

Create a kind cluster (this creates a single-node cluster, in which the kind control-plane node also acts as a worker and has no taints):

$ kind create cluster --name="banzai"

Creating cluster "banzai" ...

✓ Ensuring node image (kindest/node:v1.14.2) 🖼

✓ Preparing nodes 📦

✓ Creating kubeadm config 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

Cluster creation complete. You can now use your cluster with:

export KUBECONFIG="$(kind get kubeconfig-path --name="banzai")"

kubectl cluster-info

Installing an Ingress controller to kind

Install the NGINX Ingress Controller to your kind cluster:

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/mandatory.yaml

namespace/ingress-nginx created

configmap/nginx-configuration created

configmap/tcp-services created

configmap/udp-services created

serviceaccount/nginx-ingress-serviceaccount created

clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created

role.rbac.authorization.k8s.io/nginx-ingress-role created

rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created

clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created

deployment.apps/nginx-ingress-controller created

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/cloud-generic.yaml

service/ingress-nginx created

Now we have an Ingress controller (hooray), but how can we access it? Let’s check the external IP of the Ingress Service that NGINX created:

$ kubectl get services -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx LoadBalancer 10.106.112.12 <pending> 80:32293/TCP,443:32117/TCP 16m

It’s <pending>, which doesn’t sound good. kind doesn’t implement LoadBalancer support, so the Service will stay in this state indefinitely. We’ll fix this later. For now, let’s take a bird’s-eye view of how kind networking works.
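If you want to detect the pending state from a script rather than by reading the table, one option is to query the Service status directly. This is a sketch under assumptions: lb_address and describe_lb are hypothetical helper names, and only the ip field is checked (some cloud providers report a hostname instead).

```shell
# Print the load balancer IP of the ingress-nginx Service.
# The output is empty while no address has been assigned.
lb_address() {
  kubectl get service -n ingress-nginx ingress-nginx \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Turn the raw value into a short, human-readable state.
describe_lb() {
  local ip
  ip="$(lb_address)"
  if [ -z "$ip" ]; then
    echo "pending"
  else
    echo "assigned: $ip"
  fi
}
```

On a kind cluster, describe_lb should keep printing pending for as long as the Service is of type LoadBalancer.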

kind networking

kind v0.3.0 uses a plain CNI configuration based on the ptp plugin. Alongside it, kind runs its own networking helper daemon, kindnetd, which helps the ptp plugin discover the Node’s InternalIP. The ptp CNI plugin creates a point-to-point link between a container and the host using a veth device pair.

kind maps each Kubernetes node to a Docker container:

$ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

banzai-control-plane Ready master 4h55m v1.14.2 172.17.0.2 <none> Ubuntu Disco Dingo (development branch) 4.9.125-linuxkit containerd://1.2.6-0ubuntu1

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a1117fc85ef6 kindest/node:v1.14.2 "/usr/local/bin/entr…" 5 hours ago Up 5 hours 58954/tcp, 127.0.0.1:58954->6443/tcp banzai-control-plane

$ docker inspect banzai-control-plane -f '{{.NetworkSettings.IPAddress}}'

172.17.0.2

$ docker exec -it banzai-control-plane ip route show

default via 172.17.0.1 dev eth0

10.244.0.0/24 dev cni0 proto kernel scope link src 10.244.0.1

172.17.0.0/16 dev eth0 proto kernel scope link src 172.17.0.2
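The node-to-container mapping shown above can also be checked in a script by comparing the InternalIP Kubernetes reports with the IP Docker assigned to the container of the same name. A minimal sketch, where node_ip_matches is a hypothetical helper name:

```shell
# Compare the InternalIP of a Kubernetes node with the IP address of the
# Docker container that has the same name. Succeeds only if they match.
node_ip_matches() {
  local node="$1" k8s_ip docker_ip
  k8s_ip="$(kubectl get node "$node" \
    -o jsonpath='{.status.addresses[?(@.type=="InternalIP")].address}')"
  docker_ip="$(docker inspect "$node" -f '{{.NetworkSettings.IPAddress}}')"
  echo "kubernetes: $k8s_ip docker: $docker_ip"
  [ -n "$k8s_ip" ] && [ "$k8s_ip" = "$docker_ip" ]
}
```

For the cluster in this post the call would be node_ip_matches banzai-control-plane.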

Expose Ingress from kind

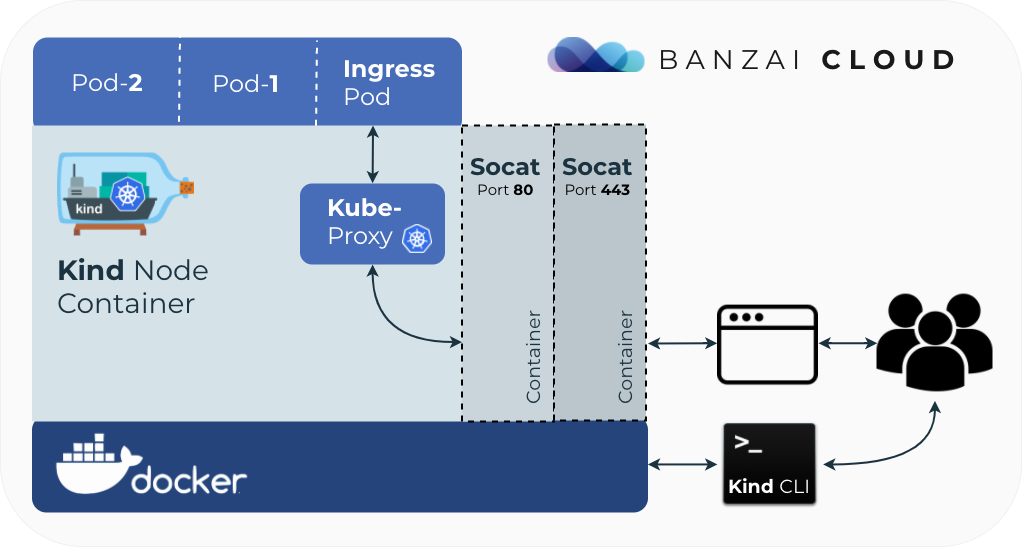

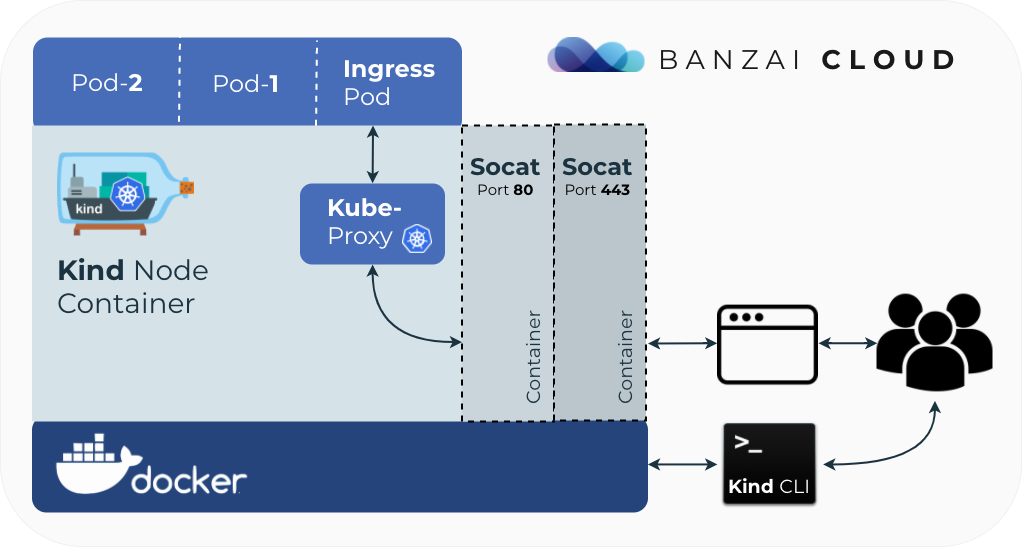

The issue here is that kind currently exposes only a single Docker port to the host machine (the one for the Kubernetes API server). To overcome this limitation, we proxy the ports to the local machine with another set of containers running alongside the kind node container(s).

Let’s change the Ingress Service to a NodePort, since we won’t be getting a LoadBalancer from kind:

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/baremetal/service-nodeport.yaml

service/ingress-nginx configured

$ kubectl get services -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx NodePort 10.106.112.12 <none> 80:32293/TCP,443:32117/TCP 176m

Now it’s configured correctly, and no longer in a <pending> state. Since this is a NodePort-type Service, the same node ports (32293 and 32117 here) are opened on every node of your cluster, including the banzai-control-plane node.
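The port numbers themselves are picked by Kubernetes from the NodePort range, which is 30000-32767 unless the API server’s --service-node-port-range flag has been changed. As a small illustration (the helper name in_default_nodeport_range is ours, and the check assumes the default range):

```shell
# Default NodePort range; assumes --service-node-port-range was not changed.
NODEPORT_MIN=30000
NODEPORT_MAX=32767

# Succeeds if the given port lies inside the default NodePort range.
in_default_nodeport_range() {
  local port="$1"
  [ "$port" -ge "$NODEPORT_MIN" ] && [ "$port" -le "$NODEPORT_MAX" ]
}

for port in 32293 32117 80; do
  if in_default_nodeport_range "$port"; then
    echo "$port: inside the default NodePort range"
  else
    echo "$port: outside the default NodePort range"
  fi
done
```

This is why ports 80 and 443 cannot simply be requested as node ports on a default cluster, and why they have to be mapped in some other way.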

Let’s expose the Ingress NodePorts by running two socat instances, one for port 80 and one for port 443:

for port in 80 443
do
    node_port=$(kubectl get service -n ingress-nginx ingress-nginx -o=jsonpath="{.spec.ports[?(@.port == ${port})].nodePort}")
    docker run -d --name banzai-kind-proxy-${port} \
      --publish 127.0.0.1:${port}:${port} \
      --link banzai-control-plane:target \
      alpine/socat -dd \
      tcp-listen:${port},fork,reuseaddr tcp-connect:target:${node_port}
done

With this in place, the host has two open ports. socat forwards traffic arriving on them to the kind node container, where the NodePort routes it to the Ingress controller pod; from there it takes one more hop to the backend, if a matching one is specified.
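The proxy containers keep running until you remove them, and their names will clash if you re-run the loop. A minimal sketch for listing and removing them, assuming the banzai-kind-proxy-PORT naming used above (the function names are hypothetical):

```shell
# List the socat proxy containers together with their published ports.
list_kind_proxies() {
  docker ps --filter "name=banzai-kind-proxy" \
    --format '{{.Names}}\t{{.Ports}}'
}

# Remove the proxy containers that were created for the given ports.
remove_kind_proxies() {
  local port
  for port in "$@"; do
    # -f also stops the container if it is still running.
    docker rm -f "banzai-kind-proxy-${port}"
  done
}
```

Calling remove_kind_proxies 80 443 cleans up both proxies, for example before deleting or recreating the kind cluster.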

This solution also works with a multi-node kind setup, because a NodePort Service opens the same port on every node, so proxying to the control-plane node is enough.

NOTE: you can create a multi-node cluster with the following commands:

cat > kind.yaml <<EOT
kind: Cluster
apiVersion: kind.sigs.k8s.io/v1alpha3
nodes:
- role: control-plane
- role: worker
- role: worker
EOT

kind create cluster --name banzai --config kind.yaml

We’ll get the infamous NGINX 404 response if we try to access https://localhost with curl, which is a good sign in this case:

$ curl -v -k https://localhost

...

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx/1.15.10</center>

</body>

</html>

We are now ready to use our cluster to install our control plane or any other application that uses Ingress.
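If you would rather script the 404 check than read curl’s verbose output, something along these lines may work. It is only a sketch: the function names are hypothetical, and the interpretation of the codes assumes the NGINX Ingress Controller setup described in this post.

```shell
# Print only the HTTP status code returned for the given URL.
# curl prints 000 when it did not receive any HTTP response.
ingress_http_code() {
  curl -k -s -o /dev/null -w '%{http_code}' "${1:-https://localhost}"
}

# Map a status code to a short hint about what it most likely means.
explain_ingress_code() {
  case "$1" in
    404) echo "controller reachable, no Ingress rule matched" ;;
    000) echo "no response: check the socat proxy containers" ;;
    *)   echo "got HTTP $1 from a backend or the controller" ;;
  esac
}
```

Running explain_ingress_code "$(ingress_http_code)" right after the setup should report that the controller is reachable and that no Ingress rule matched.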

What’s next

For local setups we’ll provision your kind cluster for you in a relatively straightforward way. In the future, however, this might all be baked into a single image, sparing you the time it takes to go through the control plane installation process. kind’s latest release brought notable improvements to reboot support, but there is still room for more. We’re excited to see the project make progress on this over time.

About Banzai Cloud Pipeline

Banzai Cloud’s Pipeline provides a platform for enterprises to develop, deploy, and scale container-based applications. It leverages best-of-breed cloud components, such as Kubernetes, to create a highly productive, yet flexible environment for developers and operations teams alike. Strong security measures — multiple authentication backends, fine-grained authorization, dynamic secret management, automated secure communications between components using TLS, vulnerability scans, static code analysis, CI/CD, and so on — are default features of the Pipeline platform.

About Banzai Cloud

Banzai Cloud is changing how private clouds are built in order to simplify the development, deployment, and scaling of complex applications, putting the power of Kubernetes and Cloud Native technologies in the hands of developers and enterprises, everywhere.
