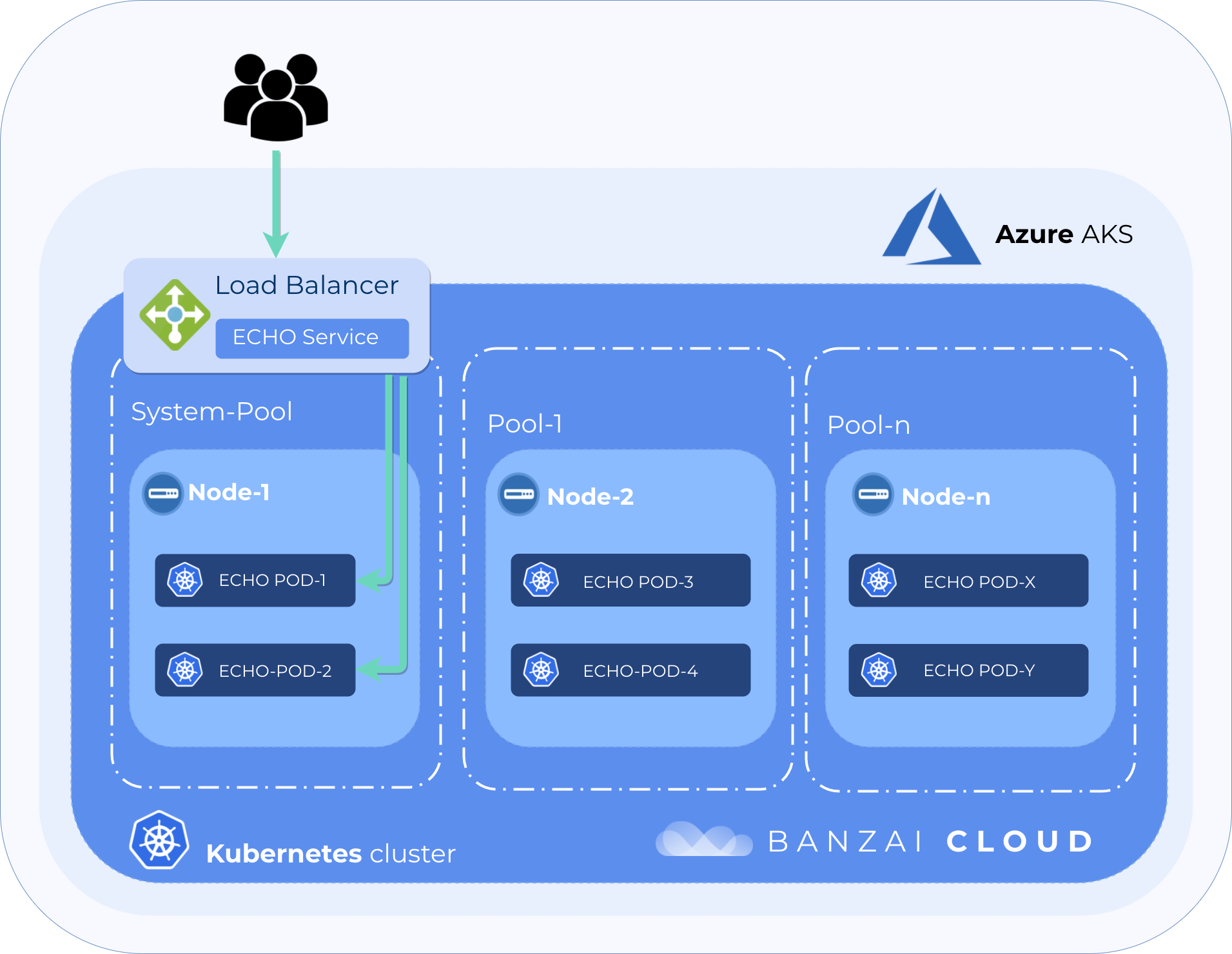

Enterprises often use heterogeneous clusters to deploy their applications to Kubernetes, because those applications usually have special scheduling constraints. Pods may require nodes that have special hardware, nodes that are isolated, or nodes that are colocated with other pods running within the system. To help our customers solve these problems, the Banzai Cloud Pipeline platform uses nodepools.

A nodepool is a subset of node instances within a cluster that share the same configuration; the overall cluster, however, can contain multiple nodepools as well as heterogeneous nodes/configurations. The Pipeline platform can manage any number of nodepools on a cloud-based Kubernetes cluster, each with a different configuration (e.g., nodepool 1 uses local SSDs, nodepool 2 is spot or preemptible-based, nodepool 3 contains GPUs), and these configurations are turned into actual cloud-specific instances.

We apply the concept of nodepools across all the cloud providers we support in order to create heterogeneous clusters, even on providers that do not support heterogeneous clusters by default (e.g., Azure). The implementation can be tricky for providers that are not prepared for nodepools and do not officially support them, and it may lead to edge-case problems depending on the workloads and services deployed to a Kubernetes cluster.

Thanks to our growing community, more and more people are using the free developer tier of Pipeline, each with different usage patterns and requirements. In most cases everything works as expected; however, (luckily) we see more and more edge cases, and since we support six cloud providers as well as on-prem clusters, some of those cases are particularly interesting.

AKS load balancing issue

First of all, let me emphasize how much we appreciate feedback from our users, since we are constantly working on improving Pipeline. Each of our users (or commercial customers) runs different scenarios on Kubernetes to meet their businesses' needs. We learn a lot from these cases, and though our limited time is already split between engineering new features and supporting our customers, we also strive to support our free developer tier users as much as we can. For quicker turnarounds and fixes, please reach us through our community Slack channel.

Last Friday, late afternoon (yes, these things always happen on a Friday), one of our users was facing a load balancing issue on an AKS cluster. It started as a small issue, but it quickly turned into an all-nighter.

tl;dr

- We cannot use taints with hard effects (`NoSchedule`, `NoExecute`) to protect the Pipeline system nodepool on AKS, because kube-proxy (along with some other Kubernetes system pods) does not tolerate every taint there.
- AKS only supports the `Basic` load balancer, which does not support backend nodes from different nodepools.
- There is an AKS-specific load balancer annotation (`service.beta.kubernetes.io/azure-load-balancer-mode`) for specifying which availability set/nodepool to use for backend nodes.

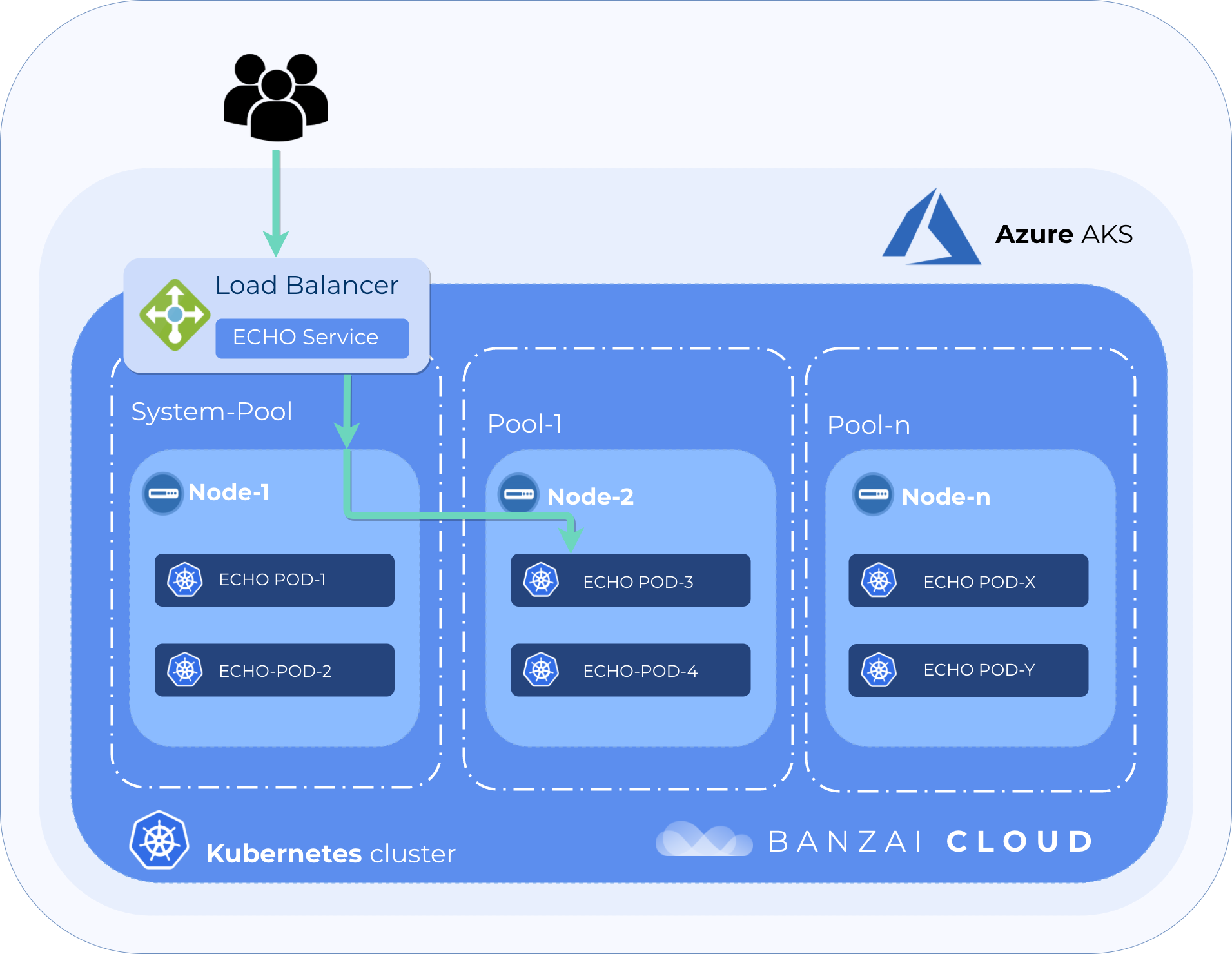

The main issue here was uneven load balancing to a simple, externally available service: some of the pods weren't getting any traffic through a LoadBalancer service, for no apparent reason. The cluster contained two nodepools: one system nodepool, which Pipeline always deploys for its managed workload pods (monitoring, logging, etc.), and another for user workloads.

The available nodes, running pods, and the service on the cluster looked something like this:

```
NAME                    STATUS   ROLES   AGE   VERSION
aks-pool-1-27255451-0   Ready    agent   1h    v1.11.5
aks-system-27255451-0   Ready    agent   1h    v1.11.5

NAME                               READY   STATUS    IP            NODE
echo-deployment-78dc57c76d-58mz5   1/1     Running   10.244.1.7    aks-system-27255451-0
echo-deployment-78dc57c76d-fpwkf   1/1     Running   10.244.0.18   aks-pool-1-27255451-0
echo-deployment-78dc57c76d-v2brg   1/1     Running   10.244.0.17   aks-pool-1-27255451-0

NAME           TYPE           CLUSTER-IP    EXTERNAL-IP    PORT(S)        AGE
echo-service   LoadBalancer   10.0.237.55   13.93.24.195   80:32651/TCP   25m
```

Send some traffic to the LB

```shell
$ for i in $(seq 1 100); do curl -s http://13.93.24.195 | grep -i "hostname: " | cut -d ' ' -f 2; done | sort | uniq -c
    100 echo-deployment-78dc57c76d-58mz5
```

For some reason, only the pod on the system node answered. This is strange for multiple reasons. First of all, that pod should not have been scheduled onto a system node at all.

Usually, a taint with NoSchedule and NoExecute effects is added to the nodes in the Pipeline system nodepool to keep workloads off nodes where they're not supposed to be.

Unfortunately, on AKS that's just not possible, since it would also prevent kube-proxy from being scheduled onto those nodes. kube-proxy is usually deployed with tolerations for every NoSchedule and NoExecute taint, but the kube-proxy that AKS deploys isn't. Bummer. So on AKS we have to set the taint with the PreferNoSchedule effect, which the scheduler treats only as a preference, so untolerating pods can still end up on the system nodes.
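Whether a pod may land on a tainted node comes down to how Kubernetes matches tolerations against taints, and whether the taint's effect is hard or soft. The sketch below re-implements that matching rule as shell functions purely for illustration; `tolerates` and `is_hard_effect` are hypothetical helpers (not part of Pipeline or kubectl), and the taint key `nodepool.banzaicloud.io/name` used in the example is an assumption, since the post doesn't say which key Pipeline's taint uses.

```shell
# Simplified re-implementation of the Kubernetes toleration/taint matching rule.
# Arguments: toleration key, operator, value, effect, then taint key, value, effect.
# Pass "" for toleration fields that are not set.
tolerates() {
  local tol_key="$1" tol_op="$2" tol_value="$3" tol_effect="$4"
  local taint_key="$5" taint_value="$6" taint_effect="$7"

  # An unset toleration effect matches every taint effect.
  if [ -n "$tol_effect" ] && [ "$tol_effect" != "$taint_effect" ]; then
    return 1
  fi
  # An unset toleration key (valid only with Exists) matches every taint key.
  if [ -n "$tol_key" ] && [ "$tol_key" != "$taint_key" ]; then
    return 1
  fi
  case "$tol_op" in
    ""|Equal) [ "$tol_value" = "$taint_value" ] ;;  # Equal is the default operator
    Exists) return 0 ;;                             # the value is ignored
    *) return 1 ;;
  esac
}

# NoSchedule and NoExecute are hard effects: a pod that doesn't tolerate the
# taint is not scheduled there (NoExecute also evicts running pods).
# PreferNoSchedule is only a preference the scheduler may override.
is_hard_effect() {
  case "$1" in
    NoSchedule|NoExecute) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, a toleration with `operator: Exists` and no key or effect (the catch-all form kube-proxy DaemonSets commonly use) matches any taint, so `tolerates "" Exists "" "" nodepool.banzaicloud.io/name system NoSchedule` succeeds. A pod without a matching toleration fails the check, but because `PreferNoSchedule` is not a hard effect, it can still be placed on the node.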

As a workaround, we can set node anti-affinity on the deployment, which prevents its pods from being scheduled onto system nodes:

```yaml
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      - matchExpressions:
        - key: nodepool.banzaicloud.io/name
          operator: NotIn
          values:
          - "system"
```

In a previous post we've already discussed how to use taints and tolerations, as well as pod and node affinities.
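Node affinity works on node labels: with `NotIn`, a node qualifies only if its `nodepool.banzaicloud.io/name` label is not one of the listed values (a node without the label qualifies too). The following sketch evaluates a single `matchExpression` for the `In` and `NotIn` operators used in this post; `match_expression` is a hypothetical helper written for illustration, and it ignores the other operators (`Exists`, `DoesNotExist`, `Gt`, `Lt`) as well as how multiple expressions and terms are combined.

```shell
# Evaluate one nodeSelector matchExpression against a node's label value.
# Usage: match_expression LABEL_VALUE OPERATOR VALUE...
# Pass "" as LABEL_VALUE when the node lacks the label. Only In and NotIn are handled.
match_expression() {
  local label_value="$1" op="$2" found=1 v
  shift 2
  # found=0 when the label value appears in the expression's value list.
  for v in "$@"; do
    if [ "$v" = "$label_value" ]; then
      found=0
      break
    fi
  done
  case "$op" in
    In) return "$found" ;;
    NotIn) [ "$found" -ne 0 ] ;;
    *) echo "unsupported operator: $op" >&2; return 2 ;;
  esac
}
```

With the rule above, `match_expression system NotIn system` fails, so pods are kept off `aks-system-27255451-0`, while `match_expression pool-1 NotIn system` succeeds for the `pool-1` node.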

Although having the pod run on the system node is not ideal, it doesn't explain why the other pods aren't getting any traffic.

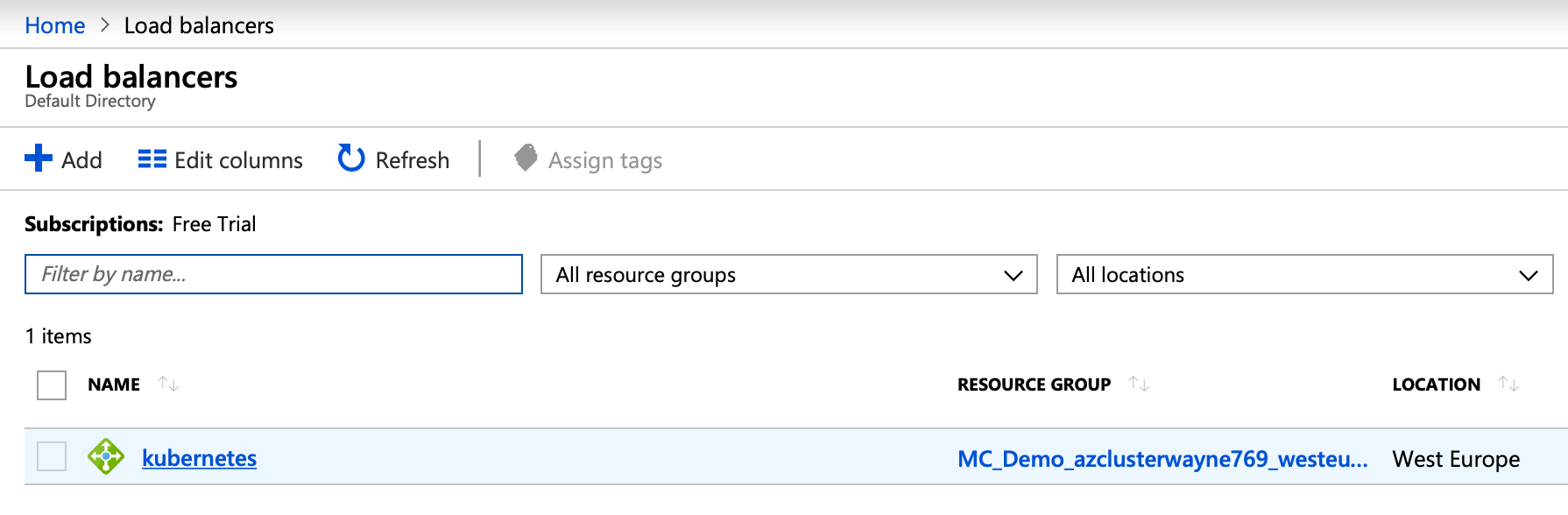

Let's take a look at the provider's side

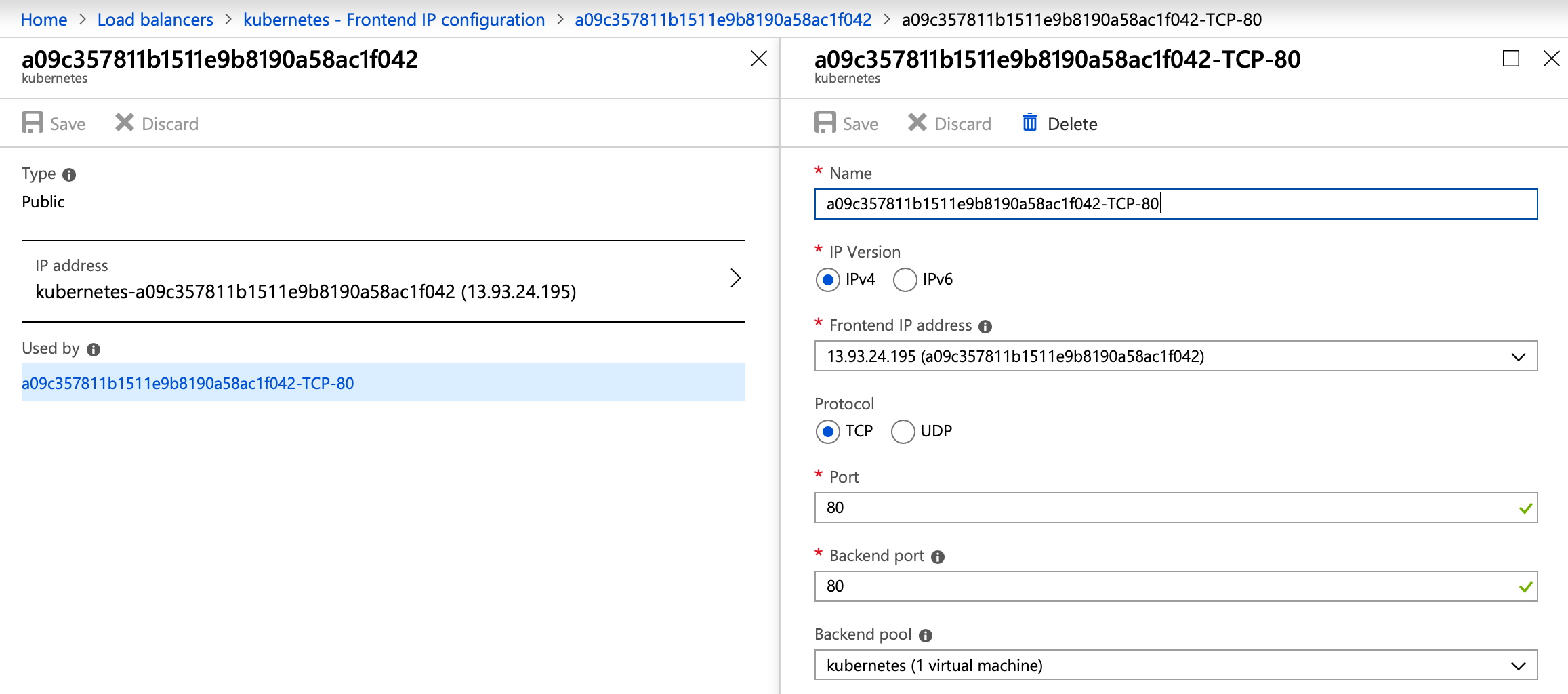

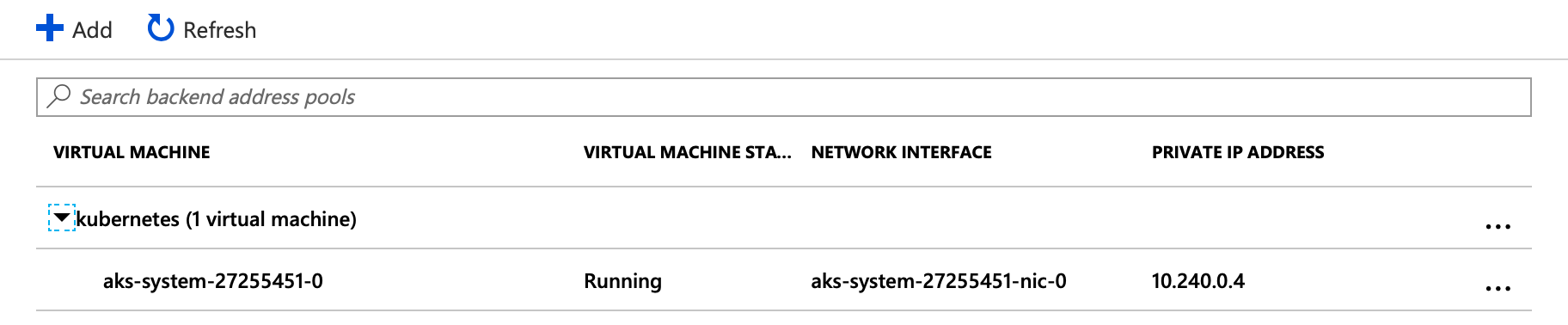

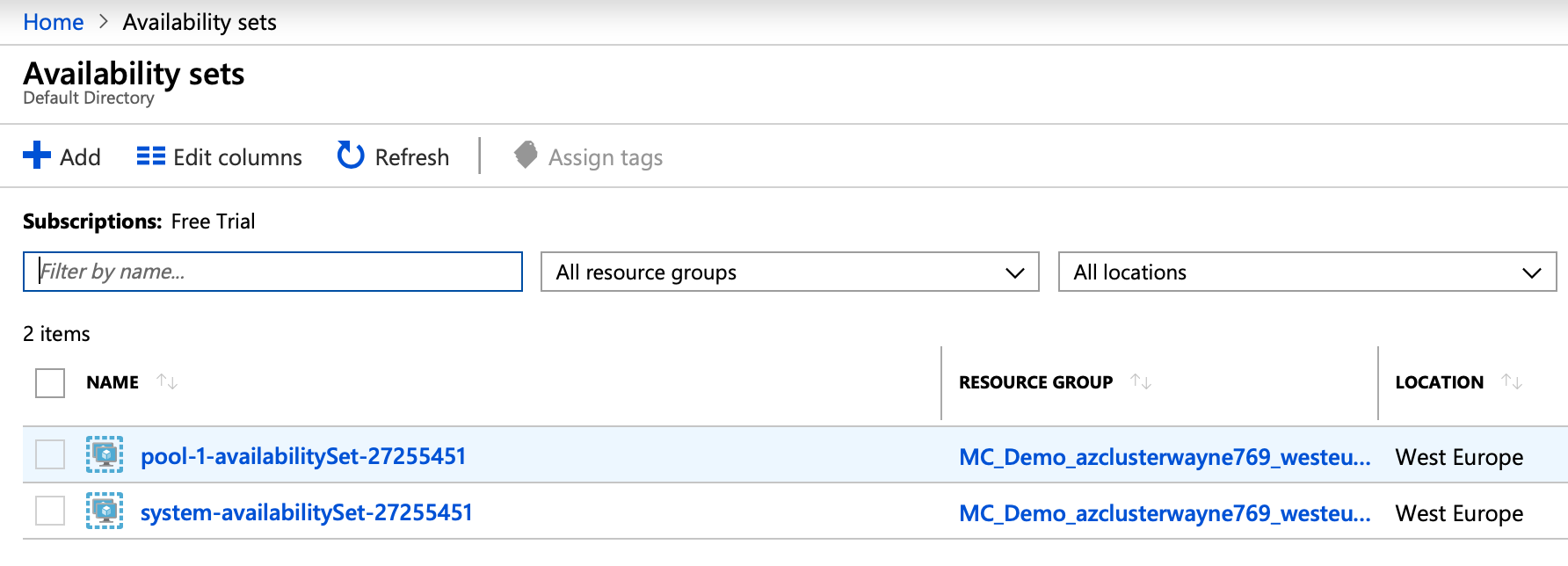

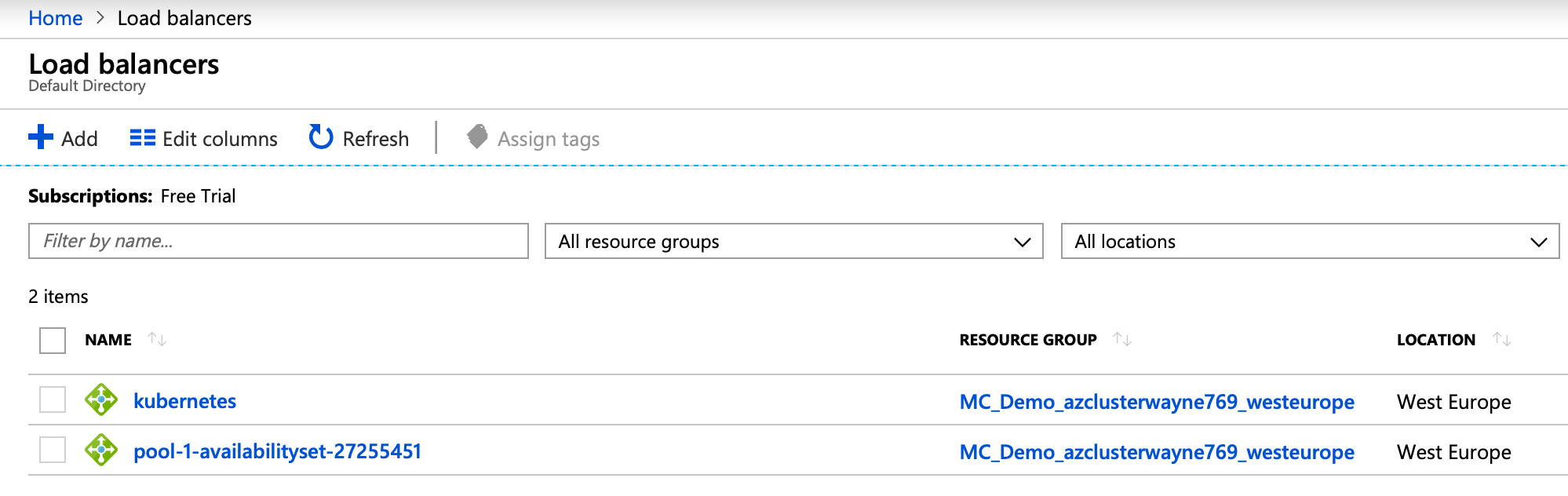

When a LoadBalancer service is defined in Kubernetes, an external load balancer is created on whatever infrastructure the cluster is running on. However, AKS only supports the Basic Azure load balancer, whose main drawback is that it supports only a single availability set or virtual machine scale set as its backend. On the AKS side, the availability set is the direct counterpart of a Pipeline nodepool.

As we can see on the provider side, the LB was created with the nodes of the system nodepool as its backends. That means external traffic only arrives at the nodes in the system nodepool; by default, kube-proxy would then spread it across the cluster, but that isn't always what we want. The discrepancy lies in the `externalTrafficPolicy` setting of `echo-service`, which controls whether a node that receives external traffic may forward it to pods on other nodes.

`service.spec.externalTrafficPolicy`: denotes if this Service desires to route external traffic to node-local or cluster-wide endpoints. There are two available options: "Cluster" (default) and "Local". "Cluster" obscures the client source IP and may cause a second hop to another node, but should have good overall load-spreading. "Local" preserves the client source IP and avoids a second hop for LoadBalancer and NodePort type services, but risks potentially imbalanced traffic spreading.
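Applied to this cluster, the difference between the two policies can be modeled in a few lines. The sketch below is a deliberate simplification, not how kube-proxy or the Azure load balancer are implemented: it reads the service's pods together with the node each one runs on, takes the nodes behind the cloud load balancer as arguments, and prints the pods that external traffic can reach. `reachable_pods` is a hypothetical name, and health checks, weighting, and unready endpoints are ignored.

```shell
# Simplified model: which pods can external traffic reach through the cloud LB?
# stdin: "POD NODE" lines for the service's endpoints.
# Usage: reachable_pods POLICY BACKEND_NODE...
reachable_pods() {
  local policy="$1" pod node
  shift
  local backends=" $* "   # space-padded list for a simple membership test
  case "$policy" in
    Cluster|Local) ;;
    *) echo "unknown externalTrafficPolicy: $policy" >&2; return 1 ;;
  esac
  while read -r pod node; do
    [ -n "$pod" ] || continue
    if [ "$policy" = Cluster ]; then
      # kube-proxy on a backend node may forward to any endpoint in the
      # cluster (possibly a second hop), so every pod is reachable.
      echo "$pod"
    else
      # Local: a backend node only uses endpoints running on itself, so a pod
      # is reachable only if its node is one of the LB backends.
      case "$backends" in
        *" $node "*) echo "$pod" ;;
      esac
    fi
  done
}
```

Fed with the pod listing above and the system node as the only backend, `reachable_pods Local aks-system-27255451-0` prints only `echo-deployment-78dc57c76d-58mz5`, which is what the first traffic test showed; with `Cluster`, all three pods are printed. On a live cluster, the input lines could come from `kubectl get pods -l app=echo-pod -o custom-columns=POD:.metadata.name,NODE:.spec.nodeName --no-headers`.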

Let's check the echo-service spec

```shell
$ kubectl get svc/echo-service -o yaml
apiVersion: v1
kind: Service
...
spec:
  externalTrafficPolicy: Local
  ...
status:
  loadBalancer:
    ingress:
    - ip: 13.93.24.195
```

What happens if we change the traffic policy to Cluster?
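One way to switch an existing service to `Cluster` is a merge patch on `spec.externalTrafficPolicy`, a field that can be changed in place. The sketch below wraps `kubectl patch`; `set_traffic_policy` and the `KUBECTL` environment override (useful for printing the command instead of running it) are hypothetical conveniences, not something Pipeline provides.

```shell
# Switch a service's externalTrafficPolicy with a merge patch.
# Set KUBECTL=echo to print the kubectl invocation instead of running it.
set_traffic_policy() {
  local svc="$1" policy="$2"
  case "$policy" in
    Cluster|Local) ;;
    *) echo "externalTrafficPolicy must be Cluster or Local, got: $policy" >&2
       return 1 ;;
  esac
  "${KUBECTL:-kubectl}" patch svc "$svc" --type merge \
    -p "{\"spec\":{\"externalTrafficPolicy\":\"$policy\"}}"
}
```

`set_traffic_policy echo-service Cluster` switches the service to cluster-wide routing, and `set_traffic_policy echo-service Local` reverts it.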

```shell
$ for i in $(seq 1 100); do curl -s http://13.93.24.195 | grep -i "hostname: " | cut -d ' ' -f 2; done | sort | uniq -c
     36 echo-deployment-78dc57c76d-58mz5
     33 echo-deployment-78dc57c76d-fpwkf
     31 echo-deployment-78dc57c76d-v2brg
```

This results in much better load balancing, but we lose the original source IP of the requests.

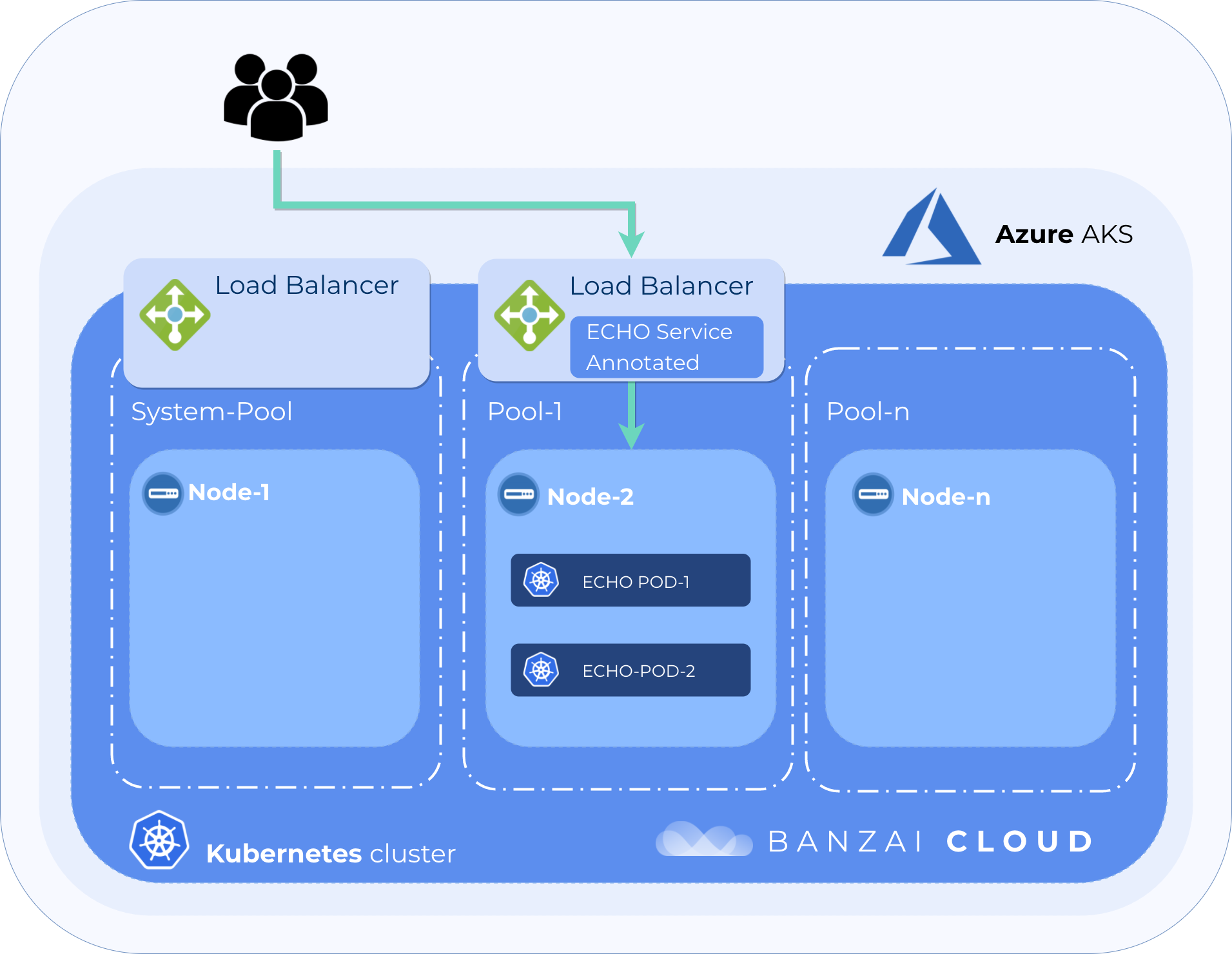

To preserve the source IP, we have to schedule our pods onto a specific nodepool and also create the load balancer service so that it uses the same nodepool as its backend.
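Before touching the load balancer, it helps to confirm the first condition: every pod of the service runs in the intended nodepool. A minimal sketch, assuming AKS node names follow the `aks-<pool>-<suffix>-<index>` pattern seen in the listings above (on Pipeline clusters the `nodepool.banzaicloud.io/name` node label is the more reliable source); `nodepool_of_node` and `pods_outside_pool` are hypothetical helpers.

```shell
# Derive the nodepool name from an AKS node name, e.g. aks-pool-1-27255451-0 -> pool-1.
# Assumes the aks-<pool>-<suffix>-<index> naming pattern; pool names may contain dashes.
nodepool_of_node() {
  local name="${1#aks-}"   # drop the "aks-" prefix
  name="${name%-*}"        # drop the instance index
  name="${name%-*}"        # drop the numeric suffix
  echo "$name"
}

# stdin: "POD NODE" lines. Prints every pod that is not running in the given nodepool.
pods_outside_pool() {
  local pool="$1" pod node
  while read -r pod node; do
    [ -n "$pod" ] || continue
    if [ "$(nodepool_of_node "$node")" != "$pool" ]; then
      echo "$pod"
    fi
  done
}
```

Piping `kubectl get pods -l app=echo-pod -o custom-columns=POD:.metadata.name,NODE:.spec.nodeName --no-headers` into `pods_outside_pool pool-1` should print nothing once all pods are in `pool-1`; against the original deployment it would print `echo-deployment-78dc57c76d-58mz5`.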

Use node affinity to make sure our pods are scheduled onto a node in a specific nodepool

Extend the deployment spec with an affinity rule to make sure the pods are scheduled onto nodes in the pool-1 nodepool.

```yaml
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      - matchExpressions:
        - key: nodepool.banzaicloud.io/name
          operator: In
          values:
          - "pool-1"
```

```
NAME                               READY   STATUS    IP            NODE
echo-deployment-575658b97c-5tvfb   1/1     Running   10.244.0.20   aks-pool-1-27255451-0
echo-deployment-575658b97c-lxn4h   1/1     Running   10.244.0.19   aks-pool-1-27255451-0
echo-deployment-575658b97c-nmgmn   1/1     Running   10.244.0.21   aks-pool-1-27255451-0
```

Use the AKS-specific LoadBalancer service annotation to specify which nodepool to use as the backend availabilitySet

As we mentioned earlier, the Pipeline nodepools are directly mapped onto Azure availability sets.

Fortunately, Azure provides a specific annotation (`service.beta.kubernetes.io/azure-load-balancer-mode`) that allows us to specify which availability set to use as the backend for a given load balancer.
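The annotation takes an availability set name, not a nodepool name. In this post's example the names line up as `aks-pool-1-27255451-0` (node) and `pool-1-availabilitySet-27255451` (availability set). Assuming that pattern holds on your cluster (verify it in the Azure portal or with `az vm availability-set list`), the value can be derived from a node name. `availability_set_for_node` is a hypothetical helper.

```shell
# Build an azure-load-balancer-mode value from an AKS node name.
# Assumption: nodes are named aks-<pool>-<suffix>-<index> and availability sets
# <pool>-availabilitySet-<suffix>, as in this post's example.
availability_set_for_node() {
  local name="${1#aks-}"       # pool-1-27255451-0
  name="${name%-*}"            # pool-1-27255451 (instance index dropped)
  local suffix="${name##*-}"   # 27255451
  local pool="${name%-*}"      # pool-1
  echo "${pool}-availabilitySet-${suffix}"
}
```

`availability_set_for_node aks-pool-1-27255451-0` prints `pool-1-availabilitySet-27255451`, the value used in the manifest that follows.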

```shell
# remove `echo-service`
$ kubectl delete svc/echo-service
```

```yaml
# add the annotation to the `echo-service` spec (echo-service.yml)
apiVersion: v1
kind: Service
metadata:
  name: echo-service
  annotations:
    service.beta.kubernetes.io/azure-load-balancer-mode: "pool-1-availabilitySet-27255451"
spec:
  # externalTrafficPolicy is only valid for LoadBalancer and NodePort services
  type: LoadBalancer
  externalTrafficPolicy: Local
  selector:
    app: echo-pod
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 8080
```

```shell
# apply `echo-service`
$ kubectl apply -f echo-service.yml

# check the service
$ kubectl get svc/echo-service
NAME           TYPE           CLUSTER-IP     EXTERNAL-IP     PORT(S)        AGE
echo-service   LoadBalancer   10.0.189.194   40.115.57.212   80:31250/TCP   3m
```

On the provider side, another load balancer has been deployed for pool-1, just as we specified. So far so good.

Let's send some traffic again

```shell
$ for i in $(seq 1 100); do curl -s http://40.115.57.212 | grep -i "hostname: " | cut -d ' ' -f 2; done | sort | uniq -c
     36 echo-deployment-78dc57c76d-58mz5
     33 echo-deployment-78dc57c76d-fpwkf
     31 echo-deployment-78dc57c76d-v2brg
```

Using node affinity and the service annotation, the endpoint now properly balances the traffic without losing the source IP of requests.
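The `sort | uniq -c` summary is easy to eyeball with three pods, but a pod that never answers simply doesn't appear in it, as happened to the two `pool-1` pods in the first test. A small check makes that explicit; `unreached_pods` is a hypothetical helper that reads the summary on stdin and prints every expected pod with zero responses.

```shell
# stdin: "COUNT POD" lines as produced by `sort | uniq -c`.
# Arguments: the pods expected to receive traffic.
# Prints each expected pod that does not appear in the summary.
unreached_pods() {
  local counts pod
  counts=$(awk '{ print $2 }')   # keep only the pod names
  for pod in "$@"; do
    if ! printf '%s\n' "$counts" | grep -qxF -- "$pod"; then
      echo "$pod"
    fi
  done
}
```

For example, appending `| unreached_pods $(kubectl get pods -l app=echo-pod -o jsonpath='{.items[*].metadata.name}')` to the traffic loop prints nothing when every pod received at least one response.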

Takeaway

Although the managed Kubernetes services from various providers are built on the same core component, there are important differences in how each cloud provider integrates Kubernetes with its other service offerings. This issue clearly demonstrates how important it is to understand how things work under the hood.

About Banzai Cloud Pipeline

Banzai Cloud's Pipeline provides a platform for enterprises to develop, deploy, and scale container-based applications. It leverages best-of-breed cloud components, such as Kubernetes, to create a highly productive, yet flexible environment for developers and operations teams alike. Strong security measures (multiple authentication backends, fine-grained authorization, dynamic secret management, automated secure communications between components using TLS, vulnerability scans, static code analysis, CI/CD, and so on) are default features of the Pipeline platform.