Creating Kubernetes clusters in the cloud and deploying applications to them (by hand or through CI/CD) is not always simple. There are a few conventional options, but each is tied to a specific cloud or distribution. While working on our open source Pipeline Platform, we needed a solution that covered the following (a representative, not exhaustive, list of requirements):

- provisioning of Kubernetes clusters on all major cloud providers (via REST, UI and CLI) using a unified interface

- application lifecycle management (on-demand deploy, dependency management, etc.), preferably over a REST interface

- support for multi-tenancy and advanced security scenarios (app-to-app security with dynamic secrets, standards, multi-auth backends, and more)

- ability to build cross-cloud or hybrid Kubernetes environments

This post highlights the ease of creating Kubernetes clusters using the Pipeline API on the following providers:

- Amazon Web Services (AWS)

- Azure Container Service (AKS)

- Google Kubernetes Engine (GKE)

- Bring your own Kubernetes Cluster (BYOC)

Note that this is not a complete list of providers: we will open source EKS support as soon as the service is available to the public. If you need support for any of these providers, don’t hesitate to contact us.

For an overview of Pipeline, please study this diagram, which contains the main components of the Control Plane and a typical layout for a provisioned cluster.

Prerequisites 🔗︎

Pipeline Control Plane 🔗︎

Before we begin, you’ll need a running Pipeline Control Plane for launching the components/services that compose the Pipeline Platform. We practice what we preach, so the Pipeline Platform, itself, is a Kubernetes deployment. You can spin up a control plane on any cloud provider (we have automated the setup for Amazon, Azure and Google), on an existing Kubernetes cluster or, for development purposes, even Minikube.

Provider credentials 🔗︎

You will need provider specific credentials for creating secrets in Pipeline (see below).

Get Amazon credentials

If you don’t have the AWS CLI installed, please follow these instructions.

To create a new AWS secret access key and a corresponding AWS access key ID for the specified user, run the following command:

Change {{username}} to your AWS username:

aws iam create-access-key --user-name {{username}}

You should get something like:

{

"AccessKey": {

"UserName": "{{username}}",

"AccessKeyId": "EWIAJSBDOQWBSDKFJSK",

"Status": "Active",

"SecretAccessKey": "OOWSSF89sdkjf78234",

"CreateDate": "2018-05-04T13:50:07.586Z"

}

}

The relationship between variables and secret keys:

| Credential variable name | Secret variable |
|---|---|
| AccessKeyId | AWS_ACCESS_KEY_ID |
| SecretAccessKey | AWS_SECRET_ACCESS_KEY |
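The mapping in the table can be applied mechanically. The sketch below assumes the AWS CLI's standard `--query` and `--output text` options, which print the two selected fields tab-separated on one line. The function name `aws_secret_values` is hypothetical and only illustrates the renaming.

```shell
# Hypothetical helper: reads "AccessKeyId<TAB>SecretAccessKey" on stdin and
# prints both values under the names used by Pipeline's amazon secret.
aws_secret_values() {
  # read splits on whitespace (tabs included); neither value contains spaces
  read -r access_key_id secret_access_key
  printf 'AWS_ACCESS_KEY_ID=%s\n' "$access_key_id"
  printf 'AWS_SECRET_ACCESS_KEY=%s\n' "$secret_access_key"
}

# Usage (creates a real access key, so it is left commented out):
# aws iam create-access-key --user-name "$AWS_USER" \
#   --query 'AccessKey.[AccessKeyId,SecretAccessKey]' --output text \
#   | aws_secret_values
```

The secret access key is only returned once, at creation time, so capture the output when you run the command.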

Get Azure credentials

This step requires an Azure subscription with the AKS service enabled.

If the Azure CLI tool is not installed, please run the following commands:

$ curl -L https://aka.ms/InstallAzureCli | bash

$ exec -l $SHELL

$ az login

Create a Service Principal for the Azure Active Directory using the following command:

$ az ad sp create-for-rbac

You should get something like:

{

"appId": "1234567-1234-1234-1234-1234567890ab",

"displayName": "azure-cli-2017-08-18-19-25-59",

"name": "http://azure-cli-2017-08-18-19-25-59",

"password": "1234567-1234-1234-be18-1234567890ab",

"tenant": "1234567-1234-1234-be18-1234567890ab"

}

Run the following command to get your Azure subscription ID:

$ az account show --query id

"1234567-1234-1234-1234567890ab"

The relationship between variables and secret keys:

| Credential variable name | Secret variable |
|---|---|
| appId | AZURE_CLIENT_ID |
| password | AZURE_CLIENT_SECRET |
| tenant | AZURE_TENANT_ID |
| az account show --query id output | AZURE_SUBSCRIPTION_ID |

AKS requires a few services to be pre-registered for the subscription. You can add these through the Azure portal or by using the CLI. The required pre-registered service providers are:

- Microsoft.Compute

- Microsoft.Storage

- Microsoft.Network

- Microsoft.ContainerService

You can check registered providers with:

az provider list --query "[].{Provider:namespace, Status:registrationState}" --out table

If the above are not registered, you can add them:

az provider register --namespace Microsoft.ContainerService

az provider register --namespace Microsoft.Compute

az provider register --namespace Microsoft.Storage

az provider register --namespace Microsoft.Network

While registration runs through the necessary zones and datacenters, we suggest you sit back and have a nice hot cup of coffee. You can check the status of each individual service by running az provider show -n Microsoft.ContainerService.
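Registering and checking the four providers can be scripted as a loop. This is a minimal sketch: the function names and the `AZ_BIN` override are hypothetical (the override only exists so the loop can be dry-run with `AZ_BIN=echo`), while `--query registrationState -o tsv` relies on the Azure CLI's standard global output options.

```shell
# The four resource providers AKS needs, as listed above.
AKS_PROVIDERS="Microsoft.Compute Microsoft.Storage Microsoft.Network Microsoft.ContainerService"

# Registers every provider in the list.
register_aks_providers() {
  for ns in $AKS_PROVIDERS; do
    "${AZ_BIN:-az}" provider register --namespace "$ns"
  done
}

# Prints "<namespace> <registrationState>" for each provider.
show_aks_provider_states() {
  for ns in $AKS_PROVIDERS; do
    state=$("${AZ_BIN:-az}" provider show -n "$ns" --query registrationState -o tsv)
    printf '%s %s\n' "$ns" "$state"
  done
}
```

Run `register_aks_providers` once, then call `show_aks_provider_states` until every line reports that the provider is registered.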

Get Google credentials

If you don’t have the gcloud CLI installed, please follow these instructions.

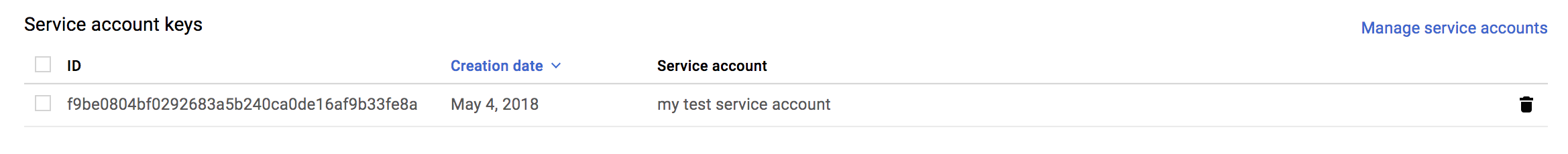

Then, follow the steps below to create a new service account:

Your new public/private key pair will be generated and downloaded to your machine. It serves as the only copy of your private key. You are responsible for storing that key securely.

If the service account was successfully created, your key.json file should look something like:

{

"type": "service_account",

"project_id": "colin-pipeline",

"private_key_id": "1234567890123456",

"private_key": "-----BEGIN PRIVATE KEY-----\nyourprivatekey\n-----END PRIVATE KEY-----\n",

"client_email": "mytestserviceaccount@colin-pipeline.iam.gserviceaccount.com",

"client_id": "1234567890123456",

"auth_uri": "https://accounts.google.com/o/oauth2/auth",

"token_uri": "https://accounts.google.com/o/oauth2/token",

"auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",

"client_x509_cert_url": "https://www.googleapis.com/robot/v1/metadata/x509/mytestserviceaccount%40colin-pipeline.iam.gserviceaccount.com"

}

You should check on your new service account in the Google Cloud Console.

The JSON received should be exactly what you’ll send to Pipeline later.
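Because the key file is sent unchanged, the secret request body can be assembled by wrapping the file's contents. The sketch below uses the name/type/values layout of the GKE secret request shown later in this post; the helper name `google_secret_body` is hypothetical, and the secret name must not contain double quotes because no JSON escaping is done.

```shell
# Hypothetical helper: wraps a service account key file in a secret request body.
# $1 = secret name (no double quotes), $2 = path to key.json
google_secret_body() {
  printf '{"name": "%s", "type": "google", "values": %s}\n' "$1" "$(cat "$2")"
}

# Usage (url, orgId and token stand for the placeholders used in this post):
# google_secret_body "My google secret" key.json | curl -g --request POST \
#   --url "http://$url/api/v1/orgs/$orgId/secrets" \
#   --header "Authorization: Bearer $token" \
#   --header 'Content-Type: application/json' \
#   -d @-
```

Passing the body on stdin with `-d @-` keeps the private key out of the shell history and the process list.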

Create cluster 🔗︎

The current version of Pipeline also supports cluster creation with profiles.

The easiest way to create a Kubernetes cluster on one of the supported cloud providers is by using the REST API, which is also available as a Postman collection.

Endpoints to be used:

- Create cluster (POST): {{url}}/api/v1/orgs/{{orgId}}/clusters

- Get cluster status (GET): {{url}}/api/v1/orgs/{{orgId}}/clusters/{{cluster_id}}

- Get cluster config (GET): {{url}}/api/v1/orgs/{{orgId}}/clusters/{{cluster_id}}/config

- API endpoint (GET): {{url}}/api/v1/orgs/{{orgId}}/clusters/{{cluster_id}}/apiendpoint

- Create secret (POST): {{url}}/api/v1/orgs/{{orgId}}/secrets

- Create profile (POST): {{url}}/api/v1/orgs/{{orgId}}/profiles/cluster
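All of these endpoints share the same prefix, so a small helper can build them when scripting. This is only a sketch: `pipeline_url` is a hypothetical name, and the base URL is assumed to include the scheme.

```shell
# Hypothetical helper: builds a Pipeline API URL.
# $1 = base URL (with scheme), $2 = organization ID, $3 = path below the organization
pipeline_url() {
  printf '%s/api/v1/orgs/%s/%s\n' "$1" "$2" "$3"
}

# Examples (localhost:9090, organization 1 and cluster 75 are made-up values):
# pipeline_url http://localhost:9090 1 clusters
# pipeline_url http://localhost:9090 1 clusters/75/config
```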

Create secrets 🔗︎

Before you start, you’ll need to create secrets via Pipeline. Note that we place a great deal of emphasis on security, and store all credentials and sensitive information in Vault. These secrets are never available as environment variables, placed in files, etc. They are securely stored and retrieved from Vault using plugins and exchanged between applications or APIs behind the scenes. All heavy lifting pertaining to Vault is done by our open source project, Bank-Vaults.

For the purposes of this blog, we will need three types of secrets:

- AWS secrets

- AKS secrets

- GKE secrets

Pipeline stores these secrets in Vault; you can then use them by referring to their secret_id.

The response to each request contains a secret ID.

The following sections show a sample cURL request for each secret type.

AWS secret 🔗︎

curl -g --request POST \

--url 'http://{{url}}/api/v1/orgs/{{orgId}}/secrets' \

--header 'Authorization: Bearer {{token}}' \

--header 'Content-Type: application/json' \

-d '{

"name": "My amazon secret",

"type": "amazon",

"values": {

"AWS_ACCESS_KEY_ID": "{{AWS_ACCESS_KEY_ID}}",

"AWS_SECRET_ACCESS_KEY": "{{AWS_SECRET_ACCESS_KEY}}"

}

}'

AKS secret 🔗︎

curl -g --request POST \

--url 'http://{{url}}/api/v1/orgs/{{orgId}}/secrets' \

--header 'Authorization: Bearer {{token}}' \

--header 'Content-Type: application/json' \

-d '{

"name": "My azure secret",

"type": "azure",

"values": {

"AZURE_CLIENT_ID": "{{AZURE_CLIENT_ID}}",

"AZURE_CLIENT_SECRET": "{{AZURE_CLIENT_SECRET}}",

"AZURE_TENANT_ID": "{{AZURE_TENANT_ID}}",

"AZURE_SUBSCRIPTION_ID": "{{AZURE_SUBSCRIPTION_ID}}"

}

}'

GKE secret 🔗︎

curl -g --request POST \

--url 'http://{{url}}/api/v1/orgs/{{orgId}}/secrets' \

--header 'Authorization: Bearer {{token}}' \

--header 'Content-Type: application/json' \

-d '{

"name": "My google secret",

"type": "google",

"values": {

"type": "{{gke_type}}",

"project_id": "{{gke-projectId}}",

"private_key_id": "{{private_key_id}}",

"private_key": "{{private_key}}",

"client_email": "{{client_email}}",

"client_id": "{{client_id}}",

"auth_uri": "{{auth_uri}}",

"token_uri": "{{token_uri}}",

"auth_provider_x509_cert_url": "{{auth_provider_x509_cert_url}}",

"client_x509_cert_url": "{{client_x509_cert_url}}"

}

}'

Create an AWS EC2 cluster 🔗︎

Here is a sample cURL request that will create an Amazon cluster with two nodePools:

curl -g --request POST \

--url 'http://{{url}}/api/v1/orgs/{{orgId}}/clusters' \

--header 'Authorization: Bearer {{token}}' \

--header 'Content-Type: application/json' \

-d '{

"name":"awscluster-{{username}}-{{$randomInt}}",

"location": "eu-west-1",

"cloud": "amazon",

"secret_id": "{{secret_id}}",

"nodeInstanceType": "m4.xlarge",

"properties": {

"amazon": {

"nodePools": {

"pool1": {

"instanceType": "m4.xlarge",

"spotPrice": "0.2",

"minCount": 1,

"maxCount": 2,

"image": "ami-16bfeb6f"

},

"pool2": {

"instanceType": "m4.xlarge",

"spotPrice": "0.2",

"minCount": 3,

"maxCount": 5,

"image": "ami-16bfeb6f"

}

},

"master": {

"instanceType": "m4.xlarge",

"image": "ami-16bfeb6f"

}

}

}

}'

If the cluster was created successfully, you’ll see something like this after calling the Get cluster status endpoint:

{

"status": "RUNNING",

"status_message": "Cluster is running",

"name": "awscluster-colin-227",

"location": "eu-west-1",

"cloud": "amazon",

"id": 75,

"nodePools": {

"pool1": {

"instance_type": "m4.xlarge",

"spot_price": "0.2",

"min_count": 1,

"max_count": 2,

"image": "ami-16bfeb6f"

},

"pool2": {

"instance_type": "m4.xlarge",

"spot_price": "0.2",

"min_count": 3,

"max_count": 5,

"image": "ami-16bfeb6f"

}

}

}

If the status field is ERROR, the status_message explains what caused the error.

To get the cluster config, use the Get cluster config endpoint.

To get the cluster’s API endpoint, use the API endpoint endpoint.
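Since cluster creation takes a while, the status check can be automated. The sketch below polls the Get cluster status endpoint until the status is RUNNING or ERROR. Both function names are hypothetical, the `sed` pattern is a rough stand-in for a real JSON parser that assumes the response layout shown above, and any other status value is simply treated as "keep waiting" because intermediate states are not listed in this post.

```shell
# Hypothetical helper: extracts the "status" value from a status response on stdin.
# "status_message" is not matched because the pattern requires the closing quote.
cluster_status() {
  sed -n 's/.*"status": *"\([^"]*\)".*/\1/p' | head -n 1
}

# Polls every 30 seconds; returns 0 on RUNNING and 1 on ERROR (no timeout).
# $1 = full Get cluster status URL, $2 = bearer token
wait_for_cluster() {
  while :; do
    status=$(curl -s --header "Authorization: Bearer $2" "$1" | cluster_status)
    case "$status" in
      RUNNING) return 0 ;;
      ERROR) return 1 ;;
    esac
    sleep 30
  done
}
```

In a script, `wait_for_cluster "$status_url" "$token" && kubectl get nodes` would only list nodes once the cluster is up, provided the kubeconfig has already been fetched.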

The kubectl get nodes result will be:

NAME STATUS ROLES AGE VERSION

ip-10-0-0-41.eu-west-1.compute.internal Ready master 5m v1.9.5

ip-10-0-100-33.eu-west-1.compute.internal Ready <none> 4m v1.9.5

ip-10-0-101-48.eu-west-1.compute.internal Ready <none> 4m v1.9.5

ip-10-0-101-85.eu-west-1.compute.internal Ready <none> 4m v1.9.5

Create an Azure AKS cluster 🔗︎

Here is a sample cURL request to create an Azure cluster with two nodePools:

curl -g --request POST \

--url 'http://{{url}}/api/v1/orgs/{{orgId}}/clusters' \

--header 'Authorization: Bearer {{token}}' \

--header 'Content-Type: application/json' \

-d '{

"name":"azcluster{{username}}{{$randomInt}}",

"location": "westeurope",

"cloud": "azure",

"secret_id": "{{secret_id}}",

"nodeInstanceType": "Standard_B2ms",

"properties": {

"azure": {

"resourceGroup": "{{az-resource-group}}",

"kubernetesVersion": "1.9.2",

"nodePools": {

"pool1": {

"count": 1,

"nodeInstanceType": "Standard_B2ms"

},

"pool2": {

"count": 1,

"nodeInstanceType": "Standard_B2ms"

}

}

}

}

}'

If the cluster was created successfully, you’ll see something like this after calling the Get cluster status endpoint:

{

"status": "RUNNING",

"status_message": "Cluster is running",

"name": "azclustercolin316",

"location": "westeurope",

"cloud": "azure",

"id": 76,

"nodePools": {

"pool1": {

"count": 1,

"instance_type": "Standard_B2ms"

},

"pool2": {

"count": 1,

"instance_type": "Standard_B2ms"

}

}

}

If the status field is ERROR, the status_message explains what caused the error.

To get the cluster config, use the Get cluster config endpoint.

To get the cluster’s API endpoint, use the API endpoint endpoint.

The kubectl get nodes result should be:

NAME STATUS ROLES AGE VERSION

aks-pool1-27255451-0 Ready agent 33m v1.9.2

aks-pool2-27255451-0 Ready agent 33m v1.9.2

Create a Google GKE cluster 🔗︎

Here is a sample cURL request to create a Google cluster with two nodePools:

curl -g --request POST \

--url 'http://{{url}}/api/v1/orgs/{{orgId}}/clusters' \

--header 'Authorization: Bearer {{token}}' \

--header 'Content-Type: application/json' \

-d '{

"name":"gkecluster-{{username}}-{{$randomInt}}",

"location": "us-central1-a",

"cloud": "google",

"nodeInstanceType": "n1-standard-1",

"secret_id": "{{secret_id}}",

"properties": {

"google": {

"master":{

"version":"1.9"

},

"nodeVersion":"1.9",

"nodePools": {

"pool1": {

"count": 1,

"nodeInstanceType": "n1-standard-2"

},

"pool2": {

"count": 2,

"nodeInstanceType": "n1-standard-2"

}

}

}

}

}'

If the cluster was created successfully, you will see something like this after calling the Get cluster status endpoint:

{

"status": "RUNNING",

"status_message": "Cluster is running",

"name": "gkecluster-colin-811",

"location": "us-central1-a",

"cloud": "google",

"id": 79,

"nodePools": {

"pool1": {

"count": 1,

"instance_type": "n1-standard-2"

},

"pool2": {

"count": 2,

"instance_type": "n1-standard-2"

}

}

}

If the status field is ERROR, the status_message explains what caused the error.

To get the cluster config, use the Get cluster config endpoint.

To get the cluster’s API endpoint, use the API endpoint endpoint.

The kubectl get nodes result should be:

NAME STATUS ROLES AGE VERSION

gke-gkecluster-colin-811-pool1-67b39b0b-v3n3 Ready <none> 15m v1.9.6-gke.1

gke-gkecluster-colin-811-pool2-73b01f4f-chm4 Ready <none> 7m v1.9.6-gke.1

gke-gkecluster-colin-811-pool2-73b01f4f-rkkz Ready <none> 15m v1.9.6-gke.1

Create cluster(s) with profiles 🔗︎

To simplify the request bodies, Pipeline supports cluster creation with profiles. When you create a cluster from a profile, (almost) every input field comes from the profile, and the only new required field is profile_name. Together with cloud, this field identifies which profile to use. Profiles can be reused at any time without changes.

If you don’t want to create a profile of your own but still want to try this out, Pipeline offers a default profile for every provider. In that case, simply set profile_name to default.

- AWS cluster creation with profile:

curl -g --request POST \
--url 'http://{{url}}/api/v1/orgs/{{orgId}}/clusters' \
--header 'Authorization: Bearer {{token}}' \
--header 'Content-Type: application/json' \
-d '{
  "name": "awscluster-{{username}}-{{$randomInt}}",
  "secret_id": "{{secret_id}}",
  "cloud": "amazon",
  "profile_name": "default"
}'

- AKS cluster creation with profile:

curl -g --request POST \
--url 'http://{{url}}/api/v1/orgs/{{orgId}}/clusters' \
--header 'Authorization: Bearer {{token}}' \
--header 'Content-Type: application/json' \
-d '{
  "name": "azcluster{{username}}{{$randomInt}}",
  "secret_id": "{{secret_id}}",
  "cloud": "azure",
  "profile_name": "default",
  "properties": {
    "azure": {
      "resourceGroup": "{{az-resource-group}}"
    }
  }
}'

- GKE cluster creation with profile:

curl -g --request POST \
--url 'http://{{url}}/api/v1/orgs/{{orgId}}/clusters' \
--header 'Authorization: Bearer {{token}}' \
--header 'Content-Type: application/json' \
-d '{
  "name": "gkecluster-{{username}}-{{$randomInt}}",
  "secret_id": "{{secret_id}}",
  "cloud": "google",
  "profile_name": "default"
}'
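The three profile-based requests differ only in the cloud value and, for Azure, the extra resourceGroup property. A minimal sketch of a body builder follows; `profile_cluster_body` is a hypothetical name, and the arguments must not contain double quotes because no JSON escaping is done.

```shell
# Hypothetical helper: builds a profile-based create-cluster request body.
# $1 = cluster name, $2 = secret_id, $3 = cloud (amazon, azure or google),
# $4 = profile name (falls back to "default"),
# $5 = Azure resource group (only read when cloud is azure)
profile_cluster_body() {
  profile=${4:-default}
  if [ "$3" = "azure" ]; then
    printf '{"name": "%s", "secret_id": "%s", "cloud": "%s", "profile_name": "%s", "properties": {"azure": {"resourceGroup": "%s"}}}\n' \
      "$1" "$2" "$3" "$profile" "$5"
  else
    printf '{"name": "%s", "secret_id": "%s", "cloud": "%s", "profile_name": "%s"}\n' \
      "$1" "$2" "$3" "$profile"
  fi
}
```

The output can be piped to cURL with `-d @-` against the Create cluster endpoint, using the same headers as the requests above.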

How to create AWS profiles 🔗︎

Here is a sample cURL request for creating an AWS profile:

curl -g --request POST \

--url 'http://{{url}}/api/v1/orgs/{{orgId}}/profiles/cluster' \

--header 'Authorization: Bearer {{token}}' \

--header 'Content-Type: application/json' \

-d '{

"name": "{{profile_name}}",

"location": "eu-west-1",

"cloud": "amazon",

"properties": {

"amazon": {

"master": {

"instanceType": "m4.xlarge",

"image": "ami-16bfeb6f"

},

"nodePools": {

"pool1": {

"instanceType": "m4.xlarge",

"spotPrice": "0.2",

"minCount": 1,

"maxCount": 2,

"image": "ami-16bfeb6f"

},

"pool2": {

"instanceType": "m4.xlarge",

"spotPrice": "0.2",

"minCount": 3,

"maxCount": 5,

"image": "ami-16bfeb6f"

}

}

}

}

}'

How to create an AKS profile 🔗︎

Send a request to the Create profile endpoint with the following body:

curl -g --request POST \

--url 'http://{{url}}/api/v1/orgs/{{orgId}}/profiles/cluster' \

--header 'Authorization: Bearer {{token}}' \

--header 'Content-Type: application/json' \

-d '{

"name": "{{profile_name}}",

"location": "westeurope",

"cloud": "azure",

"properties": {

"azure": {

"kubernetesVersion": "1.9.2",

"nodePools": {

"pool1": {

"count": 1,

"nodeInstanceType": "Standard_D2_v2"

},

"pool2": {

"count": 2,

"nodeInstanceType": "Standard_D2_v2"

}

}

}

}

}'

How to create a GKE profile 🔗︎

Send a request to the Create profile endpoint with the following body:

curl -g --request POST \

--url 'http://{{url}}/api/v1/orgs/{{orgId}}/profiles/cluster' \

--header 'Authorization: Bearer {{token}}' \

--header 'Content-Type: application/json' \

-d '{

"name": "{{profile_name}}",

"location": "us-central1-a",

"cloud": "google",

"properties": {

"google": {

"master":{

"version":"1.9"

},

"nodeVersion":"1.9",

"nodePools": {

"pool1": {

"count": 1,

"nodeInstanceType": "n1-standard-2"

},

"pool2": {

"count": 2,

"nodeInstanceType": "n1-standard-2"

}

}

}

}

}'

We hope this helps you spin up Kubernetes clusters quickly, on any provider, using a unified and secure interface. We’ll stop here, since this is already quite a long post. However, if you are interested in all the features we provide with the Pipeline Platform, please make sure you read our previous posts or check out our GitHub repositories.

If you’d like to learn more about Banzai Cloud, take a look at the other posts on this blog, the Pipeline, Hollowtrees and Bank-Vaults projects.