We’ve shipped quite a few Bank-Vaults releases recently, with new features arriving in both the webhook and the operator. This is no coincidence: these features were developed and implemented with the help of the community that has grown up around the project over the past two years. In this blog post, we would like to highlight and discuss some of these improvements (this is not an exhaustive list).

Docker image registry changes

ghcr.io

As of 1.4.2 we’ve begun to push all the Bank-Vaults images to the recently introduced GitHub Container Registry. One of the reasons for this is that we’ve wanted to offer a backup registry source for a long time. And, in light of the recent Docker rate-limit announcement, we thought that this might be the right time to put something like that in place.

ghcr.io will become the default repository of this project once we finish testing it. For now, you have to explicitly ask to pull images from there instead of DockerHub, as in the following example, which installs the webhook:

helm repo add banzaicloud-stable https://kubernetes-charts.banzaicloud.com

helm upgrade --install vault-secrets-webhook banzaicloud-stable/vault-secrets-webhook --set image.repository=ghcr.io/banzaicloud/vault-secrets-webhook --set vaultEnv.repository=ghcr.io/banzaicloud/vault-env --set configMapMutation=true

GCR auto-auth in webhook

We’re the beneficiaries of an excellent convenience feature for GCR Docker images from Viktor Radnai. For the webhook to mutate a container by injecting vault-env into it, it has to know the exact command and arguments that the vault-env binary must be prepended to. If those are not defined explicitly, the webhook looks for the image metadata in the given container registry. This works fine for public registries but, if you are using a private registry, the webhook needs to authenticate first. This authentication was already automatic for ECR when running on EC2 instances, and now it’s also automatic for GCR when your workload is running on GCP nodes.
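The command-resolution rule described above can be sketched in a few lines of Python. This is a simplified model for illustration, not the webhook’s actual code: the function name `resolve_command`, the shape of the `image_config` dictionary, and the `/vault/vault-env` path are all assumptions.

```python
from typing import Dict, List, Optional, Tuple

# Assumed location of the injected binary inside the mutated container.
VAULT_ENV_PATH = "/vault/vault-env"


def resolve_command(
    command: Optional[List[str]],
    args: Optional[List[str]],
    image_config: Dict[str, List[str]],
) -> Tuple[List[str], List[str]]:
    """Return the (command, args) pair of the mutated container.

    `image_config` stands in for the metadata fetched from the registry
    and is only consulted when the Pod spec defines no explicit command.
    """
    original = list(command or [])
    if not original:
        # No explicit command: fall back to the image's ENTRYPOINT ...
        original.extend(image_config.get("Entrypoint") or [])
        # ... and to its CMD, but only when no args were given either.
        if not args:
            original.extend(image_config.get("Cmd") or [])
    original.extend(args or [])
    # vault-env becomes the new command; the original process becomes its args.
    return [VAULT_ENV_PATH], original
```

In this sketch the registry lookup (and therefore registry authentication) is only needed in the branch where the Pod spec has no `command`, which is why defining the command explicitly avoids it altogether.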

ARM images

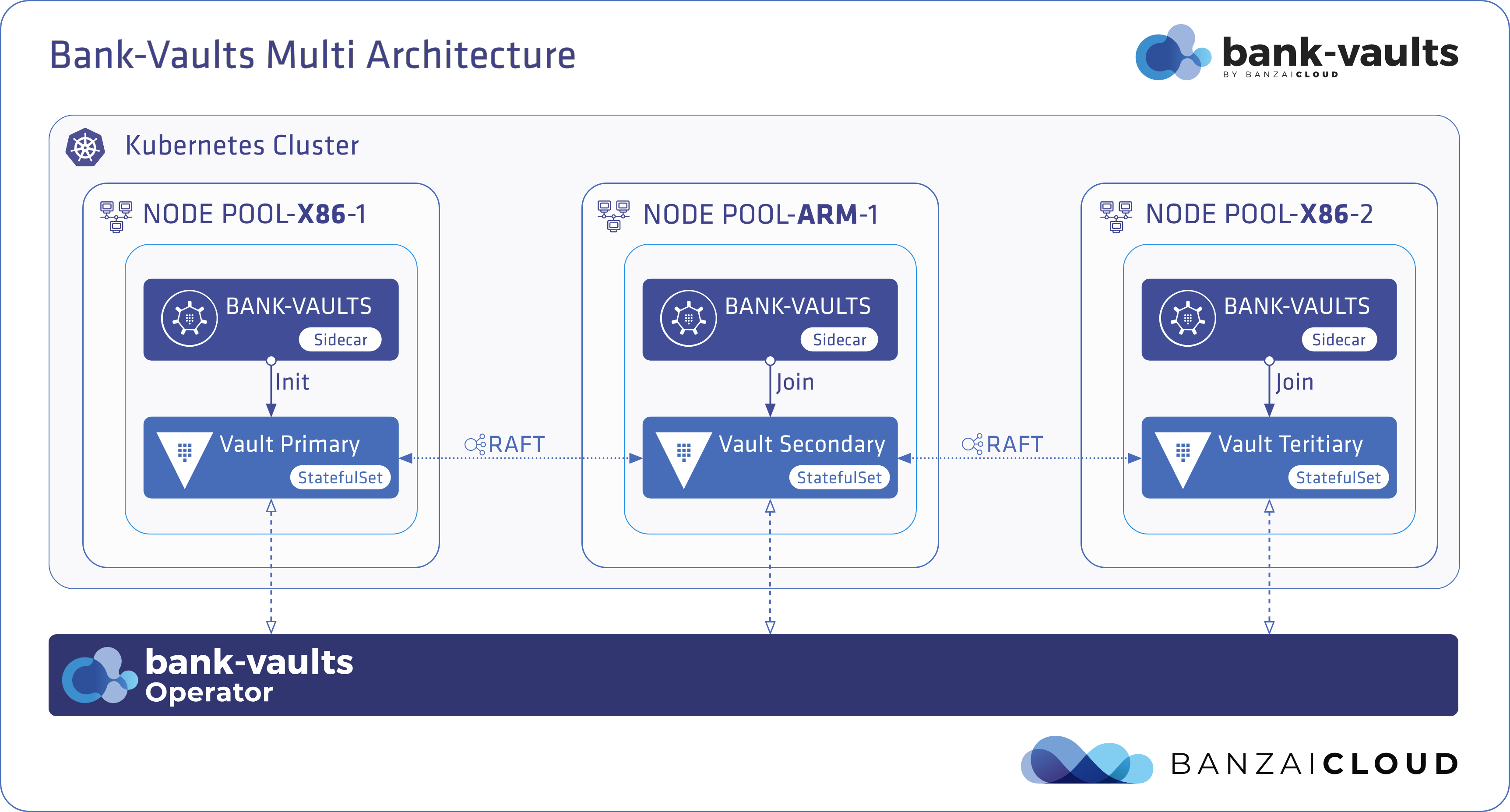

In 1.5.0 we added support for various ARM platforms (32 and 64 bit). This great idea/requirement came from Pato Arvizu, who runs Bank-Vaults on his Raspberry Pi cluster! The images pushed to ghcr.io are now multi-architecture. The ones on DockerHub aren’t yet, but we’ll soon update the build of the DockerHub images to make them multi-architecture as well.

Let’s take a look at an example of how to set up a Vault instance with the operator on a mixed-architecture EKS cluster. EKS supports moderately priced A1 ARM EC2 instances, which serve perfectly as cheap demonstration infrastructure for this post. First, we deploy a new cluster with two node pools, one with ARM and one with x86 instances, through our Try Pipeline environment and the Banzai CLI:

# login to try
banzai login -e <your-pipeline-url>/pipeline

# create an AWS secret based on your local credentials
banzai secret create -n aws -t amazon --magic

# create the cluster (secretName refers to the secret created above)
banzai cluster create <<EOF
{
  "name": "eks-arm-bank-vaults",
  "location": "us-east-2",
  "cloud": "amazon",
  "secretName": "aws",
  "properties": {
    "eks": {
      "version": "1.17.9",
      "nodePools": {
        "pool1": {
          "spotPrice": "0.1",
          "count": 2,
          "minCount": 2,
          "maxCount": 3,
          "autoscaling": true,
          "instanceType": "a1.xlarge",
          "image": "ami-05835ec0d56045247"
        },
        "pool2": {
          "spotPrice": "0.101",
          "count": 1,
          "minCount": 1,
          "maxCount": 2,
          "autoscaling": true,
          "instanceType": "c5.large"
        }
      }
    }
  }
}
EOF

Once the cluster is ready, we install the operator, using the ghcr.io images (which will be the default soon):

banzai cluster shell --cluster-name eks-arm-bank-vaults -- \
  helm upgrade --install vault-operator banzaicloud-stable/vault-operator --set image.repository=ghcr.io/banzaicloud/vault-operator --set image.bankVaultsRepository=ghcr.io/banzaicloud/bank-vaults

Now, create the Vault instance with the operator:

kubectl apply -f https://raw.githubusercontent.com/banzaicloud/bank-vaults/master/operator/deploy/rbac.yaml

kubectl apply -f https://raw.githubusercontent.com/banzaicloud/bank-vaults/master/operator/deploy/cr.yaml

To make sure your Vault instance lands on an arm64 node, edit the vault CR and add the following lines:

kubectl edit vault vault

nodeSelector:
  kubernetes.io/arch: arm64

As you can see from the outputs below, the vault-0 Pod is running on ip-192-168-68-7.us-east-2.compute.internal, which is an aarch64 (ARM 64bit) node:

kubectl get nodes -o wide

NAME                                          STATUS   ROLES    AGE     VERSION               INTERNAL-IP     EXTERNAL-IP      OS-IMAGE         KERNEL-VERSION                   CONTAINER-RUNTIME
ip-192-168-68-7.us-east-2.compute.internal    Ready    <none>   4h56m   v1.17.11-eks-cfdc40   192.168.68.7    3.15.218.216     Amazon Linux 2   4.14.198-152.320.amzn2.aarch64   docker://19.3.6
ip-192-168-68-84.us-east-2.compute.internal   Ready    <none>   4h47m   v1.17.11-eks-cfdc40   192.168.68.84   3.16.47.242      Amazon Linux 2   4.14.198-152.320.amzn2.aarch64   docker://19.3.6
ip-192-168-76-34.us-east-2.compute.internal   Ready    <none>   4h56m   v1.17.11-eks-cfdc40   192.168.76.34   18.216.230.201   Amazon Linux 2   4.14.198-152.320.amzn2.x86_64    docker://19.3.6

Some Pods are running on x86_64, and some are on aarch64 - not an issue for Bank-Vaults:

kubectl get pods -o wide

NAME                                     READY   STATUS    RESTARTS   AGE     IP               NODE                                          NOMINATED NODE   READINESS GATES
vault-0                                  3/3     Running   0          3h9m    192.168.68.157   ip-192-168-68-7.us-east-2.compute.internal    <none>           <none>
vault-configurer-5b7cd464cb-2fn5p        1/1     Running   0          3h10m   192.168.78.204   ip-192-168-68-84.us-east-2.compute.internal   <none>           <none>
vault-operator-966949459-kf6pp           1/1     Running   0          84s     192.168.73.136   ip-192-168-68-84.us-east-2.compute.internal   <none>           <none>
vault-secrets-webhook-58db77d747-6plv7   1/1     Running   0          168m    192.168.71.128   ip-192-168-68-84.us-east-2.compute.internal   <none>           <none>
vault-secrets-webhook-58db77d747-wttqg   1/1     Running   0          168m    192.168.72.95    ip-192-168-68-7.us-east-2.compute.internal    <none>           <none>

The nodeSelector mentioned previously is not required; we just used it to make sure that the Bank-Vaults container lands on an ARM node. The Docker/containerd daemon running on the node knows which architecture it’s running on and automatically pulls the right image.
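How a runtime picks “the right image” from a multi-architecture tag can be illustrated with a small Python sketch. It assumes the tag resolves to an image index (manifest list) whose entries carry a `platform` object with `os`, `architecture` and an optional `variant`; the index below is hypothetical and heavily trimmed, and the digests are placeholders rather than real Bank-Vaults digests.

```python
from typing import Any, Dict, Optional

# Hypothetical, heavily trimmed image index; the digests are placeholders.
EXAMPLE_INDEX: Dict[str, Any] = {
    "manifests": [
        {"digest": "sha256:amd64-placeholder",
         "platform": {"os": "linux", "architecture": "amd64"}},
        {"digest": "sha256:arm64-placeholder",
         "platform": {"os": "linux", "architecture": "arm64"}},
        {"digest": "sha256:armv7-placeholder",
         "platform": {"os": "linux", "architecture": "arm", "variant": "v7"}},
    ]
}


def select_manifest(
    index: Dict[str, Any],
    os_name: str,
    architecture: str,
    variant: Optional[str] = None,
) -> Optional[str]:
    """Return the digest of the first entry matching the node's platform."""
    for entry in index.get("manifests", []):
        platform = entry.get("platform", {})
        if platform.get("os") != os_name:
            continue
        if platform.get("architecture") != architecture:
            continue
        # Only compare the variant when the caller asked for a specific one.
        if variant is not None and platform.get("variant") != variant:
            continue
        return entry["digest"]
    return None  # no image published for this platform
```

Note that the index uses the name `arm64`, the same value as the `kubernetes.io/arch` node label, while the kernel on the node reports `aarch64`.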

Webhook

Multiple authentication types

Previously, the webhook offered only Kubernetes ServiceAccount-based authentication in Vault. This mechanism wasn’t configurable - an old gripe of ours - and we’d always wanted to enhance authentication support in the webhook. Plus, users have long requested AWS/GCP/JWT-based authentication. With the help of the new vault.security.banzaicloud.io/vault-auth-method annotation, users can now request different authentication types:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: vault-env-gcp-auth
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: vault-env-gcp-auth
  template:
    metadata:
      labels:
        app.kubernetes.io/name: vault-env-gcp-auth
      annotations:
        # These annotations enable Vault GCP GCE auth, see:
        # https://www.vaultproject.io/docs/auth/gcp#gce-login
        vault.security.banzaicloud.io/vault-addr: "https://vault:8200"
        vault.security.banzaicloud.io/vault-tls-secret: vault-tls
        vault.security.banzaicloud.io/vault-role: "my-role"
        vault.security.banzaicloud.io/vault-path: "gcp"
        vault.security.banzaicloud.io/vault-auth-method: "gcp-gce"
    spec:
      containers:
        - name: alpine
          image: alpine
          command:
            - "sh"
            - "-c"
            - "echo $MYSQL_PASSWORD && echo going to sleep... && sleep 10000"
          env:
            - name: MYSQL_PASSWORD
              value: vault:secret/data/mysql#MYSQL_PASSWORD

This deployment - if running on a GCP instance - will automatically receive a signed JWT from the metadata server of the cloud provider, which it will use to authenticate against Vault. The same goes for vault-auth-method: "aws-ec2", when running on an EC2 node with the right instance role.
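To make the role of the annotations concrete, here is a minimal Python sketch of how the Vault-related settings could be read from a Pod’s annotations. The helper names `vault_settings` and `requested_auth_method` are hypothetical and do not mirror the webhook’s internals; only the annotation names are taken from the example above.

```python
from typing import Dict, Optional

ANNOTATION_PREFIX = "vault.security.banzaicloud.io/"


def vault_settings(annotations: Dict[str, str]) -> Dict[str, str]:
    """Collect the Vault-related annotations, with the common prefix stripped."""
    return {
        key[len(ANNOTATION_PREFIX):]: value
        for key, value in annotations.items()
        if key.startswith(ANNOTATION_PREFIX)
    }


def requested_auth_method(annotations: Dict[str, str]) -> Optional[str]:
    """Return the requested auth method, or None when the annotation is absent.

    What happens in the None case is up to the webhook's own default,
    which this sketch deliberately does not model.
    """
    return vault_settings(annotations).get("vault-auth-method")


# The annotations of the Deployment above, as a plain dictionary.
pod_annotations = {
    "vault.security.banzaicloud.io/vault-addr": "https://vault:8200",
    "vault.security.banzaicloud.io/vault-tls-secret": "vault-tls",
    "vault.security.banzaicloud.io/vault-role": "my-role",
    "vault.security.banzaicloud.io/vault-path": "gcp",
    "vault.security.banzaicloud.io/vault-auth-method": "gcp-gce",
}
```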

Mount all keys from Vault secret to env

From Rakesh Patel, the webhook got a feature very similar to Kubernetes’ standard envFrom: construct, but instead of a Kubernetes Secret/ConfigMap, all its keys are mounted from a Vault secret with the help of the webhook and vault-env. Let’s see the vault.security.banzaicloud.io/vault-env-from-path annotation in action:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-secrets
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: hello-secrets
  template:
    metadata:
      labels:
        app.kubernetes.io/name: hello-secrets
      annotations:
        vault.security.banzaicloud.io/vault-addr: "https://vault:8200"
        vault.security.banzaicloud.io/vault-tls-secret: vault-tls
        vault.security.banzaicloud.io/vault-env-from-path: "secret/data/accounts/aws"
    spec:
      initContainers:
        - name: init-ubuntu
          image: ubuntu
          command: ["sh", "-c", "echo AWS_ACCESS_KEY_ID: $AWS_ACCESS_KEY_ID && echo initContainers ready"]
      containers:
        - name: alpine
          image: alpine
          command: ["sh", "-c", "echo AWS_SECRET_ACCESS_KEY: $AWS_SECRET_ACCESS_KEY && echo going to sleep... && sleep 10000"]

The only difference from a regular environment variable definition in the Pod’s env construct is that you won’t see the actual environment variables in the definition: the set is dynamic and based on the contents of the Vault secret, just like with envFrom:.
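The “every key becomes an environment variable” idea can be sketched as follows. This assumes the secret lives in a KV version 2 engine, where the read response nests the key/value pairs under `data.data` next to a `metadata` object; the function name `env_from_vault_response` and the sample values are made up for illustration.

```python
from typing import Any, Dict


def env_from_vault_response(response: Dict[str, Any]) -> Dict[str, str]:
    """Turn the key/value pairs of a Vault read response into env variables."""
    data = response.get("data") or {}
    # KV v2 wraps the actual pairs one level deeper, next to "metadata".
    if isinstance(data.get("data"), dict) and "metadata" in data:
        data = data["data"]
    # Environment variable values are always strings.
    return {name: str(value) for name, value in data.items()}


# Hypothetical response for secret/data/accounts/aws (placeholder values).
sample_response = {
    "data": {
        "data": {
            "AWS_ACCESS_KEY_ID": "access-key-placeholder",
            "AWS_SECRET_ACCESS_KEY": "secret-key-placeholder",
        },
        "metadata": {"version": 1},
    }
}
```

Adding a new key to the Vault secret would make a new variable appear in the container on its next start, without any change to the Deployment.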

Define multiple inline-secrets in resources

Thanks to the helpful people at AOE GmbH, we received a long-awaited addition to the webhook: it can now inject multiple secrets under the same key in a Secret/ConfigMap/Object. Originally, we offered a single Vault path defined as a value in the Secret/ConfigMap/PodEnv. Later, we extended this with templating, so you could transform the received value. Now, we offer complete support for multiple Vault paths inside a single value, as the aws-access-inline key in the following example shows:

apiVersion: v1
kind: ConfigMap
metadata:
  name: sample-configmap
  annotations:
    vault.security.banzaicloud.io/vault-addr: "https://vault.default:8200"
    vault.security.banzaicloud.io/vault-role: "default"
    vault.security.banzaicloud.io/vault-tls-secret: vault-tls
    vault.security.banzaicloud.io/vault-path: "kubernetes"
    vault.security.banzaicloud.io/inline-mutation: "true"
data:
  aws-access-key-id: "vault:secret/data/accounts/aws#AWS_ACCESS_KEY_ID"
  aws-access-template: "vault:secret/data/accounts/aws#AWS key in base64: ${.AWS_ACCESS_KEY_ID | b64enc}"
  aws-access-inline: "AWS_ACCESS_KEY_ID: ${vault:secret/data/accounts/aws#AWS_ACCESS_KEY_ID} AWS_SECRET_ACCESS_KEY: ${vault:secret/data/accounts/aws#AWS_SECRET_ACCESS_KEY}"

This example also shows how a CA certificate (created by the operator) can be used (with vault.security.banzaicloud.io/vault-tls-secret: vault-tls) to validate the TLS connection in case of a non-Pod resource, which was added in #1148.
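The inline form used by the aws-access-inline key can be thought of as a search-and-replace over `${vault:<path>#<key>}` references. The Python sketch below illustrates that idea only: the regular expression, the `inject_inline` function and the in-memory `FAKE_VAULT` store are assumptions for demonstration, and the webhook’s real parser may differ.

```python
import re
from typing import Callable, Dict

# Matches ${vault:<path>#<key>}; a simplification chosen for this sketch.
INLINE_REF = re.compile(r"\$\{vault:(?P<path>[^#}]+)#(?P<key>[^}]+)\}")

# Stand-in for Vault, holding placeholder values only.
FAKE_VAULT: Dict[str, Dict[str, str]] = {
    "secret/data/accounts/aws": {
        "AWS_ACCESS_KEY_ID": "access-key-placeholder",
        "AWS_SECRET_ACCESS_KEY": "secret-key-placeholder",
    }
}


def fake_read(path: str) -> Dict[str, str]:
    """Pretend to read a secret from Vault."""
    return FAKE_VAULT[path]


def inject_inline(value: str, read_secret: Callable[[str], Dict[str, str]]) -> str:
    """Replace every inline Vault reference in `value` with the secret's value."""

    def substitute(match: "re.Match[str]") -> str:
        secret = read_secret(match.group("path"))
        return str(secret[match.group("key")])

    return INLINE_REF.sub(substitute, value)
```

Because the pattern requires the `vault:` prefix inside the braces, a template expression such as `${.AWS_ACCESS_KEY_ID | b64enc}` is left untouched by this substitution step.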

Operator

The operator received a lot of fixes and stabilizations in recent releases. Two features that are worth mentioning:

Dropped support for Helm2

This is the removal of a feature. The shift from Helm 2 to Helm 3 was not as smooth as it might have been in the Helm community, and we also incorporated some “less than ideal” solutions to get through the transition. Because Helm 2 reaches end-of-life in the coming days, we decided to drop Helm 2 support entirely, which made our charts much cleaner and properly lintable.

CRD with proper validation

We’re close to finally implementing proper CRD validation for our Vault Custom Resource, but a blocking issue in kubernetes-sigs/controller-tools needs to be fixed first. Once the fix is merged, you’ll be able to fully validate Vault CRDs, even at the externalConfig level!

Learn more about Bank-Vaults:

- Secret injection webhook improvements

- Backing up Vault with Velero

- Vault replication across multiple datacenters

- Vault secret injection webhook and Istio

- Mutate any kind of k8s resources

- HSM support

- Injecting dynamic configuration with templates

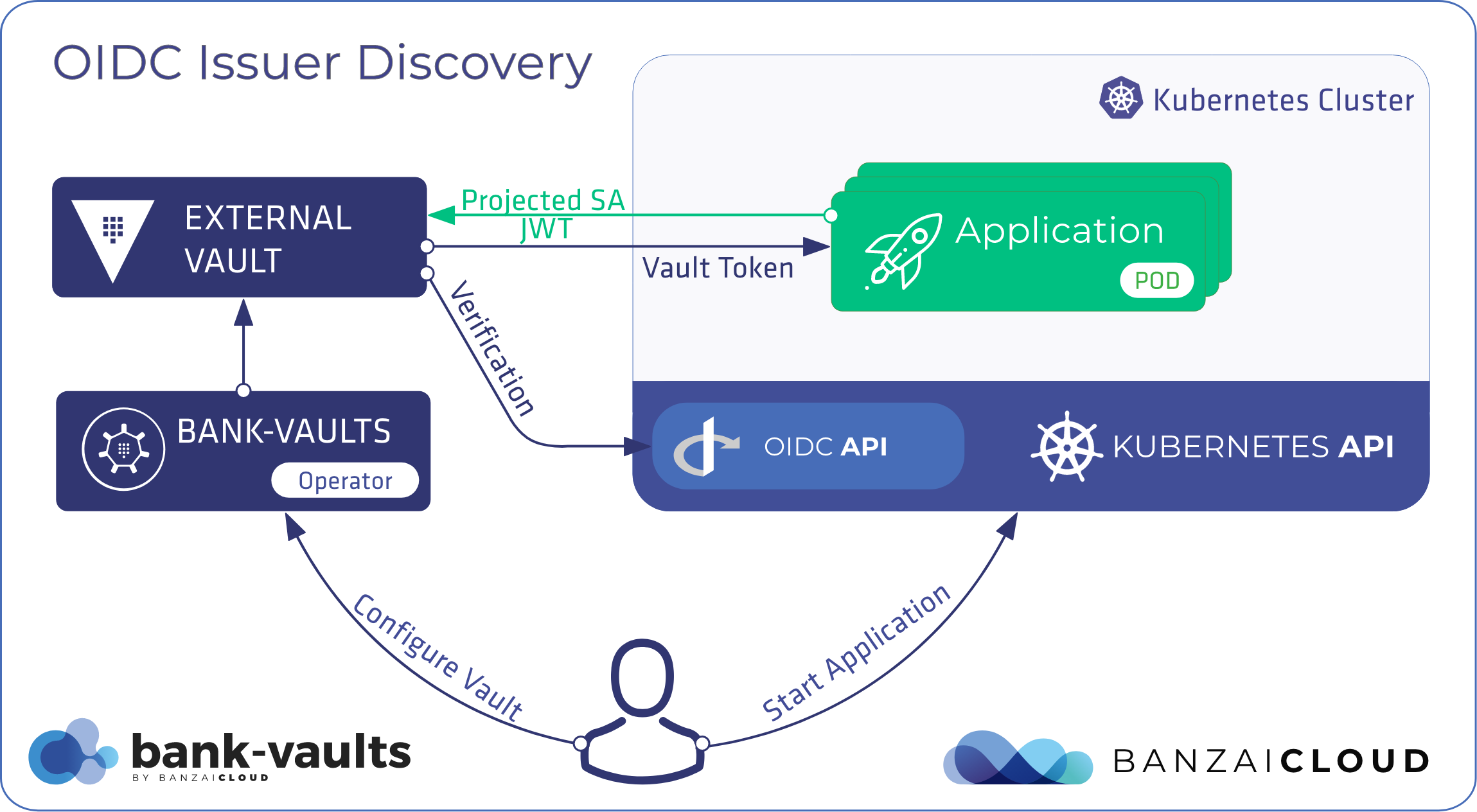

- OIDC issuer discovery for Kubernetes service accounts

- Show all posts related to Bank-Vaults