Distributed applications

Distributed applications have many definitions, but they are typically defined as applications that run on a network of multiple computers. Nowadays, that might well mean an application that runs on multiple Kubernetes clusters spread over a large geographical area. Distribution brings many benefits, such as high availability, speed, and resiliency, but it can also make developing, operating, and testing such applications tedious. The multi-DC feature of Bank-Vaults is just such a distributed application: its testing wasn’t automated, so we had to test it manually every time an important PR was merged into master. This post demonstrates how to set up a local multi-cluster testing infrastructure with proper load balancing between the clusters, and shows how we test Bank-Vaults on it.

Multi-cluster locally

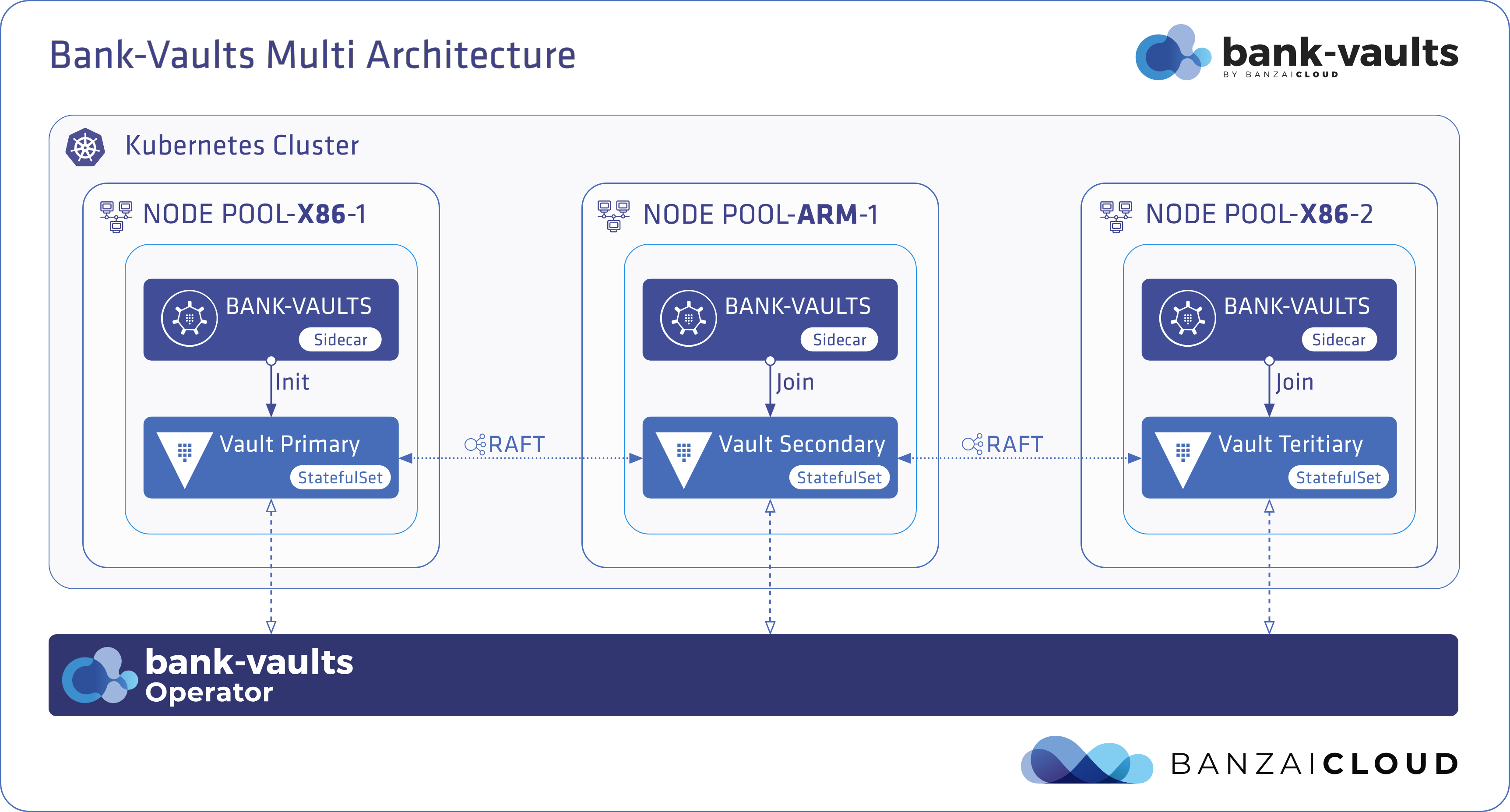

To test Kubernetes applications locally, you first need a cluster on your laptop. Many projects can run a Kubernetes cluster locally, such as minikube, kind, Docker for Mac, and k3s. We like kind the most: it is highly customizable and lightweight, and it runs in Docker itself, which opens up a lot of opportunities, as you’ll see. In this tutorial, we’ll create three Kubernetes clusters with kind, deploy Vault to each of them, and join the Vault instances into a single cluster.
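For reference, the cluster-creation step boils down to a few kind CLI calls. The following is a minimal sketch, not an excerpt from multi-dc-raft.sh: the function name `create_kind_clusters` is hypothetical, and the real script may pass extra options (for example, a custom kind configuration) that aren’t shown here.

```shell
# Hypothetical helper: create one kind cluster per name given as an argument
# (default: primary, secondary, tertiary). kind registers a kubectl context
# named "kind-<name>" for each cluster, e.g. "kind-primary".
create_kind_clusters() {
  if [ "$#" -eq 0 ]; then
    set -- primary secondary tertiary
  fi
  local name
  for name in "$@"; do
    # Same node image as in this post's installer output; adjust as needed.
    kind create cluster --name "$name" --image kindest/node:v1.19.1 || return 1
  done
}
```

Running `create_kind_clusters` with no arguments creates the three clusters, reachable afterwards through the `kind-primary`, `kind-secondary`, and `kind-tertiary` contexts.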

The plan is to deploy Vault with Bank-Vaults to these clusters and connect the Vault instances through the Raft storage backend. The process is fully automated, from creating the Kubernetes clusters until the multi-DC Vault Raft cluster reports healthy. The Raft-based Vault nodes communicate with each other through LoadBalancer-type Kubernetes Services, so a working LoadBalancer implementation is an absolute must; for that reason, we configure a MetalLB instance on each cluster. We’ve already covered how this works with on-premises clusters in our previous post about MetalLB.

Running Bank-Vaults on the multi-cluster

We’ll use the multi-dc-raft.sh script in the Bank-Vaults repository to set up the three kind-based Kubernetes clusters, install MetalLB on them, and install Bank-Vaults across the whole platform. We also use this script in the project’s continuous integration (CI) pipeline: if the script exits with status 0, the multi-cluster Raft setup is healthy.
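Since the exit status is the health signal, a CI job only has to run the script and propagate that status. Here is a minimal sketch of such a wrapper; `run_multi_dc_test` is a hypothetical name, not part of the Bank-Vaults CI configuration, and it relies only on the `install` and `uninstall` subcommands shown in this post.

```shell
# Hypothetical CI wrapper: run the multi-DC installer, always tear the
# clusters down afterwards, and return the installer's exit status
# (0 means the multi-cluster Raft setup came up healthy).
run_multi_dc_test() {
  local script="${1:-./operator/deploy/multi-dc/test/multi-dc-raft.sh}"
  local status=0
  "$script" install || status=$?
  # Tear down even if the install failed, without masking its exit status.
  "$script" uninstall || echo "warning: uninstall failed" >&2
  return "$status"
}
```

Called from the repository root, the function’s return value can fail the CI job directly, while the cleanup step keeps runners from accumulating leftover kind clusters.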

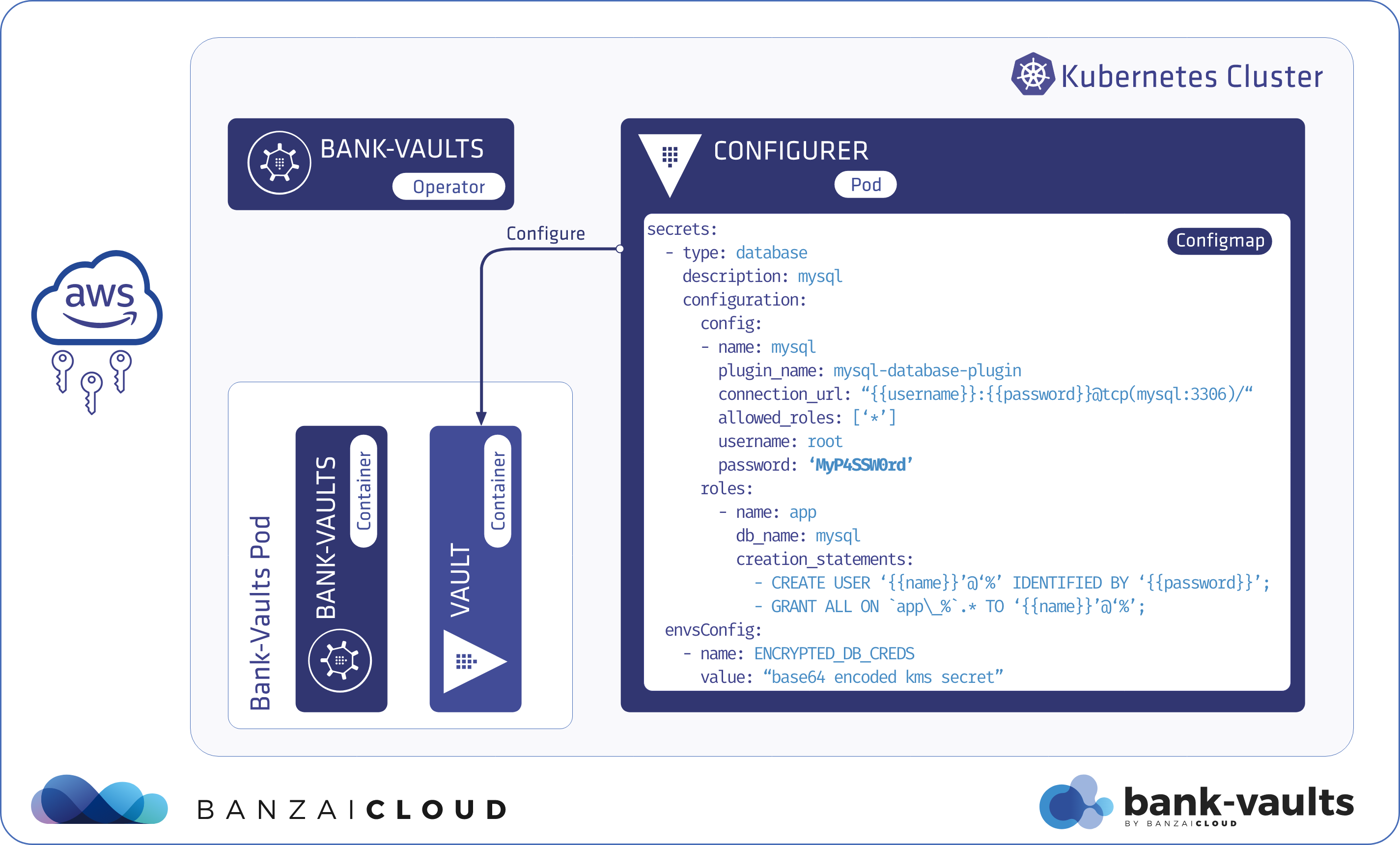

Auto-unsealing Vault clusters need their unseal keys stored (encrypted) somewhere outside the clusters. In this example, an “external” Vault instance running outside the Kubernetes clusters serves as the KMS service. kind creates its own Docker network, called kind (you can override it with the KIND_EXPERIMENTAL_DOCKER_NETWORK environment variable), and provisions the clusters on it. The setup script runs a Vault Docker container on the same kind network as the Kubernetes nodes, so they can reach each other at the network level. The three Vault instances inside the clusters then unseal themselves using this “external” instance.
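The networking side of this can be sketched with plain docker commands. This is an assumption-laden sketch, not the script’s code: `start_central_vault` is a hypothetical name, and the post doesn’t show how multi-dc-raft.sh configures the central-vault container for unsealing (tokens, secrets engines), so only the network placement is covered. The container name and the `vault` image match the `docker ps` output later in this post; newer Vault releases are published as `hashicorp/vault`.

```shell
# Hypothetical sketch: start a Vault container on the kind Docker network
# (or the one named in KIND_EXPERIMENTAL_DOCKER_NETWORK) and print its IP,
# so the kind nodes and this container can reach each other directly.
start_central_vault() {
  local network="${KIND_EXPERIMENTAL_DOCKER_NETWORK:-kind}"
  # Without extra arguments the vault image runs a dev-mode server (testing only).
  # IPC_LOCK lets Vault lock memory so that secrets aren't swapped to disk.
  docker run -d --name central-vault --network "$network" \
    --cap-add=IPC_LOCK vault >/dev/null || return 1
  # "index" is used because network names may contain '-', which the dotted
  # template syntax (.Networks.kind) can't express.
  docker inspect central-vault \
    --format "{{(index .NetworkSettings.Networks \"$network\").IPAddress}}"
}
```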

The requirements for running this test locally (and if you’re already using Kubernetes, you probably have most of them) are as follows:

- docker

- jq

- kind

- kubectl

- helm

- https://github.com/subfuzion/envtpl

- https://github.com/hankjacobs/cidr
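Before running the installer, it can help to confirm that all of these tools are on your PATH. A minimal sketch follows; `check_requirements` is a hypothetical helper, and it assumes envtpl and hankjacobs/cidr are installed under the binary names `envtpl` and `cidr`.

```shell
# Hypothetical pre-flight check: report every required tool missing from PATH.
# With no arguments it checks the requirements listed above.
check_requirements() {
  if [ "$#" -eq 0 ]; then
    set -- docker jq kind kubectl helm envtpl cidr
  fi
  local tool missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```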

Run the installer locally, after checking out the repository:

```shell
$ ./operator/deploy/multi-dc/test/multi-dc-raft.sh install
Creating cluster "primary" ...
✓ Ensuring node image (kindest/node:v1.19.1) 🖼
✓ Preparing nodes 📦
✓ Writing configuration 📜
✓ Starting control-plane 🕹️
✓ Installing CNI 🔌
✓ Installing StorageClass 💾
Set kubectl context to "kind-primary"
You can now use your cluster with:
# ...
# A few minutes later
# ...
Waiting for for tertiary vault instance...
pod/vault-tertiary-0 condition met
Multi-DC Vault cluster setup completed.
```

Now that the setup is complete, you can inspect the clusters and the Vault instances running on them, including how they connect to one another. These are the Docker containers running at the top level, directly on the host:

```shell
$ docker ps
CONTAINER ID   IMAGE                  COMMAND                  CREATED          STATUS          PORTS                       NAMES
4ce56b6efe33   vault                  "docker-entrypoint.s…"   19 minutes ago   Up 19 minutes   8200/tcp                    central-vault
af4c70d249bd   kindest/node:v1.19.1   "/usr/local/bin/entr…"   20 minutes ago   Up 20 minutes   127.0.0.1:59885->6443/tcp   tertiary-control-plane
852771794e95   kindest/node:v1.19.1   "/usr/local/bin/entr…"   21 minutes ago   Up 21 minutes   127.0.0.1:59542->6443/tcp   secondary-control-plane
f017a2d80e89   kindest/node:v1.19.1   "/usr/local/bin/entr…"   22 minutes ago   Up 22 minutes   127.0.0.1:59202->6443/tcp   primary-control-plane
```

How MetalLB works in kind

All kind Kubernetes nodes are Docker containers, and kind starts them on the same Docker network, which is called kind. Let’s take a look at the subnet of this network:

```shell
$ docker network inspect kind
...
        "IPAM": {
            "Driver": "default",
            "Options": {},
            "Config": [
                {
                    "Subnet": "172.18.0.0/16",
                    "Gateway": "172.18.0.1"
                },
...

$ docker inspect primary-control-plane --format '{{.NetworkSettings.Networks.kind.IPAddress}}'
172.18.0.2
```
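To pick up the subnet in a script rather than by reading the JSON, docker’s `--format` flag can print just the subnets. The sketch below is a hypothetical helper, not taken from multi-dc-raft.sh. Depending on the kind and Docker versions, the kind network can also carry an IPv6 subnet, so the helper skips subnets containing a colon and prints the first IPv4 one.

```shell
# Hypothetical helper: print the first IPv4 subnet of a Docker network
# (default: kind). Returns 1 if the network has no IPv4 subnet.
kind_ipv4_subnet() {
  local network="${1:-kind}" subnet
  # One word per subnet configured on the network (IPv4 and/or IPv6).
  for subnet in $(docker network inspect "$network" \
      --format '{{range .IPAM.Config}}{{.Subnet}} {{end}}'); do
    case "$subnet" in
      *:*) ;;                      # IPv6 subnets contain ':'; skip them
      *) echo "$subnet"; return 0 ;;
    esac
  done
  return 1
}
```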

172.18.0.0/16 is a lot of addresses: 65,536 of them. The three nodes received their addresses from this CIDR, and the test script allocates three more ranges from the same Docker subnet, one for each of the three MetalLB controllers, which manage the addresses in their corresponding clusters:

- 172.18.1.128/25 (primary)

- 172.18.2.128/25 (secondary)

- 172.18.3.128/25 (tertiary)
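The pattern behind these ranges: cluster number N gets the upper half (.128 to .255, 128 addresses) of 172.18.N.0, so none of them overlaps 172.18.0.x, where node addresses such as 172.18.0.2 live. The post doesn’t show how multi-dc-raft.sh computes the ranges (it lists hankjacobs/cidr as a dependency), so the helper below is a hypothetical, simplified reconstruction that only handles /16 subnets.

```shell
# Hypothetical helper: for cluster number INDEX (1 = primary, 2 = secondary,
# 3 = tertiary) in a /16 Docker subnet, print the MetalLB range
# a.b.INDEX.128/25, which covers a.b.INDEX.128 to a.b.INDEX.255.
metallb_range() {
  local subnet="$1" index="$2"
  local base="${subnet%/*}"        # e.g. 172.18.0.0
  local prefix_len="${subnet#*/}"  # e.g. 16
  case "$index" in
    ''|*[!0-9]*) index=0 ;;        # treat non-numeric input as invalid
  esac
  if [ "$prefix_len" != 16 ] || [ "$index" -lt 1 ] || [ "$index" -gt 255 ]; then
    echo "usage: metallb_range <a.b.0.0/16> <1-255>" >&2
    return 1
  fi
  echo "${base%.*.*}.${index}.128/25"   # keep the first two octets
}
```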

We use MetalLB’s Layer 2 mode. It doesn’t require any special reconfiguration: kind’s default kube-proxy mode is iptables, so we can skip the strictARP setting that MetalLB needs when kube-proxy runs in IPVS mode. The only thing that differs between the three MetalLB instances is their address range. This is the configuration for the primary cluster:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 172.18.1.128/25
```

Layer 2 mode works across the entire kind Docker network: the MetalLB speaker components announce the assigned IP addresses with the Address Resolution Protocol (ARP) throughout the 172.18.0.0/16 network.
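Because only the address range differs between clusters, the ConfigMap can be rendered from a template. A hypothetical sketch (`render_metallb_config` is not a name from the Bank-Vaults repository) that prints the configuration above for any range. Note that this ConfigMap format is the one MetalLB used before v0.13; newer releases configure pools with IPAddressPool and L2Advertisement custom resources instead.

```shell
# Hypothetical helper: print the MetalLB Layer 2 ConfigMap for one
# address range, so each cluster can get its own pool.
render_metallb_config() {
  local range="$1"
  cat <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - ${range}
EOF
}
```

For example, `render_metallb_config 172.18.2.128/25 | kubectl --context kind-secondary apply -f -` would configure the secondary cluster, assuming MetalLB is already installed there.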

Let’s check that MetalLB has assigned the primary Vault Service an address from its range, 172.18.1.128 to 172.18.1.255 (here, the first one, 172.18.1.128):

```shell
$ kubectl config use-context kind-primary
$ kubectl get services
NAME                       TYPE           CLUSTER-IP       EXTERNAL-IP    PORT(S)                                                       AGE
kubernetes                 ClusterIP      10.96.0.1        <none>         443/TCP                                                       9m48s
vault-operator             ClusterIP      10.109.203.236   <none>         80/TCP,8383/TCP                                               6m50s
vault-primary              LoadBalancer   10.103.77.250    172.18.1.128   8200:31187/TCP,8201:32413/TCP,9091:32638/TCP,9102:30358/TCP   5m44s
vault-primary-0            ClusterIP      10.109.38.127    <none>         8200/TCP,8201/TCP,9091/TCP                                    5m43s
vault-primary-configurer   ClusterIP      10.111.165.239   <none>         9091/TCP                                                      5m26s
```
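In a script, you’d rather wait for the external IP than read it off the table. A minimal sketch using kubectl’s JSONPath output; `wait_for_lb_ip` is a hypothetical helper, and the 30 attempts with a 2-second pause are arbitrary defaults.

```shell
# Hypothetical helper: poll a LoadBalancer Service until it has an external
# IP (assigned by MetalLB here), then print it. Gives up after ATTEMPTS tries.
wait_for_lb_ip() {
  local context="$1" service="$2" attempts="${3:-30}" i=0 ip=""
  while [ "$i" -lt "$attempts" ]; do
    ip="$(kubectl --context "$context" get service "$service" \
      -o jsonpath='{.status.loadBalancer.ingress[*].ip}' 2>/dev/null || true)"
    if [ -n "$ip" ]; then
      echo "${ip%% *}"   # print the first address if several are listed
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  echo "no external IP for $service in $context" >&2
  return 1
}
```

On the setup shown here, `wait_for_lb_ip kind-primary vault-primary` should print 172.18.1.128.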

Because each Vault Service is reachable on its MetalLB address, the tertiary instance (172.18.3.128:8201) can join the Raft cluster of the primary and secondary nodes, as its logs show:

```shell
$ kubectl config use-context kind-tertiary
$ kubectl logs -f vault-tertiary-0 -c vault
...
2020-09-17T07:10:45.223Z [INFO] storage.raft: initial configuration: index=1 servers="[
  {Suffrage:Voter ID:6caa8795-74f1-d97b-5652-e592f2694a13 Address:172.18.1.128:8201}
  {Suffrage:Voter ID:44ede1d6-c12a-86d5-a34b-7521ce0096b9 Address:172.18.2.128:8201}
  {Suffrage:Voter ID:db17edbd-f423-ab47-3e5d-17624add5abf Address:172.18.3.128:8201}
]"
...
```
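To assert the join from a script instead of reading the logs, you can extract the peer addresses from that log line. This is a hypothetical, log-scraping sketch that depends on the log format shown above; Vault’s `vault operator raft list-peers` command gives the authoritative peer list but needs a Vault token.

```shell
# Hypothetical helper: read Vault logs on stdin and print each Raft peer
# address (host:port) found in "Address:..." fields, once per address.
raft_peer_addresses() {
  grep -oE 'Address:[0-9.]+:[0-9]+' | cut -d: -f2- | sort -u
}

# Hypothetical check: succeed only if every expected address is a peer.
expect_raft_peers() {
  local peers addr
  peers="$(raft_peer_addresses)"
  for addr in "$@"; do
    if ! printf '%s\n' "$peers" | grep -qxF "$addr"; then
      echo "missing Raft peer: $addr" >&2
      return 1
    fi
  done
}
```

For example: `kubectl --context kind-tertiary logs vault-tertiary-0 -c vault | expect_raft_peers 172.18.1.128:8201 172.18.2.128:8201 172.18.3.128:8201`. Leave out the `-f` flag here; otherwise kubectl keeps streaming and the check never finishes.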

To remove the test setup entirely, run the following:

```shell
$ ./operator/deploy/multi-dc/test/multi-dc-raft.sh uninstall
Deleting cluster "primary" ...
Deleting cluster "secondary" ...
Deleting cluster "tertiary" ...
central-vault
```

With this small script and the help of kind and MetalLB, we can easily test this complex multi-cluster Vault setup, either on a commodity laptop or, fully automated, on a hosted CI service that keeps bugs from sneaking into the codebase. The setup also comes in handy when you want to experiment with or add new features to a multi-cluster application. Feel free to grab the script and adapt it to install your own application.

Learn more about Bank-Vaults:

- Secret injection webhook improvements

- Backing up Vault with Velero

- Vault replication across multiple datacenters

- Vault secret injection webhook and Istio

- Mutate any kind of k8s resources

- HSM support

- Injecting dynamic configuration with templates

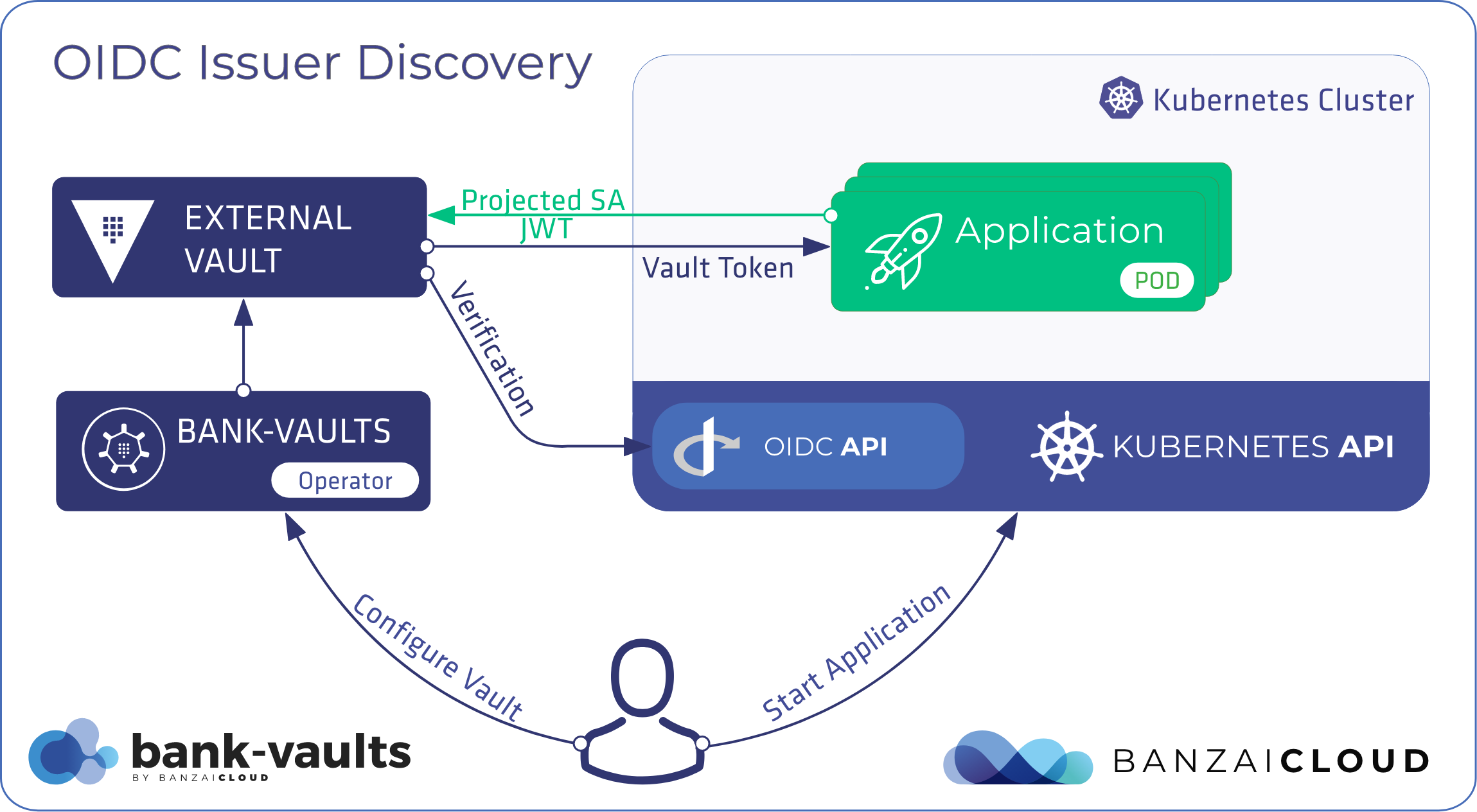

- OIDC issuer discovery for Kubernetes service accounts

- Show all posts related to Bank-Vaults