Admission webhook series:

- In-depth introduction to Kubernetes admission webhooks

- Detecting and blocking vulnerable containers in Kubernetes (deployments)

- Controlling the scheduling of pods on spot instance clusters

Banzai Cloud’s Pipeline platform uses a number of Kubernetes webhooks to provide advanced features such as spot instance scheduling, vulnerability scans, and additional security features (for example, bypassing K8s secrets; more to come next week). The Pipeline webhooks are all open source and available on our GitHub.

Until now, we used openshift/generic-admission-server and other hand-crafted solutions as our admission webhook framework, running each webhook as a Go process.

As we worked towards the public Pipeline beta release, we tried to clean up and simplify our codebase. Along the way we came across Kubewebhook, a simpler and lighter-weight Go framework for creating external admission webhooks for Kubernetes.

With Kubewebhook you can build validating and mutating webhooks quickly and easily, and focus mainly on the domain logic of the webhook itself. Some of the main features of Kubewebhook are:

- Ready for both mutating and validating webhook types.

- An easy and testable API.

- Multiple webhooks on the same server.

- Webhook metrics for Prometheus with Grafana dashboard included.

- Webhook tracing using OpenTracing.

We were especially happy to see these last two features, as observability is one of the core features of the Pipeline platform. We want to enable deep insight into every detail of the Kubernetes clusters we provision.

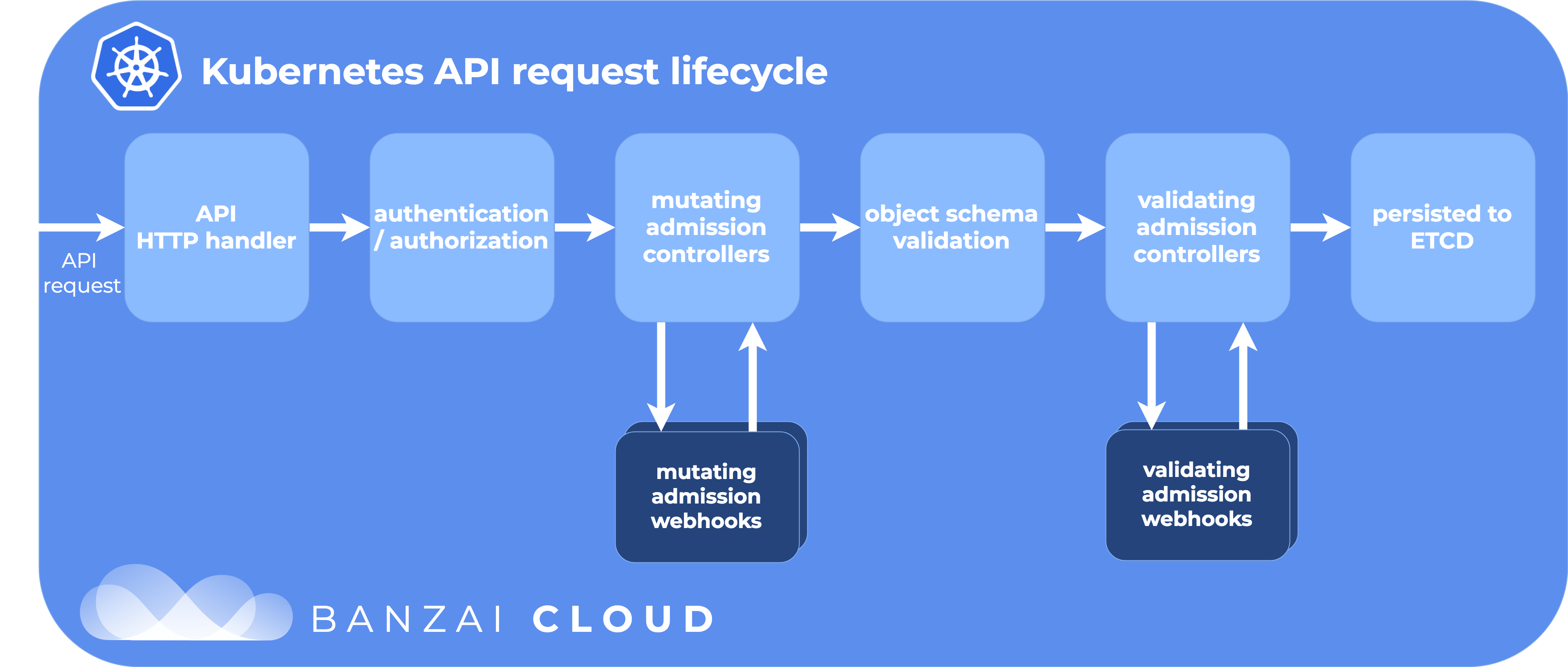

As a recap of what the lifecycle of a Kubernetes API request looks like - in particular for admission and mutating webhooks - please take a look at the diagram below (or read In-depth introduction to Kubernetes admission webhooks).

openshift/generic-admission-server vs Kubewebhook through an example

We are going to compare the two frameworks by creating a very simple mutating webhook that adds an annotation to deployments. With Kubewebhook, the initial setup code is longer, since you have to define your own HTTP server and wire up the handlers returned by the framework yourself. In return, you gain flexibility: for example, you can choose which web server you’d like to use (e.g. the net/http package or Gin).

Simple net/http version:

```go
import (
	"fmt"
	"net/http"
	"os"
	...
)

// deploymentHandler is the http.Handler returned by Kubewebhook for our mutator.
var deploymentHandler http.Handler

func main() {
	mux := http.NewServeMux()
	mux.Handle("/deployments", deploymentHandler)

	logger.Infof("Listening on :443")
	err := http.ListenAndServeTLS(":443", "./tls.crt", "./tls.key", mux)
	if err != nil {
		fmt.Fprintf(os.Stderr, "error serving webhook: %s", err)
		os.Exit(1)
	}
}
```

Gin version:

```go
import (
	"fmt"
	"net/http"
	"os"

	"github.com/gin-gonic/gin"
	...
)

// deploymentHandler is the http.Handler returned by Kubewebhook for our mutator.
var deploymentHandler http.Handler

func main() {
	router := gin.New()
	// gin.WrapH adapts a plain http.Handler to a Gin handler.
	router.POST("/deployments", gin.WrapH(deploymentHandler))

	logger.Infof("Listening on :443")
	err := router.RunTLS(":443", "./tls.crt", "./tls.key")
	if err != nil {
		fmt.Fprintf(os.Stderr, "error serving webhook: %s", err)
		os.Exit(1)
	}
}
```

With openshift/generic-admission-server, the server setup is hidden from the user, so it is simpler to set up but more opinionated (e.g. you can’t select the server framework):

```go
import (
	"github.com/openshift/generic-admission-server/pkg/cmd"
	...
)

type deploymentHook struct{}

// where to host it
func (a *deploymentHook) ValidatingResource() (plural schema.GroupVersionResource, singular string) {
	// ...
}

// your business logic
func (a *deploymentHook) Validate(admissionSpec *admissionv1beta1.AdmissionRequest) *admissionv1beta1.AdmissionResponse {
	// ...
}

// any special initialization goes here
func (a *deploymentHook) Initialize(kubeClientConfig *rest.Config, stopCh <-chan struct{}) error {
	return nil
}

func main() {
	cmd.RunAdmissionServer(&deploymentHook{})
}
```

We started by describing the boilerplate of the server setup, but the important difference lies in how deploymentHandler and deploymentHook are defined.

In generic-admission-server we have to fill in the interface methods defined by the library:

```go
import (
	"encoding/json"

	"github.com/pkg/errors"
	admissionv1beta1 "k8s.io/api/admission/v1beta1"
	appsv1 "k8s.io/api/apps/v1"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/apimachinery/pkg/types"
	...
)

// patchOperation is a single JSON Patch (RFC 6902) operation.
type patchOperation struct {
	Op    string      `json:"op"`
	Path  string      `json:"path"`
	Value interface{} `json:"value,omitempty"`
}

func (a *deploymentHook) Admit(req *admissionv1beta1.AdmissionRequest) *admissionv1beta1.AdmissionResponse {
	var podAnnotations map[string]string

	switch req.Kind.Kind {
	case "Deployment":
		var deployment appsv1.Deployment
		if err := json.Unmarshal(req.Object.Raw, &deployment); err != nil {
			return successResponseNoPatch(req.UID, errors.Wrap(err, "could not unmarshal raw object"))
		}
		podAnnotations = deployment.Spec.Template.Annotations
	default:
		return successResponseNoPatch(req.UID, errors.Errorf("resource type %s is not applicable for this webhook", req.Kind.Kind))
	}

	if _, ok := podAnnotations["mutated"]; ok {
		return successResponseNoPatch(req.UID, errors.New("object already mutated"))
	}

	var patch []patchOperation
	if len(podAnnotations) == 0 {
		// No annotations yet: create the whole map.
		patch = append(patch, patchOperation{
			Op:    "add",
			Path:  "/spec/template/metadata/annotations",
			Value: map[string]string{"mutated": "true"},
		})
	} else {
		// Annotations already exist: add a single key so the others are preserved.
		patch = append(patch, patchOperation{
			Op:    "add",
			Path:  "/spec/template/metadata/annotations/mutated",
			Value: "true",
		})
	}

	patchBytes, err := json.Marshal(patch)
	if err != nil {
		return successResponseNoPatch(req.UID, errors.Wrap(err, "failed to marshal patch bytes"))
	}

	return &admissionv1beta1.AdmissionResponse{
		Allowed: true,
		UID:     req.UID,
		Result:  &metav1.Status{Status: "Success", Message: ""},
		Patch:   patchBytes,
		PatchType: func() *admissionv1beta1.PatchType {
			pt := admissionv1beta1.PatchTypeJSONPatch
			return &pt
		}(),
	}
}

// successResponseNoPatch admits the object unchanged and reports err in the status message.
func successResponseNoPatch(uid types.UID, err error) *admissionv1beta1.AdmissionResponse {
	return &admissionv1beta1.AdmissionResponse{
		Allowed: true,
		UID:     uid,
		Result: &metav1.Status{
			Status:  "Success",
			Message: err.Error(),
		},
	}
}
```

In Kubewebhook, we have to create a function of type MutatorFunc:

```go
import (
	"context"

	"github.com/slok/kubewebhook/pkg/webhook/mutating"
	appsv1 "k8s.io/api/apps/v1"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	...
)

// deploymentMutator adds the "mutated" annotation to deployments that lack it.
func deploymentMutator(_ context.Context, obj metav1.Object) (bool, error) {
	deployment, ok := obj.(*appsv1.Deployment)
	if !ok {
		return false, nil
	}

	if _, ok := deployment.Annotations["mutated"]; ok {
		return false, nil
	}

	if deployment.Annotations == nil {
		deployment.Annotations = map[string]string{}
	}
	deployment.Annotations["mutated"] = "true"

	return false, nil
}

// It is advised to check that we satisfy the MutatorFunc type
var _ mutating.MutatorFunc = deploymentMutator
```

With Kubewebhook, writing the actual business logic is much easier. You get the Kubernetes resource as a metav1.Object, which you can type-assert to the concrete Go struct defined in the Kubernetes source tree, instead of the fairly low-level AdmissionRequest, so you no longer have to deal with JSON marshaling and manual object patching. The AdmissionRequest is still available if you need it, through the context.GetAdmissionRequest(ctx) call defined by Kubewebhook.
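To make concrete what the manual object patching involves, here is a small standard-library-only sketch of building a JSON Patch (RFC 6902) that sets one annotation. It is an illustration under stated assumptions, not code from either framework: `annotationPatch` and `escapePointer` are hypothetical helpers. The sketch covers two details that are easy to get wrong by hand: an `add` on an existing annotations object replaces the whole object, so the single key has to be targeted instead, and annotation keys containing `/` must be escaped as JSON Pointer segments (RFC 6901).

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// patchOperation is a single JSON Patch (RFC 6902) operation.
type patchOperation struct {
	Op    string      `json:"op"`
	Path  string      `json:"path"`
	Value interface{} `json:"value,omitempty"`
}

// escapePointer (hypothetical helper) escapes a key for use as a JSON Pointer
// segment per RFC 6901: "~" becomes "~0" and "/" becomes "~1".
func escapePointer(key string) string {
	return strings.NewReplacer("~", "~0", "/", "~1").Replace(key)
}

// annotationPatch (hypothetical helper) returns the operations that set one
// annotation under basePath without clobbering annotations already present.
func annotationPatch(basePath string, existing map[string]string, key, value string) []patchOperation {
	if len(existing) == 0 {
		// No annotations yet: the whole object has to be created.
		return []patchOperation{{
			Op:    "add",
			Path:  basePath,
			Value: map[string]string{key: value},
		}}
	}
	// Annotations exist: add (or overwrite) just this one member.
	return []patchOperation{{
		Op:    "add",
		Path:  basePath + "/" + escapePointer(key),
		Value: value,
	}}
}

func main() {
	base := "/metadata/annotations"
	// First case: no annotations at all. Second case: one annotation already set.
	for _, existing := range []map[string]string{nil, {"team": "infra"}} {
		patch, err := json.Marshal(annotationPatch(base, existing, "example.com/mutated", "true"))
		if err != nil {
			panic(err)
		}
		fmt.Println(string(patch))
	}
}
```

With Kubewebhook, the mutator only modifies the typed object, and none of this path handling appears in the webhook's own code.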

About Banzai Cloud Pipeline

Banzai Cloud’s Pipeline provides a platform for enterprises to develop, deploy, and scale container-based applications. It leverages best-of-breed cloud components, such as Kubernetes, to create a highly productive, yet flexible environment for developers and operations teams alike. Strong security measures — multiple authentication backends, fine-grained authorization, dynamic secret management, automated secure communications between components using TLS, vulnerability scans, static code analysis, CI/CD, and so on — are default features of the Pipeline platform.