These days it seems that everyone is using some sort of CI/CD solution for their software development projects, either a third-party service or something written in house. Those of us working on the Banzai Cloud Pipeline platform are no different; our CI/CD solution is capable of creating Kubernetes clusters, running and testing builds, pulling secrets from Vault, packaging and deploying applications as Helm charts, and lots more. For quite a while now (since the end of 2017), we’ve been looking for a Kubernetes-native solution but could not find many. We decided to fork Drone and add new features to meet our specific needs, such as:

- the ability to run natively on Kubernetes

- to support other OCI runtime alternatives besides Docker

- to run the same build jobs on multiple Kubernetes nodes

- the introduction of a context that allows for the building of workflows across different k8s clusters

- compatibility with legacy Drone plugins

- the ability to talk with the Banzai Cloud Pipeline platform API through plugins

- to change user permission handling in order to bring it in line with Pipeline’s strict security policies

- to pull secrets from Vault in the form we want, ephemeral and persistent

- and to move away from DinD - aka Docker in Docker

There’s always room for improvement - to increase Kubernetes-nativity and versatility - but one of our chief areas of focus has been refining Docker OCI image builds on Kubernetes.

Note: The Pipeline CI/CD module mentioned in this post is outdated and no longer available. You can integrate Pipeline with your CI/CD solution using the Pipeline API. Contact us for details.

The old way of building Docker images

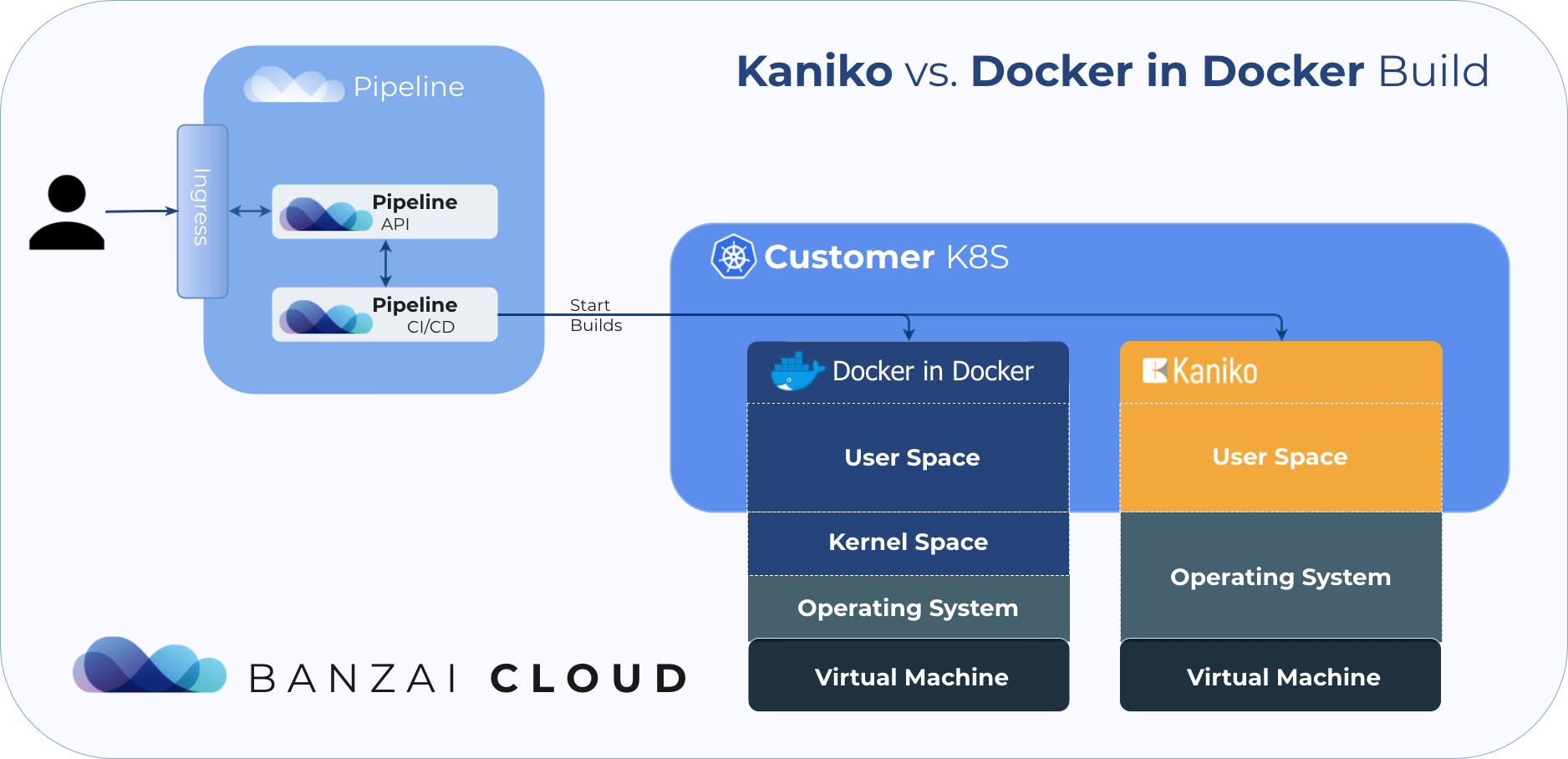

People tend to rely on the container runtime behind Kubernetes being Docker, and, up until a little while ago, we did too. Initially, we used the official Drone Docker plugin. However, for it to work we had to mount the Docker socket into the building container and run it in privileged mode (Docker-in-Docker). This opened a can of worms, with some serious drawbacks and security concerns.
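
To make the problem concrete, here is a rough sketch of what such a build pod tends to look like. It is not our actual manifest: the pod name is hypothetical, and the spec only illustrates the two settings discussed above, the privileged flag and the host's Docker socket.

```yaml
# Illustrative only: the kind of build pod this post argues against.
apiVersion: v1
kind: Pod
metadata:
  name: legacy-docker-build            # hypothetical name
spec:
  restartPolicy: Never
  containers:
    - name: build
      image: plugins/docker            # the official Drone Docker plugin image
      securityContext:
        privileged: true               # grants (almost) all host capabilities
      volumeMounts:
        - name: docker-sock
          mountPath: /var/run/docker.sock
  volumes:
    - name: docker-sock
      hostPath:
        path: /var/run/docker.sock     # only present if the node actually runs Docker
```

Both highlighted settings tie the build to the node: one hands the container the host's capabilities, the other assumes a Docker daemon is there to talk to.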

Many people seem willing to accept those drawbacks. However, there is another showstopping problem: Kubernetes no longer relies on Docker directly. In fact, Kubernetes has given up on relying on any particular container runtime, and instead relies on the CRI specification. Earlier this year, containerd integration went GA, which means that, from now on, whenever you start a Kubernetes cluster there’s no guarantee that Docker will be available. At this point, there are at least five container runtimes to choose from.

Note that the Banzai Cloud Pipeline platform supports six different managed Kubernetes platforms as well as our own Banzai Cloud Kubernetes distribution - a few of which no longer use Docker for runtime. Our K8s distribution, for instance, favors containerd, and GKE is likewise moving towards containerd (this feature is already in beta).

The risks of privileged mode

So let’s get back to the problem at hand, running builds in privileged mode. Here’s a quote lifted right from the documentation of Docker’s --privileged flag:

“The --privileged flag gives all capabilities to the container, and it also lifts all the limitations enforced by the device cgroup controller. In other words, the container can then do almost everything that the host can do. This flag exists to allow special use-cases, like running Docker within Docker.”

Privileged mode means you can run some containers with (almost) all the capabilities of their host machine, including kernel features and device access. Sounds scary, right? It is.

NOTE: By default, Docker only allows containers to use a restricted set of capabilities.

An attack could come from an unverified (and backdoored) base image. If such an image detected that it was running in privileged mode, it might follow a different execution path. Also, some people hide Monero miners in Docker images. What would stop them from leaving code in such an image and, with the help of excessive capabilities, taking full control of your build host?

See the links below for various issues that exist in public base-images:

- https://github.com/docker/hub-feedback/issues/1121

- https://github.com/docker/hub-feedback/issues/1549

If possible, privileged mode should be avoided at all costs. To this end, and with the help of PodSecurityPolicies, it is possible to mandate the use of security extensions and other Kubernetes security directives. They provide minimum contracts that pods must fulfill to be submitted to the API server - including security profiles, the privileged flag, and the sharing of host network, process, or IPC namespaces.

```yaml
# policy/example-psp.yaml
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: unprivileged
spec:
  privileged: false  # Don't allow privileged pods!
  # For the rest fill in the required fields.
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  runAsUser:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  volumes:
    - '*'
```

Since privileged mode can be turned off even at the kubelet level with the --allow-privileged=false flag, you may find yourself on a Kubernetes cluster in which the old way of building a Docker image is nearly impossible.
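
A PodSecurityPolicy like the one above is only applied to pods whose user or service account is authorized to `use` it, which is done through RBAC. The sketch below shows one way to wire that up. The role name, binding name and the `builds` namespace are hypothetical; only the policy name `unprivileged` comes from the example above.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: psp-unprivileged               # hypothetical name
rules:
  - apiGroups: ["policy"]
    resources: ["podsecuritypolicies"]
    verbs: ["use"]
    resourceNames: ["unprivileged"]    # the policy from the example above
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: psp-unprivileged-builds        # hypothetical name
  namespace: builds                    # hypothetical namespace for build pods
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: psp-unprivileged
subjects:
  - kind: Group
    apiGroup: rbac.authorization.k8s.io
    name: system:serviceaccounts:builds  # every service account in that namespace
```

With a binding like this in place, and assuming no more permissive policy is also available to those service accounts, a pod in that namespace that sets `privileged: true` should be rejected at admission time.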

Finally, Pipeline is a multi-tenant environment, so we don’t want containers from different users rendezvousing on the same host!

“Unprivileged” image builder options

There’s been rapid development in the field of “Docker-less Docker image builds”, and a few tools are already available today. There are a lot of OCI-compatible image builders out there, but in this blog post we’re only going to talk about the ones that work well on top of Kubernetes.

img

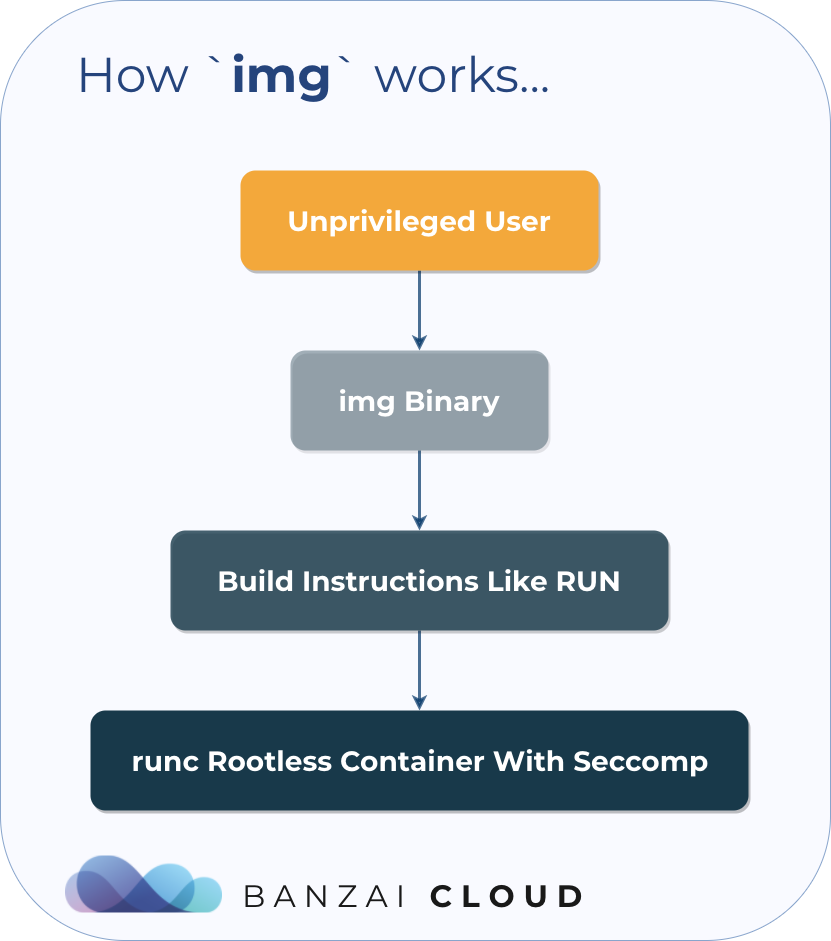

Jessie’s push towards a better and more secure container ecosystem has always been an inspiration to us, and when she first open sourced img we got pretty excited. img was the first image builder that tried to build images on Kubernetes without Docker, and is described in more detail in this blog post. img runs in unprivileged mode, and as a non-root user. However, it’s designed to be used outside of a container and thus, in order to work, it mounts the /proc filesystem in unmasked mode. Jess’s blog uses the rawProc term because, at that time, the UnmaskedProcMount wasn’t implemented in upstream Kubernetes, though it is available from 1.12 onward. Since 1.12 is not yet available on major cloud providers as a managed Kubernetes service, we had to move on temporarily, but we’re keeping a close eye on it!
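
For reference, on a 1.12+ cluster the unmasked /proc mount is requested through the container's security context. The sketch below is an assumption-heavy illustration rather than a tested manifest: the pod name, image tag, uid and build arguments are hypothetical, the field is gated behind the alpha `ProcMountType` feature gate, and img may need further settings described in its README.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: img-build                      # hypothetical name
spec:
  restartPolicy: Never
  containers:
    - name: img
      image: r.j3ss.co/img             # image published by the img project
      # Hypothetical tag; the build context still has to be provided,
      # for example through a volume mounted into the working directory.
      args: ["build", "-t", "example/app:test", "."]
      securityContext:
        runAsUser: 1000                # non-root; the uid is an assumption
        procMount: Unmasked            # needs Kubernetes 1.12+ and the ProcMountType feature gate
```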

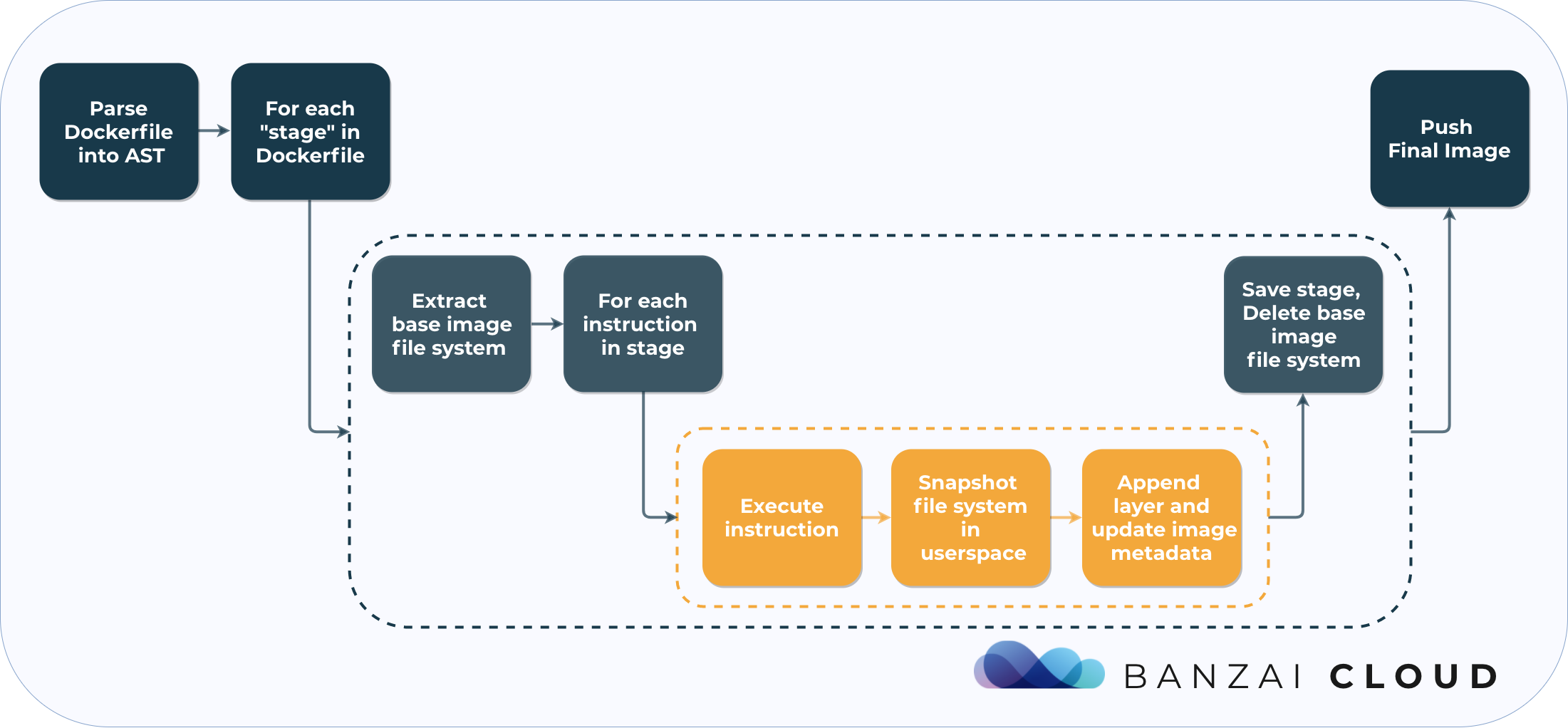

Kaniko is a project open sourced by Google to build OCI images from a Dockerfile inside Kubernetes Pods, which is exactly what the Pipeline CI/CD component does. Kaniko doesn’t depend on a Docker daemon, and executes each command within a Dockerfile entirely in userspace, in unprivileged mode. This enables building container images in environments that can’t easily or securely run a Docker daemon, such as a standard Kubernetes cluster.

It’s still fairly young (it was announced this April) and has some issues, like this one (so we had to build an image from the fork of @aduong in order to use it in our environment), but it’s very promising and has worked relatively well for us so far.
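
As a point of reference, running the Kaniko executor directly as a pod (without our plugin wrapper) might look roughly like the sketch below. The pod name, the `regcred` secret and the `build-workspace` claim are hypothetical, and the flags and mount path follow the upstream Kaniko README as we understand it, so they may differ between Kaniko versions.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kaniko-build                   # hypothetical name
spec:
  restartPolicy: Never
  containers:
    - name: kaniko
      image: gcr.io/kaniko-project/executor:latest
      args:
        - --dockerfile=Dockerfile.test
        - --context=dir:///workspace   # build context mounted below
        - --destination=banzaicloud/kaniko-plugin-test:test
      # Note: no privileged flag and no Docker socket mount.
      volumeMounts:
        - name: workspace
          mountPath: /workspace
        - name: docker-config
          mountPath: /kaniko/.docker   # registry credentials (path may vary by version)
  volumes:
    - name: workspace
      persistentVolumeClaim:
        claimName: build-workspace     # hypothetical claim holding the sources
    - name: docker-config
      secret:
        secretName: regcred            # hypothetical docker-registry secret
        items:
          - key: .dockerconfigjson
            path: config.json
```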

Example image build with Kaniko

Since the Pipeline CI/CD component is backward compatible with Drone plugins, we created a thin wrapper around the Kaniko Docker image, so that we could use it as a Drone plugin. You can find an example of how to build an image with the wrapper in the Kaniko-plugin repository:

```shell
docker run -it --rm -w /src -v "$PWD":/src \
  -e DOCKER_USERNAME="${DOCKER_USERNAME}" \
  -e DOCKER_PASSWORD="${DOCKER_PASSWORD}" \
  -e PLUGIN_REPO=banzaicloud/kaniko-plugin-test \
  -e PLUGIN_TAGS=test \
  -e PLUGIN_DOCKERFILE=Dockerfile.test \
  banzaicloud/kaniko-plugin
```

```
INFO[0000] Downloading base image alpine:3.8
INFO[0004] Taking snapshot of full filesystem...
INFO[0004] RUN apk add --update git
INFO[0004] cmd: /bin/sh
INFO[0004] args: [-c apk add --update git]
fetch http://dl-cdn.alpinelinux.org/alpine/v3.8/main/x86_64/APKINDEX.tar.gz
fetch http://dl-cdn.alpinelinux.org/alpine/v3.8/community/x86_64/APKINDEX.tar.gz
(1/7) Installing ca-certificates (20171114-r3)
(2/7) Installing nghttp2-libs (1.32.0-r0)
(3/7) Installing libssh2 (1.8.0-r3)
(4/7) Installing libcurl (7.61.1-r1)
(5/7) Installing expat (2.2.5-r0)
(6/7) Installing pcre2 (10.31-r0)
(7/7) Installing git (2.18.1-r0)
Executing busybox-1.28.4-r1.trigger
Executing ca-certificates-20171114-r3.trigger
OK: 19 MiB in 20 packages
INFO[0007] Taking snapshot of full filesystem...
INFO[0008] ENTRYPOINT [ "/usr/bin/plugin.sh" ]
2018/11/23 08:02:33 existing blob: sha256:4fe2ade4980c2dda4fc95858ebb981489baec8c1e4bd282ab1c3560be8ff9bde
2018/11/23 08:02:34 pushed blob sha256:c391d88f6049905f754365f148c7e6a3d0c5a2d400c6e66e6d0868d2534be08f
2018/11/23 08:02:39 pushed blob sha256:ebb66d4c5395524de931e577b1c3ce33e1e60e6a36bac42737fa999001676707
2018/11/23 08:02:39 index.docker.io/banzaicloud/kaniko-plugin:latest: digest: sha256:42585bcaa0858cb631077271e4005646762bdd679771aeeab5999fcb925a060e size: 592
```

The pipeline.yaml counterpart of the same invocation is:

```yaml
build_image:
  image: banzaicloud/kaniko-plugin
  dockerfile: Dockerfile.test
  repo: banzaicloud/kaniko-plugin-test
  tags: test
  secretFrom:
    DOCKER_USERNAME:
      # name:
      keyRef: username
    DOCKER_PASSWORD:
      # name:
      keyRef: password
```

We’ve already used this with Kaniko in our Node.js Spotguide, and it works like a charm.

NOTE: Kaniko by itself does not make it safe to run untrusted builds, because builds currently run as root. However, this is still much better than granting all capabilities. There is an open issue for making Kaniko rootless: https://github.com/GoogleContainerTools/kaniko/issues/105.

Caching

Kaniko can cache layers created by RUN commands in a remote repository, which acts as a distributed cache amongst many build processes. This repository has to be set up by the user. Kaniko can also cache base images in a local directory that can be volume mounted into the Kaniko image, but again, this has to be pre-populated by the user (with the warmer tool). See the official documentation for more details.
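
As a rough sketch, both kinds of cache are switched on through executor flags. The fragment below shows only the container part of a pod spec. The cache repository and the `kaniko-cache` volume are hypothetical, and the flag names are taken from the Kaniko documentation as we understand it, so check them against the version you run.

```yaml
# Fragment of a pod spec: only the Kaniko container is shown.
containers:
  - name: kaniko
    image: gcr.io/kaniko-project/executor:latest
    args:
      - --dockerfile=Dockerfile.test
      - --context=dir:///workspace
      - --destination=banzaicloud/kaniko-plugin-test:test
      - --cache=true                                      # reuse layers produced by RUN commands
      - --cache-repo=registry.example.com/kaniko-cache    # hypothetical remote layer cache
      - --cache-dir=/cache                                # local base image cache
    volumeMounts:
      - name: kaniko-cache       # hypothetical volume, pre-populated with the warmer tool
        mountPath: /cache
```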

Makisu

Makisu is a brand new project from Uber (announced last week!), a fast and flexible Docker image build tool designed for containerized environments like Kubernetes. Makisu requires no elevated privileges (unprivileged mode), making the build process portable, and it uses a distributed cache layer to improve performance across a build cluster, which makes it a great choice for use on top of Kubernetes. We are currently experimenting with Makisu as well, and trying to execute the same build flows that we run on Kaniko.

Caching

Makisu offers the same kind of Docker registry-based distributed caching as Kaniko, although it requires a distributed key-value store to work properly (it maps Dockerfile lines to layer hashes in this store). Furthermore, it adds an interesting explicit caching capability to Dockerfiles (available exclusively on Makisu) with the #!COMMIT keyword, which can be used to optimize caching even further.

Summary

Security has always been one of the primary concerns of Banzai Cloud’s Pipeline platform. We’ve made lots of changes in and on top of Kubernetes - secrets stored in Vault, fine-grained access policies on top of RBAC, etc. - and this is one more step forward in our efforts to support secure container builds on Kubernetes for our Pipeline users.

As usual, we open sourced this. You can get the whole project from our GitHub and quickly start it in your own environment.

About Banzai Cloud Pipeline

Banzai Cloud’s Pipeline provides a platform for enterprises to develop, deploy, and scale container-based applications. It leverages best-of-breed cloud components, such as Kubernetes, to create a highly productive, yet flexible environment for developers and operations teams alike. Strong security measures — multiple authentication backends, fine-grained authorization, dynamic secret management, automated secure communications between components using TLS, vulnerability scans, static code analysis, CI/CD, and so on — are default features of the Pipeline platform.