Hybrid and multi-cloud infrastructure is becoming increasingly common in enterprise IT environments, because it offers a broader set of capabilities than a single cloud can provide. Specifically, it allows us to use different cloud services for different use cases within a single environment, which helps to avoid vendor lock-in. Combining on-prem and cloud environments gives users the best of both worlds and lets them transition to the cloud at their own pace; nowadays it is very rare for a business of significant size to run exclusively on either.

The emerging need for hybrid infrastructure, and the resulting move to the cloud, has accelerated the adoption of automation across enterprises, with companies adopting related technologies such as microservices architecture and containers. Modern hybrid infrastructure needed a unified platform for container management across multiple environments, and this is where Kubernetes came in. It automates the deployment, management, and scaling of containerized applications within an enterprise.

The Banzai Cloud Pipeline platform allows enterprises to run their workloads in a single-, multi-, or hybrid-cloud environment, providing a unified platform.

In a hybrid infrastructure, service abstraction becomes a necessity because, as with workloads, services can run anywhere depending on their needs, speed, cost, and compliance requirements. Thus, there is a need for a unified infrastructure layer to provide seamless, secure and reliable connectivity between services wherever they exist within the hybrid infrastructure.

A service mesh is a new layer in the infrastructure that handles all communication between services. It is independent of each service’s code, so it can work with multiple service management systems and across network boundaries. Its features create and manage connections between services securely and with little effort.

Banzai Cloud Backyards is a multi- and hybrid-cloud-enabled service mesh platform for constructing modern applications. Built on Kubernetes, our Istio operator, and Pipeline, Backyards enables flexibility, portability and consistency across on-premise data centers and cloud environments. With Backyards, you can monitor and manage your hybrid multi-cloud service infrastructure through a single pane of glass.

Check out Backyards in action on your own clusters!

Want to know more? Get in touch with us, or delve into the details of the latest release.

Or just take a look at some of the Istio features that Backyards automates and simplifies for you, and which we’ve already blogged about.

Service failover in a hybrid infrastructure

One of the use-cases of hybrid cloud infrastructure is to provide high availability through deploying workloads and services to multiple environments, either on-prem and on a public cloud, or to two public clouds. The following example shows how to set up such an environment with Backyards (now Cisco Service Mesh Manager).

Three clusters will be used for this example: one for the Backyards control plane and the customer-facing ingress gateway, and two workload clusters on different cloud providers. Backyards (now Cisco Service Mesh Manager) comes with a built-in demo application that will be installed onto the two workload clusters to demonstrate automatic multi-provider failover.

Backyards supports locality-based load balancing, a feature used to provide automatic failover for incoming requests. You can read about locality-based load balancing in our previous blog post on that topic.

Create three Kubernetes clusters

If you need a hand with this, you can create clusters with our free version of Banzai Cloud’s Pipeline platform.

The clusters used in the actual example are:

- waynz0r-1009-02-aws: control plane and ingress cluster running on AWS in the eu-west-3 region
- waynz0r-1009-01-aws: workload cluster running on AWS in the eu-west-2 region
- waynz0r-1009-01-gke: workload cluster running on GKE in the europe-west2 region

Point KUBECONFIG at one of the clusters

Register for the free version and run the following command to install Backyards

Register for the free tier version of Cisco Service Mesh Manager (formerly called Banzai Cloud Backyards) and follow the Getting Started Guide for up-to-date instructions on the installation.

Attach the two other clusters to the service mesh

Creating a multi-cluster single mesh is as easy as pie. Clusters can be attached with a simple CLI command.

    ~ waynz0r-1009-02-aws ❯ backyards istio cluster attach <path-to-kubeconfig-of-waynz0r-1009-01-aws>
    ? Are you sure to use the following context? waynz0r-1009-01-aws (API Server: https://52.56.114.116:6443) Yes
    ✓ creating service account and rbac permissions
    ✓ retrieving service account token
    ✓ attaching cluster started successfully name=waynz0r-1009-01-aws
    ✓ backyards ❯ configured successfully
    ✓ backyards ❯ reconciling
    ✓ backyards ❯ deployed successfully
    ✓ node-exporter ❯ configured successfully
    ✓ node-exporter ❯ reconciling
    ✓ node-exporter ❯ deployed successfully

    ~ waynz0r-1009-02-aws ❯ backyards istio cluster attach <path-to-kubeconfig-of-waynz0r-1009-01-gke>
    ? Are you sure to use the following context? waynz0r-1009-01-gke (API Server: https://35.246.99.134:6443) Yes
    ✓ creating service account and rbac permissions
    ✓ retrieving service account token
    ✓ attaching cluster started successfully name=waynz0r-1009-01-gke
    ✓ backyards ❯ configured successfully
    ✓ backyards ❯ reconciling
    ✓ backyards ❯ deployed successfully
    ✓ node-exporter ❯ configured successfully
    ✓ node-exporter ❯ reconciling
    ✓ node-exporter ❯ deployed successfully

Check mesh status

    ~ waynz0r-1009-02-aws ❯ backyards istio cluster status
    Clusters in the mesh

    Name                 Type  Status     Gateway Address   Istio Control Plane   Message
    waynz0r-1009-02-aws  Host  Available  [15.236.163.90]   -
    waynz0r-1009-01-aws  Peer  Available  [3.11.47.43]      cp-v17x.istio-system
    waynz0r-1009-01-gke  Peer  Available  [35.197.239.240]  cp-v17x.istio-system

Install demo application onto the clusters

    ~ waynz0r-1009-02-aws ❯ backyards demoapp install -s bombardier
    ✓ demoapp ❯ deploying application
    ✓ demoapp/reconciling ❯ done name=backyards-demo, namespace=backyards-demo
    ✓ demoapp ❯ deployed successfully

    ~ waynz0r-1009-02-aws ❯ backyards -c <path-to-kubeconfig-of-waynz0r-1009-01-gke> demoapp install -s frontpage,bookings,catalog --peer
    ✓ demoapp ❯ deploying application
    ✓ demoapp/reconciling ❯ done name=backyards-demo, namespace=backyards-demo
    ✓ demoapp ❯ deployed successfully

    ~ waynz0r-1009-02-aws ❯ backyards -c <path-to-kubeconfig-of-waynz0r-1009-01-aws> demoapp install -s frontpage,bookings,catalog --peer
    ✓ demoapp ❯ deploying application
    ✓ demoapp/reconciling ❯ done name=backyards-demo, namespace=backyards-demo
    ✓ demoapp ❯ deployed successfully

Turn on locality-based load balancing for the applications

To make locality-based failover work, OutlierDetection needs to be defined on all the related services so that Envoy can determine whether instances are unhealthy. The following YAML snippets have to be applied on the control cluster:

    apiVersion: networking.istio.io/v1beta1
    kind: DestinationRule
    metadata:
      name: frontpage-outlier
      namespace: backyards-demo
    spec:
      host: "frontpage.backyards-demo.svc.cluster.local"
      trafficPolicy:
        loadBalancer:
          localityLbSetting:
            enabled: true
            failover:
            - from: eu-west-3
              to: eu-west-2
        outlierDetection:
          consecutiveErrors: 7
          interval: 5m
          baseEjectionTime: 15m
    ---
    apiVersion: networking.istio.io/v1beta1
    kind: DestinationRule
    metadata:
      name: bydemo-outlier
      namespace: backyards-demo
    spec:
      host: "*.backyards-demo.svc.cluster.local"
      trafficPolicy:
        outlierDetection:
          consecutiveErrors: 7
          interval: 5m
          baseEjectionTime: 15m

With this configuration, if there are no healthy workloads for the frontpage service in the eu-west-3 region (where our ingress is), traffic fails over to the eu-west-2 region, which is where our primary AWS workload cluster resides.
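The failover behaviour these two rules describe can be sketched in a few lines of Python. This is a deliberately simplified model, not Envoy's implementation: Envoy shifts traffic gradually as the share of healthy endpoints drops and re-admits ejected hosts after `baseEjectionTime`, whereas this sketch switches all at once. The `Endpoint` class, the `pick_endpoints` function, and the addresses are hypothetical names used only for illustration; the error threshold and the regions are taken from the YAML above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Endpoint:
    address: str
    region: str
    consecutive_errors: int = 0  # errors seen since the last success


# Values mirror the DestinationRule above.
CONSECUTIVE_ERRORS_LIMIT = 7
FAILOVER = {"eu-west-3": "eu-west-2"}


def is_healthy(ep: Endpoint) -> bool:
    """Treat an endpoint as ejected once it reaches the error limit."""
    return ep.consecutive_errors < CONSECUTIVE_ERRORS_LIMIT


def pick_endpoints(client_region: str, endpoints: List[Endpoint]) -> List[Endpoint]:
    """Prefer healthy local endpoints, then the failover region, then any region."""
    healthy = [ep for ep in endpoints if is_healthy(ep)]
    local = [ep for ep in healthy if ep.region == client_region]
    if local:
        return local
    target = FAILOVER.get(client_region)
    failover = [ep for ep in healthy if ep.region == target]
    # Assumption of this model: if the failover region has nothing healthy,
    # any remaining healthy endpoint may receive the traffic.
    return failover or healthy


# The ingress runs in eu-west-3; the AWS workload endpoint has been ejected.
endpoints = [
    Endpoint("10.0.1.5", "eu-west-2", consecutive_errors=7),
    Endpoint("10.8.0.9", "europe-west2"),
]
selected = [ep.address for ep in pick_endpoints("eu-west-3", endpoints)]
print(selected)
```

In this model the request from the ingress region ends up on the GKE endpoint, which is the same outcome the demo later in this post aims for when the AWS workload is scaled down.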

Expose the demo application on ingress

Let’s expose the frontpage service of the demo application on ingress to simulate outside traffic to the demo application. Here is a detailed post on how you can do this with Backyards.

The internal address of the service is frontpage.backyards-demo.svc.cluster.local:8080. After exposing it, the externally available URL is http://frontpage.1pj1gx.backyards.banzaicloud.io.

Open up Backyards dashboard and test failover

The Backyards dashboard can be easily accessed with the following command:

~ waynz0r-1009-02-aws ❯ backyards dashboard

In the meantime, let’s send some load to the external URL and downscale the frontpage service on the AWS workload cluster to simulate a workload outage. We should see traffic patterns that are similar to those in the following animation:

The traffic should automatically fail over to the GKE workload cluster without any outages or hiccups, since Backyards provides service abstraction and seamless, secure communication within our hybrid infrastructure.

The demo application is stateless, which is usually not the case in practice. The second part of this post covers the options we have with stateful applications.

Life is not that simple: data replication

If all applications used by enterprises were stateless, life would be simpler. Disaster recovery for these applications would be as simple as starting new application instances in an unaffected region and directing traffic to them. The reality is that, for various reasons, most applications are not, or cannot be, stateless. These applications store their state in a data store, so a disaster recovery solution has to replicate that state to a secondary location in order to survive an outage of the primary data store without data loss. The data store itself may provide replication capabilities out of the box. However, these often require a secondary data store of an identical type. Enterprises that run their workflows on one cloud provider while using a different cloud provider as a recovery location may hit a stumbling block when the RDBMSes in the two locations are not 100% compatible, which rules out out-of-the-box replication. Kafka comes to the rescue here by replicating data between heterogeneous environments.

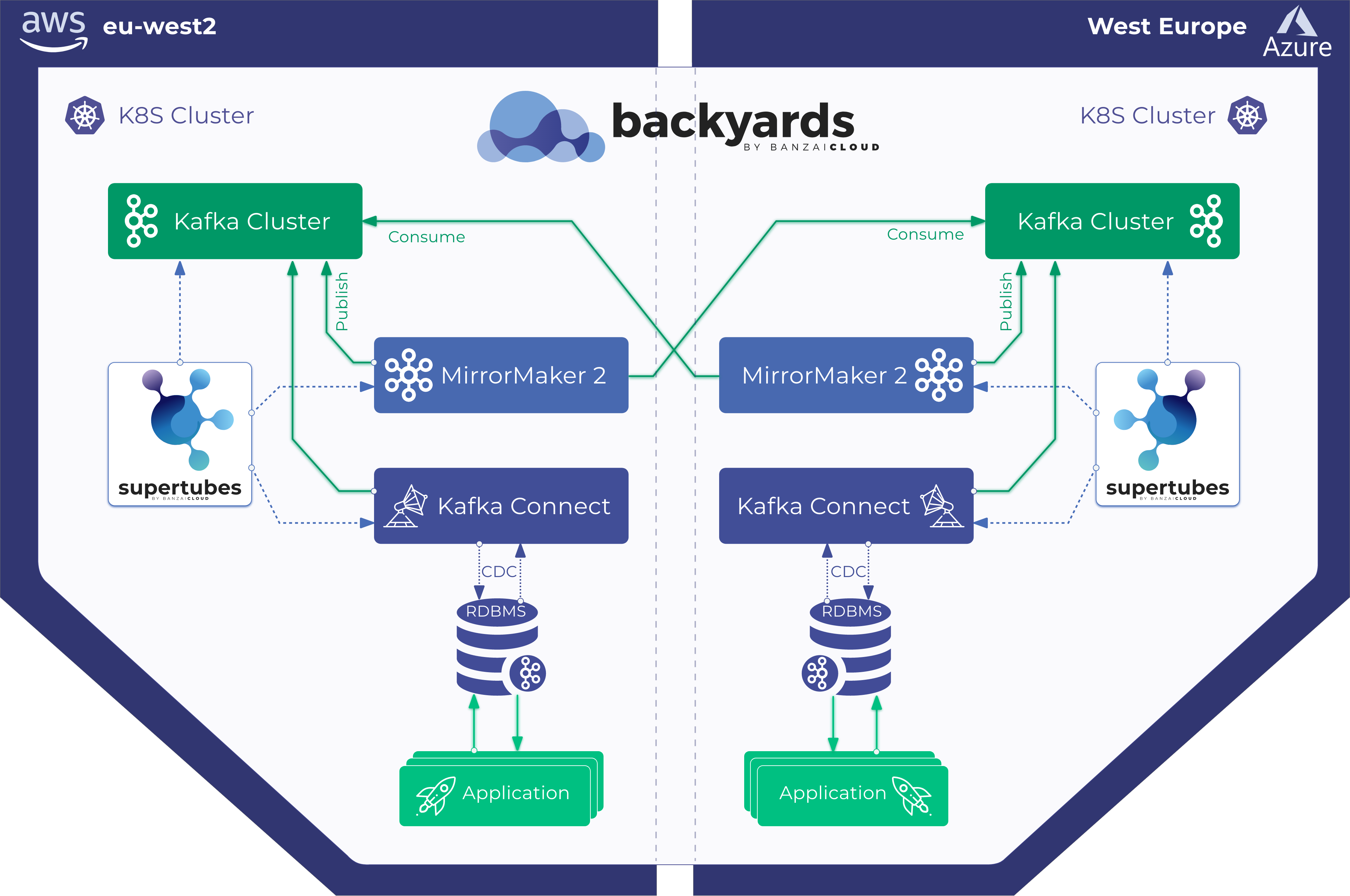

With Supertubes, our users can easily set up Kafka clusters on Kubernetes and then enable bi-directional replication between them, using MirrorMaker2.

See Kafka disaster recovery on Kubernetes using MirrorMaker2 for details on how to set up bi-directional replication between Kafka clusters with Supertubes.

Applications that already use Kafka for exchanging or storing data can leverage the bi-directional mirroring of Kafka topics as is, without any additional components. In a disaster recovery scenario, these applications can be switched over to the Kafka cluster that resides at the secondary location. The state is already available at the secondary location thanks to the bi-directional mirroring set up with Supertubes using MirrorMaker2, which ensures that Kafka topics are kept in sync. When the failed location comes back online, MirrorMaker2 brings the mirrored topics there up to date. This bi-directional mirroring makes a hot-hot setup possible, wherein an instance of the application runs continuously at each location, both with the same data, since both are kept in sync (of course, we need to account for the lag that can stem from the distance between locations).
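One practical detail of a hot-hot setup is how mirrored topics are named. As a minimal sketch, assuming MirrorMaker2's default replication policy (which prefixes a mirrored topic with the alias of its source cluster and a `.` separator), the topics an application instance would read can be derived as follows. The cluster aliases `primary` and `secondary`, the topic `orders`, and both helper functions are hypothetical; a Supertubes deployment may be configured with a different policy.

```python
from typing import List

SEPARATOR = "."  # separator used by MirrorMaker2's default replication policy


def remote_topic(source_alias: str, topic: str) -> str:
    """Name a mirrored topic gets on the target cluster."""
    return f"{source_alias}{SEPARATOR}{topic}"


def topics_to_consume(topic: str, remote_aliases: List[str]) -> List[str]:
    """The local topic plus its mirrored copies from each remote cluster."""
    return [topic] + [remote_topic(alias, topic) for alias in remote_aliases]


# An application instance on the secondary cluster reads its own topic
# and the copy mirrored from the primary cluster.
print(topics_to_consume("orders", ["primary"]))
# An instance on the primary cluster does the same in the other direction.
print(topics_to_consume("orders", ["secondary"]))
```

Because the mirrored copy carries the source alias in its name, each side can tell locally produced records from replicated ones, which is what keeps bi-directional mirroring from looping records back to their origin.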

What about applications that don’t use Kafka, but use an RDBMS as the data store, for example?

If applications don’t use Kafka to store their data, additional components can be used to move data between these data stores and Kafka: one component publishes data from the data store into Kafka, and another writes data from Kafka back into the data store at the other location. The topics in between are replicated using the same MirrorMaker2-based mechanism we’ve been discussing. These additional components can be producer/consumer Kafka client applications, or CDC (change data capture) connectors running on the Kafka Connect framework.

See Using Kafka Connect with Supertubes for details on how to set up Kafka Connect and deploy connectors into it.
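To illustrate the producer-application option, here is a minimal sketch of a poller that reads new rows from a relational table and hands them to a publisher. It uses simple polling on an auto-incrementing id, not log-based change data capture, so it only sees inserts; CDC connectors also capture updates and deletes. Everything here is an assumption made for illustration: the `orders` table, the `publish_new_rows` function, and the `publish` callback, which stands in for a real Kafka producer so the example runs with the standard library alone.

```python
import json
import sqlite3
from typing import Callable, List


def publish_new_rows(conn: sqlite3.Connection, last_id: int,
                     publish: Callable[[str, str], None]) -> int:
    """Publish rows with id > last_id and return the new high-water mark."""
    rows = conn.execute(
        "SELECT id, customer, total FROM orders WHERE id > ? ORDER BY id",
        (last_id,),
    ).fetchall()
    for row_id, customer, total in rows:
        payload = json.dumps({"id": row_id, "customer": customer, "total": total})
        publish("orders", payload)  # stand-in for a Kafka producer send
        last_id = row_id
    return last_id


# Usage with an in-memory database and a list standing in for the Kafka topic.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
conn.executemany("INSERT INTO orders (customer, total) VALUES (?, ?)",
                 [("alice", 12.5), ("bob", 40.0)])
sent: List[str] = []
offset = publish_new_rows(conn, 0, lambda topic, value: sent.append(value))
print(offset, len(sent))
```

Persisting the returned high-water mark between runs is what lets such a poller resume after a restart without republishing rows it has already sent.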

The bi-directional replication of Kafka topics in conjunction with mesh functionalities is not limited to seamless handling of disaster recovery scenarios, but enables the implementation of other useful capabilities like locality-awareness and traffic shifting, to name a few.

Takeaway

Running your workloads on a global infrastructure that spans regions, cloud providers, and on-premise data centers is no longer a nearly unachievable task that requires expertise in various domains, since Backyards provides the required cohesion under the hood. Backyards, complemented by the bi-directional data replication that Supertubes enables, makes running workloads on a global infrastructure a seamless experience.