Banzai Cloud is on a mission to simplify the development, deployment, and scaling of complex applications and to bring the full power of Kubernetes to all developers and enterprises. Banzai Cloud’s Pipeline provides a platform which allows enterprises to develop, deploy and scale container-based applications. It leverages best-of-breed technology from the Cloud Native Computing Foundation (CNCF) ecosystem to create a highly productive, yet flexible environment for developers and operations teams alike. One of the key tools we use from the Kubernetes ecosystem is Helm.

The purpose of this post is to provide an introduction to Helm, which we’ve been using, contributing to and extending for quite a while. We’ll describe a variety of best practices and share our experience of how best to create charts using Helm. In a follow-up post we’ll dig into the details of how the Banzai Cloud Pipeline platform abstracts and uses Helm to go from commit to scale at lightspeed with our managed k8s offering.

This post has a second part, where we explore best practices and take a look at some common mistakes: Helm from basics to advanced - part II. A Helm 3 post is coming soon, so make sure to subscribe to one of our social channels.

What is Helm? 🔗︎

If you’re already familiar with Helm, you can skip this section and continue reading at Creating new Charts. Helm is the de facto package manager for Kubernetes. It is officially a CNCF incubator project.

“Helm helps you manage Kubernetes applications — Helm Charts helps you define, install, and upgrade even the most complex Kubernetes application.” - https://helm.sh/

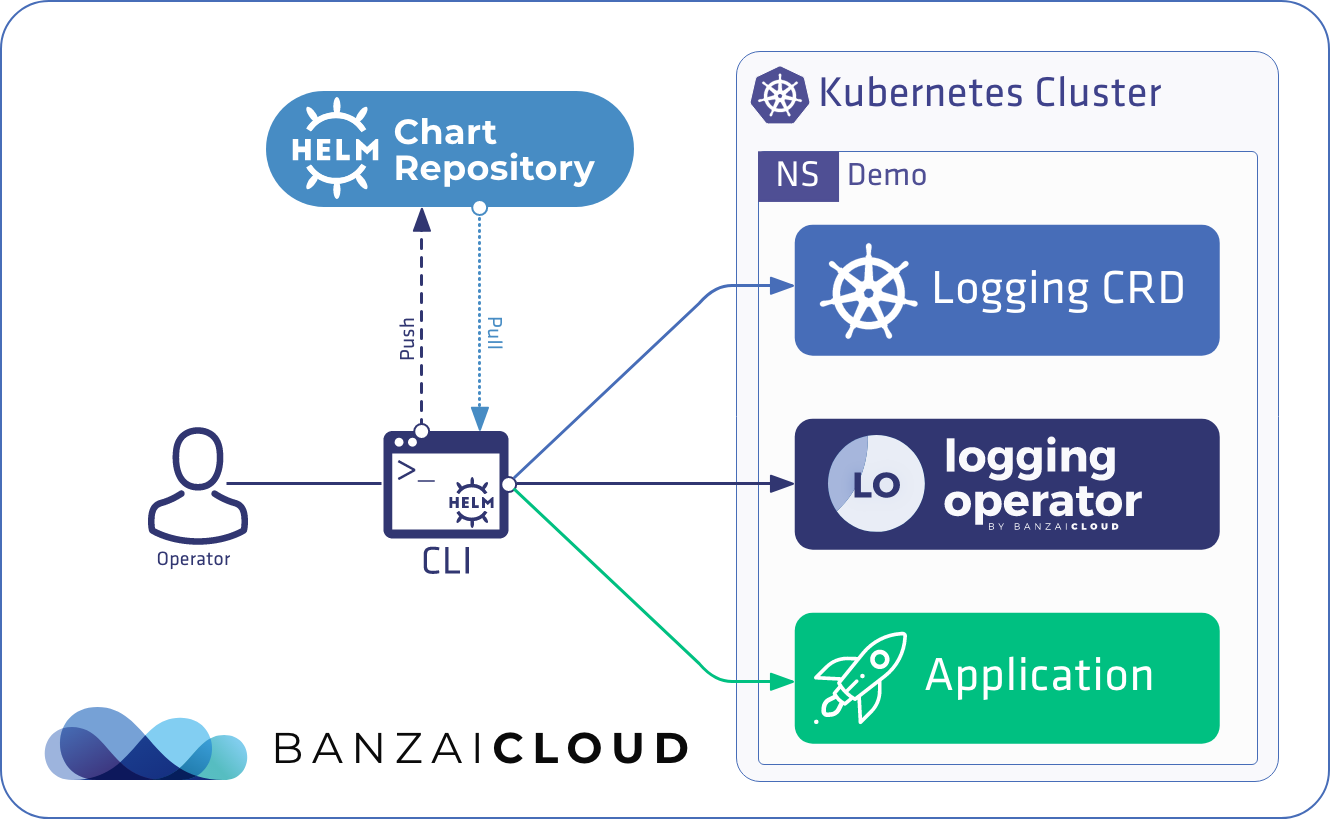

Helm’s operation is based on cooperation between two main components: a command line tool called helm, and a server component called tiller, which has to run on the cluster it manages.

The main building blocks of Helm-based deployments are Helm Charts: these charts describe a configurable set of dynamically generated Kubernetes resources. Charts can either be stored locally or fetched from remote chart repositories.

Installing Helm 🔗︎

To install the helm command use a supported package manager, or simply download the pre-compiled binary:

```shell
# Brew
$ brew install kubernetes-helm

# Choco
$ choco install kubernetes-helm

# Gofish
$ gofish install helm
```

Installing Tiller 🔗︎

Note: When RBAC is enabled on your cluster you may need to set proper permissions for the tiller pod.

To start deploying applications to a pure Kubernetes cluster you have to install tiller with the helm init command of the CLI tool.

```shell
# Select the Kubernetes context you want to use
$ kubectl config use-context docker-for-desktop

$ helm init
```

That’s it: we now have a working Helm and Tiller setup.

The Banzai Cloud Pipeline platform abstracts and automates all of this. We’ve also extended Helm to make deployments available through a REST API.

Helm repositories 🔗︎

You don’t need a Chart to be available locally, because Helm helps you manage remote repositories. It offers a stable repository by default, but you can also add and remove repositories.

```shell
$ helm repo add banzaicloud-stable https://kubernetes-charts.banzaicloud.com/branch/master
$ helm repo list
NAME                URL
stable              https://kubernetes-charts.storage.googleapis.com
banzaicloud-stable  https://kubernetes-charts.banzaicloud.com/branch/master/
```

Installing a Chart 🔗︎

Installing a chart to the cluster is really simple. You only need to specify the name of the chart (either a local chart or one in repository/chart format) and, optionally, custom configuration values.
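Conceptually, the chart’s defaults, any files passed with `-f`, and any `--set` flags are merged, with later sources winning (defaults < `-f` file < `--set`). The Go sketch below models that precedence with a recursive map merge and a tiny parser for `--set key.path=value`. It is an illustration under simplifying assumptions (values stay strings; no lists, type conversion or escaping), not Helm’s actual implementation; the values themselves are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// mergeValues builds a new top-level map: base with override applied on top.
// Nested maps are merged key by key; any other override value replaces the base value.
func mergeValues(base, override map[string]interface{}) map[string]interface{} {
	out := make(map[string]interface{}, len(base))
	for k, v := range base {
		out[k] = v
	}
	for k, v := range override {
		bm, bok := out[k].(map[string]interface{})
		om, ook := v.(map[string]interface{})
		if bok && ook {
			out[k] = mergeValues(bm, om)
		} else {
			out[k] = v
		}
	}
	return out
}

// parseSet turns a simplified "a.b.c=value" expression into a nested map.
// Assumption: values stay strings; Helm's own parser also handles types, lists and escapes.
func parseSet(expr string) (map[string]interface{}, error) {
	eq := strings.Index(expr, "=")
	if eq <= 0 {
		return nil, fmt.Errorf("invalid --set expression %q", expr)
	}
	keys := strings.Split(expr[:eq], ".")
	var node interface{} = expr[eq+1:]
	// Build the nested map from the innermost key outwards.
	for i := len(keys) - 1; i >= 0; i-- {
		node = map[string]interface{}{keys[i]: node}
	}
	return node.(map[string]interface{}), nil
}

func main() {
	defaults := map[string]interface{}{ // stands in for the chart's values.yaml
		"rbac":  map[string]interface{}{"enabled": "true"},
		"image": map[string]interface{}{"tag": "latest", "pullPolicy": "IfNotPresent"},
	}
	fromFile := map[string]interface{}{ // stands in for the contents of example.yaml
		"image": map[string]interface{}{"tag": "1.0.0"},
	}
	fromSet, err := parseSet("rbac.enabled=false")
	if err != nil {
		panic(err)
	}
	// Later sources win: defaults < -f file < --set.
	final := mergeValues(mergeValues(defaults, fromFile), fromSet)
	fmt.Println(final)
}
```

Merging nested maps key by key (rather than replacing whole subtrees) is what lets an override such as `image.tag` coexist with the default `image.pullPolicy`.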

```shell
# Install with default values
$ helm install banzaicloud-stable/logging-operator

# Install with custom yaml file
$ helm install banzaicloud-stable/logging-operator -f example.yaml

# Install with value overrides
$ helm install banzaicloud-stable/logging-operator --set rbac.enabled=false
```

Creating new Charts 🔗︎

Using Helm packages is really simple. Writing Helm charts is only a little bit more complex.

Helm Charts are source trees that contain a self-descriptor file, Chart.yaml, and one or more templates. Templates are Kubernetes manifest files that describe the resources you want to have on the cluster. Helm uses the Go templating engine by default.

Most charts include a file called values.yaml, which provides default configuration data for the templates in a structured format.

To create a new chart you should use the built-in helm create command. This will create a standard layout with some basic templates and examples.

```shell
$ helm create my-app
Creating my-app

$ tree my-app
my-app
├── Chart.yaml
├── charts
├── templates
│   ├── NOTES.txt
│   ├── _helpers.tpl
│   ├── deployment.yaml
│   ├── ingress.yaml
│   └── service.yaml
└── values.yaml
```

Useful functions 🔗︎

The real power behind Helm is its templating. Go templates can be difficult to read at first, but they make up for that with some useful functions. For detailed documentation of the available template functions, visit the Sprig homepage. Here we’ll walk through the most common and helpful ones.
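Sprig’s functions reach templates through Go’s `template.FuncMap` mechanism. The sketch below registers two small stand-ins, `default` and `indent`, written by hand to mirror the behavior of their Sprig namesakes for the simple string cases shown. They are illustrations, not Sprig’s code (in particular, this `default` treats only `nil` and `""` as empty).

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// defaultFn mimics Sprig's "default": return given unless it is empty, else the fallback.
// Simplification: only nil and "" count as empty here.
func defaultFn(fallback interface{}, given ...interface{}) interface{} {
	if len(given) == 0 || given[0] == nil || given[0] == "" {
		return fallback
	}
	return given[0]
}

// indentFn mimics Sprig's "indent": prefix every line of v with n spaces.
func indentFn(n int, v string) string {
	pad := strings.Repeat(" ", n)
	return pad + strings.ReplaceAll(v, "\n", "\n"+pad)
}

var funcs = template.FuncMap{"default": defaultFn, "indent": indentFn}

// render parses text with the custom functions available and executes it against data.
func render(text string, data interface{}) (string, error) {
	t, err := template.New("t").Funcs(funcs).Parse(text)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	err = t.Execute(&sb, data)
	return sb.String(), err
}

func main() {
	data := map[string]interface{}{"tag": "", "labels": "app: demo\ntier: web"}
	// In a pipeline, the previous value becomes the last argument:
	// `.tag | default "latest"` calls defaultFn("latest", .tag).
	out, err := render("image: nginx:{{ .tag | default \"latest\" }}\nlabels:\n{{ .labels | indent 2 }}\n", data)
	if err != nil {
		panic(err)
	}
	fmt.Print(out)
}
```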

Fake Random 🔗︎

You may be asking, ‘Why won’t a simple random value do?’ The problem is that if you generate a random string on each installation or upgrade, the new string overwrites the previously existing value every time, which causes unnecessary noise and other problems. To handle this, use derivePassword. It generates the same stable output on every run, based on several input sources.

Example:

```
sharedKey = "{{ .Values.tls.sharedKey | default (derivePassword 1 "long" (.Release.Time | toString) .Release.Name .Chart.Name ) }}"
```

You can read the detailed documentation here.
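The property that matters here is determinism: the same inputs always yield the same output, while a random generator does not. The Go sketch below illustrates that idea with HMAC-SHA256 from the standard library. This is a deliberately different and simpler technique than Sprig’s `derivePassword` (which implements a master-password style algorithm), and the secret and input strings are hypothetical. Note, too, that any derived value is only stable as long as every one of its inputs stays the same between releases.

```go
package main

import (
	"crypto/hmac"
	"crypto/rand"
	"crypto/sha256"
	"encoding/base64"
	"fmt"
	"strings"
)

// derive returns a stable string for the given secret and context inputs.
// Same inputs -> same output, so re-rendering would not churn the value.
func derive(secret string, inputs ...string) string {
	mac := hmac.New(sha256.New, []byte(secret))
	// Join with a separator unlikely to appear in the inputs,
	// so ("ab","c") and ("a","bc") do not collide.
	mac.Write([]byte(strings.Join(inputs, "\x00")))
	return base64.RawURLEncoding.EncodeToString(mac.Sum(nil))[:24]
}

// random returns a fresh value on every call, which is what causes churn on upgrades.
func random() string {
	b := make([]byte, 18)
	if _, err := rand.Read(b); err != nil {
		panic(err)
	}
	return base64.RawURLEncoding.EncodeToString(b)
}

func main() {
	a := derive("cluster-secret", "my-release", "logging-operator") // hypothetical inputs
	b := derive("cluster-secret", "my-release", "logging-operator")
	fmt.Println("derived values equal:", a == b)
	fmt.Println("random values equal: ", random() == random())
}
```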

Generating TLS certificates 🔗︎

Using encrypted channels is a standard way for components to communicate, although for testing and development you might not want to run a complete PKI just to issue test certificates. It’s possible to hardcode pre-generated certificates, but that is neither easy to maintain nor elegant. Sprig provides out-of-the-box support for self-signed certificates.

Note: It’s not recommended that you use self-signed server certificates in a production environment.

Here’s an example from our Logging Operator Helm chart:

```yaml
{{- if and .Values.tls.enabled (not .Values.tls.secretName) }}
{{ $ca := genCA "svc-cat-ca" 3650 }}
{{ $cn := printf "fluentd.%s.svc.cluster.local" .Release.Namespace }}
{{ $server := genSignedCert $cn nil nil 365 $ca }}
{{ $client := genSignedCert "" nil nil 365 $ca }}
apiVersion: v1
kind: Secret
metadata:
  name: {{ template "logging-operator.fullname" . }}
  labels:
    app: {{ template "logging-operator.name" . }}
    chart: {{ .Chart.Name }}-{{ .Chart.Version }}
    heritage: {{ .Release.Service }}
    release: {{ .Release.Name }}
data:
  caCert: {{ b64enc $ca.Cert }}
  clientCert: {{ b64enc $client.Cert }}
  clientKey: {{ b64enc $client.Key }}
  serverCert: {{ b64enc $server.Cert }}
  serverKey: {{ b64enc $server.Key }}
{{ end }}
```

For more information check out the official documentation.

Labels, Annotations and other attributes 🔗︎

Be aware of the Kubernetes capabilities your users may need, even the ones you’re not using yourself, and make your deployments as customizable as possible. Labels, annotations, node selectors, tolerations and affinity rules are good examples. You can set your own attributes, but you should also let end users add theirs. The best way to allow runtime overrides of objects like annotations and labels is to render values as YAML or JSON.

Example empty values:

```yaml
nodeSelector: {}
tolerations: []
affinity: {}
```

Example templates in Pod specification:

```yaml
      {{- with .Values.nodeSelector }}
      nodeSelector:
{{ toYaml . | indent 8 }}
      {{- end }}
      {{- with .Values.affinity }}
      affinity:
{{ toYaml . | indent 8 }}
      {{- end }}
      {{- with .Values.tolerations }}
      tolerations:
{{ toYaml . | indent 8 }}
      {{- end }}
```

Unique resource names 🔗︎

The _helpers.tpl file defines some useful named templates. Building resource names with them makes it easy to create a chart that can be deployed as more than one instance.

However, it’s often a better idea to deploy separate instances into different namespaces.

| Name | Usage | Override |
|---|---|---|
| my-app.name | `{{ include "my-app.name" . }}` | .Values.nameOverride |
| my-app.fullname | `{{ include "my-app.fullname" . }}` | .Values.fullnameOverride |
| my-app.chart | `{{ include "my-app.chart" . }}` | |

Creating Charts for customization 🔗︎

To create flexible Helm charts, you need to give users opportunities to override generated and default values. This makes it easier to deploy your chart to different environments and to build an Umbrella Chart on top of your charts.

Many of the charts in the official charts repository are “building blocks” for creating more advanced applications. But charts may be used to create instances of large-scale applications. In such cases, a single umbrella chart may have multiple subcharts, each of which functions as a piece of the whole.

Example configMapOverrideName value to handle custom configurations:

```yaml
- name: config-volume
  configMap:
    name: {{ if .Values.configMapOverrideName }}{{ .Release.Name }}-{{ .Values.configMapOverrideName }}{{- else }}{{ template "my-app.fullname" . }}{{- end }}
```

Creating umbrella Charts 🔗︎

To create a chart that builds on one or more other charts, refer to well-defined versions of the dependent charts in the requirements.yaml file.

For example, to install our MySQL chart alongside your application, create a requirements.yaml with the following contents:

```yaml
dependencies:
  - name: mysql
    version: 0.7.1
    repository: alias:banzaicloud-stable
    condition: mysql.enabled
```

This requirements file installs the mysql chart from a custom repository at a fixed version. The chart is only installed when the value of mysql.enabled is true.
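The `condition` field is a dotted path into the parent chart’s values. Helm’s documentation describes it (as we understand it) as a comma-separated list of paths: the first path that exists and resolves to a boolean decides, and if none does, the condition has no effect. The Go sketch below models just that lookup; `tags` and the rest of Helm’s dependency handling are not modeled, and the values are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// lookup walks a dotted path such as "mysql.enabled" through nested maps.
func lookup(values map[string]interface{}, path string) (interface{}, bool) {
	var cur interface{} = values
	for _, key := range strings.Split(path, ".") {
		m, ok := cur.(map[string]interface{})
		if !ok {
			return nil, false
		}
		if cur, ok = m[key]; !ok {
			return nil, false
		}
	}
	return cur, true
}

// enabled evaluates a comma-separated condition: the first path that exists
// and holds a bool decides; if none does, the dependency stays enabled.
func enabled(values map[string]interface{}, condition string) bool {
	for _, path := range strings.Split(condition, ",") {
		if v, ok := lookup(values, strings.TrimSpace(path)); ok {
			if b, isBool := v.(bool); isBool {
				return b
			}
		}
	}
	return true
}

func main() {
	values := map[string]interface{}{
		"mysql": map[string]interface{}{"enabled": false, "mysqlDatabase": "example"},
	}
	fmt.Println("mysql installed:", enabled(values, "mysql.enabled"))
	fmt.Println("postgres installed:", enabled(values, "postgres.enabled"))
}
```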

From your own chart’s values.yaml you can override the defaults set in the required chart’s values.yaml. A dependent chart receives the subtree of your values structure under the key matching its name:

```yaml
mysql:
  enabled: true
  nameOverride: my-example-db
  mysqlDatabase: example
```

Using long files as configmaps 🔗︎

Occasionally, you may want to use a long file as a Kubernetes configmap without running it through the template engine. You can use the Files.Get function to include such files in one or more configmap manifests.

Note: The path is relative to the chart’s root directory.

```yaml
{{- if .Values.grafana.dashboard.enabled }}
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ template "logging-operator.fullname" . }}-grafana-dashboard-logging
  labels:
    pipeline_grafana_dashboard: "1"
data:
  logging.json: |-2
{{.Files.Get "grafana-dashboards/logging-dashboard_rev1.json"| indent 4}}
{{- end }}
```

Design principles 🔗︎

The following sections are not functional requirements but design guidelines. Helm charts can quickly grow unwieldy and complex, which is why it’s important to keep them consistent and tidy. If you’re not sure about something, the official Helm Best Practices documentation is a good starting point.

Values 🔗︎

Values are the primary customization point of any Helm deployment. They have to be open to customization, yet simple enough for easy deployment. At Banzai Cloud we follow a few simple rules that help us achieve this.

Use as few hierarchy levels as possible; avoid redundant parents

Bad

```yaml
my-app:
  config:
    rbac:
      enabled: true
```

Good

```yaml
rbac:
  enabled: true
```

Use a hierarchy, not a prefix/suffix

Bad

```yaml
tls_secret: aaa
```

Good

```yaml
tls:
  secret: aaa
```

Consistent naming

Consistent naming makes it easier for you and other developers to contribute to the code. Every language has its own guidelines; since Kubernetes uses camelCase for its field names, it’s recommended that you use it in charts as well.

Bad

```yaml
secret_name: aaa
Config_Name: bbb
```

Good

```yaml
secretName: my-secret
```

Use feature switch conditions 🔗︎

As you dig deeper into functionality, several options often end up bundled together. A good example of this is the use of TLS certificates for in-cluster communication, since you have to change several attributes that go hand in hand.

Look at this example 🔗︎

```yaml
service:
  tls:
    enabled: true
...
```

This small snippet completely changes the behaviour of these components:

- Ingress frontend and backend schemes and ports

```yaml
ports:
  - port: {{ .Values.service.externalPort }}
    targetPort: {{ .Values.service.internalPort }}
    protocol: TCP
    {{- if .Values.service.tls.enabled }}
    name: "https-{{ .Values.service.name }}"
    {{- else }}
    name: "{{ .Values.service.name }}"
    {{- end }}
```

- Service schemes and ports

```yaml
type: {{ .Values.service.type }}
ports:
  - port: {{ .Values.service.externalPort }}
    targetPort: {{ .Values.service.internalPort }}
    protocol: TCP
    {{- if .Values.service.tls.enabled }}
    name: "https-{{ .Values.service.name }}"
    {{- else }}
    name: "{{ .Values.service.name }}"
    {{- end }}
```

- Readiness and Liveness probes

```yaml
livenessProbe:
  httpGet:
    path: {{ .Values.pipelineBasepath }}/api
    port: {{ .Values.service.internalPort }}
    {{- if .Values.service.tls.enabled }}
    scheme: HTTPS
    {{- end }}
  initialDelaySeconds: 15
readinessProbe:
  httpGet:
    path: {{ .Values.global.pipelineBasepath }}/api
    port: {{ .Values.service.internalPort }}
    {{- if .Values.service.tls.enabled }}
    scheme: HTTPS
    {{- end }}
  initialDelaySeconds: 10
```

- Mount secrets

```yaml
{{- if .Values.service.tls.enabled }}
- name: tls-certificate
  mountPath: /tls
{{- end }}
```
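One subtlety when writing these switches: in Go templates, `if` tests for emptiness, not for `true`. A non-empty map such as `tls: {enabled: false}` is therefore truthy, so a guard on `.Values.service.tls` fires even when TLS is switched off, while a guard on the boolean leaf `.Values.service.tls.enabled` behaves as intended. The small Go demonstration below uses `text/template` with hypothetical values.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// scheme renders a probe scheme using the given guard expression.
func scheme(guard string, values map[string]interface{}) (string, error) {
	text := "{{ if " + guard + " }}HTTPS{{ else }}HTTP{{ end }}"
	t, err := template.New("probe").Parse(text)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	err = t.Execute(&sb, map[string]interface{}{"Values": values})
	return sb.String(), err
}

func main() {
	values := map[string]interface{}{
		"service": map[string]interface{}{
			"tls": map[string]interface{}{"enabled": false}, // TLS switched off
		},
	}
	for _, guard := range []string{".Values.service.tls", ".Values.service.tls.enabled"} {
		out, err := scheme(guard, values)
		if err != nil {
			panic(err)
		}
		// The map guard yields HTTPS (non-empty map); the boolean guard yields HTTP.
		fmt.Printf("%-30s -> %s\n", guard, out)
	}
}
```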

Using NOTES.txt 🔗︎

NOTES.txt provides information for users deploying your chart. It’s templated as well, so you can give them useful information to help them start using the deployed application. Generally, you should print the created endpoints in the format users are most likely to need.

This snippet is an example of a command that lets users reach a created service.

```
POD_NAME=$(kubectl get pods --namespace {{ .Release.Namespace }} -l "app={{ template "prometheus.name" . }},component={{ .Values.pushgateway.name }}" -o jsonpath="{.items[0].metadata.name}")
kubectl --namespace {{ .Release.Namespace }} port-forward $POD_NAME 9091
```

Readme 🔗︎

No chart is complete until you write a README file. A good readme includes a brief description of the Chart, as well as some examples and some details about install options. If you’re in need of inspiration, check out another Chart’s readme, like our Cadence Chart.

Check your chart 🔗︎

Don’t forget to check your chart for syntax errors. It’s much faster than searching for errors after deployment.

```shell
$ helm lint my-chart
==> Linting my-chart
Lint OK

1 chart(s) linted, no failures
```

Debugging 🔗︎

Last but not least, debugging. To see what Helm renders from your templates without actually installing anything, use the --debug and --dry-run options.

```shell
$ helm install chart-name --debug --dry-run
```

What did I install? 🔗︎

Getting values used by a running deployment may also come in handy.

```shell
$ helm get values release-name
```

I hope you found this short article about Helm useful and enjoyable.

Learn more about Helm: