Helm version 3 has been out officially for some time (release blog post was published on Wed, Nov 13, 2019). We’ve been using Helm since the early days of Kubernetes, and it’s been a core part of our Pipeline container management platform since day one. We’ve been making the switch to Helm 3 for a while and, as the title of this post indicates, today we’ll be digging into some details of our experience in transitioning between versions, and of using Helm as a Kubernetes release manager.

We expected Helm 3 to solve some of our most pressing problems with Helm 2, and it has solved several of them (removing Tiller, better CRD handling), though we would have liked to see support for Lua.

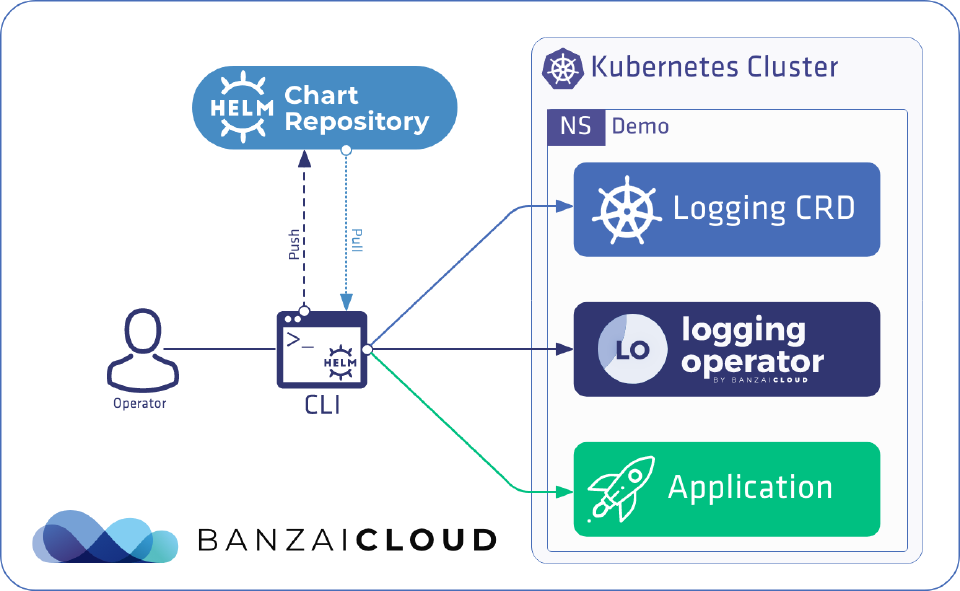

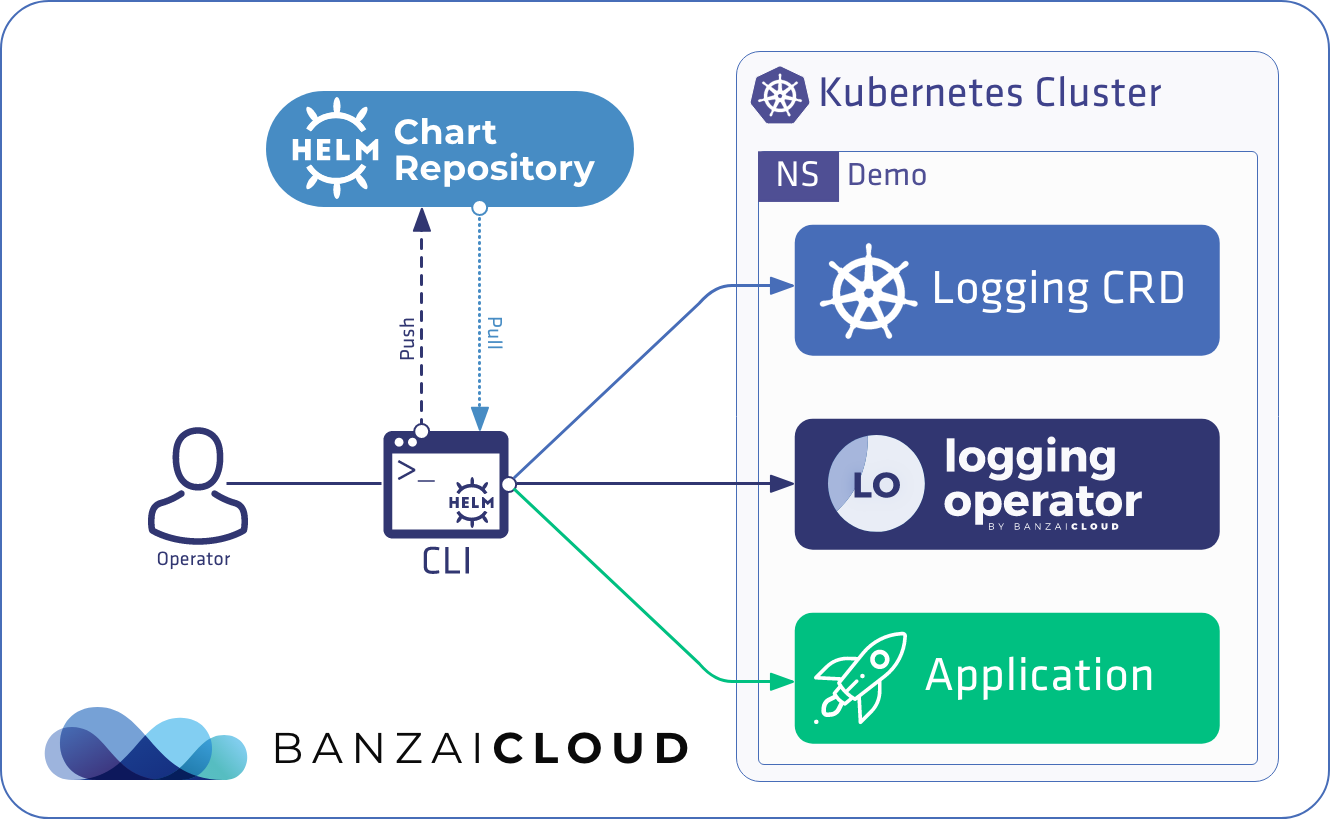

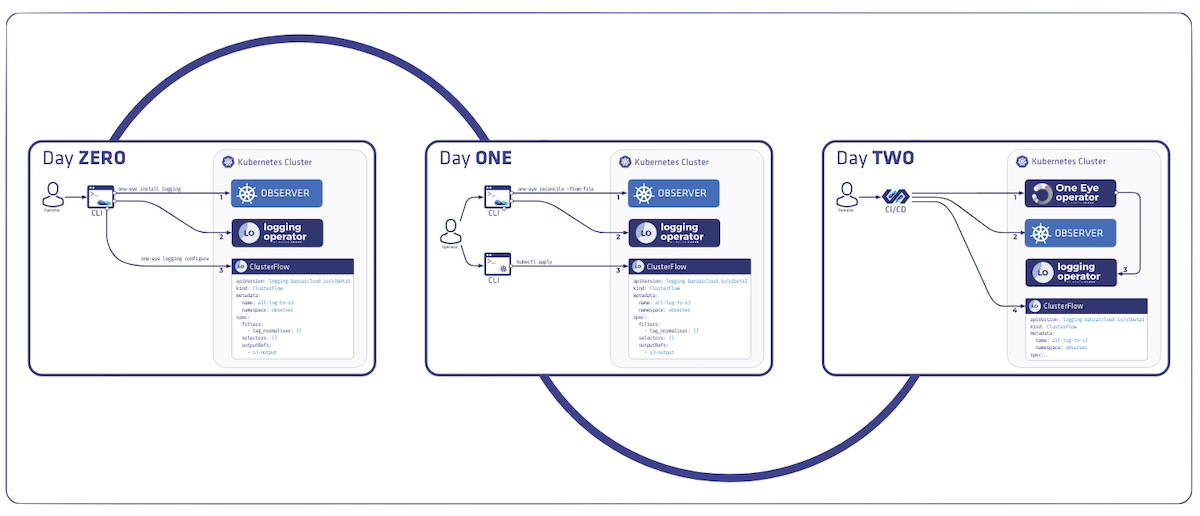

Recently at Banzai Cloud we took our fate into our own hands, and switched to a declarative installation methodology for the products and applications we are building and installing on Kubernetes. This does not compete with using Helm directly; it is complementary. We needed a method of installing applications to Kubernetes that had Helm’s simplicity; at the same time, that tool (binary) had to be capable of acting as a CLI, a local reconciler, and an in-cluster operator. To see it in action with Supertubes, our Kafka as a Kubernetes service, read this post: Declarative deployment of Apache Kafka on Kubernetes with Supertubes.

Now let’s start with the official changes in Helm 3 and our reflections on them.

Removal of Tiller

The main benefit of Helm 3 is the removal of Tiller, its previous version’s God component. This part of the stack needed broad permissions (in practice often cluster-admin) in order to install other resources, and exposed a generic API for doing so. Without Tiller, Helm has become a much safer tool to work with.

Release names are now scoped to the Namespace

The release name scope has been reduced from global to the namespace. This has some benefits, but ignores the fact that a release may contain cluster-scoped resources, in which case resources from releases in different namespaces may still collide. Because of the growing prevalence of multi-tenant Kubernetes, we’re counting this as an improvement (if the cluster is properly configured, this helps us avoid stepping on each other’s toes).
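To make the collision concrete, here is a small Python sketch. It is not Helm code, and the release and resource names are made up for illustration. It models a release as a (namespace, name) pair, while cluster-scoped objects are still identified by kind and name alone.

```python
from collections import defaultdict

# A small, non-exhaustive sample of kinds that live outside any namespace.
CLUSTER_SCOPED = {"ClusterRole", "ClusterRoleBinding", "CustomResourceDefinition"}


def resource_key(namespace, kind, name):
    """Identity of an object as the API server sees it."""
    if kind in CLUSTER_SCOPED:
        return (kind, name)  # the namespace plays no role here
    return (namespace, kind, name)


def find_collisions(releases):
    """releases maps (namespace, release_name) -> list of (kind, name)."""
    owners = defaultdict(list)
    for (namespace, release), resources in releases.items():
        for kind, name in resources:
            owners[resource_key(namespace, kind, name)].append((namespace, release))
    # Keep only objects claimed by more than one release.
    return {key: who for key, who in owners.items() if len(who) > 1}


# Hypothetical releases: same release name, different namespaces.
releases = {
    ("team-a", "monitoring"): [("Deployment", "monitoring"), ("ClusterRole", "monitoring")],
    ("team-b", "monitoring"): [("Deployment", "monitoring"), ("ClusterRole", "monitoring")],
}
collisions = find_collisions(releases)
print(collisions)
```

The two Deployments coexist because they live in different namespaces, but both releases claim the same ClusterRole.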

Improved upgrade strategy: 3-way strategic merge patches

This is something kubectl has been using for quite a while, and it is worthy of its own post. We’ve built lots of operators (Istio, Kafka, PVC, HPA, Logging, Thanos, Vault, etc.) and we needed a standardized method of avoiding unnecessary object matching checks and updates (which actually matter at the scale we run our clusters). Accordingly, we have already open-sourced a project and blogged about the problems with 3-way strategic merge patches and their solutions in our Kubernetes object matcher library post.

Secrets as the default storage driver

Another benefit of Helm 3 is that release state is now stored in Secrets by default rather than in ConfigMaps, and each release revision gets its own object in the namespace of the release. This means it’s much easier to track what’s happening to resources, and that it’s less likely to exhaust Kubernetes’ limits.
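If you want to look inside one of these records, the following Python sketch shows the decoding steps. It assumes the commonly described layout of Helm 3’s Secret driver: a Secret named sh.helm.release.v1.<release>.v<revision> whose release key holds gzip-compressed JSON that Helm base64-encodes, with the Kubernetes API adding one more base64 layer on top. The sample record is a made-up subset of fields, and the function names are ours.

```python
import base64
import gzip
import json


def secret_name(release, revision):
    """Name of the Secret holding one revision of a release (assumed naming scheme)."""
    return f"sh.helm.release.v1.{release}.v{revision}"


def encode_release(release_obj):
    """Build the value as it would appear under .data.release in the API."""
    raw = json.dumps(release_obj).encode()
    helm_payload = base64.b64encode(gzip.compress(raw))  # Helm's own encoding
    return base64.b64encode(helm_payload).decode()       # Secret data encoding


def decode_release(data_release):
    """Reverse the layers: Secret base64, Helm base64, gzip, JSON."""
    helm_payload = base64.b64decode(data_release)
    compressed = base64.b64decode(helm_payload)
    return json.loads(gzip.decompress(compressed))


# Hypothetical, heavily trimmed release record.
record = {"name": "prom83", "namespace": "default", "version": 1}
encoded = encode_release(record)
print(secret_name("prom83", 1))
print(decode_release(encoded))
```

Since every revision has its own Secret, inspecting the history of a release comes down to listing and decoding these objects one by one.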

Validating Chart Values with JSONSchema

This is a good way to increase the quality of charts. Linting and validation have been relatively problematic, and there are several ways to screw up charts: it’s easy to mistype or misconfigure values, or to generate templates that are not valid for Kubernetes. With a values.schema.json file in the chart, the supplied values are checked by helm lint as well as before install, upgrade and template operations. There is a great post about Validating Helm Chart Values with JSON Schemas.
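To give a feel for the kind of mistakes a schema catches, here is a deliberately tiny Python sketch. Helm relies on a full JSON Schema implementation; this toy validator only understands type, required and properties, and both the schema and the values below are hypothetical.

```python
# Map the JSON Schema type names used below to Python types.
TYPES = {"object": dict, "string": str, "integer": int, "boolean": bool}


def validate(value, schema, path="values"):
    """Return a list of human-readable errors (empty when the value is valid)."""
    expected = schema.get("type")
    if expected is not None:
        ok = isinstance(value, TYPES[expected])
        if expected == "integer" and isinstance(value, bool):
            ok = False  # bool is a subclass of int in Python
        if not ok:
            return [f"{path}: expected {expected}, got {type(value).__name__}"]
    errors = []
    if isinstance(value, dict):
        for key in schema.get("required", []):
            if key not in value:
                errors.append(f"{path}: missing required key '{key}'")
        for key, sub_schema in schema.get("properties", {}).items():
            if key in value:
                errors.extend(validate(value[key], sub_schema, f"{path}.{key}"))
    return errors


# Hypothetical schema, in the spirit of a values.schema.json file.
schema = {
    "type": "object",
    "required": ["image"],
    "properties": {
        "replicaCount": {"type": "integer"},
        "image": {
            "type": "object",
            "required": ["repository"],
            "properties": {
                "repository": {"type": "string"},
                "tag": {"type": "string"},
            },
        },
    },
}

# Two typical slips: a number passed as a string, and a missing key.
bad_values = {"replicaCount": "3", "image": {"tag": "1.1.1"}}
errors = validate(bad_values, schema)
for line in errors:
    print(line)
```

Both problems are reported before any template is rendered, which is exactly where you want to find them.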

Consolidation of requirements.yaml into Chart.yaml

This is just a minor change, but the fewer configuration files the better.

Pushing Charts to OCI Registries

Another interesting addition is the ability to use OCI-compatible registries as chart repository backends. This is super handy if you don’t want to manage yet another repository. However, this feature is still considered experimental.

    $ helm chart list
    Error: this feature has been marked as experimental and is not enabled by default. Please set HELM_EXPERIMENTAL_OCI=1 in your environment to use this feature

After exporting the relevant environment variable we can use the chart commands.

    export HELM_EXPERIMENTAL_OCI=1

    # Start a registry in the background
    docker run -dp 5000:5000 --restart=always --name registry registry

    # Save chart in the local cache
    helm chart save logging-operator localhost:5000/myrepo/logging-operator:1.1.1

    # Push chart to the remote repository
    helm chart push localhost:5000/myrepo/logging-operator:1.1.1

    # List charts
    helm chart list

    # Pull chart from the remote repository
    helm chart pull localhost:5000/myrepo/logging-operator:1.1.1

You can install charts the same way by using the full path.

Library chart support

We’re not big fans of library charts; nevertheless, they have received an official type identifier (type: library in Chart.yaml), and are thus worth mentioning. So let’s take a look. These charts contain only template definitions. What does that mean? Basically, when you create the same kind of resource (like Kubernetes Deployments) several times, you can reuse templates from a library chart instead of duplicating definitions such as metadata.

This sounds good but, due to the nature of templating, it introduces a new level of complexity. Let’s take a look at an official example to see what we’re talking about.

This is a deployment definition from the official repository.

    {{- define "common.deployment.tpl" -}}
    apiVersion: extensions/v1beta1
    kind: Deployment
    {{ template "common.metadata" . }}
    spec:
      template:
        metadata:
          labels:
            app: {{ template "common.name" . }}
            release: {{ .Release.Name | quote }}
        spec:
          containers:
          -
    {{ include "common.container.tpl" . | indent 8 }}
    {{- end -}}
    {{- define "common.deployment" -}}
    {{- template "common.util.merge" (append . "common.deployment.tpl") -}}
    {{- end -}}

It constructs a deployment from other templates like common.metadata and common.container.

This is what common.container looks like.

    {{- define "common.container.tpl" -}}
    name: {{ .Chart.Name }}
    image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
    imagePullPolicy: {{ .Values.image.pullPolicy }}
    ports:
    - name: http
      containerPort: 80
    resources:
    {{ toYaml .Values.resources | indent 2 }}
    {{- end -}}
    {{- define "common.container" -}}
    {{- /* clear new line so indentation works correctly */ -}}
    {{- println "" -}}
    {{- include "common.util.merge" (append . "common.container.tpl") | indent 8 -}}
    {{- end -}}

If you want to use those libraries you could craft your deployment like so:

    {{- template "common.deployment" (list . "mychart.deployment") -}}
    {{- define "mychart.deployment" -}}
    ## Define overrides for your Deployment resource here, e.g.
    spec:
      template:
        spec:
          containers:
          - {{ template "common.container" (list . "mychart.deployment.container") }}
    {{- end -}}
    {{- define "mychart.deployment.container" -}}
    ## Define overrides for your Container here, e.g.
    livenessProbe:
      httpGet:
        path: /
        port: 80
    readinessProbe:
      httpGet:
        path: /
        port: 80
    {{- end -}}

As you can see, you need to define the values in your own templates for Helm to be able to merge them into the underlying definitions. So if you want to use values from your values.yaml file, you need to reference them here. To truly take advantage of library charts you need very specific knowledge of the underlying implementation. There are some generic use cases, like the container image spec, where they can be useful, but you need to be mindful of copy-paste errors and of how you name the templates.

This is still just template merging without any validation of the specific resource, which, in our opinion, may be more error-prone than simply copying common attributes. It also means that changing default behaviors later becomes troublesome. Taken all together, we don’t use or recommend library charts, since they make charts harder both to write and to read.
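The merge step is where most of the surprises come from. As a rough mental model, and assuming that common.util.merge behaves like a recursive dictionary merge in which the chart’s overrides win over the library defaults, the behavior can be sketched in Python. The helper’s real implementation is a Go template and is not reproduced here; the container data below is made up.

```python
def deep_merge(overrides, defaults):
    """Merge two dicts recursively; values from overrides take precedence."""
    merged = dict(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(value, merged[key])
        else:
            # Scalars and lists are replaced wholesale, not combined.
            merged[key] = value
    return merged


# What the (hypothetical) library template renders for a container.
library_container = {
    "name": "mychart",
    "image": "nginx:1.17",
    "ports": [{"name": "http", "containerPort": 80}],
}

# What the chart author defines as overrides.
chart_overrides = {
    "livenessProbe": {"httpGet": {"path": "/", "port": 80}},
    "ports": [{"name": "metrics", "containerPort": 9090}],
}

result = deep_merge(chart_overrides, library_container)
print(result)
```

Under this assumption, adding a second port in the overrides silently drops the http port defined by the library, because the list is replaced rather than extended. This is the kind of behavior you can only predict if you know how the underlying templates are put together.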

Managing CRDs

Kubernetes operators have become as common as Pods themselves. If you are running Kubernetes in production, we are sure that you have several custom resource definitions installed. Kubernetes operators use CRDs to define resources in a structured manner. This has a lot of benefits, including reacting to the events of such resources, keeping track of states, and much more. The problem with CRDs is that both the operator and the custom resource objects depend on them, so they have to exist first. Helm has always struggled to manage these components. Let’s see how Helm 2 handled them.

CRD pre-hook

The idea was to install custom resource definitions before the deployment and do this only once per installation. This solved some ordering issues but:

- There was an undefined time between applying a CRD and being able to use the definition to apply the CR. Hooks didn’t wait for CRDs to become available.

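- There was no upgrade path for the CRDs, as they were installed by a hook (crd-install) that ran only at install time, and hook-created resources are not managed as part of the release.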

- If you deleted a CRD the upgrade wouldn’t try to install it again, so obviously the deployment would fail since the CRD was not available.

- You needed to specify the removal model in the deployment, and the CRD delete behavior in the Chart (of course you could template this as well). However, without proper lifecycle management, different charts would get different results.
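The first point in the list above deserves a closer look. A CRD is only usable once the API server reports it as established, which happens some time after the object is created. The Python sketch below shows that check on a CRD object in dict form (for example, parsed from the JSON output of kubectl get crd), assuming the standard Established condition under status.conditions. The function name and sample objects are ours.

```python
def is_established(crd):
    """True if the CRD reports an Established condition with status "True"."""
    for condition in crd.get("status", {}).get("conditions", []):
        if condition.get("type") == "Established":
            return condition.get("status") == "True"
    return False  # no such condition yet: the CRD was only just created


# Hypothetical snapshots of the same CRD, right after creation and a bit later.
just_created = {"metadata": {"name": "prometheuses.monitoring.coreos.com"}}
ready = {
    "metadata": {"name": "prometheuses.monitoring.coreos.com"},
    "status": {
        "conditions": [
            {"type": "NamesAccepted", "status": "True"},
            {"type": "Established", "status": "True"},
        ]
    },
}

print(is_established(just_created))
print(is_established(ready))
```

A custom resource applied while the first snapshot is still the current state is rejected, which is the race that the Helm 2 hooks did not guard against.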

CRD as a first-class citizen

In Helm 3, CRDs get their own place in charts: the crds directory is reserved for them. The installation ensures (finally) that the CRD is available on the Kubernetes API and ready for use. That’s it; no more, no less. There’s still no upgrade or delete policy, which means that the same upgrade problems persist. See this example with Prometheus Operator Chart version 8.3:

Install the operator:

    # Install the official Prometheus Operator Chart 8.3
    $ helm install prom83 stable/prometheus-operator --version 8.3

Check if our CRDs are available:

    $ kubectl get crds
    alertmanagers.monitoring.coreos.com 2020-05-22T10:30:58Z
    podmonitors.monitoring.coreos.com 2020-05-22T10:30:58Z
    prometheuses.monitoring.coreos.com 2020-05-22T10:30:59Z
    prometheusrules.monitoring.coreos.com 2020-05-22T10:30:59Z
    servicemonitors.monitoring.coreos.com 2020-05-22T10:30:59Z

Delete CRDs:

    $ kubectl delete crd --all

Try an upgrade:

    $ helm upgrade prom83 stable/prometheus-operator
    Error: UPGRADE FAILED: [unable to recognize "": no matches for kind "Alertmanager" in version "monitoring.coreos.com/v1", unable to recognize "": no matches for kind "Prometheus" in version "monitoring.coreos.com/v1", unable to recognize "": no matches for kind "PrometheusRule" in version "monitoring.coreos.com/v1", unable to recognize "": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"]

Okay, no worries. We can just delete the release and install a fresh one.

    $ helm delete prom83
    Error: uninstallation completed with 1 error(s): unable to build kubernetes objects for delete: [unable to recognize "": no matches for kind "Alertmanager" in version "monitoring.coreos.com/v1", unable to recognize "": no matches for kind "Prometheus" in version "monitoring.coreos.com/v1", unable to recognize "": no matches for kind "PrometheusRule" in version "monitoring.coreos.com/v1", unable to recognize "": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"]

This is an actual bug, for which we have already opened a PR. The bigger problem is that Helm has already deleted the Secret that stored the release, so our resources are stuck in the cluster.

    $ helm list
    NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

    $ helm list --failed
    NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

    $ helm list --uninstalled
    NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

    $ helm list --uninstalling
    NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

    $ helm list --pending
    NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

    $ helm list --all
    NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

This is a great example of how Helm can leave your deployment in an inconsistent state. Now you have to manually remove all the resources created by the previously installed chart.

Changing the unchangeable

Several attributes cannot be changed on a live Kubernetes resource, such as the selector labels of a StatefulSet or the roleRef of a RoleBinding. To overcome this limitation you have to delete and recreate the object. The following example demonstrates this, based on this GitHub issue.

    $ helm create example
    Creating example

    $ cat > example/templates/rolebinding.yaml <<EOF
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: {{ include "example.fullname" $ }}
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: {{ .Values.role }}
    subjects: []
    EOF

    $ helm install ex example --set role=view
    NAME: ex
    LAST DEPLOYED: Fri May 22 12:50:50 2020
    NAMESPACE: default
    STATUS: deployed
    REVISION: 1
    NOTES:
    1. Get the application URL by running these commands:
      export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=example,app.kubernetes.io/instance=ex" -o jsonpath="{.items[0].metadata.name}")
      echo "Visit http://127.0.0.1:8080 to use your application"
      kubectl --namespace default port-forward $POD_NAME 8080:80

    $ helm upgrade --force ex example --set role=edit
    Error: UPGRADE FAILED: failed to replace object: RoleBinding.rbac.authorization.k8s.io "ex-example" is invalid: roleRef: Invalid value: rbac.RoleRef{APIGroup:"rbac.authorization.k8s.io", Kind:"ClusterRole", Name:"edit"}: cannot change roleRef

As you can see, the object can’t be changed and Helm does not, as of yet, offer a workaround.

Knowing about your Kubernetes resources

The recurring problem here is that Helm is a clever templating tool for YAML files and doesn’t care how Kubernetes works. Another great example is the following three-way patch problem.

We were facing similar problems when writing Kubernetes operators; check out the Kubernetes object matcher library for our solution.

The following example is based on this issue comment.

Let’s see an example service definition snippet:

    # values.yaml
    controller:
      service:
        clusterIP: ""
    ---
    # service.yaml
    ...
      clusterIP: "{{ .Values.controller.service.clusterIP }}"

You can install this definition on a Kubernetes cluster and the service will be created. However, when you try to upgrade the chart you get an error.

    Cannot patch Service: "example-service" (Service "example-service" is invalid: spec.clusterIP: Invalid value: "": field is immutable)

So what happened here? After you created the service, Kubernetes assigned a cluster IP to it (let’s say 10.0.0.1). When generating the three-way patch, Helm knows that the old state was "", the live state is currently "10.0.0.1", and the proposed state is "". Helm therefore concluded that the user requested to change the clusterIP from "10.0.0.1" to "", which Kubernetes rejects because the field is immutable.

“Helm 2 ignored the live state, so there was no change (old state: clusterIP: "" to new state: clusterIP: ""), and no patch was generated, bypassing this behavior.”
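The difference between the two strategies can be sketched in a few lines of Python. This is a simplified model for illustration, not Helm’s or Kubernetes’ actual strategic merge patch code: it only handles flat fields, and the function names are ours.

```python
_MISSING = object()  # sentinel so that a missing key never equals a real value


def two_way_patch(old, new):
    """Helm 2 style: compare the previous manifest with the new one only."""
    patch = {k: v for k, v in new.items() if old.get(k, _MISSING) != v}
    # Fields that were in the old manifest but are gone now get removed (None).
    patch.update({k: None for k in old if k not in new})
    return patch


def three_way_patch(old, live, new):
    """Helm 3 style: changes come from live vs new, removals from old vs new."""
    patch = {k: v for k, v in new.items() if live.get(k, _MISSING) != v}
    patch.update({k: None for k in old if k not in new and k in live})
    return patch


old = {"clusterIP": ""}           # what the previous release rendered
live = {"clusterIP": "10.0.0.1"}  # what Kubernetes actually stores
new = {"clusterIP": ""}           # what the upgrade renders

print(two_way_patch(old, new))          # {}
print(three_way_patch(old, live, new))  # {'clusterIP': ''}
```

The two-way comparison sees no difference and sends nothing, while the three-way comparison tries to set clusterIP back to an empty string. If the field is never rendered, old and new are both empty and three_way_patch({}, live, {}) produces an empty patch as well.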

The recommended workaround is to not set empty values unless you want to use them. The following snippet doesn’t render the clusterIP field at all when the value is empty:

    # values.yaml
    controller:
      service:
        clusterIP: ""
    ---
    # service.yaml
    ...
      {{ if .Values.controller.service.clusterIP }}
      clusterIP: "{{ .Values.controller.service.clusterIP }}"
      {{ end }}

Takeaway

This might sound a little harsh, and Helm 3 is a great step toward a truly functional deployment tool, but it still doesn’t help solve real day-to-day issues. This may not be a definitive conclusion, and it may be specific to the type and scale of the Kubernetes clusters and the deployments our customers are running on them (Pipeline is used in 5 clouds and on-prem datacenters to run and manage the application lifecycle and Day 2 operations).

Ultimately, Helm is a clever templating tool, but we believe it misses the opportunity it has to understand underlying objects and behave accordingly. There are several other tools that allow you to deploy on Kubernetes, but each of them has its limitations, so it’s worth experimenting to find the one that best suits your needs: