At Banzai Cloud we are building Pipeline, a feature-rich, enterprise-grade application platform for containers, built on top of Kubernetes. For an enterprise-grade platform, security is a must, and it has many building blocks. Please read through the Security series on our blog to learn how we deal with a variety of security-related issues.

Security series:

- Authentication and authorization of Pipeline users with OAuth2 and Vault
- Dynamic credentials with Vault using Kubernetes Service Accounts
- Dynamic SSH with Vault and Pipeline
- Secure Kubernetes Deployments with Vault and Pipeline
- Policy enforcement on K8s with Pipeline
- The Vault swiss-army knife
- The Banzai Cloud Vault Operator
- Vault unseal flow with KMS

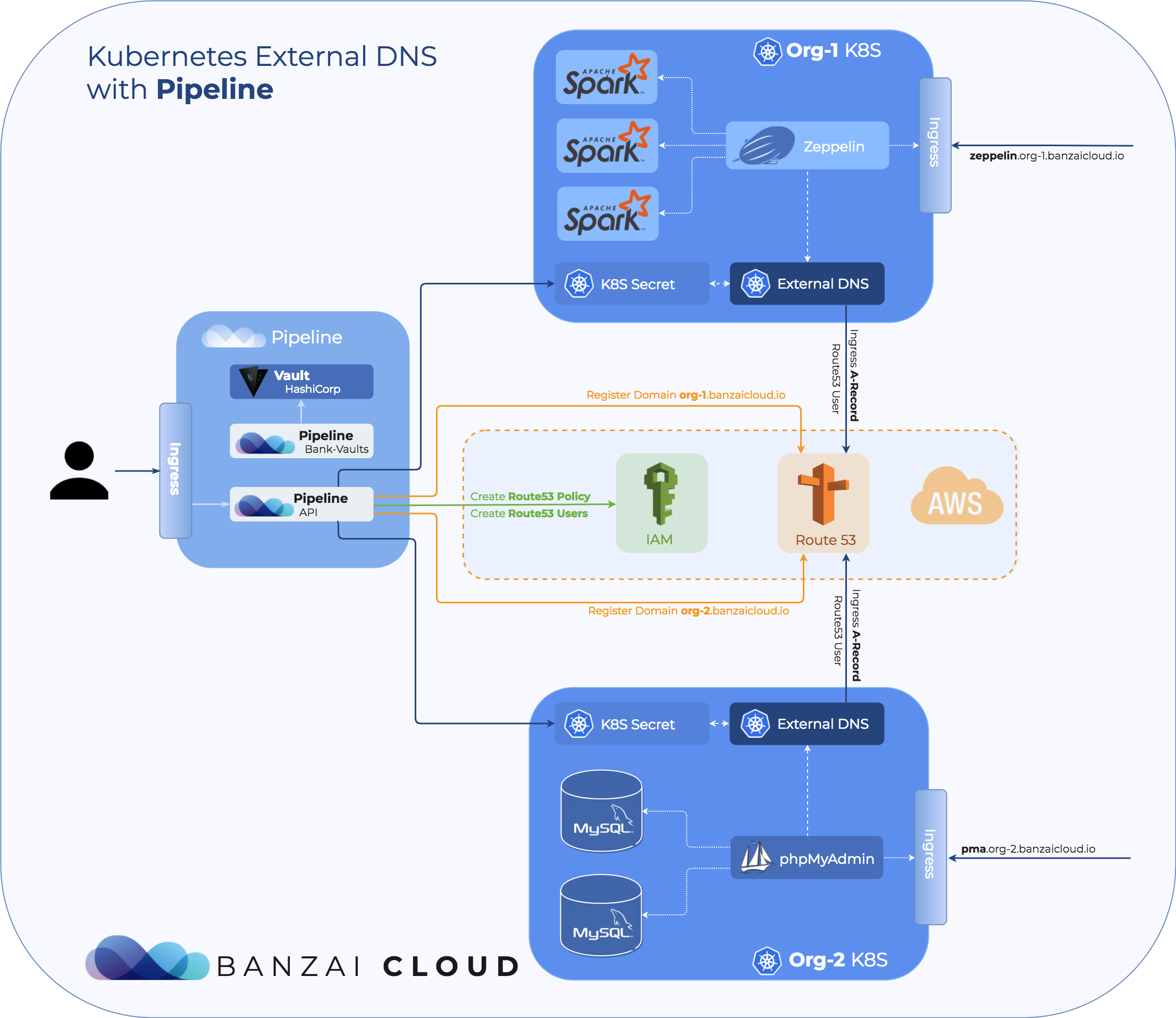

In this post, we’ll describe how we use an external DNS service, specifically Amazon Route53, to solve one piece of the security puzzle (though Route53 is useful well beyond this use case). With Pipeline, users can provision large, multi-tenant Kubernetes clusters on all major cloud providers (AWS, GCP, Azure) as well as BYOC, and then deploy various applications to those clusters.

A deployed application may expose a public endpoint at which its service is reachable (e.g. the host and port of a deployed MySQL database). One of the steps in securing that public endpoint is to ensure that the communication channel between client and server is secure. To accomplish this, connections between the client and the server must be encrypted using TLS (Transport Layer Security).

To set up TLS for a service, a private key and a server certificate containing the matching public key are required. The server certificate can either be self-signed or issued by a well-known commercial certificate authority. The Common Name (and, for modern clients, a Subject Alternative Name entry) of the server certificate must match the hostname in the service’s URL, otherwise clients will not trust the service they connect to. If the service can be reached through multiple hostnames, a multi-domain server certificate is required.

When running Kubernetes in the cloud, public endpoints are usually exposed via services of type LoadBalancer. Kubernetes calls the cloud provider’s API to create a load balancer offered by that provider (on AWS, for example, an Elastic Load Balancer). Each load balancer created by the cloud provider incurs a cost, so it’s recommended that you keep the number of load balancers relatively low. In Pipeline, this can be achieved by using Ingress, which lets multiple services share a single load balancer.

If you’re interested in how authentication can be added to Ingress, check out our Ingress Authentication post.

When a cloud provider provisions a load balancer, it is assigned a public IP and a generated DNS name. This DNS name is the address that points to the Kubernetes service of type LoadBalancer, which in turn routes traffic to the Kubernetes Pods running the application. These are the mechanics of how the public endpoint of an application running on Kubernetes is exposed in a cloud environment.

As stated above, to set up TLS on this public endpoint, the server certificate must be issued for a name that matches the hostname of the public endpoint. Since we don’t know in advance what DNS name the cloud provider will generate, we could only provision the server certificate after that DNS name exists, and only then configure the application to use TLS. Clearly, this is not sustainable: we need a solution that publishes public endpoints on predefined hostnames, for which TLS server certificates can be provisioned upfront.

Kubernetes ExternalDNS provides such a solution. It creates DNS records at DNS providers external to Kubernetes, so that Kubernetes services become discoverable through those providers, and it manages these records dynamically, in a DNS-provider-agnostic way.

Once ExternalDNS is deployed to a Kubernetes cluster, exposing a Kubernetes service via the configured external DNS provider is as simple as annotating the service with `external-dns.alpha.kubernetes.io/hostname=<my-service-public-url>`. See the ExternalDNS setup steps for more details.

With this in place, we control the hostname at which our public service is accessible, so we can create the appropriate TLS server certificate in advance, before the application that uses it is deployed.

What does Pipeline bring to the table?

At Banzai Cloud, one of our objectives is to automate as much as possible in order to make life easier for our users. Setting up ExternalDNS involves quite a few steps, but Pipeline takes care of them for the user.

With Pipeline, users can create multiple Kubernetes clusters on any of its supported cloud providers. An end user belongs to one or more organizations. When a cluster is created, the user specifies which organization the cluster will belong to.

After that, Pipeline:

- Creates a Route53 hosted zone for each organization
- Sets up an access policy for the hosted zone
- Deploys ExternalDNS to the cluster
- Deletes unused hosted zones from Route53
- Generates TLS certificates

Route53 hosted zone

We use Amazon Route53 as our DNS provider. Pipeline creates a hosted zone in Route53 for each organization, with a domain name of the form `<organization-name>.<domain>`. This domain is shared by all clusters that belong to that organization.
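A minimal sketch of deriving the zone name for an organization follows. How Pipeline actually normalizes organization names is not described here, so the lowercasing and the RFC 1123 label check (lowercase alphanumerics and hyphens, at most 63 characters, no leading or trailing hyphen) are assumptions, and `hostedZoneName` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// dnsLabel matches an RFC 1123 DNS label: lowercase letters, digits and
// hyphens, starting and ending with an alphanumeric, at most 63 characters.
var dnsLabel = regexp.MustCompile(`^[a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?$`)

// hostedZoneName builds <organization-name>.<domain>. The organization name
// is lowercased (an assumption) and rejected if it is not a valid DNS label.
func hostedZoneName(org, domain string) (string, error) {
	label := strings.ToLower(org)
	if !dnsLabel.MatchString(label) {
		return "", fmt.Errorf("organization name %q is not a valid DNS label", org)
	}
	// Drop a trailing dot so "example.com." and "example.com" behave the same.
	return label + "." + strings.TrimSuffix(domain, "."), nil
}

func main() {
	zone, err := hostedZoneName("MyOrg", "example.com")
	if err != nil {
		panic(err)
	}
	fmt.Println(zone) // myorg.example.com
}
```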

Route53 access policy

The ExternalDNS instances deployed to the Kubernetes clusters of an organization must only have access to the hosted zone created for that organization. This is essential to prevent an ExternalDNS instance running in another organization’s cluster from manipulating the organization’s hosted zone.

The following AWS IAM policy restricts write access to a specific Route53 hosted zone:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "route53:ChangeResourceRecordSets",
      "Resource": "arn:aws:route53:::hostedzone/<hosted-zone-id>"
    },
    {
      "Effect": "Allow",
      "Action": [
        "route53:ListHostedZones",
        "route53:ListResourceRecordSets"
      ],
      "Resource": "*"
    }
  ]
}
```

Next, an IAM user is created and the Route53 policy is attached to it. ExternalDNS performs operations against Route53 on behalf of this IAM user, which requires an AWS access key to be created for the user. Pipeline creates the access key and stores it securely in Vault, using Bank-Vaults.

Deploying ExternalDNS to the cluster

ExternalDNS is deployed to the cluster and configured to use the AWS access key of the previously created IAM user. The access key is read from Vault and injected into the cluster as a Kubernetes secret, through which ExternalDNS accesses it.

Removing unused Route53 hosted zones

Pipeline periodically checks for unused Route53 hosted zones by looking for organizations that don’t have any running Kubernetes clusters. The hosted zones of those organizations are candidates for removal. Pipeline also takes Amazon’s Route53 pricing model into account in order to optimize costs:

- If an unused hosted zone was created within the last 12 hours, it is deleted and won’t be invoiced, in accordance with Route53 pricing: “To allow testing, a hosted zone that is deleted within 12 hours of creation is not charged…”
- If an unused hosted zone is older than 12 hours, we have already been charged for it, so there is no point in deleting it right away: if the zone were re-created later in the same month and kept for more than 12 hours, we would be charged for it again.
- Unused hosted zones that have already been charged for in the current billing period are deleted just before the next billing period starts, provided they are still unused (“The monthly hosted zone prices listed above are not prorated for partial months. A hosted zone is charged at the time it’s created and on the first day of each subsequent month”).

The following diagram depicts the above described Route53 flow:

TLS certificates

Pipeline already supports generating self-signed TLS certificates: it stores them securely in Vault and injects them into the Kubernetes cluster as Kubernetes secrets.

To enable TLS, all users need to do is configure their application’s deployment to consume the TLS keys from the Kubernetes secret, and annotate the Kubernetes service that the application exposes with `external-dns.alpha.kubernetes.io/hostname=<my-service-public-url>` so that its DNS record is created in Route53.
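On the application side, a Go service could consume such a secret as sketched below, assuming it is a `kubernetes.io/tls`-style secret (keys `tls.crt` and `tls.key`) mounted as a volume at the hypothetical path `/etc/tls`. The port `8443` and the handler are placeholders.

```go
package main

import (
	"crypto/tls"
	"log"
	"net/http"
	"path/filepath"
)

// tlsConfigFromDir loads tls.crt and tls.key (the key names used by
// kubernetes.io/tls Secrets) from the directory the Secret is mounted at.
func tlsConfigFromDir(dir string) (*tls.Config, error) {
	cert, err := tls.LoadX509KeyPair(filepath.Join(dir, "tls.crt"), filepath.Join(dir, "tls.key"))
	if err != nil {
		return nil, err
	}
	return &tls.Config{Certificates: []tls.Certificate{cert}, MinVersion: tls.VersionTLS12}, nil
}

func main() {
	// /etc/tls is a hypothetical mount path for the Secret volume.
	cfg, err := tlsConfigFromDir("/etc/tls")
	if err != nil {
		log.Fatal(err)
	}
	srv := &http.Server{
		Addr:      ":8443",
		TLSConfig: cfg,
		Handler: http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
			w.Write([]byte("hello over TLS\n"))
		}),
	}
	// Empty file names: the certificate is taken from srv.TLSConfig.
	log.Fatal(srv.ListenAndServeTLS("", ""))
}
```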