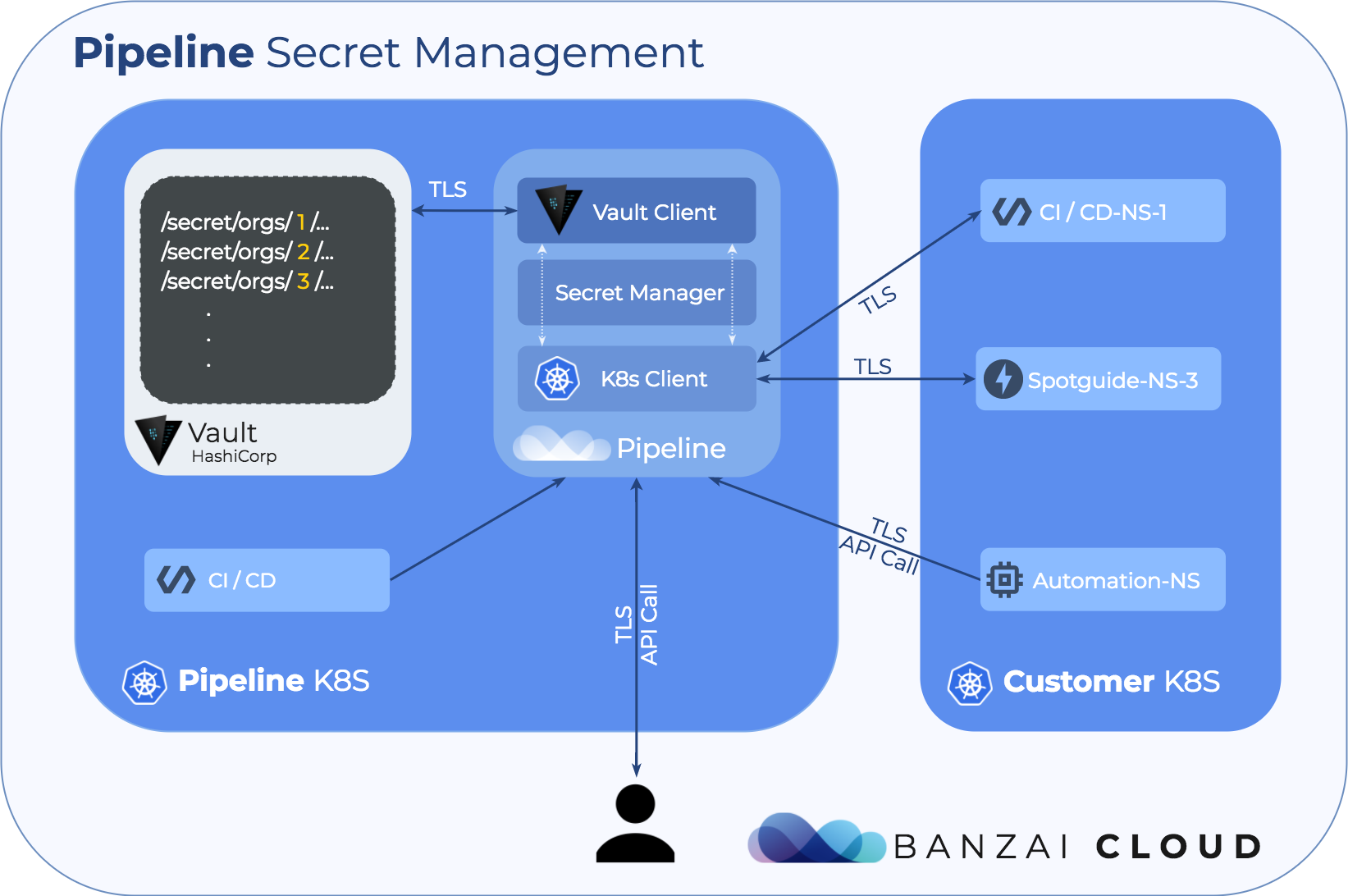

At Banzai Cloud we are building a feature-rich, enterprise-grade application platform for containers on top of Kubernetes, called Pipeline. With Pipeline we provision large, multi-tenant Kubernetes clusters on all major cloud providers such as AWS, GCP and Azure, as well as on BYOC, on-premise and hybrid setups, and deploy all kinds of predefined or ad-hoc workloads to these clusters. For us and our enterprise users, Kubernetes' built-in secret management (which only Base64-encodes secrets) was not sufficient, so we chose Vault and added Kubernetes support to manage our secrets. In this post we describe how we built a secret management layer on top of Vault in Pipeline, and how we distribute these secrets in Kubernetes.

Security series:

- Authentication and authorization of Pipeline users with OAuth2 and Vault
- Dynamic credentials with Vault using Kubernetes Service Accounts
- Dynamic SSH with Vault and Pipeline
- Secure Kubernetes Deployments with Vault and Pipeline
- Policy enforcement on K8s with Pipeline
- The Vault swiss-army knife
- The Banzai Cloud Vault Operator
- Vault unseal flow with KMS
- Kubernetes secret management with Pipeline
- Container vulnerability scans with Pipeline
- Kubernetes API proxy with Pipeline

Secret management in Pipeline

In Pipeline, users belong to organizations. Users can invite other users to an organization, and a user can be a member of multiple organizations at once. Organizations can have various types of associated resources: Kubernetes clusters created inside the organization, object storage buckets, application catalogs, etc. What these have in common is that they all require secrets to work properly. These secrets usually allow Pipeline to interact with your cloud provider account in order to provision the underlying resources. Secrets can also be associated with applications running on your Kubernetes clusters, like a TLS certificate chain for MySQL, or with a Kubernetes cluster's own credentials. We try to rely on Vault's existing features, but in some cases we have to extend Vault with additional layers of abstraction so that it better fits our needs. Of course, for all Vault-facing operations we use our own Bank-Vaults client and unsealer/configurator in combination with our Vault operator.

Secret types in Pipeline

The following secret types are currently supported:

- Username/Password pairs

- TLS certificates

- SSH keys

- AWS credentials

- Google credentials

- Azure credentials

- Kubernetes client configurations

- Generic secrets (no schema support, just key-value pairs)

Username/Password pairs

This is the most common authentication method for applications (for example, our MySQL spotguide requires it). With this secret type you don't need to supply every field: the password can be generated based on a schema, and you can query it later through the API. The application is then bootstrapped with this password. There are options for how the password is generated and stored; two types are currently supported:

- `password`, which is stored as "plain text" (of course, it is not actually stored in plain text; it is simply not hashed before being pushed to Vault)
- `htpasswd`, for HTTP servers' Basic Auth support (like NGINX Ingress), which is bcrypt-ed before being pushed to Vault
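As an illustration of schema-based generation, the Python sketch below draws a random password from a configurable alphabet using the standard library's `secrets` module. The schema parameters (`length`, `alphabet`) and the `generate_password` helper are hypothetical, not Pipeline's actual options; bcrypt-hashing the `htpasswd` variant would additionally need a third-party package such as `bcrypt`, which is left out here.

```python
import secrets
import string

# Hypothetical default schema: Pipeline's real generation options are not shown in this post.
DEFAULT_ALPHABET = string.ascii_letters + string.digits


def generate_password(length: int = 16, alphabet: str = DEFAULT_ALPHABET) -> str:
    """Return a random password of `length` characters drawn from `alphabet`."""
    if length <= 0:
        raise ValueError("length must be positive")
    if not alphabet:
        raise ValueError("alphabet must not be empty")
    # secrets.choice uses the operating system's CSPRNG, unlike random.choice.
    return "".join(secrets.choice(alphabet) for _ in range(length))


if __name__ == "__main__":
    print(generate_password(20))
```

Using `secrets` rather than `random` matters here: `random` is a predictable PRNG and is not meant for credentials.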

TLS certificates

If your application requires secure communication (and what application doesn't?), the most straightforward and broadly supported approach is to generate TLS certificates for both the server and the client side (when clients are also identified by certificates, this is referred to as mutual TLS).

For example, MySQL is configured with the following settings once the TLS certificates are generated:

```ini
[mysqld]
ssl-ca=ca.pem
ssl-cert=server-cert.pem
ssl-key=server-key.pem
```
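To make the mutual TLS idea concrete, here is a minimal Python sketch of a server-side context that, like the MySQL settings above, loads a CA file, a server certificate and a server key, and additionally refuses clients that do not present a certificate signed by that CA. The helper name `mutual_tls_server_context` is hypothetical, and the sketch uses Python's `ssl` module rather than anything MySQL- or Pipeline-specific.

```python
import ssl


def mutual_tls_server_context(ca_file: str, cert_file: str, key_file: str) -> ssl.SSLContext:
    """Build a server-side TLS context that also requires client certificates."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # The server proves its own identity with its certificate and private key.
    ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    # Client certificates are verified against this CA...
    ctx.load_verify_locations(cafile=ca_file)
    # ...and presenting one is mandatory, which is what makes the TLS "mutual".
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx
```

For example, `mutual_tls_server_context("ca.pem", "server-cert.pem", "server-key.pem")` uses the same files as the `[mysqld]` section; missing or unreadable files raise an `OSError` subclass.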

Let's suppose you have a running Pipeline instance and an access token in the $TOKEN environment variable. Call the API with cURL to generate a TLS secret for a specified host (in this case, localhost):

```bash
curl -f -s -H "Authorization: Bearer $TOKEN" \
  -H "Content-Type: application/json" \
  http://localhost:9090/api/v1/orgs/1/secrets \
  -d "{\"type\":\"tls\", \"name\":\"localhost-tls\", \"values\":{\"hosts\":\"localhost\"}}" | jq
```

The call returns the `id` of the generated TLS secret:

```json
{
  "name": "localhost-tls",
  "type": "tls",
  "id": "5acbca16ecf920dcb32b86c0d9b25b566afeec970c999f338c4d79995acc43f5"
}
```
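For scripting, the same call can be made without cURL. This Python sketch uses only the standard library's `urllib.request`; like the example above, it assumes a Pipeline instance at `localhost:9090`, organization `1` and a token in `$TOKEN`. The helper names are hypothetical.

```python
import json
import os
import urllib.request

# Assumption: the same local Pipeline endpoint as in the cURL example.
PIPELINE_URL = "http://localhost:9090"


def create_tls_secret_request(org_id: int, name: str, hosts: str, token: str) -> urllib.request.Request:
    """Build the same POST request as the cURL example (without sending it)."""
    body = {"type": "tls", "name": name, "values": {"hosts": hosts}}
    return urllib.request.Request(
        url=f"{PIPELINE_URL}/api/v1/orgs/{org_id}/secrets",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def create_tls_secret(org_id: int, name: str, hosts: str) -> str:
    """Send the request and return the "id" of the generated secret."""
    req = create_tls_secret_request(org_id, name, hosts, os.environ["TOKEN"])
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["id"]
```

Splitting request construction from sending keeps the payload easy to inspect; `create_tls_secret(1, "localhost-tls", "localhost")` would mirror the cURL call.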

List the keys of all generated values:

```bash
curl -f -s -H "Authorization: Bearer $TOKEN" \
  http://localhost:9090/api/v1/orgs/1/secrets/5acbca16ecf920dcb32b86c0d9b25b566afeec970c999f338c4d79995acc43f5 \
  | jq '.values | keys'
```

To get the actual values of the generated root (CA) and client certificates, call HTTP GET on the returned `id` with a little help from jq:

```bash
curl -f -s -H "Authorization: Bearer $TOKEN" \
  http://localhost:9090/api/v1/orgs/1/secrets/5acbca16ecf920dcb32b86c0d9b25b566afeec970c999f338c4d79995acc43f5 \
  | jq -r .values.caCert
```

```text
-----BEGIN CERTIFICATE-----
MIIDHzCCAgegAwIBAgIRAIhejcff4aLrIMvFXtRlO6UwDQYJKoZIhvcNAQELBQAw
KTEVMBMGA1UEChMMQmFuemFpIENsb3VkMRAwDgYDVQQDEwdSb290IENBMB4XDTE4
MDYyOTEyMDkzMFoXDTE5MDYyOTEyMDkzMFowKTEVMBMGA1UEChMMQmFuemFpIENs
b3VkMRAwDgYDVQQDEwdSb290IENBMIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIB
CgKCAQEAw8IPTng0LjZpznauxic/qwPrcaxQyBqsx7G3UHUyK3b2CObB8Zasgg0q
/P/5JF9jGe/PaAWUooAdS0VLE+b6ssSFkO5FQvsQvthBu4MgFztE3HE98VQP6MBD
FJoeZEfJ9RfHElM0qZqpQNnhgd7mHPydUXsKHn7kM7Krw2Txp4bLirOMdMZnZUle
oD0NWnk9/z1zTPWZwOsfOLdBBGdKY6iyHrusN9nsRWErf0ioDJTCBwkCvhYh50UZ
NeWj67gDsX/3DdNh9BWJFnLRu02pF5FCDqzvO7ipGACcKzEkBLx37OnsPgasPBNT
4U4XMuE5FlLXZYDDVpfsRmX4fi0ShQIDAQABo0IwQDAOBgNVHQ8BAf8EBAMCAgQw
HQYDVR0lBBYwFAYIKwYBBQUHAwEGCCsGAQUFBwMCMA8GA1UdEwEB/wQFMAMBAf8w
DQYJKoZIhvcNAQELBQADggEBAAswywCuQNfp7W7PgU9snTYMptP33O6ouW3kh8tG
qyB1/68FJ3HX4iJx4Mq5VD3zULMMOOJC2uRDrdafW88LFrThfFi/WiUIuT1KVwT5
opO3UAyoaI6q2XRHc2bAeGOcfWPznDHGEV7KF8Zg5kzrXGXGnpYwlQZPvvION551
B7RPTKl/+Yc8dCWpIBQPn+MT2X4oiKuHbz5SOFdjQdMeUVCqx7OA6BLqVCJGcqp5
aetFFTV5Pk4MOwXqUOVMQy5rLj0iffMQB1vXN/SrvR/o3tDUqPwqApSRM1Y5BFYp
6fW7Qrw2DKe0QcYGs4dqXa95eP3+/zKXYOPqP7eVEgi84EI=
-----END CERTIFICATE-----
```

```bash
curl -f -s -H "Authorization: Bearer $TOKEN" \
  http://localhost:9090/api/v1/orgs/1/secrets/5acbca16ecf920dcb32b86c0d9b25b566afeec970c999f338c4d79995acc43f5 \
  | jq -r .values.clientCert
```

```text
-----BEGIN CERTIFICATE-----
MIIDFzCCAf+gAwIBAgIBBDANBgkqhkiG9w0BAQsFADApMRUwEwYDVQQKEwxCYW56
YWkgQ2xvdWQxEDAOBgNVBAMTB1Jvb3QgQ0EwHhcNMTgwNjI5MTIwOTMwWhcNMTkw
NjI5MTIwOTMwWjA+MRUwEwYDVQQKEwxCYW56YWkgQ2xvdWQxJTAjBgNVBAMTHEJh
bnphaSBHZW5lcmV0ZWQgQ2xpZW50IENlcnQwggEiMA0GCSqGSIb3DQEBAQUAA4IB
DwAwggEKAoIBAQDe8n5NWjN1q27nvKVtBBQ5NFZ6leyv5fumHrSYHK010DpL2Q3D
nwGTL+xQjCoTbXb6ElsVSBut3LvP0/ZkQ8YZjI2rEuMs5IpvIR0zqHuvYN3KboGL
n7WJuCO0PJoUj/9qzcKtr5+3Dh3btS9UQLbUpcuMG/ixIrdpYT5YiTfnOBoTdN6Y
IHQGU4n/WTFPulWkHoFbSFXmG1DGrWO3O8dTokL+b/gqh6t6pfbhlspQoFA0GEUp
ur8qQ6/LildcSle4QoNMfUr+SL8NW8N3dhGodnEEqtmouoMANCvKSRy7zU96laFi
dxEwOAmJswp3uhPBCqDb09QgHeAykP8g3poFAgMBAAGjNTAzMA4GA1UdDwEB/wQE
AwIHgDATBgNVHSUEDDAKBggrBgEFBQcDAjAMBgNVHRMBAf8EAjAAMA0GCSqGSIb3
DQEBCwUAA4IBAQA9V2YlaGHYEMDwAR1x8itxWpC3dozg5pj01gjJwVf9rfsDi0y7
/nG/q6ZUkx8Bn4VcHfD+vIW40D6zAEzZBd5Y+xg9jGLKzlhzRa3FciFYzMC3CC1E
LNdpXrNERJ8at09LXxjzOPY9dmLtnap5kR5fimyHaP6bsUCxHGpA8ukHxvs0L6+8
9iu3XUTD5rnr+uV67ACRwHREv0KVckm1ufCBTWAfIwdkIQvDaghT580gmstneieJ
nDf9m+BjPEu1AXScCW+ikMqcByC9hFfgyyToGr91isFx0pTGBpInTHJHMTX2Hmmz
0wXzuc/a+f8uVlc8ePWlXw5BuAPRxnabn64K
-----END CERTIFICATE-----
```

If you save these certificates and the corresponding keys to the matching .pem files, you can connect to MySQL like this:

```bash
mysql --ssl-ca=ca.pem \
  --ssl-cert=client-cert.pem \
  --ssl-key=client-key.pem
```
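One way to produce those files is sketched below in Python. It assumes you have already fetched the secret's `values` object (for instance with the cURL calls above and `jq .values`). The `caCert` and `clientCert` keys appear in this post; `clientKey` is an assumed key name, so check the key listing returned for your secret before relying on it. The `write_pem_files` helper is hypothetical.

```python
from pathlib import Path

# Secret value key -> file name used by the mysql command.
# "caCert" and "clientCert" appear in this post; "clientKey" is an assumption.
PEM_FILES = {
    "caCert": "ca.pem",
    "clientCert": "client-cert.pem",
    "clientKey": "client-key.pem",
}


def write_pem_files(values: dict, directory: str = ".") -> list:
    """Write the selected secret values to PEM files and return the paths written."""
    written = []
    for key, filename in PEM_FILES.items():
        path = Path(directory) / filename
        path.write_text(values[key])
        # Restrict every file to its owner; this matters most for the private key.
        path.chmod(0o600)
        written.append(path)
    return written
```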

Please note that you can also post all values through the API when creating the secret; in that case Pipeline doesn't generate anything, but uses the values supplied in the call.

SSH secrets

SSH secrets are very similar to TLS certificates from the API's point of view; this endpoint is used to generate SSH key pairs for logging into virtual machines. If you supply your own keys, those keys are stored instead of newly generated ones.

Cloud provider credentials

These types of secrets are probably the most important part of any setup. They are currently static secrets, so the user has to provide already existing credentials. However, since the Secret Store is based on Vault, there is an opportunity to generate short-lived, dynamic cloud provider credentials for the providers Vault supports (currently AWS and Google Cloud). When these credentials are passed to the API, Pipeline performs some basic validation (for example, it tries to list your supported VM instance types as a sanity check). We also use these credentials internally: the DNS domains for organizations are registered via Route53, so we deploy a limited AWS credential into your organization's secret space.

Kubernetes client configuration

As the name implies, this type of secret holds a kubectl-readable Kubernetes configuration (kubeconfig). Each cluster created by Pipeline has at least one secret of this type associated with it.

Generic secrets

We have all faced situations where we had to store and distribute a credential to an application that wasn't a username/password, a TLS certificate or a key pair, but just a single value or a group of values: something generic with no well-defined schema. For this reason we added generic secrets, which are simply lists of key-value pairs with no validation or generation support.

Secret consumption in Kubernetes

In Kubernetes, Pods consume these Pipeline secrets in the form of v1.Secrets. Since Secret values are only Base64-encoded, we don't want to store them in Kubernetes permanently, but we need to supply them as Secrets so that Pods can consume them. We do this in isolated namespaces, for periods as short as possible, to minimize the leakage of any confidential data.
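To see why Base64 alone is no protection, the Python sketch below builds a v1.Secret manifest as JSON (a format `kubectl apply -f` also accepts) and then decodes a value back without any key. The secret and namespace names are borrowed from examples in this post; the `k8s_secret_manifest` helper and the sample value are hypothetical.

```python
import base64
import json


def k8s_secret_manifest(name: str, namespace: str, values: dict) -> dict:
    """Build a v1.Secret manifest whose data fields hold Base64-encoded values."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


secret = k8s_secret_manifest("my-tls-cert", "build-123", {"password": "s3cr3t"})
print(json.dumps(secret, indent=2))

# Anyone who can read the Secret can reverse the encoding: no key is involved.
print(base64.b64decode(secret["data"]["password"]).decode("utf-8"))
```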

We also need to specify in Pod descriptors how, and in which form, we would like to consume these secrets. Kubernetes gives us two options: mount them as a volume, where each secret key appears as a file in a directory, or pass them in as environment variables. So far so good. But how do we decide which option to use?

Our API helps with this decision: the Install secrets into cluster endpoint (POST /api/v1/orgs/{orgId}/clusters/{id}/secrets) returns the list of installed secrets along with how they should be consumed:

```bash
curl -f -s -H "Authorization: Bearer $TOKEN" \
  -H "Content-Type: application/json" \
  http://localhost:9090/api/v1/orgs/1/clusters/1/secrets \
  -d "{\"namespace\": \"build-123\", \"query\": {\"type\": \"tls\", \"tag\": \"repo:pipeline\"}}" | jq
```

In the example above we posted a JSON filter object that selects only secrets of type `tls` tagged with `repo:pipeline`, and asked for them to be installed into the build's own namespace, `build-123`. We will go into more detail about build namespaces in a later blog post.

The API answers:

```json
[
  {
    "name": "my-tls-cert",
    "sourcing": "volume"
  }
]
```

As this example illustrates, applications usually accept TLS certificates as files, so the `volume` sourcing type is returned.
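A client could turn this answer into Pod spec fragments roughly as in the Python sketch below, which mounts `volume`-sourced secrets as read-only volumes and exposes everything else through `envFrom`. Only the `volume` value is shown in this post; treating any other `sourcing` value as environment-variable sourcing, the `/etc/secrets` mount root and the `pod_spec_fragments` helper are all assumptions.

```python
def pod_spec_fragments(installed: list, mount_root: str = "/etc/secrets") -> dict:
    """Translate the install endpoint's answer into Pod spec fragments.

    Assumption: any sourcing value other than "volume" means environment variables.
    """
    volumes, mounts, env_from = [], [], []
    for item in installed:
        name = item["name"]
        if item["sourcing"] == "volume":
            # Each key of the Secret becomes a file under <mount_root>/<name>.
            volumes.append({"name": name, "secret": {"secretName": name}})
            mounts.append({"name": name, "mountPath": f"{mount_root}/{name}", "readOnly": True})
        else:
            # Each key of the Secret becomes an environment variable of the container.
            env_from.append({"secretRef": {"name": name}})
    return {
        "volumes": volumes,  # goes into the Pod's spec.volumes
        "container": {"volumeMounts": mounts, "envFrom": env_from},  # goes into a container
    }


print(pod_spec_fragments([{"name": "my-tls-cert", "sourcing": "volume"}]))
```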

Since Pipeline is a centralized secret orchestrator, it offers more features than standard Kubernetes secrets. Secrets are enriched with metadata, which offers a lot of flexibility in terms of auditing, querying, grouping and consumption.
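As a rough model of metadata-based querying, the Python sketch below filters secrets by type and tag, the same two criteria used in the `query` object of the install request. The `SecretMeta` record and `select` helper are hypothetical and say nothing about how Pipeline stores or matches metadata internally.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class SecretMeta:
    # Hypothetical metadata record: only name, type and tags are modelled here.
    name: str
    type: str
    tags: List[str] = field(default_factory=list)


def select(items: List[SecretMeta], secret_type: Optional[str] = None,
           tag: Optional[str] = None) -> List[str]:
    """Return the names of secrets matching every given filter (None matches anything)."""
    return [
        s.name
        for s in items
        if (secret_type is None or s.type == secret_type)
        and (tag is None or tag in s.tags)
    ]
```

For example, `select(store, secret_type="tls", tag="repo:pipeline")` corresponds to the query posted in the install request.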

The Pipeline Secrets API Definition

The whole Pipeline Secrets API is described by an OpenAPI 3.0.0 definition, which is available online.

Authentication extensions

Currently, Pipeline supports GitHub OAuth-based authentication. This is the usual workflow for web-based developer services: the GitHub authorization form pops up in the browser and asks whether you allow the application to access your user data and repository information (scopes). Other identity providers (Google, Facebook, Twitter, etc.) are also supported by our auth package. However, these are usually not acceptable for our enterprise users.

For enterprise use cases we have extended our authentication framework with Dex support. Now, if you have an existing LDAP or Active Directory service, you can plug it into our Dex-based authentication backend.

Future plans

Managing secrets is always a challenge, because the use cases and problems we face during the distribution, storage, auditing and editing of secrets are almost endless. TLS certificates expire (!), so they have to be renewed and the new versions distributed to the services that use them. We also try to minimize the number of distributed secrets, so we tag secrets with more metadata in order to distribute them at the right granularity. Our Vault setup is also highly portable (see the number of backends and unseal options we support), so we would like to give you the opportunity to install a Vault instance inside your organization and store your secrets inside your DMZ. The current secret backend is Vault KV version 2, which supports versioned secrets; support for secret versions in Pipeline will be implemented in forthcoming releases.
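For reference, reading a specific version of a KV version 2 secret directly from Vault is an HTTP GET on `/v1/<mount>/data/<path>` with an optional `version` query parameter and the token in the `X-Vault-Token` header. The Python sketch below builds that request and unwraps the nested response; the Vault address, the `secret` mount, the example path and the helper names are placeholders, not Pipeline's actual secret layout.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional


def kv2_read_request(vault_addr: str, mount: str, path: str, token: str,
                     version: Optional[int] = None) -> urllib.request.Request:
    """Build a Vault KV v2 read request; omit `version` to read the latest one."""
    url = f"{vault_addr}/v1/{mount}/data/{path}"
    if version is not None:
        url += "?" + urllib.parse.urlencode({"version": version})
    return urllib.request.Request(url, headers={"X-Vault-Token": token})


def unwrap_kv2(response_body: bytes) -> tuple:
    """Return (secret key-value pairs, version number) from a KV v2 read response."""
    payload = json.loads(response_body)["data"]
    # KV v2 nests the key-value pairs under data.data and the version under data.metadata.
    return payload["data"], payload["metadata"]["version"]
```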

Learn through code

We take our Pipeline users’ security and trust very seriously - and, as usual, our code is open source. If you believe you’ve found a security issue please contact us at security@banzaicloud.com.