It may not be a well-known fact, but a Kubernetes API server can proxy HTTP connections between a client and any service running on a cluster.

A simple kubectl command exists that allows it:

$ kubectl proxy

Starting to serve on 127.0.0.1:8001

We use this for demo purposes or when we don’t want to expose APIs publicly, but need to access them from our computers.

Moreover, this proxy can transport WebSocket connections. A common example is the: kubectl logs command. Although these are pretty common, there exists an edge-case issue wherein we wanted to use WebSocket connections as well as query parameters.

The purpose behind proxy WebSocket 🔗︎

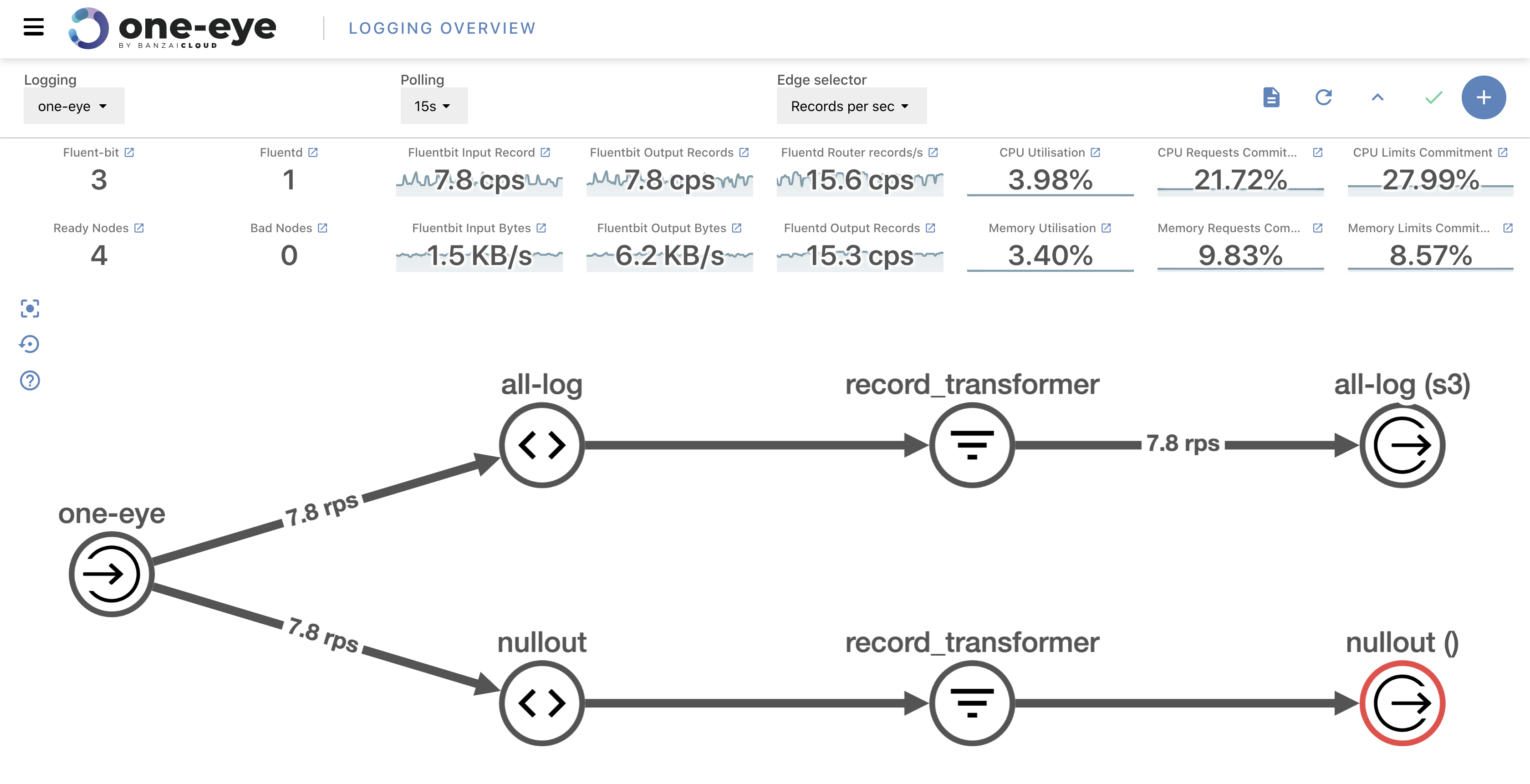

One Eye provides both metrics and logs for Kubernetes containers, and it’s only natural to want to tail the logs of a given container. Since the previous release - 0.3.0 - One Eye supports Loki as it’s log backend. Loki offers a built in HTTP API that supports the LogQL query language.

Additionally, it provides a streaming endpoint to tail the results of a query through WebSocket. We only have to access this endpoint through the Kubernetes API. Sounds simple, right?

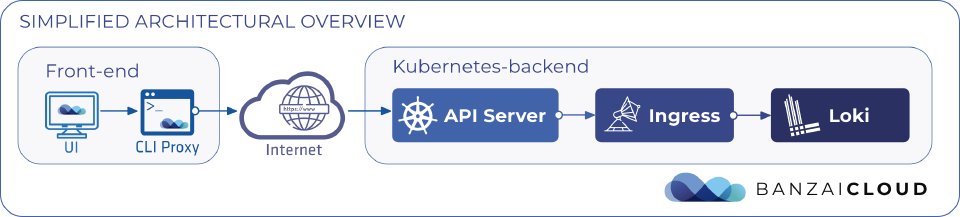

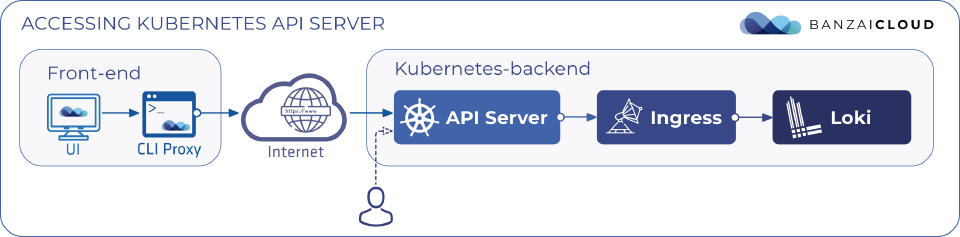

Architectural overview 🔗︎

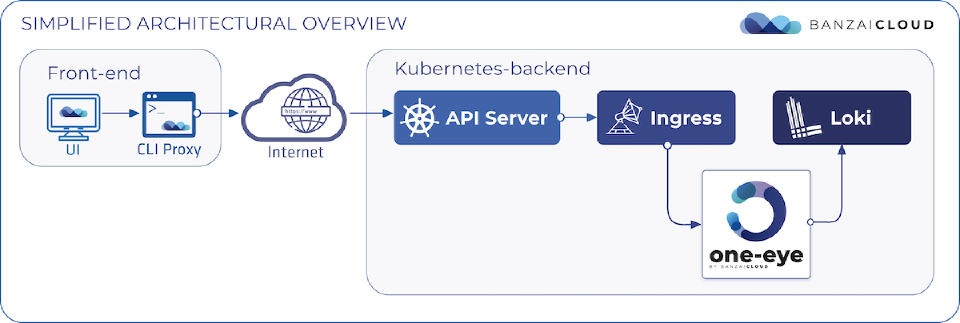

Let’s take a look at the simplified architectural map, to better understand the querying journey.

-

CLI ProxyA small go application that runs on the local computer. It’s a simple proxy to the Kubernetes API Server’s endpoint. As the UI is javascript running inside a browser, in this case it’s running from the same host as the CLI. -

Kubernetes Api ServerThe entry point to the Kubernetes cluster. -

IngressIngress server, which proxies the requests to a particular backend-component. We use Nginx-ingress at the moment. -

LokiThe Loki backend, which collects all the logs in the cluster and provides HTTP & GRPC API.

About LogQL: 🔗︎

The Loki API endpoint requires a LogQL query provided as HTTP query args to filter the logs that match the given terms.

There are two conventional ways to initiate Loki queries:

- One is to call the /loki/api/v1/query endpoint over HTTP with query args, which returns results in a simple HTTP response

- The other is to use the /loki/api/v1/tail endpoint where the query is similar (relatively speaking) to the first one, but it upgrades the HTTP connection to a WebSocket and continuously feeds this live connection with the most recent data (e.g. POSIX tail)

Both query endpoints require a LogQL query that’s provided in HTTP query args, like this:

query={pod="one-eye-event-tailer-0"}&limit=10

If you are not familiar with LogQL check out our post Loki, the tail and grep for Kubernetes

Crack some eggs 🔗︎

With a running CLI proxy on port 8080 we were able to call Loki services over HTTP.

We tried this with the most basic check: a readiness check. It worked well.

$ curl -i localhost:8080/loki/ready

HTTP/1.1 200 OK

Content-Length: 6

Content-Type: text/plain; charset=utf-8

Date: Tue, 30 Jun 2020 09:02:05 GMT

Server: nginx/1.17.8

X-Content-Type-Options: nosniff

ready

The next step was to grab some labels from the Loki server.

curl localhost:8080/loki/loki/api/v1/label | jq

Please note the double

/lokiURL:

- The first

/lokiURL belongs to the ingress, which makes the ingress route the request to the loki backend- The second

/lokiURL is actually part of the Loki service HTTP API

{

"status": "success",

"data": [

"__name__",

"app",

"app_kubernetes_io_component",

"app_kubernetes_io_instance",

"app_kubernetes_io_name",

"chart",

"container",

"container_id",

"controller_revision_hash",

"heritage",

"host",

"name",

"namespace",

"pod",

"pod_id",

"pod_template_hash",

"prometheus",

"release",

"statefulset_kubernetes_io_pod_name"

]

}

We ran some more complex queries as well, which all came out fine.

curl 'localhost:8080/loki/loki/api/v1/query_range?query=\{name="loki"\}&limit=1' 2>/dev/null | jq

Notice the escaped curly brackets (\{ \}). We need these because bash tries to evaluate them otherwise.

{

"status": "success",

"data": {

"resultType": "streams",

"result": [

{

"stream": {

"namespace": "default",

"pod": "one-eye-loki-0",

"pod_id": "78a4c513-06b9-449d-aca6-10a1266fc116",

"statefulset_kubernetes_io_pod_name": "one-eye-loki-0",

"app": "loki",

"container": "loki",

"container_id": "c7a9c62eb8e5f5629a04aecf54c39156b4903ecbc5e93ac729de335dbfcf6dd8",

"controller_revision_hash": "one-eye-loki-7874db668c",

"host": "kind-control-plane",

"name": "loki",

"release": "one-eye-loki"

},

"values": [

[

"1593529265837448400",

"{\"stream\":\"stderr\",\"logtag\":\"F\",\"message\":\"level=info ts=2020-06-30T15:01:05.8367595Z caller=table_manager.go:292 msg=\\\"synching tables\\\" expected_tables=116\"}"

]

]

}

],

"stats": {

"summary": {

"bytesProcessedPerSecond": 14001628,

"linesProcessedPerSecond": 43852,

"totalBytesProcessed": 53321,

"totalLinesProcessed": 167,

"execTime": 0.0038082

},

"store": { ... }

}

}

}

Everything worked fine during our first few attempts, but now we want to use the /tail endpoint with a WebSocket connection to continuously get the most recent logs.

Websocket tools 🔗︎

We debugged the HTTP API endpoints with simple tools like curl and other small oneliners. However, query WebSocket is a bit trickier than just giving a URL to the shell.

curl 🔗︎

The WebSocket protocol requires a lot of headers, so we used a tool which simplifies the call to make WebSocket requests with curl, and scripted a simple bash function to handle all those headers. We’ve tested the bash function against another WebSocket application, and it worked well. It looked like this:

curlws() {

setopt local_options BASH_REMATCH;

local uri="${1}"

[[ "${uri}" =~ ^((http|https)[:]//)?([^/]+)(.*)$ ]] || return

local protocol=${BASH_REMATCH[2]:-http://}

curl -N \

-H "Connection: Upgrade" \

-H "Upgrade: websocket" \

-H "Host: ${BASH_REMATCH[4]}" \

-H "Origin: $protocol${BASH_REMATCH[4]}" \

-H "Sec-WebSocket-Key: $(head -c 16 /dev/urandom | base64)" \

-H "Sec-WebSocket-Version: 13" \

$@ --output - ;

}

The

setopt local_options BASH_REMATCH;required on zsh, to use bash regex format

javascript 🔗︎

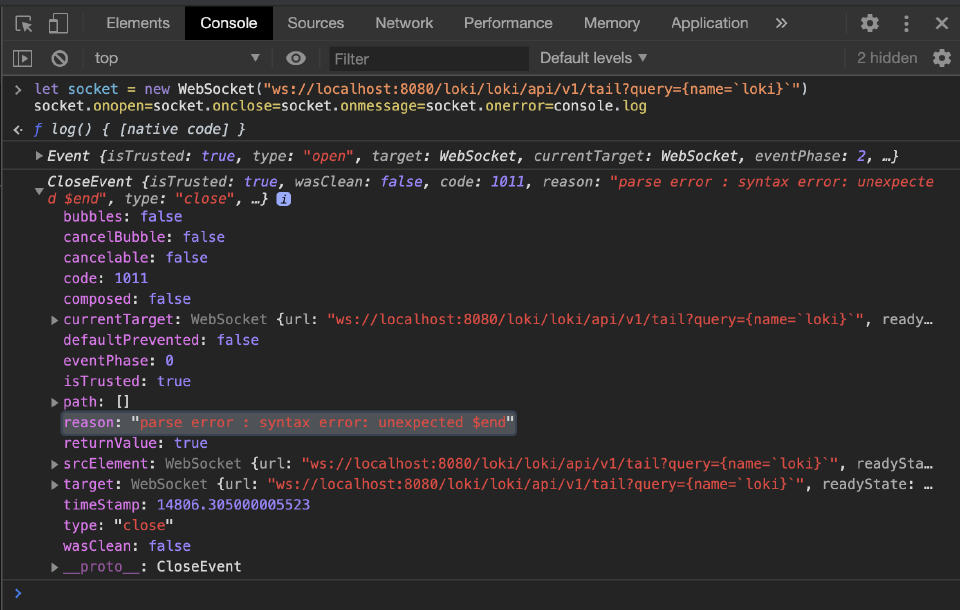

There was another way of testing WebSocket connections, and that was with the built-in javascript console in Chrome. It should be noted that it’s possible to initiate a WebSocket connection in js with just a few lines of code.

let socket = new WebSocket("ws://localhost:8080/loki/loki/api/v1/tail?query={name=`loki}`")

socket.onopen=socket.onclose=socket.onmessage=socket.onerror=console.log

The issue 🔗︎

The first probes of /tail queries resulted in an error…

curlws 'http://localhost:8080/loki/loki/api/v1/tail?query=\{name="loki"\}'

…with this response in the body, without HTTP status.

�-�parse error : syntax error: unexpected $end

The javascript-websocket powered /tail request failed too.

Our first thought was that the problem was hiding somewhere in the CLI proxy, because it was just a few lines of Go code, but after several code reviews and additional tests, we decided to check the other components as well.

Discovering the problem 🔗︎

After checking the response headers and the body message, the response looked like a successful WebSocket connection upgrade. The Sec-Websocket-Accept header is defined as a server response header in Websocket specifications.

curlws 'http://localhost:8080/loki/loki/api/v1/tail?query=\{name="loki"\}' -i

HTTP/1.1 101 Switching Protocols

Server: nginx/1.17.8

Date: Tue, 30 Jun 2020 08:16:35 GMT

Connection: upgrade

Sec-Websocket-Accept: CTk59tUEO+X3dzYN3yElMnoK4Cc=

Upgrade: websocket

�-�parse error : syntax error: unexpected $end%

The response body also contained some suspicious control characters which are bitmasks from the WebSocket’s base framing protocol. We won’t go into further detail now, but feel free to check out RFC 6455 if you want to dig deeper.

curlws 'http://localhost:8080/loki/loki/api/v1/tail?query=\{name="loki"\}' 2>/dev/null | hexdump -C

00000000 88 2d 03 f3 70 61 72 73 65 20 65 72 72 6f 72 20 |.-..parse error |

00000010 3a 20 73 79 6e 74 61 78 20 65 72 72 6f 72 3a 20 |: syntax error: |

00000020 75 6e 65 78 70 65 63 74 65 64 20 24 65 6e 64 |unexpected $end|

0000002f

All signs pointed to the http-to-websocket connection upgrade having worked well, and the error message we received in the response body having been returned by Loki.

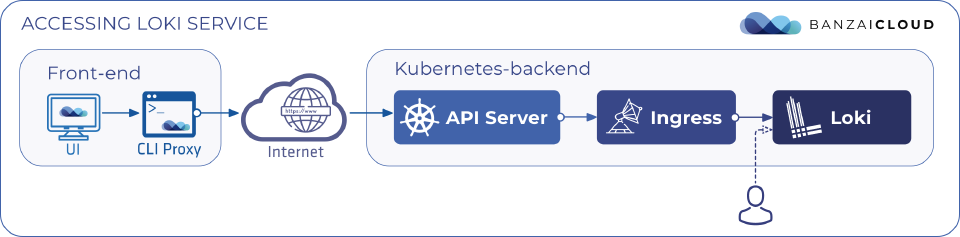

Checking Loki endpoint directly 🔗︎

To make sure Loki handles the WebSocket queries properly, we’ve requested its endpoint directly through a Kubernetes port forward to its service. This way we bypassed all the proxy services to focus on the Loki component.

kubectl port-forward svc/one-eye-loki 3100:3100

Loki service port 3100 forwarded to localhost 3100

curlws 'http://localhost:3100/loki/api/v1/tail?query=\{name="loki"\}&limit=10' -i

Note the single

lokiURL; our request goes directly to the Loki service, skipping the ingress.

HTTP/1.1 101 Switching Protocols

Upgrade: websocket

Connection: Upgrade

Sec-WebSocket-Accept: qGEgH3En71di5rrssAZTmtRTyFk=

�~�{"streams":[{"stream":{"app":"loki","container":"loki","container_id":"809458c447120553ec55bf7159fa1b5eab71e1d541077633d91f5511f48b14d5","controller_revision_hash":"one-eye-loki-7874db668c","host":"k3d-k3s-default-server","name":"loki","namespace":"default","pod":"one-eye-loki-0","pod_id":"89f61a2a-5baa-44bd-be2e-d6e4a7b79156","release":"one-eye-loki","statefulset_kubernetes_io_pod_name":"one-eye-loki-0"},"values":[["1593507930108519100","{\"stream\":\"stderr\",\"logtag\":\"F\",\"message\":\"level=info ts=2020-06-30T09:05:30.1080634Z caller=table_manager.go:292 msg=\\\"synching tables\\\" expected_tables=116\"}"]]},{"stream":{"app":"loki","container":"loki","container_id":"809458c447120553ec55bf7159fa1b5eab71e1d541077633d91f5511f48b14d5","controller_revision_hash":"one-eye-loki-7874db668c","host":"k3d-k3s-default-server","name":"loki","namespace":"default","pod":"one-eye-loki-0","pod_id":"89f61a2a-5baa-44bd-be2e-d6e4a7b79156","release":"one-eye-loki","statefulset_kubernetes_io_pod_name":"one-eye-loki-0"},"values":[["1593508049970165600","{\"stream\":\"stderr\",\"logtag\":\"F\",\"message\":\"level=info ts=2020-06-30T09:07:29.9696504Z caller=table_manager.go:292 msg=\\\"synching tables\\\" expected_tables=116\"}"]]}]}

���^C

Our test tail query worked like a charm, even held the connection and received new data chunks until it was terminated by a ctrl+c. Loki is seemingly functioning well.

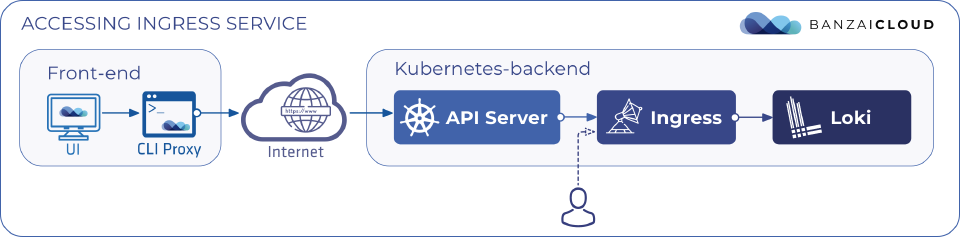

Checking Loki through ingress service 🔗︎

The ingress service might also have been the culprit, so in the next step, we checked the ingress endpoint against the test query.

Nginx-ingress also presented nice access-logs, which helped a lot to discover this issue. With a simple log tailing, we were able to examine the requests on it.

kubectl logs -l app=nginx-ingress,app.kubernetes.io/component=controller -f

kubectl port-forward svc/one-eye-nginx-ingress-controller 8000:80

Ingress service port 80 forwarded to localhost 8000

curlws 'http://localhost:8000/loki/loki/api/v1/tail?query=\{name="loki"\}&limit=2' -i

HTTP/1.1 101 Switching Protocols

Server: nginx/1.17.8

Date: Tue, 30 Jun 2020 09:13:01 GMT

Connection: upgrade

Sec-Websocket-Accept: qGEgH3En71di5rrssAZTmtRTyFk=

Upgrade: websocket

�~d{"streams":[{"stream":{"app":"loki","container":"loki","container_id":"809458c447120553ec55bf7159fa1b5eab71e1d541077633d91f5511f48b14d5","controller_revision_hash":"one-eye-loki-7874db668c","host":"k3d-k3s-default-server","name":"loki","namespace":"default","pod":"one-eye-loki-0","pod_id":"89f61a2a-5baa-44bd-be2e-d6e4a7b79156","release":"one-eye-loki","statefulset_kubernetes_io_pod_name":"one-eye-loki-0"},"values":[["1593508211818521600","{\"stream\":\"stderr\",\"logtag\":\"F\",\"message\":\"level=error ts=2020-06-30T09:10:11.8182047Z caller=tailer.go:97 org_id=fake traceID=7939cf98b55a337f msg=\\\"Error writing to tail client\\\" err=\\\"rpc error: code = Internal desc = transport: transport: the stream is done or WriteHeader was already called\\\"\"}"]]},{"stream":{"app":"loki","container":"loki","container_id":"809458c447120553ec55bf7159fa1b5eab71e1d541077633d91f5511f48b14d5","controller_revision_hash":"one-eye-loki-7874db668c","host":"k3d-k3s-default-server","name":"loki","namespace":"default","pod":"one-eye-loki-0","pod_id":"89f61a2a-5baa-44bd-be2e-d6e4a7b79156","release":"one-eye-loki","statefulset_kubernetes_io_pod_name":"one-eye-loki-0"},"values":[["1593508289700192000","{\"stream\":\"stderr\",\"logtag\":\"F\",\"message\":\"level=info ts=2020-06-30T09:11:29.6975065Z caller=table_manager.go:292 msg=\\\"synching tables\\\" expected_tables=116\"}"]]}]}

��^C%

Our test query results were still looking ok, with the particular nginx-ingress log line looking like this. We could see the URL path /loki/loki/api/v1/tail and the query args, also query={name=\x22loki\x22}&limit=2.

127.0.0.1 - - [30/Jun/2020:09:27:49 +0000] "GET /loki/loki/api/v1/tail?query={name=\x22loki\x22}&limit=2 HTTP/1.1" 101 1245 "-" "curl/7.64.1" 267 3.778 [default-one-eye-ui-80] [] 10.42.0.20:80 0 3.777 101 478b2dfd61a5a38bd6ef2e0d57226ad3

Requesting Loki through the Kubernetes API server 🔗︎

The next step was to run the test query proxied through the Kubernetes API server, the same way the CLI proxy does.

kubectl proxy --port 8001

Running application-level gateway between localhost:8001 and the Kubernetes API Server.

The URL required by the API server is a bit more complex. Its first part contains the destination service /api/v1/namespaces/default/services/one-eye-nginx-ingress-controller:80 and its second part describes what URL to proxy /loki/loki/api/v1/tail?query=\{name="loki"\} connected with the /proxy URL which tells the apiserver to use proxy mode.

curlws 'localhost:8001/api/v1/namespaces/default/services/one-eye-nginx-ingress-controller:80/proxy/loki/loki/api/v1/tail?query=\{name="loki"\}' -i

The result was another error.

HTTP/1.1 101 Switching Protocols

Server: nginx/1.17.8

Date: Tue, 30 Jun 2020 09:23:29 GMT

Connection: upgrade

Sec-Websocket-Accept: wrWIcq4LOt2fDoU9xsJxH0zBMx0=

Upgrade: websocket

�-�parse error : syntax error: unexpected $end

When we had a look at the nginx-ingress logs, we realized that there weren’t any query arguments.

10.42.0.1 - - [30/Jun/2020:09:28:24 +0000] "GET /loki/loki/api/v1/tail HTTP/1.1" 101 47 "-" "curl/7.64.1" 283 0.006 [default-one-eye-ui-80] [] 10.42.0.20:80 0 0.006 101 aa853734e432cd429a242169045c1881

Verifying the error 🔗︎

So, it looked like no query args were getting transferred through the Kubernetes API server proxy with the WebSocket connections, and the (empty) Loki LogQL query was failing and returning an error. The error message was a little bit misleading or vague, because a simple emptiness check with a relevant error message would have helped us a lot.

That meant that we should receive the same error message when calling the previously port-forwarded Loki service directly without any query args.

curlws 'http://localhost:3100/loki/api/v1/tail' -i

Please note the single

lokiURL, since our request is skipping the ingress.

And the result was the same! Now we had enough information about the issue to do something about it.

HTTP/1.1 101 Switching Protocols

Upgrade: websocket

Connection: Upgrade

Sec-WebSocket-Accept: bFKsYQ4fN7yQPofaObI65dx1mek=

�-�parse error : syntax error: unexpected $end

Possible solutions overview 🔗︎

Of course, we did a lot of googling and found several tickets:

- Kubectl proxy would strip out the parameter for WebSocket request

- service proxy for WebSocket is missing forwarded headers and query params

- API proxy strips URL query params when proxying WebSocket connections

Update: a solution to this issue has since been submitted, but is yet to be approved: Fixed bug where query string was lost on connection upgrade without using request location

Due to the inactive status of these tickets, we decided to work around the problem. It was clear that we needed to transfer the query args in a different way, so we figured out two possible ways of handling the problem:

- transfering the query args in request headers

- building a new URL with query args (somehow) placed in the URL path, and restoring them when they were no longer needed (which we named composition/decomposition)

We considered transfering the query args in request headers, since it might have been a cleaner solution, but some of the tickets mentioned missing headers as well, so we decided it was better to be safe than sorry. But changing the URL path part might have gotten messy fast, so we tried to keep the composition process as close to the decomposition as possible. At first, we thought we could do the magic in the Ingress configuration, but the nginx-ingress was difficult to customize. This forced us to extend the LogQL request proxy-chain with our backend server - we had it there for unrelated reasons - from where we could easily restore the URLs.

The new architectural design looked like this:

- CLI Proxy composites the URL

- One-Eye-Backend decomposites the URL

- We also had some issues about Loki base path handling (shout out to the Loki devs for the quick response in regards to that), therefore we did some proxy path rule rewriting at the Ingress level. With the chosen solution (proxying the Loki requests and responses through the One Eye backend) we could redesign the rewrites and simply place them in the backend.

- Any element of the proxy-chain was able to proxy standard LogQL queries this way (except when the Kubernetes API server called directly)

An in-depth exploration of the chosen solution 🔗︎

Compositing query args on the CLI Proxy 🔗︎

This function receives a URL and builds a new URL from it, where the query args are stored in the URL path. It returns the original URL when an error occurs.

import ("encoding/base64"

"net/url")

...

const QueryArgsURLPart string = "queryargs"

...

func paramsToPath(uri *url.URL) *url.URL {

// cloning url

result, err := url.Parse(uri.String())

if err != nil {

return uri

}

if len(result.RawQuery) > 0 {

// composite all the query args into the path

result.Path += fmt.Sprintf("/%s/%s/", QueryArgsURLPart, base64.URLEncoding.EncodeToString([]byte(result.RawQuery)))

// clear query args to avoid future problems

result.RawQuery = ""

}

return result

}

We call URL composition for only WebSocket requests, where query args aren’t empty

...

// only websocket connections

if req.Header.Get("Connection") == "Upgrade" &&

req.Header.Get("Upgrade") == "websocket" &&

// only with query args presented

len(req.URL.RawQuery) > 0 {

req.URL = paramsToPath(req.URL)

}

...

After implementing the workaround in the CLI proxy and requesting our test Loki tail query again, the ingress log looked like this:

curlws 'localhost:8080/loki/loki/api/v1/tail?query=\{name="loki"\}&limit=2'

10.42.0.1 - - [30/Jun/2020:12:58:17 +0000] "GET /loki/loki/api/v1/tail/queryargs/cXVlcnk9e25hbWU9Imxva2kifSZsaW1pdD0y/ HTTP/1.1" 101 1242 "-" "curl/7.64.1" 350 1.923 [default-one-eye-ui-80] [] 10.42.0.20:80 0 1.923 101 e4425bd0a2c4462ca1746e6757e5cc86

Our url-composition worked well, the URL /loki/loki/api/v1/tail?query=\{name="loki"\}&limit=2 has changed to /loki/loki/api/v1/tail/queryargs/cXVlcnk9e25hbWU9Imxva2kifSZsaW1pdD0y/.

We were able to simply verify the encoded part with a quick bash command:

echo "cXVlcnk9e25hbWU9Imxva2kifSZsaW1pdD0y" | base64 -d

query={name="loki"}&limit=2

Decompositing query args on One-Eye-Backend 🔗︎

This function restores the original Loki tail query URL or returns the intact URL if the error occurs.

const QueryArgsURLPart string = "queryargs"

var (

QueryArgsRegxPattern string = fmt.Sprintf("/%s/(%s)/", QueryArgsURLPart, "[^/]*")

QueryArgsRegx = regexp.MustCompile(QueryArgsRegxPattern)

)

func PathToParams(uri *url.URL) *url.URL {

if QueryArgsRegx.MatchString(uri.String()) {

// cloning url

result, err := url.Parse(uri.String())

if err != nil {

return uri

}

// checking the existence of composited query args

subResults := QueryArgsRegx.FindStringSubmatch(result.String())

if len(subResults) != 2 {

return uri

}

// decomposites query args

decodedArgs, err := base64.URLEncoding.DecodeString(subResults[1])

if err != nil {

return uri

}

// restores the original query args

result.RawQuery = string(decodedArgs)

// removing the composited query args from the path

result.Path = QueryArgsRegx.ReplaceAllLiteralString(result.Path, "")

return result

}

return uri

}

The decomposition function can be called on every request, it only matches with composited URLs, or returns the original URL if not a composite (the regex doesn’t match). The real implementation was more complex of course, but the basic idea was that, this way, our One Eye backend restored the form of the URL, and the Loki service received the original request.

After we’ve released all the workaround changes, Loki’s /tail endpoint became useable through the CLI proxy.

Summary 🔗︎

Overall, we had ambivalent feelings about applying a workaround to a feature as basic as proxying a request. On one hand, it seemed unnecessary, on the other hand, it was a great exercise that sent us digging into the depths of Kubernetes.

In other words: this wouldn’t have been a big deal if it had had been outside Kubernetes, with a regular nginx, or if there had been more informative error handling in Loki.

A few thoughts about the components:

Kubernetes: the Kubernetes API server proxy issue (with HTTP query args) still exists despite the several issue tickets.

Nginx: we love Nginx but nginx-ingress is quite cumbersome to customize, this led us to move the rewrites to the One Eye backend (like rewrites, etc.).

Loki: the base-path URL handling problem was a bug, it will be fixed I’m sure, but the non-relevant error message made it hard to discover the problem. The Grafana/Loki HTTP api was very useful though.