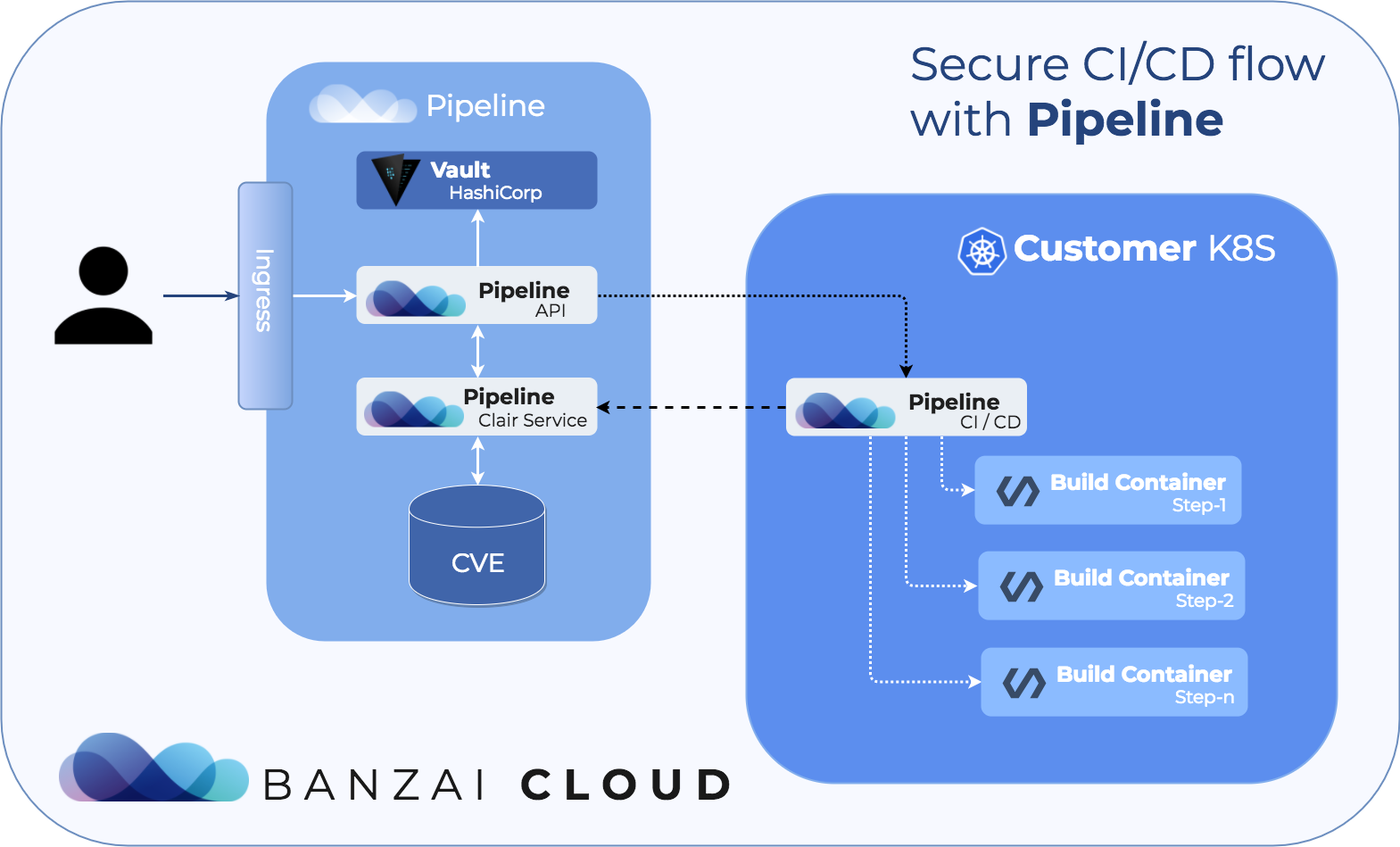

At Banzai Cloud we are building Pipeline, a feature-rich, enterprise-grade application platform built for containers on top of Kubernetes. Security is one of our main areas of focus, and we strive to automate and enable the security patterns we consider essential, as tier-zero features for all enterprises using the Pipeline Platform.

We’ve blogged about how to handle security scenarios in several of our previous posts. This time we’d like to focus on a different aspect of securing Kubernetes deployments:

- static code scans that are part of our CI/CD Pipeline

- container vulnerability scans, which are part of the same CI/CD Pipeline

- Kubernetes deployment scans/rescans at the time of deployment and post deployment

Note: The Pipeline CI/CD module mentioned in this post is outdated and no longer available. You can integrate Pipeline with your CI/CD solution using the Pipeline API. Contact us for details.

Update - we have switched from Clair to Anchore Engine. For details please read this post: https://banzaicloud.com/blog/anchore-image-validation/

Security series:

- Authentication and authorization of Pipeline users with OAuth2 and Vault

- Dynamic credentials with Vault using Kubernetes Service Accounts

- Dynamic SSH with Vault and Pipeline

- Secure Kubernetes Deployments with Vault and Pipeline

- Policy enforcement on K8s with Pipeline

- The Vault swiss-army knife

- The Banzai Cloud Vault Operator

- Vault unseal flow with KMS

- Kubernetes secret management with Pipeline

- Container vulnerability scans with Pipeline

- Kubernetes API proxy with Pipeline

tl;dr:

We have released Drone CI/CD plugins to run static code analysis and container vulnerability scans.

The problem statement

A few weeks ago, news broke that a few container images available on Docker Hub (for over 8 months) were mining cryptocurrencies in addition to their intended purposes.

Backdoored images downloaded 5 million times finally removed from Docker Hub. 17 images posted by a single account over 10 months may have generated $90,000.

How was this possible? It’s pretty simple: we’re living through a paradigm shift in which every developer is becoming a DevOps engineer, but often lacks the necessary skills or does not pay enough attention to the new role. In the world of VMs, DevOps/infra/security teams used to validate OS images, check the installed packages for vulnerabilities, and release them. Now, with the wide adoption of Kubernetes and containers in general, developers are building their own container images. However, these images are rarely built from scratch. They are typically built on base images, which are in turn built on top of other base images, so the layers below the actual application come from public, third-party sources.

This brings us to the following problems:

- images and libraries may contain obsolete or vulnerable packages

- many existing legacy vulnerability-scanning tools may not work with containers

- existing tools are hard to integrate into new delivery models

Over 80% of the latest versions of official images publicly available on Docker Hub contained at least one high severity vulnerability!

How Pipeline automates vulnerability scans

Banzai Cloud is a Kubernetes Certified Solution Provider and one of our mission statements is to bring cloud native to enterprises. We have already deployed and managed large Kubernetes deployments with Pipeline, and during this process we have learned a few things, like:

- enterprises demand strict security (and they like Pipeline because we enforce it)

- developers often overlook security because their main focus is to "make it work on my computer", so vulnerabilities often end up in production

- they need a Platform where these strict security enforcements can’t be bypassed

- the entry barrier should be as low as possible, ideally close to zero

- security should be a tier-zero feature

While we were building the Platform and adding new features, we learned and listened, and now we’re enabling and automating the following steps for Pipeline users:

- Static code analysis and vulnerability scans using our CI/CD pipeline plugin.

- Once build artifacts are created, the CI/CD pipeline creates containers. Once containers are created, we scan each layer for vulnerabilities using data from the CVE databases.

- If all scans pass, Pipeline pushes the containers to a container registry, or creates a Kubernetes deployment. We re-scan these Kubernetes deployments with configurable frequency.

- In case vulnerabilities are found during the deployment lifecycle, we allow enterprises to implement several strategies, such as: notification (this is a default operation, we notify by email or Slack), graceful removal of the deployment, a snapshot, and a fallback to a previous deployment (although this is unlikely to pass the scan, because vulnerabilities are typically in 3rd party layers).
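One way to picture the strategies in the last step is as a per-organization policy that maps a failed rescan to an ordered list of actions. The sketch below is illustrative only: the `Strategy` names, the `RescanResult` type and the `plan_actions` function are hypothetical and not part of the Pipeline API. It assumes that notification always runs first, since it is described above as the default operation.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Strategy(Enum):
    """Hypothetical names for the strategies listed above."""
    NOTIFY = "notify"            # default: email or Slack
    GRACEFUL_REMOVAL = "remove"  # gracefully remove the deployment
    SNAPSHOT = "snapshot"        # take a snapshot
    FALLBACK = "fallback"        # fall back to a previous deployment


@dataclass
class RescanResult:
    deployment: str
    vulnerable: bool


def plan_actions(result: RescanResult, configured: List[Strategy]) -> List[Strategy]:
    """Return the ordered actions for one rescan result.

    Assumption: notification is always included and runs first; the
    remaining strategies keep the order the organization configured.
    """
    if not result.vulnerable:
        return []
    actions = [Strategy.NOTIFY]
    for strategy in configured:
        if strategy not in actions:
            actions.append(strategy)
    return actions


if __name__ == "__main__":
    result = RescanResult(deployment="example-app", vulnerable=True)
    for action in plan_actions(result, [Strategy.SNAPSHOT, Strategy.GRACEFUL_REMOVAL]):
        print(action.value)
```

Keeping the policy as plain data makes it easy to store per organization or per profile and to audit which actions a given rescan would trigger.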

Under the hood - how it works

The Pipeline control plane hosts all the components needed for static code scans and container vulnerability checks (Sonar, Clair, databases, etc.) and offers them as a service. There is hard (schema) isolation per organization or department, namespace separation for scans, OAuth2 JWT token-based security, and integration with Vault. As usual, secrets in Pipeline (e.g. private container registry access) are encrypted and stored inside Vault.

Static code analysis - Sonar

A GitHub webhook triggers the build, and the CI/CD pipeline checks out the code inside the Kubernetes cluster owned by the organization/developer. The static code analysis runs inside that Kubernetes cluster, but the result is pushed back to the Pipeline control plane. Each organization has its own database schema, and access to the Sonar interface uses OAuth2 JWT tokens (as we do basically everywhere inside Pipeline). Depending on organization ACLs and GitHub groups, individuals might or might not see each other’s builds or organization-level results.

For our enterprise users we support LDAP and AD authentication and authorization based on roles. Pipeline is integrated with Dex to support multiple identity provider backends.

By default, Pipeline is non-intrusive: apart from raising alarms, it will not break a build. However, rules can be configured at the organization level or in profiles (e.g. test, UAT, production) and enforced on builds triggered by developers belonging to an organization.

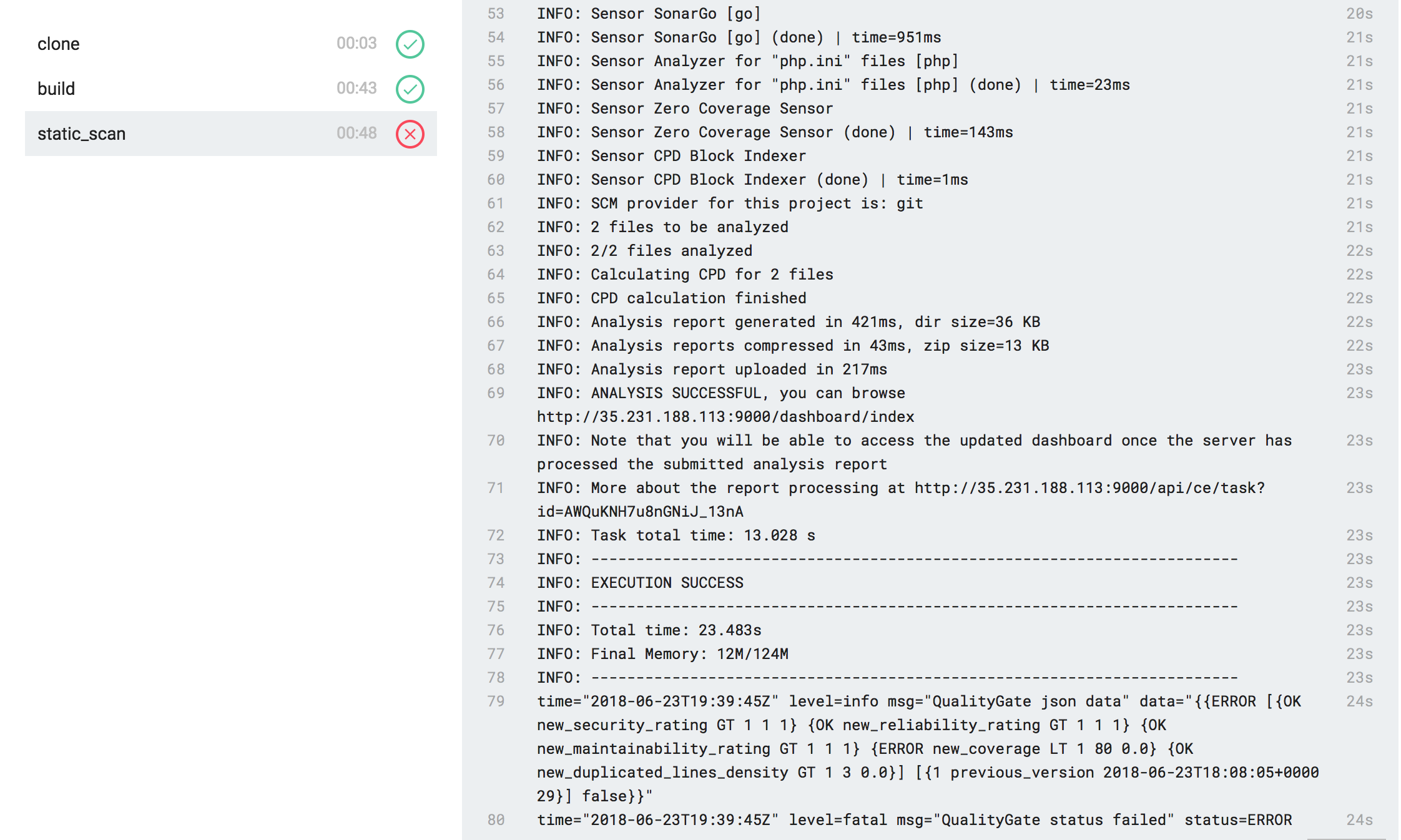

Static code scans are enabled in the CI/CD pipeline and are configured with an acceptable Sonar QualityGate status. Before running the sonar-scanner process, the plugin creates a sonar-scanner.properties file from a template. The properties file contains the sonar-scanner configuration, including the Sonar server host, sources, inclusions, exclusions, encoding, branch, etc. After the sonar-scanner process finishes successfully, the plugin waits for the QualityGate result and breaks the build if the result does not pass.
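To make the two plugin steps more concrete, here is a minimal sketch of rendering a properties file from a template and comparing a reported gate status against the accepted one. It is not the plugin's actual code: the template layout and the `render_properties` and `gate_passes` helpers are assumptions for illustration, and only the `sonar.*` keys are standard sonar-scanner analysis parameters. The host and project values are placeholders.

```python
from string import Template

# The sonar.* keys are standard analysis parameters; the template layout
# itself is an assumption, not the plugin's real template.
PROPERTIES_TEMPLATE = Template(
    "sonar.host.url=$host\n"
    "sonar.projectKey=$project_key\n"
    "sonar.sources=$sources\n"
    "sonar.inclusions=$inclusions\n"
    "sonar.exclusions=$exclusions\n"
    "sonar.sourceEncoding=$encoding\n"
)


def render_properties(settings: dict) -> str:
    """Fill in the template; raises KeyError if a setting is missing."""
    return PROPERTIES_TEMPLATE.substitute(settings)


def gate_passes(actual: str, required: str = "OK") -> bool:
    """Compare the reported quality gate status with the accepted one."""
    return actual.strip().upper() == required.strip().upper()


if __name__ == "__main__":
    print(render_properties({
        "host": "https://sonar.example.com",  # placeholder host
        "project_key": "example-app",         # placeholder project
        "sources": ".",
        "inclusions": "**/*.go",
        "exclusions": "vendor/**",
        "encoding": "UTF-8",
    }))
    print(gate_passes("OK"))
```

Using `substitute` rather than `safe_substitute` means a missing setting fails loudly before the scanner ever runs, which seems preferable for a build step.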

An example CI/CD yaml:

    workspace:
      base: /go
      path: src/github.com/example/app

    pipeline:
      build:
        image: golang:1.9
        environment:
          - CGO_ENABLED=0
        commands:
          - go test -cover -coverprofile=coverage.out
          - go build -ldflags "-s -w -X main.revision=$(git rev-parse HEAD)" -a

      static_scan:
        image: registry/sonar-scanner
        quality: OK
        secrets: [ sonar_host, sonar_token ]

The Sonar server runs on the Pipeline control plane and is backed by an isolated database for each organization. Every organization has its own user/password pair, which is stored in Vault and managed with Pipeline. For further information on how we manage secrets on k8s, please read: Kubernetes secret management with Pipeline.

This is configurable per organization, and the server and database can run inside the Kubernetes cluster as well.

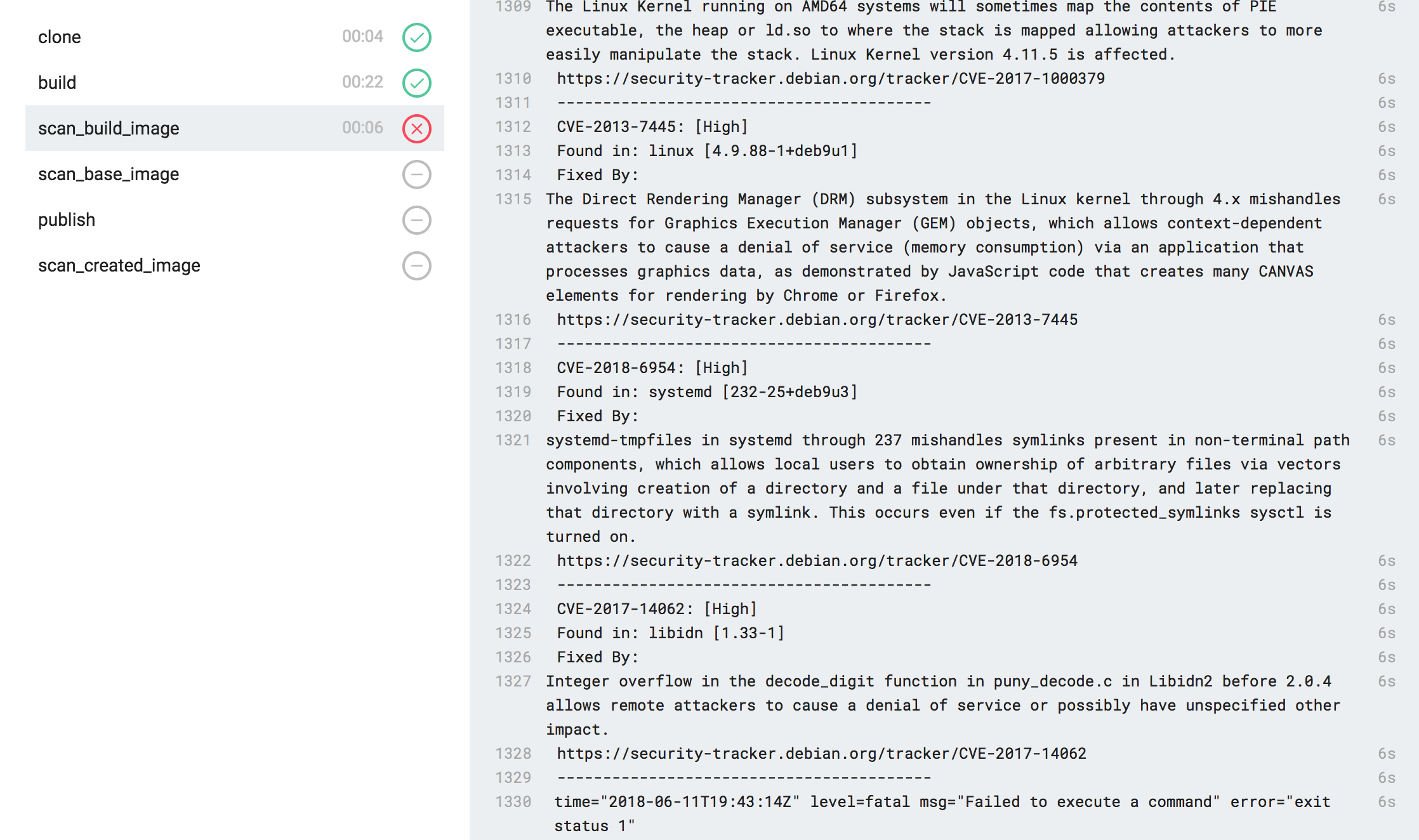

Container vulnerability checks - Clair

Pipeline uses Clair for vulnerability checks. Scans are isolated by organization (or department) and run inside a separate namespace. We do not store the layers, but only the hashes and the vulnerabilities associated with the layers.
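The idea of keeping only hashes and findings can be sketched as a small index keyed by layer digest. This is a simplified illustration, not how Clair or Pipeline store data: the `LayerIndex` class is hypothetical, the digest is computed locally here (in practice it would usually come from the image manifest), and the CVE identifiers in the usage example are placeholders.

```python
import hashlib
from typing import Dict, List


class LayerIndex:
    """Keeps only layer digests and their findings, never the layer bytes."""

    def __init__(self) -> None:
        self._findings: Dict[str, List[str]] = {}

    @staticmethod
    def digest(layer_bytes: bytes) -> str:
        """Content-addressed identifier for a layer."""
        return "sha256:" + hashlib.sha256(layer_bytes).hexdigest()

    def record(self, layer_bytes: bytes, vulnerabilities: List[str]) -> str:
        """Store de-duplicated findings under the layer digest and return it."""
        key = self.digest(layer_bytes)
        self._findings[key] = sorted(set(vulnerabilities))
        return key

    def lookup(self, digest: str) -> List[str]:
        """Return a copy of the findings for a digest (empty if unknown)."""
        return list(self._findings.get(digest, []))


if __name__ == "__main__":
    index = LayerIndex()
    # Placeholder identifiers, not real CVEs.
    key = index.record(b"example layer content", ["CVE-0000-0002", "CVE-0000-0001"])
    print(key, index.lookup(key))
```

Because the key depends only on layer content, a base layer shared by many images maps to a single entry, so its findings can be reused without keeping the layer itself.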

By default, Pipeline is non-intrusive: apart from raising alarms, it will not break builds or remove deployments. However, rules can be configured to trigger actions.

Vulnerability scans are enabled during the CI/CD pipeline with configs for images, severity and threshold. Multiple scans are allowed, since, during an image build, we can use more than one image. For example, if we are about to create an application written in Golang, we’ll need to build it inside a container which contains all the necessary packages for compilation, but, when running the application, we won’t need to use the whole build environment.

An example CI/CD yaml:

    workspace:
      base: /go
      path: src/github.com/example/app

    pipeline:
      build:
        image: golang:1.9
        environment:
          - CGO_ENABLED=0
        commands:
          - go test -cover -coverprofile=coverage.out
          - go build -ldflags "-s -w -X main.revision=$(git rev-parse HEAD)" -a

      scan_build_image:
        image: registry/drone-clair-scanner
        scan_image: golang:1.9
        severity: High
        treshold: 20
        secrets: [ docker_username, docker_password ]

      scan_base_image:
        image: registry/drone-clair-scanner
        scan_image: alpine:3.7
        severity: Medium
        treshold: 1
        secrets: [ docker_username, docker_password ]

      publish:
        image: plugins/docker
        repo: registry/app-docker-image
        tags: [ 1.3 ]
        secrets: [ docker_username, docker_password ]

      scan_created_image:
        image: registry/drone-clair-scanner
        scan_image: registry/app-docker-image
        severity: Medium
        treshold: 1
        secrets: [ docker_username, docker_password ]

When the configured threshold is exceeded, the build process fails.
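The pass/fail decision can be sketched roughly as follows. The severity names follow Clair's severity levels, but the exact counting rule is an assumption: this sketch counts findings at or above the configured severity and treats "exceeded" as strictly greater than the threshold. The function names are hypothetical and the plugin may behave differently.

```python
from typing import Iterable

# Clair severity levels, ordered from least to most severe.
SEVERITIES = ["Unknown", "Negligible", "Low", "Medium", "High", "Critical", "Defcon1"]


def count_at_or_above(found: Iterable[str], minimum: str) -> int:
    """Count findings whose severity is at least `minimum` (assumed rule)."""
    floor = SEVERITIES.index(minimum)
    return sum(1 for severity in found if SEVERITIES.index(severity) >= floor)


def build_should_fail(found: Iterable[str], minimum: str, threshold: int) -> bool:
    """Fail only when the count is strictly greater than the threshold."""
    return count_at_or_above(found, minimum) > threshold


if __name__ == "__main__":
    findings = ["Low", "Medium", "High", "High"]  # made-up scan result
    print(build_should_fail(findings, "Medium", 1))
    print(build_should_fail(findings, "High", 20))
```

Under these assumptions, a generous threshold on the build image and a strict one on the base and final images, as in the example configuration, lets teams tolerate findings in tooling that never ships while gating what actually runs.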

The Clair server runs on the Pipeline control plane, so it’s crucial to isolate organization scans at the database level. This is also true for Sonar; every organization has its own database and Vault secret. When a vulnerability scan is initiated, the control plane starts a Clair pod using the organization’s own database.

When a new organization is created, the control plane starts a new init pod within Pipeline K8s, which connects to the DB service and creates a new database owned by the organization. This is configurable per organization, and the server and database can run inside the Kubernetes cluster as well.
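As a rough illustration of per-organization isolation, the snippet below derives a stable, identifier-safe database name for each organization and service. This is purely a sketch: the naming scheme and the `database_name` helper are hypothetical, and the post does not describe how Pipeline actually names its databases.

```python
import hashlib
import re


def database_name(organization: str, service: str) -> str:
    """Derive a stable, identifier-safe database name for one organization.

    Hypothetical scheme: <service>_<slug>_<short hash>.
    """
    slug = re.sub(r"[^a-z0-9]+", "_", organization.lower()).strip("_")
    # A short hash of the original name keeps "Acme Inc" and "acme-inc" apart
    # even though they produce the same slug.
    suffix = hashlib.sha256(organization.encode("utf-8")).hexdigest()[:8]
    return f"{service}_{slug[:32]}_{suffix}"


if __name__ == "__main__":
    for org in ("Acme Inc", "acme-inc"):
        print(database_name(org, "clair"))
```

Deriving the name deterministically means an init job can be re-run safely: it always targets the same database for the same organization.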

We take our users’ security seriously. If you believe that you have found a security vulnerability please contact us at security@banzaicloud.com. Thank you.

If you’d like to learn more about Banzai Cloud and our approach to securing Kubernetes deployments, check out the other posts on this blog and the Pipeline project.