At Banzai Cloud we are building a feature-rich, enterprise-grade application platform, built for containers on top of Kubernetes, called Pipeline. With Pipeline we provision large, multi-tenant Kubernetes clusters on all major cloud providers, specifically AWS, GCP, Azure and AliCloud, as well as BYOC (on-premise and hybrid), and deploy all kinds of predefined or ad-hoc workloads to these clusters. For us and our enterprise users, authentication and authorization are absolutely vital. To access the Kubernetes API and Services in an authenticated manner, as defined within Kubernetes, we arrived at a simple but flexible solution.

Security series:

- Authentication and authorization of Pipeline users with OAuth2 and Vault

- Dynamic credentials with Vault using Kubernetes Service Accounts

- Dynamic SSH with Vault and Pipeline

- Secure Kubernetes Deployments with Vault and Pipeline

- Policy enforcement on K8s with Pipeline

- The Vault swiss-army knife

- The Banzai Cloud Vault Operator

- Vault unseal flow with KMS

- Kubernetes secret management with Pipeline

- Container vulnerability scans with Pipeline

- Kubernetes API proxy with Pipeline

Kubernetes API proxy with Pipeline

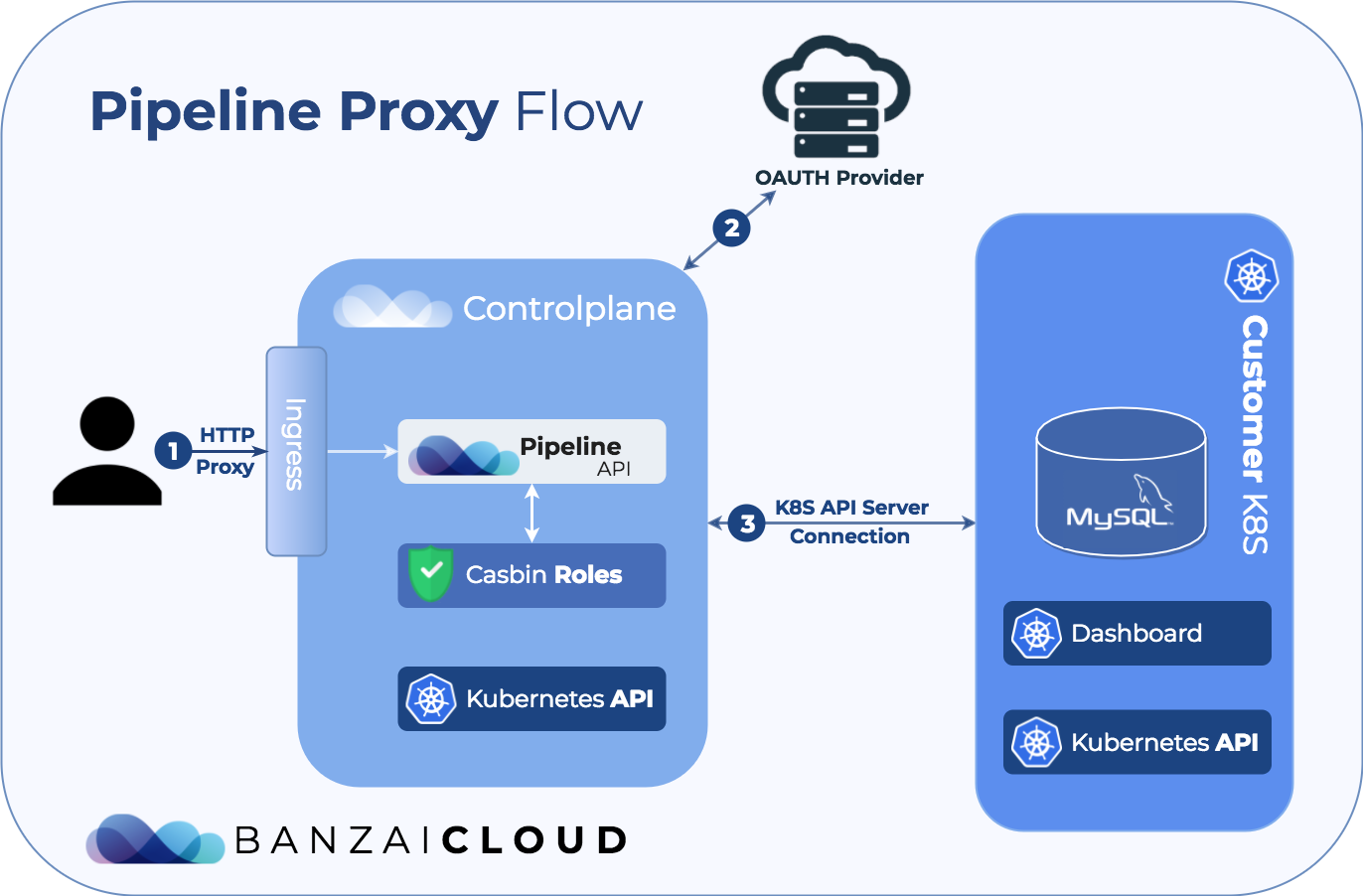

Pipeline is an application-centric platform; our end users push deployments to Kubernetes (clusters we install or manage) through our RESTful API or CLI. In many cases they don’t care about the logistics of how they get from code to deployment, as long as that process fulfills their enterprise standards (e.g. static code analysis, vulnerability scans, autoscaling deployments, etc.). Administrators, however, might want deeper interaction with the system. For example, they will likely want to access the K8s API server or the interactive dashboard. All Pipeline deployments are secured using OAuth2 tokens and fine-grained ACLs. We automate all of this, but this post describes the low-level code details and offers guidance to those who would like to implement something similar.

The Kubernetes API server is a very versatile daemon. It offers proxy capabilities towards system and user-defined Kubernetes Services, and it also allows you to:

- Manage existing cluster resources

- Visualize pod CPU and memory utilization collected by Heapster

- Configure an Ingress to publicly expose running applications

- Provision new deployments from JSON or YAML manifests

The versatility of an API server means that it must be made very secure. Otherwise it becomes a double-edged sword, and the threat does not only come from inside your organization. You may find yourself in a very uncomfortable situation, the way Tesla did in February, when its Kubernetes console, left without password protection, was abused to mine cryptocurrency.

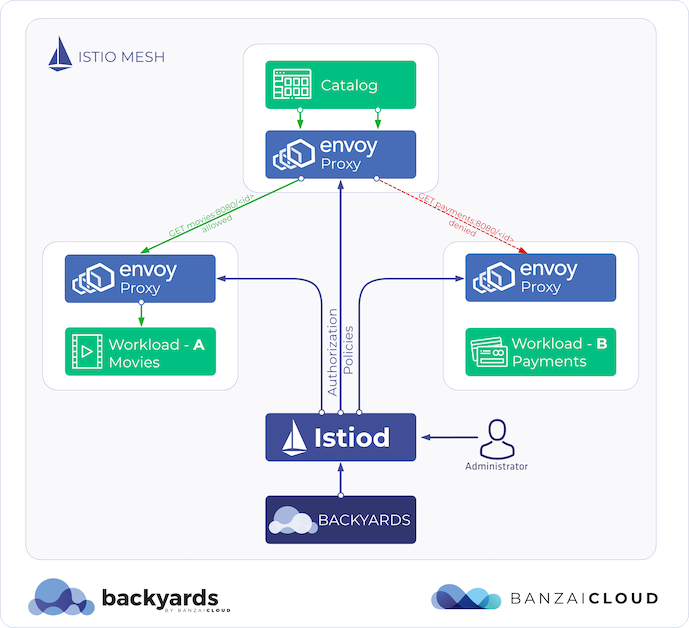

One handy way to secure the API server is to deploy a reverse proxy, such as OAuth2 Proxy, in front of it. Pipeline itself can be used in a similar manner. In addition, Casbin lets us restrict access at the HTTP request level, which gives us per-user authorization as well.

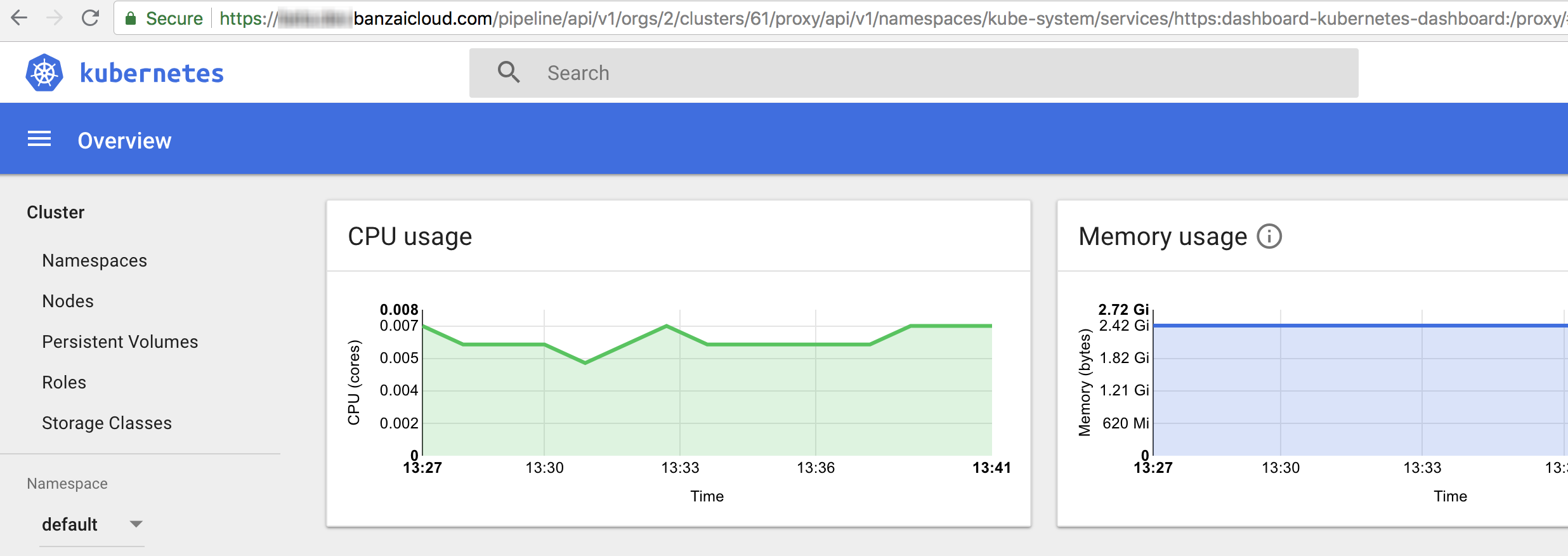

Pipeline installs the Kubernetes Dashboard by default on newly provisioned clusters.

The workflow

This workflow assumes that you have already set up a working Pipeline installation on your local machine by following the Pipeline developer documentation.

```shell
# Open the GitHub login URL in your default browser
open http://localhost:9090/auth/github/login
```

After a successful GitHub OAuth login, get a Pipeline API token:

```shell
open http://localhost:9090/pipeline/api/v1/token
```

```json
{
  "id": "06790441-9564-4cb1-9c33-e498ad154f84",
  "token": "eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJhdWQiOiJodHRwczovL3BpcGVsaW5lLmJhbnphaWNsb3VkLmNvbSIsImp0aSI6IjA2NzkwNDQxLTk1NjQtNGNiMS05YzMzLWU0OThhZDE1NGY4NCIsImlhdCI6MTUzMjUwMzc2NiwiaXNzIjoiaHR0cHM6Ly9iYW56YWljbG91ZC5jb20vIiwic3ViIjoiMSIsInNjb3BlIjoiYXBpOmludm9rZSIsInR5cGUiOiJ1c2VyIiwidGV4dCI6ImJvbmlmYWlkbyJ9.4y2f9__AMxuivCUl6Ge6zVrUdlV_DTY-l-vEVdErzB4"
}
```

Copy the value of the "token" field from this response and export it as an environment variable:

```shell
export TOKEN=...
```
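You can script this step instead of copying the token by hand. The following Go sketch extracts the token field from a saved token response. The shell equivalent is piping the response through jq -r .token. parseToken is a hypothetical helper, and the sample body uses a shortened, made-up token.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// tokenResponse mirrors the two fields of the token response shown above.
type tokenResponse struct {
	ID    string `json:"id"`
	Token string `json:"token"`
}

// parseToken (hypothetical helper) returns the "token" field of a Pipeline
// token response body.
func parseToken(body []byte) (string, error) {
	var tr tokenResponse
	if err := json.Unmarshal(body, &tr); err != nil {
		return "", fmt.Errorf("decoding token response: %w", err)
	}
	if tr.Token == "" {
		return "", errors.New("token response has no token field")
	}
	return tr.Token, nil
}

func main() {
	// Sample body with a shortened, made-up token value.
	body := []byte(`{"id": "06790441-9564-4cb1-9c33-e498ad154f84", "token": "eyJhbGciOi.example.signature"}`)
	token, err := parseToken(body)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	// Print the same export line as the shell step above.
	fmt.Printf("export TOKEN=%s\n", token)
}
```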

Next, create a cluster with Pipeline, as described in the existing documentation, which is also available as a Postman collection.

Let’s assume that you have created a cluster in the organization with ID 1, and that the cluster ID is 1 as well. To proxy the Kubernetes API’s root path, open this URL in your browser:

```shell
open http://localhost:9090/pipeline/api/v1/orgs/1/clusters/1/proxy/
```

Open the Kubernetes Dashboard in your browser through the proxy:

```shell
open http://localhost:9090/pipeline/api/v1/orgs/1/clusters/1/proxy/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/
```
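Both proxy URLs follow one pattern. They start with Pipeline's proxy prefix for an organization and a cluster, followed by the Kubernetes API path to forward. For the Dashboard, that path is the API server's own service proxy path, /api/v1/namespaces/<namespace>/services/<scheme>:<name>:<port>/proxy/. The following Go sketch assembles both for organization 1 and cluster 1. The helper names are hypothetical. The trailing colon with an empty port selects the service's default or unnamed port, as described in the Kubernetes documentation on accessing services through the API server proxy.

```go
package main

import (
	"fmt"
	"strings"
)

// pipelineProxyURL (hypothetical helper) builds the Pipeline proxy URL for a
// cluster; path is the Kubernetes API path to forward, e.g. "/" or "/api/v1".
func pipelineProxyURL(base string, orgID, clusterID int, path string) string {
	return fmt.Sprintf("%s/pipeline/api/v1/orgs/%d/clusters/%d/proxy/%s",
		strings.TrimSuffix(base, "/"), orgID, clusterID, strings.TrimPrefix(path, "/"))
}

// serviceProxyPath (hypothetical helper) builds the API server's service proxy
// path. An empty port selects the service's default or unnamed port.
func serviceProxyPath(namespace, scheme, name, port string) string {
	return fmt.Sprintf("/api/v1/namespaces/%s/services/%s:%s:%s/proxy/",
		namespace, scheme, name, port)
}

func main() {
	base := "http://localhost:9090"
	// Root path of the Kubernetes API through Pipeline.
	fmt.Println(pipelineProxyURL(base, 1, 1, "/"))
	// Kubernetes Dashboard through Pipeline and the API server's service proxy.
	fmt.Println(pipelineProxyURL(base, 1, 1,
		serviceProxyPath("kube-system", "https", "kubernetes-dashboard", "")))
}
```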

You can also call the API with cURL. In that case you must pass the token in the Authorization header:

```shell
curl -f -s -H "Authorization: Bearer $TOKEN" \
  -H "Content-Type: application/json" \
  http://localhost:9090/pipeline/api/v1/orgs/1/clusters/1/proxy/ | jq
```

Output:

```json
{
  "paths": [
    "/api",
    "/api/v1",
    "/apis",
    "/apis/",
    "/apis/admissionregistration.k8s.io",
    "/apis/admissionregistration.k8s.io/v1beta1",
    "/apis/apiextensions.k8s.io",
    "/apis/apiextensions.k8s.io/v1beta1",
    "/apis/apiregistration.k8s.io",
    "/apis/apiregistration.k8s.io/v1",
    "/apis/apiregistration.k8s.io/v1beta1",
    "/apis/apps",
    "/apis/apps/v1",
    "/apis/apps/v1beta1",
    ...
  ]
}
```

Benefits

Because the whole Kubernetes API is proxied through Pipeline, Pipeline's existing authentication mechanism can be reused. Pipeline already handles the OAuth login, so you don’t have to set up a separate OAuth proxy. The default provider is GitHub, but because we use the pluggable qor/auth backend, it’s easy to add Google or other OAuth providers to Pipeline. For our enterprise users we provide LDAP/AD support as well. Users belong to organizations and have roles handled by the Casbin backend, so access to the Kubernetes API can be restricted by role. For example, administrators have full CRUD access on the Dashboard, while members have read-only access to the API.

Implementation details

Naturally, this project is open source, and its code is available in Pipeline’s GitHub repository.

The driving force, the proxy itself, is based on a slightly modified version of the code behind the kubectl proxy command.

When a proxy request arrives for a cluster, the Gin handler checks whether a proxy instance already exists for that cluster. If it does, the handler reuses it and passes on the HTTP context. Otherwise, a proxy towards the cluster is created from the K8s configuration already stored in Vault. This proxy is then stored in a thread-safe sync.Map for later reuse. When the cluster is deleted, its proxy (if there is one) is deleted as well.

We install this ProxyToCluster handler using Gin's Any method, so it catches all HTTP methods. Each request passes through at least three other handlers before this one can start serving it. The first is the Audit handler, which audits all requests sent to the API: user, timestamp, path and method are saved, and sensitive data is masked out. The second is the Auth handler, which parses the Authorization header or the session cookies sent by the user to authenticate the request. Last, the Casbin-based Authorization handler checks Casbin’s role and policy storage to see whether the current user has the right to access the requested path with the corresponding method.

We take our Pipeline users’ security and trust very seriously. As usual, the code is open source. If you believe you have found a security issue, please contact us at security@banzaicloud.com. Thank you.