Leveraging a Cloud Native technology stack, Banzai Cloud Supertubes is the ultimate deployment tool for setting up and operating production-ready Kafka clusters on Kubernetes. Supertubes installs and manages all the infrastructure components of a production-ready Kafka cluster on Kubernetes (such as Zookeeper, Koperator, and Envoy), and it also includes several convenience components, such as Schema Registry, multiple disaster recovery options, and Cruise Control. The list of Supertubes features is long and covers everything you need to self-host and run a production-ready Kafka cluster on Kubernetes. Today, we’ll explore one of these features, Schema Registry, through a real-world example.

tl;dr:

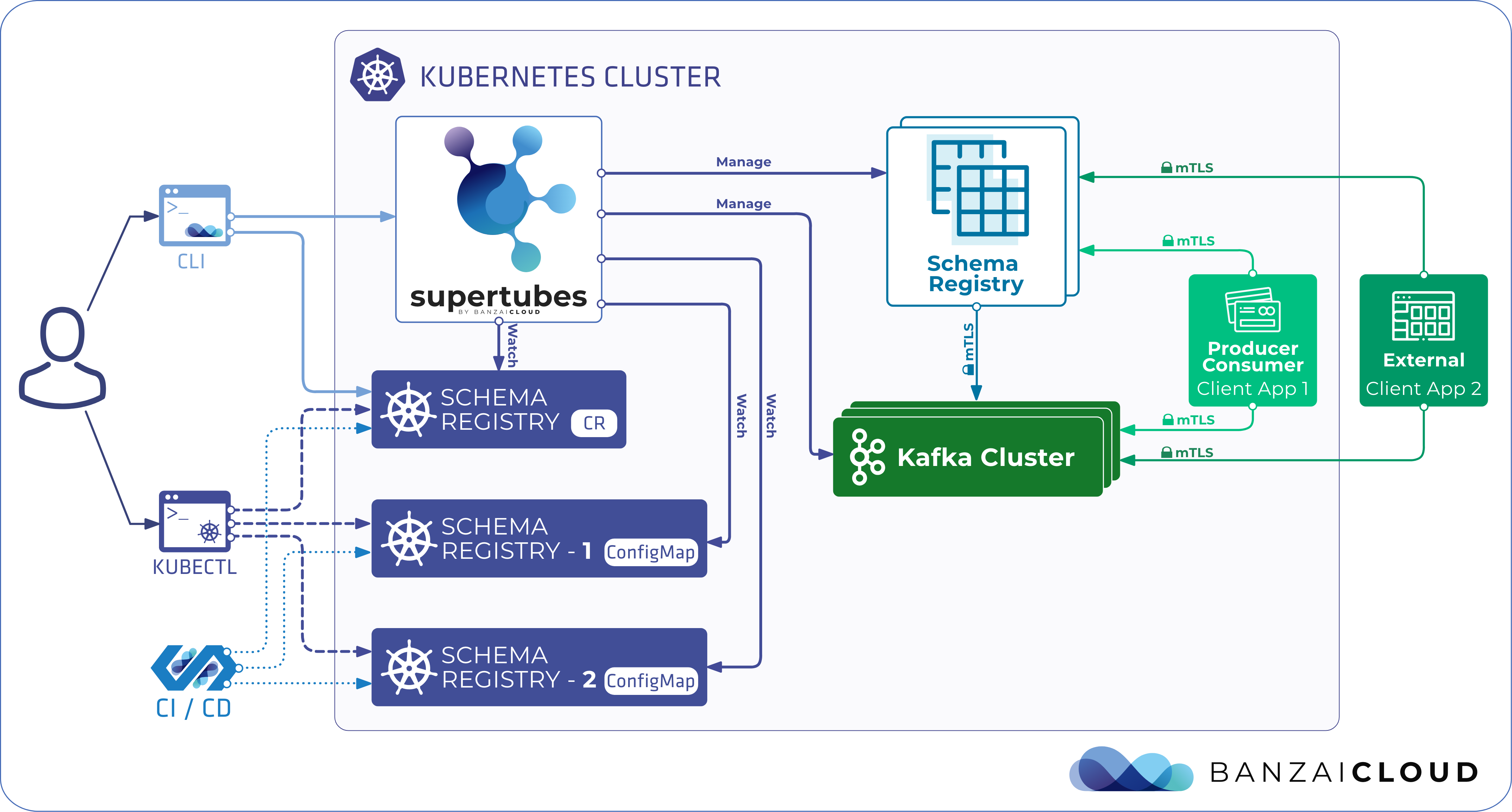

The latest version of Supertubes automates Schema Registry installation by introducing a new custom resource called:

- SchemaRegistry

It also provides a declarative approach to defining schemas, using ConfigMaps that carry two custom labels:

- schema-registry.banzaicloud.io/name

- schema-registry.banzaicloud.io/subject

This way we overcome difficulties inherent to handling schemas in a Kubernetes environment.

Schema Registry

For those not yet familiar with Schema Registry, it is a centralized repository for schemas and metadata. It enables Kafka producer and consumer applications to write data records of different shapes to Kafka topics and to read them back.

Manage Schema Registry instances with Supertubes

Imperative CLI

The Supertubes CLI provides commands for deploying Schema Registry instances with either default or custom settings. The easiest way to get started is the supertubes install -a command, which sets up everything needed to start playing with Kafka and Schema Registry on Kubernetes. Additional Schema Registry instances can be deployed, and existing ones managed, with the supertubes cluster schemaregistry command. The create and update commands expect a Schema Registry descriptor.

For the details of this descriptor, see the SchemaRegistry custom resource definition described in the section below.

Managing Schema Registry instances declaratively

Managing Schema Registry instances with Supertubes is as simple as creating and updating SchemaRegistry custom resources. Supertubes automatically watches the deployment and configuration settings that users specify in a SchemaRegistry custom resource, and performs the steps needed to spin up new Schema Registry instances or to reconfigure existing ones with the desired configuration.

Note: Besides installing, managing, and scaling the Kafka cluster, Supertubes also includes monitoring capabilities, with a rich set of dashboards and metrics that go down to the protocol level.

apiVersion: kafka.banzaicloud.io/v1beta1
kind: SchemaRegistry
metadata:
  name: my-schema-registry
  namespace: kafka
spec:
  clusterRef:
    # Name of the KafkaCluster custom resource that represents the Kafka cluster this Schema Registry instance will connect to
    name: kafka
  # The port Schema Registry listens on for API requests (default: 8081)
  servicePort: 8081
  # Labels to be applied to the Schema Registry pod
  podLabels:
  # Annotations to be applied to the Schema Registry pod
  podAnnotations:
  # Annotations to be applied to the service that exposes the Schema Registry API on port `ServicePort`
  serviceAnnotations:
  # Labels to be applied to the service that exposes the Schema Registry API on port `ServicePort`
  serviceLabels:
  # Service account for the Schema Registry pod
  serviceAccountName:
  # Description of compute resource requirements
  #
  # requests:
  #   cpu: 200m
  #   memory: 800Mi
  # limits:
  #   cpu: 1
  #   memory: 1.2Gi
  resources:
  # https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#nodeselector
  nodeSelector:
  # https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#affinity-and-anti-affinity
  affinity:
  # https://kubernetes.io/docs/concepts/scheduling-eviction/taint-and-toleration/
  tolerations:
  # Minimum number of replicas (default: 1)
  minReplicas: 1
  # Maximum number of replicas the Horizontal Pod Autoscaler can scale up to (default: 5)
  maxReplicas: 5
  # Controls whether mTLS is enforced between Schema Registry and client applications
  # as well as between Schema Registry instances
  # (default: true)
  MTLS: true
  # Heap settings for Schema Registry (default: -Xms512M -Xmx1024M)
  heapOpts: -Xms512M -Xmx1024M
  # Defines the config values for Schema Registry in the form of key-value pairs
  schemaRegistryConfig:

There are several SchemaRegistry configurations that are computed and maintained by Supertubes, and thus cannot be overridden by end users:

- host.name

- listeners

- kafkastore.bootstrap.servers

- kafkastore.group.id

- schema.registry.group.id

- master.eligibility (always true)

- kafkastore.topic
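
Because these keys are owned by Supertubes, it can be handy to check a desired schemaRegistryConfig before applying it. The Python sketch below is a hypothetical pre-flight helper: the names MANAGED_KEYS and find_managed_overrides are introduced here for illustration and are not part of Supertubes. It only mirrors the list above and makes no claim about how Supertubes itself treats such keys.

```python
# Keys that, according to the list above, Supertubes computes and maintains.
MANAGED_KEYS = frozenset({
    "host.name",
    "listeners",
    "kafkastore.bootstrap.servers",
    "kafkastore.group.id",
    "schema.registry.group.id",
    "master.eligibility",
    "kafkastore.topic",
})


def find_managed_overrides(schema_registry_config):
    """Return, sorted, the keys of the given mapping that Supertubes manages.

    Hypothetical helper for illustration only; not a Supertubes API.
    """
    return sorted(key for key in schema_registry_config if key in MANAGED_KEYS)


if __name__ == "__main__":
    desired = {
        "listeners": "http://0.0.0.0:9999",      # managed by Supertubes
        "schema.compatibility.level": "FORWARD",  # example user setting
    }
    # Prints the keys that should be dropped from schemaRegistryConfig.
    print(find_managed_overrides(desired))
```

An empty result means none of the supplied keys collide with the ones Supertubes maintains.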

Schema Registry API endpoints

The deployed Schema Registry instances are reachable at the schema-registry-svc-<schema-registry-name>.<namespace>.svc:<servicePort> endpoint from within the Kubernetes cluster.

Client applications connecting from outside the Kubernetes cluster can reach Schema Registry instances at the public IP address of the schema-registry-meshgateway-<schema-registry-name> LoadBalancer-type service.

This public endpoint is made available when the Kafka cluster that the Schema Registry is bound to becomes exposed externally.
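
The naming patterns above can be captured in a couple of small helpers. This is a minimal Python sketch that only formats the names as described in this section; the function names are introduced here for illustration, and the default port of 8081 is taken from the servicePort default of the SchemaRegistry custom resource.

```python
def in_cluster_endpoint(name, namespace, service_port=8081):
    """Build the in-cluster Schema Registry endpoint (host:port)."""
    return f"schema-registry-svc-{name}.{namespace}.svc:{service_port}"


def external_gateway_service(name):
    """Build the name of the LoadBalancer-type service used for external access."""
    return f"schema-registry-meshgateway-{name}"


if __name__ == "__main__":
    # A SchemaRegistry resource named "my-schema-registry" in namespace "kafka".
    print(in_cluster_endpoint("my-schema-registry", "kafka"))
    print(external_gateway_service("my-schema-registry"))
```

The public IP address itself is assigned to the LoadBalancer service by the cluster's cloud provider, so it has to be looked up on the service rather than computed.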

Supertubes uses and heavily relies on Envoy, which provides a variety of methods for securely exposing Kafka clusters with Kubernetes-native ACL mechanisms.

Security

All participants that connect to Schema Registry are authenticated using mTLS by default.

This can be disabled via the MTLS field of the SchemaRegistry custom resource.

Schema Registry Kafka ACLs

When ACLs are enabled in the Kafka cluster that a Schema Registry is bound to, Supertubes automatically creates the Kafka ACLs that Schema Registry requires.

Managing schemas declaratively

By default, client applications try to register new schemas automatically whenever they produce new messages to a topic. This behavior comes in handy during development, but should be avoided in production. Aside from registering schemas through Schema Registry’s API endpoint, Supertubes also makes it possible to register schemas declaratively, through Kubernetes ConfigMaps that hold schema definitions. Supertubes looks for ConfigMaps with specific labels, reads the schema definitions from them, and registers them in the Schema Registry running in that namespace. This is very much in line with today’s GitOps and Configuration as Code trends.

apiVersion: v1
kind: ConfigMap
metadata:
  name: my-topic-value-schema
  labels:
    schema-registry.banzaicloud.io/name: my-schema-registry
    schema-registry.banzaicloud.io/subject: my-topic-value
data:
  schema.json: |-
    {
      "namespace": "io.examples",
      "type": "record",
      "name": "Payment",
      "fields": [
        {
          "name": "id",
          "type": "string"
        },
        {
          "name": "amount",
          "type": "double"
        }
      ]
    }

Notice the two labels on the ConfigMap:

- schema-registry.banzaicloud.io/name - the name of the SchemaRegistry custom resource that represents the Schema Registry deployment the schema should be registered in

- schema-registry.banzaicloud.io/subject - the name of the subject the schema should be registered under

The schema specification should be under the schema.json key in the ConfigMap.

Any update made to the schema specification in the ConfigMap results in a new version of the underlying schema in Schema Registry.

When the ConfigMap is deleted, all versions relating to the corresponding schema are deleted from their Schema Registry.
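
When many schemas are kept in version control, such ConfigMaps can also be generated rather than written by hand. The following Python sketch shows one possible way to do that, under a few assumptions: the helper name schema_configmap is hypothetical, the manifest is emitted as JSON (which kubectl accepts alongside YAML), and the label keys and the schema.json data key are the ones described above.

```python
import json

# Label keys described in this section.
NAME_LABEL = "schema-registry.banzaicloud.io/name"
SUBJECT_LABEL = "schema-registry.banzaicloud.io/subject"


def schema_configmap(configmap_name, registry_name, subject, schema):
    """Build a ConfigMap manifest (as a dict) that holds one schema definition.

    Hypothetical helper for illustration only; not part of Supertubes.
    """
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {
            "name": configmap_name,
            "labels": {NAME_LABEL: registry_name, SUBJECT_LABEL: subject},
        },
        # The schema specification is stored as text under the schema.json key.
        "data": {"schema.json": json.dumps(schema, indent=2)},
    }


if __name__ == "__main__":
    payment = {
        "namespace": "io.examples",
        "type": "record",
        "name": "Payment",
        "fields": [
            {"name": "id", "type": "string"},
            {"name": "amount", "type": "double"},
        ],
    }
    manifest = schema_configmap(
        "my-topic-value-schema", "my-schema-registry", "my-topic-value", payment
    )
    # The printed JSON can be saved to a file and applied with kubectl.
    print(json.dumps(manifest, indent=2))
```

Generating the manifest from a schema file keeps the schema itself as the single source of truth, which fits the GitOps approach mentioned earlier.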

Showtime - How to utilize Schema Registry from a Spring Boot application

Setup

- Create a Kubernetes cluster. You can create a Kubernetes cluster with the Banzai Cloud Pipeline platform on-prem, or on any one of five different cloud providers.

- Point KUBECONFIG to your cluster.

- Install Supertubes. Register for an evaluation version and install the CLI tool.

As you might know, Cisco has recently acquired Banzai Cloud. We are currently in a transitional period and are moving our infrastructure, so evaluation downloads are temporarily suspended. Contact us to discuss your needs and requirements, and to organize a live demo.

This step usually takes a few minutes, and installs a two-broker Kafka cluster and all its components.

Enable ACL and external access

The demo cluster doesn’t have ACLs or external access points enabled. If you are interested in how Supertubes lets you handle Kafka ACLs the declarative way, please read our blog post on the subject.

Enable both by running:

supertubes cluster update --namespace kafka --kafka-cluster kafka --kubeconfig <path-to-kubeconfig> -f -<<EOF
apiVersion: kafka.banzaicloud.io/v1beta1
kind: KafkaCluster
spec:
  istioIngressConfig:
    gatewayConfig:
      mode: PASSTHROUGH
  readOnlyConfig: |
    auto.create.topics.enable=false
    offsets.topic.replication.factor=2
    authorizer.class.name=kafka.security.authorizer.AclAuthorizer
    allow.everyone.if.no.acl.found=false
  listenersConfig:
    externalListeners:
      - type: "plaintext"
        name: "external"
        externalStartingPort: 19090
        containerPort: 9094
EOF

Wait until Supertubes finishes the rolling upgrade on the managed Kafka cluster.

supertubes cluster get kafka

Register your schema

Create the required schema by installing the following ConfigMap:

kubectl create -n kafka -f - <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: spring-boot-schema
  labels:
    schema-registry.banzaicloud.io/name: kafka
    schema-registry.banzaicloud.io/subject: users-value
data:
  schema.json: |-
    {
      "namespace": "io.banzaicloud.blog",
      "type": "record",
      "name": "User",
      "fields": [
        {
          "name": "name",
          "type": "string",
          "avro.java.string": "String"
        },
        {
          "name": "age",
          "type": "int"
        }
      ]
    }
EOF
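
One way to check that the schema from the ConfigMap has reached Schema Registry is to ask its REST API for the latest version registered under the subject. The Python sketch below uses only the standard library and the Schema Registry endpoint GET /subjects/{subject}/versions/latest. The function names are introduced here for illustration, and the sketch assumes it runs in a pod inside the cluster that can reach the registry over a plain http:// URL.

```python
import json
import urllib.parse
import urllib.request


def latest_version_url(base_url, subject):
    """Build the REST URL of the latest schema version registered for a subject."""
    encoded_subject = urllib.parse.quote(subject, safe="")
    return f"{base_url.rstrip('/')}/subjects/{encoded_subject}/versions/latest"


def parse_schema_response(body):
    """Extract (version, schema) from the JSON body returned by Schema Registry.

    The "schema" field of the response is itself a JSON-encoded string,
    so it is decoded a second time to get the Avro schema as a dict.
    """
    payload = json.loads(body)
    return payload["version"], json.loads(payload["schema"])


def fetch_latest_schema(base_url, subject, timeout=10):
    """Fetch and decode the latest schema for a subject (needs network access)."""
    with urllib.request.urlopen(latest_version_url(base_url, subject), timeout=timeout) as resp:
        return parse_schema_response(resp.read())


# Example, to be run from inside the cluster:
#   base = "http://schema-registry-svc-kafka.kafka.svc.cluster.local:8081"
#   version, schema = fetch_latest_schema(base, "users-value")
#   print(version, schema["name"])
```

If the subject has not been registered yet, the request fails with an HTTP error, which urllib raises as urllib.error.HTTPError.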

Run the Spring Boot application

We prepared a Spring Boot application, which is basically a producer/consumer REST service for Kafka. It uses Schema Registry to store its Avro schema.

The source code of the application is available on GitHub.

Install the Spring Boot application from a pre-built Docker image. Create the pod using the following command:

kubectl create -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: kafka-springboot
  namespace: kafka
  labels:
    app: spring-boot
spec:
  serviceAccountName: kafka-cluster
  containers:
  - name: kafka-springboot
    image: "banzaiclouddev/springboot-kafka-avro:v1"
    ports:
    - containerPort: 9080
      name: spring-boot
      protocol: TCP
EOF

Expose the Spring Boot application through a service:

kubectl create -f - <<EOF
apiVersion: v1
kind: Service
metadata:
  name: kafka-springboot
  namespace: kafka
spec:
  ports:
  - name: tcp-spring
    port: 9080
    protocol: TCP
    targetPort: 9080
  selector:
    app: spring-boot
  type: ClusterIP
EOF

When the application is up and running, install a test environment:

kubectl create -n kafka -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: springboot-test
spec:
  containers:
  - name: kafka-test
    image: confluentinc/cp-schema-registry:5.5.0
    # Just spin & wait forever
    command: [ "/bin/bash", "-c", "--" ]
    args: [ "while true; do sleep 3000; done;" ]
EOF

Exec into the container and install curl:

kubectl exec -it -n kafka springboot-test -c kafka-test -- bash
# Install command line tool curl
apt update && apt install curl -y

Produce some messages to the Spring Boot application using curl:

curl -X POST -d 'name=banzaicloud&age=2' "kafka-springboot.kafka.svc.cluster.local:9080/user/publish"
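
To publish more than one record, the same form-encoded POST can be scripted. The Python sketch below builds a request equivalent to the curl command above using only the standard library. The helper name build_publish_request is hypothetical, the /user/publish path and the name and age fields are taken from that command, and an explicit http:// scheme is assumed because urllib requires one.

```python
import urllib.parse
import urllib.request


def build_publish_request(base_url, name, age):
    """Build a form-encoded POST request for the application's /user/publish endpoint."""
    form = urllib.parse.urlencode({"name": name, "age": age}).encode("ascii")
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/user/publish",
        data=form,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# Example, to be run from a pod inside the cluster:
#   base = "http://kafka-springboot.kafka.svc.cluster.local:9080"
#   for user, age in [("banzaicloud", 2), ("supertubes", 1)]:
#       with urllib.request.urlopen(build_publish_request(base, user, age)) as resp:
#           print(resp.status)
```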

Read back the messages that the Spring Boot application published in the previous step:

kafka-avro-console-consumer --bootstrap-server kafka-all-broker:29092 --topic users --from-beginning --property schema.registry.url=http://schema-registry-svc-kafka.kafka.svc.cluster.local:8081

Note: Using a legacy app which runs outside of Kubernetes? Don’t worry, we’ve got you covered. Check out this post on how to set up a KafkaUser that represents an external application, so that it’s authenticated over mTLS.

Summary

Supertubes makes using a Kafka client easy. We would like to emphasize that all of the clients automatically communicate over mTLS and are subject to Kafka ACLs. If you take a closer look at the client containers, you might notice that this happens without any certificate generation or configuration on your part, just through Supertubes’ magic.

Supertubes is built on top of several Envoy proxies and sidecars, managed by the lightweight Banzai Cloud Istio operator control plane.