Banzai Pipeline, or simply “Pipeline”, is a tabletop reef break located in Hawaii, on Oahu’s North Shore. It is the most famous and infamous reef on the planet, and serves as the benchmark by which all other surf breaks are measured.

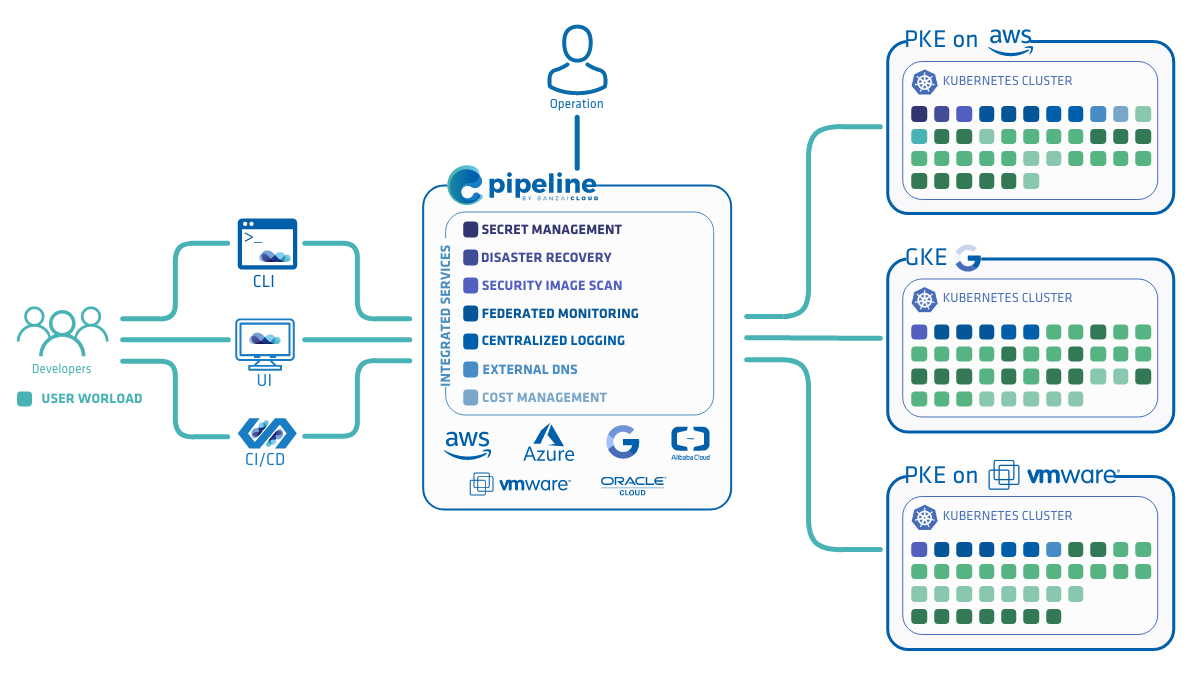

Pipeline is a PaaS with a built-in CI/CD engine to deploy cloud native microservices to a public cloud or on-premises. It simplifies and abstracts all the details of provisioning cloud infrastructure, installing or reusing a Kubernetes cluster, and deploying an application.

Today we are pleased to announce the second release of Pipeline - 0.2.0. Alongside a battery of new features and fixes, the main focus of this release is support for Microsoft Azure AKS, which allows easy deployment of applications on Azure-managed Kubernetes. Pipeline provides end-to-end support for deploying, monitoring and autoscaling cloud native applications in minutes, starting with a GitHub commit hook and using a fully customizable CI/CD workflow, now available for the first time on Azure.

Note: The Pipeline CI/CD module mentioned in this post is outdated and no longer available. You can integrate Pipeline with your CI/CD solution using the Pipeline API. Contact us for details.

tl;dr:

- Pipeline now supports end-to-end CI/CD pipelines and cluster/application deployments on Microsoft Azure AKS

- Banzai Cloud has open-sourced a Microsoft Azure AKS Golang SDK/binding

- Pipeline control plane is now available as an ARM template

- A RESTful API provisions the AKS cluster and deploys Helm charts

- OAuth2-based authentication, with JWT access tokens now stored in Vault (this is in beta)

- Support for multiple cloud providers such as Amazon EC2, Azure AKS and Google GKE (also in beta)

- Cloud native deployments of Spark and Zeppelin (natively scheduled by k8s), Kafka (on etcd instead of Zookeeper) and Java applications (using cgroups)

- The addition of new services such as TensorFlow, JupyterHub, TiDB and Spark History Server

New cloud provider support - Microsoft Azure AKS

While adding support for Azure AKS in Pipeline we faced various challenges, which we detailed in this blog post. For now, note that AKS is in preview mode. These challenges gave us a good opportunity to master AKS and make Pipeline as robust as possible when dealing with AKS-related calls. As a side effect, we open-sourced a Golang binding/client for Azure AKS, since one is currently missing from the Azure SDK. The Pipeline control plane is now available as a Microsoft Azure Resource Manager (ARM) template and, once AKS is GA, it will be made available on the Azure Marketplace.

We strongly believe that managed Kubernetes services are the future, and most of our investment in cloud neutrality and provisioning is built on managed Kubernetes services, both in the cloud (GKE, OCI and ACS) and on-premise.

Services

Below is the list of services run by the control plane. Note that this is not an exhaustive list: there are other low-level infrastructure services running inside k8s, like Tiller, that Pipeline speaks to, as well as other beta services like aggregated log collectors, Helm chart orchestrators, namespace operators and K8S watchers/informers.

| Service | Technology stack | Type | Remarks |
|---|---|---|---|
| Monitoring | Prometheus | Full vertical and horizontal stack monitoring for AWS, AKS, K8S, and deployed microservices | Default node exporters and push gateways are deployed when needed |
| Alerting | Prometheus | Default alerts for infra and apps, customizable node exporters and push gateways | Correlated alerts across the stack, collected for model build |
| Dashboards | Grafana | AWS, AKS, K8S, app deployment specific dashboards | Dashboards are based on spotguides, e.g. a Spark deployment contains all relevant metrics, and differs from a Zeppelin or JavaOne deployment |
| CI/CD | Drone | AWS, AKS, K8S, microservices | Vendor independent, can be used with CircleCI or Travis |
| Pipeline plugins | Golang, Docker | AWS, AKS, K8S, Spark, Zeppelin, Kafka, Java, Golang | Extensible, custom plugins are built in Go based on an interface |
| WebHooks | GitHub | Default GitHub web hooks | GitHub marketplace placement and GitLab support coming soon |
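
The table notes that custom plugins are built in Go based on an interface. The post does not show that interface, so the following is only a minimal sketch of the idea. The names `Plugin`, `Registry` and `deployPlugin`, and the `app` parameter, are hypothetical and invented for illustration; they are not Pipeline's actual API.

```go
package main

import (
	"errors"
	"fmt"
)

// Plugin is a HYPOTHETICAL interface for a workflow-step plugin.
// Pipeline's real plugin interface is not shown in this post.
type Plugin interface {
	// Name returns the step name used to look the plugin up.
	Name() string
	// Run executes the step with string parameters and returns a status message.
	Run(params map[string]string) (string, error)
}

// deployPlugin is an illustrative plugin that only formats a message;
// a real plugin would talk to a cluster instead.
type deployPlugin struct{}

func (deployPlugin) Name() string { return "deploy" }

func (deployPlugin) Run(params map[string]string) (string, error) {
	app, ok := params["app"]
	if !ok || app == "" {
		return "", errors.New("deploy: missing required parameter \"app\"")
	}
	return "deploying " + app, nil
}

// Registry maps step names to plugin implementations.
type Registry map[string]Plugin

// Register adds a plugin under its own name, replacing any earlier one.
func (r Registry) Register(p Plugin) { r[p.Name()] = p }

// Run looks up a plugin by name and executes it.
func (r Registry) Run(name string, params map[string]string) (string, error) {
	p, ok := r[name]
	if !ok {
		return "", fmt.Errorf("unknown plugin %q", name)
	}
	return p.Run(params)
}

func main() {
	reg := Registry{}
	reg.Register(deployPlugin{})

	msg, err := reg.Run("deploy", map[string]string{"app": "demo"})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(msg)
}
```

The point of such a design is that a new workflow step only has to satisfy the interface and register itself; the caller never needs to know which concrete plugin it is running.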

Pipeline PaaS release components - DIY, be your own PaaS vendor

The components of Pipeline have been reworked to support AKS, to streamline the incorporation of new cloud providers (such as GKE), and to give every provider the same level of automation and ease of enablement. Below are the building blocks of this release.

| Component | Source code |
|---|---|
| Pipeline API | https://github.com/banzaicloud/pipeline/releases/tag/0.2.0 |
| Control plane CloudFormation template | https://github.com/banzaicloud/pipeline-cp-launcher/releases/tag/0.2.0 |
| Cluster images | https://github.com/banzaicloud/pipeline-cluster-images/releases/tag/0.2.0 |
| Control plane images | https://github.com/banzaicloud/pipeline-cp-images/releases/tag/0.2.0 |
| Banzai Charts | https://github.com/banzaicloud/banzai-charts/releases/tag/0.2.0 |
| Pipeline CI/CD plugin | https://github.com/banzaicloud/drone-plugin-pipeline-client/releases/tag/0.2.0 |
| Kubernetes CI/CD plugin | https://github.com/banzaicloud/drone-plugin-k8s-proxy/releases/tag/0.2.0 |
| Zeppelin CI/CD plugin | https://github.com/banzaicloud/drone-plugin-zeppelin-client/releases/tag/0.2.0 |
| Spark CI/CD plugin | https://github.com/banzaicloud/drone-plugin-spark-submit-k8s/releases/tag/0.2.0 |
| HTPassword generator for ingress | https://github.com/banzaicloud/htpasswd-gen/releases/tag/0.2.0 |
| Spark on K8S | https://github.com/banzaicloud/spark/tree/branch-2.2-kubernetes |
| Zeppelin on K8S | https://github.com/banzaicloud/zeppelin/tree/spark-interpreter-k8s |
| TensorFlow on K8S | https://github.com/banzaicloud/tensorflow-models |
| TiDB on K8S | https://github.com/banzaicloud/banzai-charts/tree/master/incubator/tidb |
| Spark History Server | https://github.com/banzaicloud/banzai-charts/tree/master/stable/spark-hs |

Pipeline PaaS release - the hosted version, aka the next generation of Heroku/CloudFoundry

The list above shows how many building blocks it takes to host your own PaaS and become your own microservice provider. It also illustrates the level of work and maintenance, including HA deployments and Kubernetes expertise, that’s required to run these components.

We are moving towards a hosted service that will host, deploy, maintain, patch and support all these services, with the end goal that our users should never know, be exposed to, or care about the underlying systems. At the end of the day your focus should be on writing applications, without having to worry about whether they are built, deployed and automatically operated according to your company’s SLA rules and configured CI/CD workflow.

Supported flows

Once a control plane is up and running (please check the installation guide), you can set up a fully customizable CI/CD workflow by placing a .pipeline.yml file under source control alongside your project. This is similar to commercial CI systems like CircleCI or Travis. However, instead of relying on those systems (though that is possible), the workflow uses Pipeline’s own CI/CD system, with plugins for workflow steps such as:

- clone - clones a GH repository inside a k8s cluster (uses PVC)

- remote_checkout - checks out a GH repository inside a k8s cluster (uses PVC)

- cluster - provisions, updates, reuses or deletes a cloud infrastructure and Kubernetes cluster, and deploys a runtime required for the spotguide (e.g. if it’s Spark then it understands pre-requisites like RSS and executors running as k8s daemon sets)

- remote_build - builds an application inside a k8s cluster based on the spotguide

- run - runs an application on a runtime required by the spotguide
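
As a rough illustration of how a workflow built from these steps could be checked before it runs, here is a minimal Go sketch. Only the five step names come from the list above. The `validateWorkflow` helper, the idea of validating up front, and the step order used in `main` are assumptions made for the example; the sketch does not parse a real .pipeline.yml or reflect how Pipeline itself works.

```go
package main

import "fmt"

// knownSteps holds the workflow step plugins named in the post.
var knownSteps = map[string]bool{
	"clone":           true,
	"remote_checkout": true,
	"cluster":         true,
	"remote_build":    true,
	"run":             true,
}

// validateWorkflow is a HYPOTHETICAL helper: it rejects an empty workflow
// and reports the first step name that is not a known plugin.
func validateWorkflow(steps []string) error {
	if len(steps) == 0 {
		return fmt.Errorf("workflow has no steps")
	}
	for i, s := range steps {
		if !knownSteps[s] {
			return fmt.Errorf("step %d: unknown plugin %q", i, s)
		}
	}
	return nil
}

func main() {
	// The order below is an assumption chosen for illustration.
	wf := []string{"remote_checkout", "cluster", "remote_build", "run"}
	if err := validateWorkflow(wf); err != nil {
		fmt.Println("invalid workflow:", err)
		return
	}
	fmt.Println("workflow ok")
}
```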

Flows are backed by spotguides. Currently, we support the following:

- Java with cgroups support

- Apache Spark support

- Apache Zeppelin support

- TensorFlow support

- JupyterHub support

- TiDB support

- Fn, container native serverless platform support

- OpenFaaS, functions as a service support

A typical flow in the UI, which deploys the custom workflow described in the .pipeline.yml, looks like this.

What’s next

This is an early release that we’re handing over to a few beta testers across different industries, with an eye toward receiving feedback and allowing them to drive the direction and features of Pipeline’s next generation. We don’t recommend deploying production use cases to Pipeline yet, not because the system is too unstable to run the supported spotguides, but because the current release falls far short of what we at Banzai Cloud believe a next generation PaaS platform should look like. In the coming months we’ll add support for more cloud and managed k8s providers, deliver an end-to-end security model integrated with Kubernetes (based on OAuth2 tokens and Vault), and add a real service mesh to support throttling, canary releases and policy-driven ops, along with many other features, all of which will be available through the current REST API, UI and CLI. We’re also doing extensive work on Hollowtrees, with custom plugins for Pipeline spotguides and SLA policy-driven Kubernetes schedulers that support the resource management needs of your microservices.

Building a PaaS is a challenging yet rewarding engineering experience, which we are all excited about. We encourage you to take part in this open source engagement, and we’ll continue to provide detailed information in our blog in order to share the experience, allowing you to join in and even to drive the process of creation.

About Banzai Cloud Pipeline

Banzai Cloud’s Pipeline provides a platform for enterprises to develop, deploy, and scale container-based applications. It leverages best-of-breed cloud components, such as Kubernetes, to create a highly productive, yet flexible environment for developers and operations teams alike. Strong security measures — multiple authentication backends, fine-grained authorization, dynamic secret management, automated secure communications between components using TLS, vulnerability scans, static code analysis, CI/CD, and so on — are default features of the Pipeline platform.