If you are a frequent reader of our blog, or if you’ve been using the open source Koperator, you might already be familiar with Supertubes, our product that delivers Apache Kafka as a service on Kubernetes.

Check out Supertubes in action on your own clusters: register for an evaluation version and run a simple install command:

supertubes install -a --no-demo-cluster --kubeconfig <path-to-k8s-cluster-kubeconfig-file>

or read the documentation for details.

As you might know, Cisco has recently acquired Banzai Cloud. We are currently in a transitional period and are moving our infrastructure, and evaluation downloads are temporarily suspended. Contact us so we can discuss your needs and requirements, and organize a live demo.

Take a look at some of the Kafka features that we’ve automated and simplified through Supertubes and the Koperator, which we’ve already blogged about:

- Oh no! Yet another Kafka operator for Kubernetes

- Monitor and operate Kafka based on Prometheus metrics

- Kafka rack awareness on Kubernetes

- Running Apache Kafka over Istio - benchmark

- User authenticated and access controlled clusters with the Koperator

- Kafka rolling upgrade and dynamic configuration on Kubernetes

- Envoy protocol filter for Kafka, meshed

- Right-sizing Kafka clusters on Kubernetes

- Kafka disaster recovery on Kubernetes with CSI

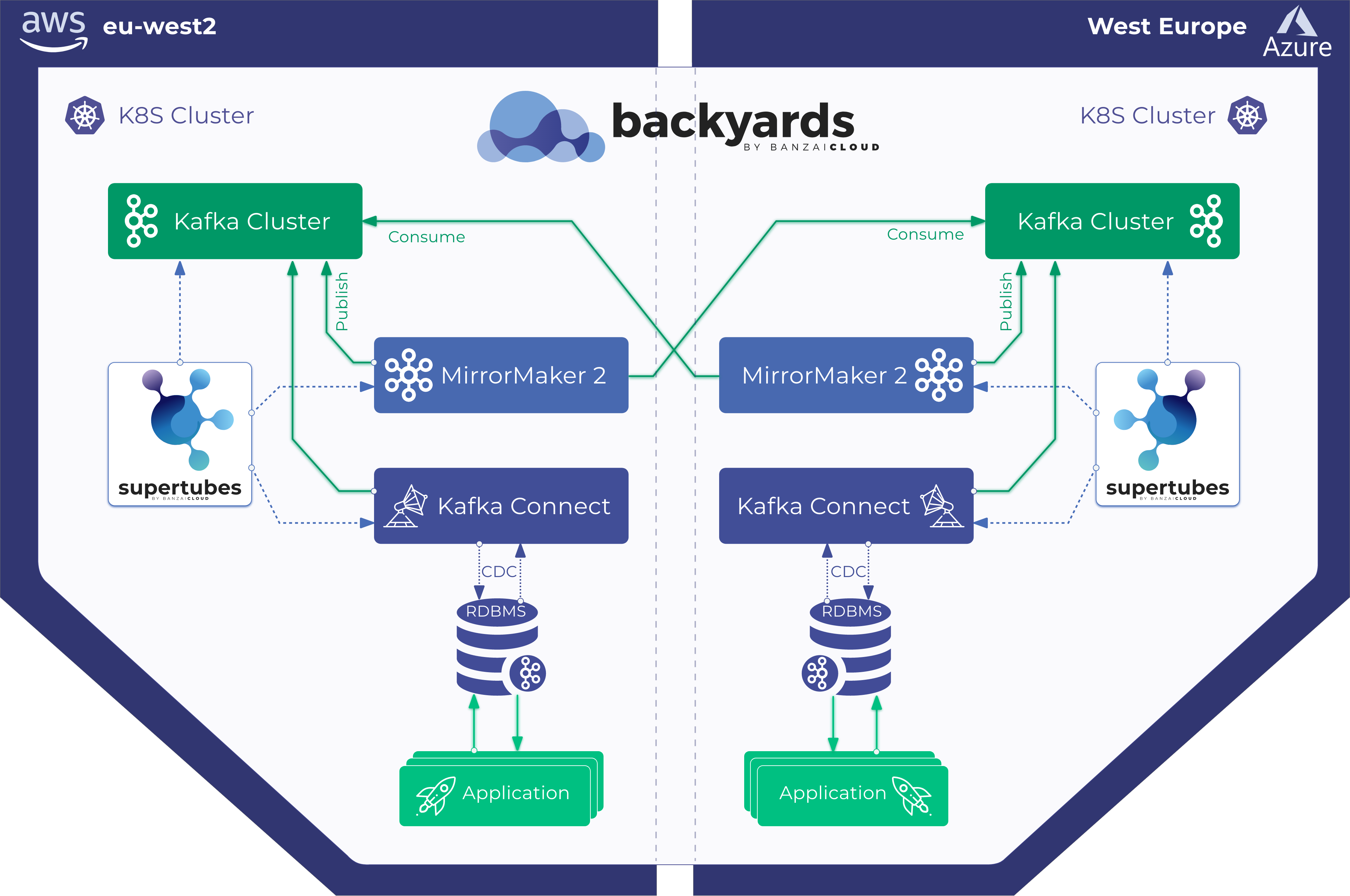

- Kafka disaster recovery on Kubernetes using MirrorMaker2

- The benefits of integrating Apache Kafka with Istio

- Kafka ACLs on Kubernetes over Istio mTLS

- Declarative deployment of Apache Kafka on Kubernetes

- Bringing Kafka ACLs to Kubernetes the declarative way

- Kafka Schema Registry on Kubernetes the declarative way

- Announcing Supertubes 1.0, with Kafka Connect and dashboard

The Supertubes ecosystem is built on a range of components which, together, deliver Supertubes’ feature set. The first Supertubes version was merely a CLI tool that deployed these components (see the list below), but we knew that deploying them in isolation would not be enough: they have to be configured properly and work together as intended. Certain components also have to be deployed in a specific order for everything to work as expected. Orchestrating the deployment and configuration of these components by hand is error-prone, especially when there are many of them.

tl;dr:

Supertubes is now a CLI, a control plane (including a Supertubes operator), and a backend. We added support for describing the desired state of the whole Kafka on Kubernetes ecosystem, including all the components and their configuration, in a declarative manner. The Supertubes operator takes care of the rest. We have also open-sourced a framework that makes it easier to write declarative installers.

Orchestrating harmony

Supertubes’ feature set is fairly large (you can read more under the Supertubes tag), and so is the number of components it employs under the hood:

- istio-operator to manage an Istio service mesh for Kafka and related components to take advantage of the benefits provided by Istio.

- zookeeper-operator to manage a Zookeeper cluster for Kafka clusters

- prometheus-operator to manage Prometheus and Grafana for monitoring

- Koperator to manage Kafka clusters

- Supertubes backend to provide functionalities like Kubernetes RBAC integration with Kafka ACL, disaster recovery using volume snapshots and MirrorMaker 2, management of monitoring artifacts (service monitors, pod monitors, Grafana dashboards), etc

- kafka-minion to provide Kafka consumer monitoring
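Because some of these components rely on others being in place first, an installer has to derive a deployment order. The following is a minimal sketch of that idea using a topological sort. The dependency edges are hypothetical and chosen only for illustration; they are not taken from the Supertubes implementation.

```python
from graphlib import TopologicalSorter  # available in Python 3.9+

# Hypothetical dependency map: component -> components that must be deployed first.
# These edges are assumptions for illustration, not the actual Supertubes ordering.
DEPENDS_ON = {
    "istio-operator": [],
    "prometheus-operator": [],
    "zookeeper-operator": ["istio-operator"],
    "koperator": ["istio-operator", "zookeeper-operator", "prometheus-operator"],
    "supertubes-backend": ["koperator"],
    "kafka-minion": ["koperator"],
}


def deployment_order(depends_on):
    """Return the components so that every component follows its dependencies."""
    return list(TopologicalSorter(depends_on).static_order())


order = deployment_order(DEPENDS_ON)
print(order)
```

A cycle in the map would raise `graphlib.CycleError`, which is a convenient way to catch a misconfigured dependency list early.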

The Supertubes CLI makes it easy to set up the entire Supertubes ecosystem by installing and configuring all of the components listed above. The user only has to run a single command:

supertubes install -a

The CLI offers a few ways to help you get started and begin experimenting with Supertubes. While the CLI commands are easy to use, they do not let you fine-tune each component. They also might not be a good fit for automation or CD tools.

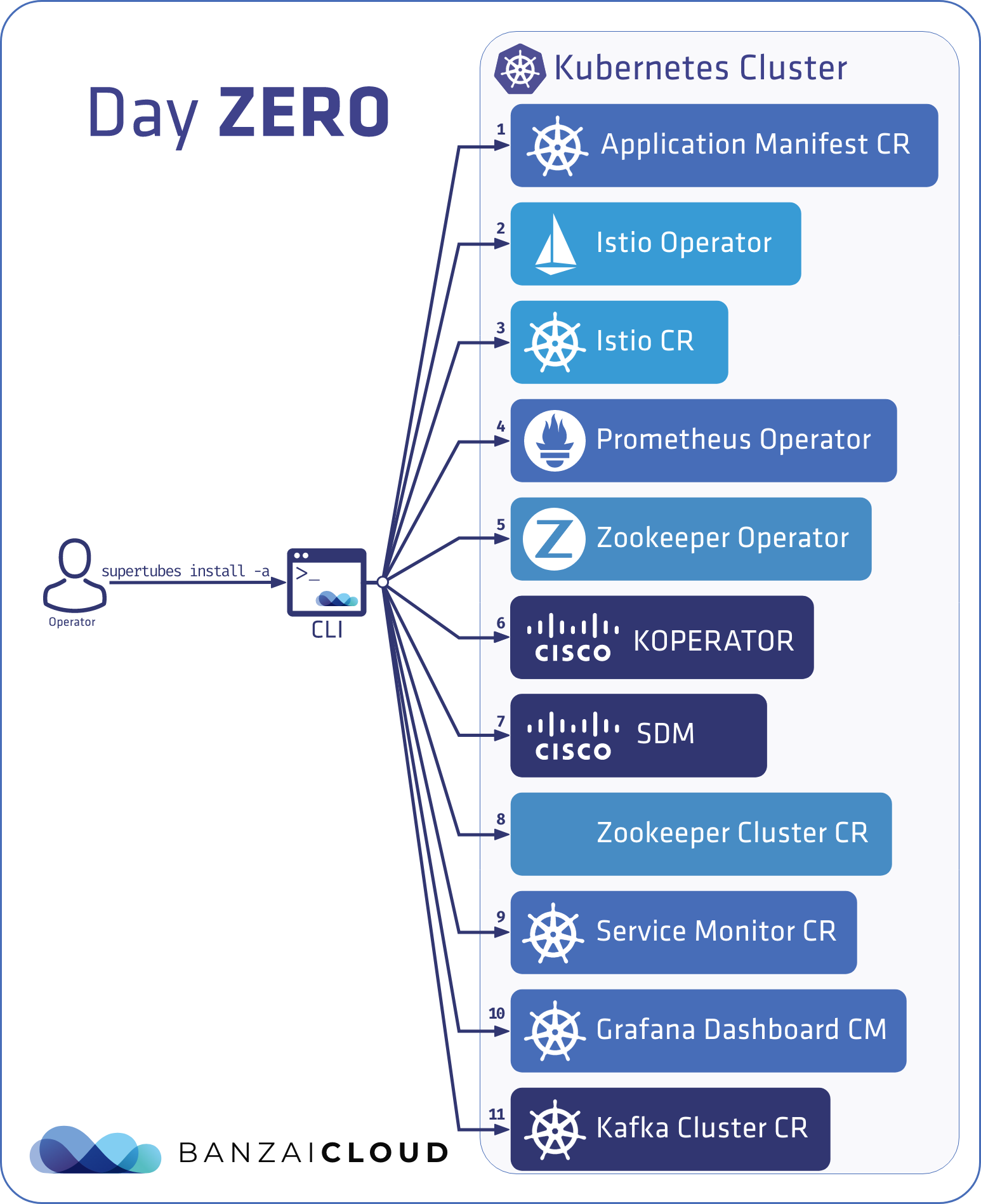

Day Zero - Set up Supertubes with imperative CLI commands

Even a user with little or no prior knowledge of Supertubes should be able to spin up the Kafka on Kubernetes system with all its features enabled and start experimenting with it.

After installing the CLI and running the

supertubes install -a

command, a Supertubes cluster is deployed with all features enabled, together with a Kafka demo cluster. Behind the scenes, the default settings used to deploy the components are collected into a custom resource called ApplicationManifest, and the reconcile flow deploys and configures the components according to these settings. The advantage of storing the settings in a custom resource is that we can later track which components were deployed or removed, and with what settings. It also makes it possible to run the reconcile flow in operator mode, which is useful for unattended deployment, removal, or reconfiguration of the components used by Supertubes (for example, from automation and CD tools).

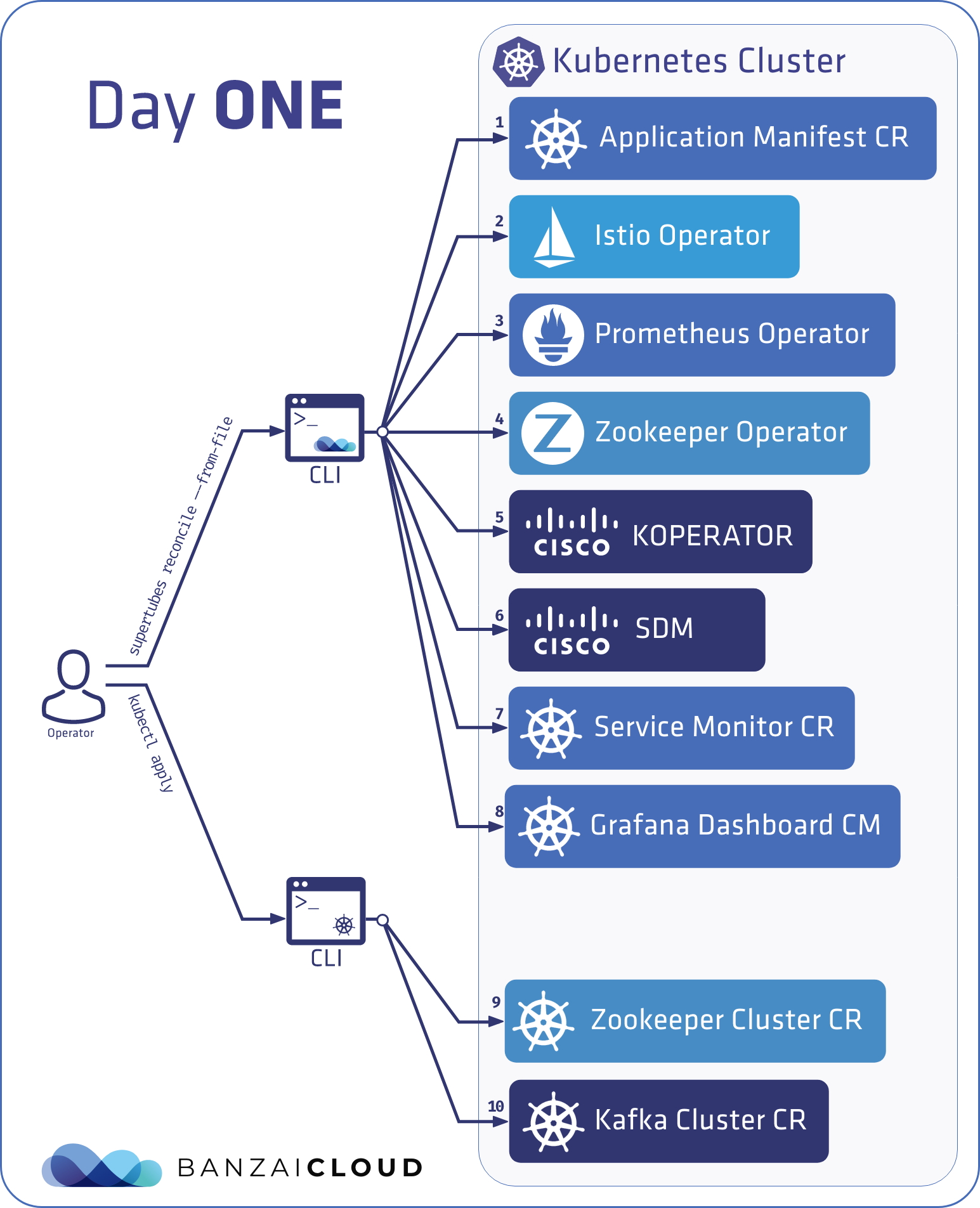

Day One - CLI declarative mode

Now let’s say the user is already familiar with Supertubes and wants to fine-tune the configuration of various components. The user provides the configuration settings for each service declaratively in a file and passes it to the CLI.

supertubes reconcile --from-file <path-to-file>

In this scenario, the ApplicationManifest is read from a file, allowing users to declaratively provide custom configuration for the various components involved.

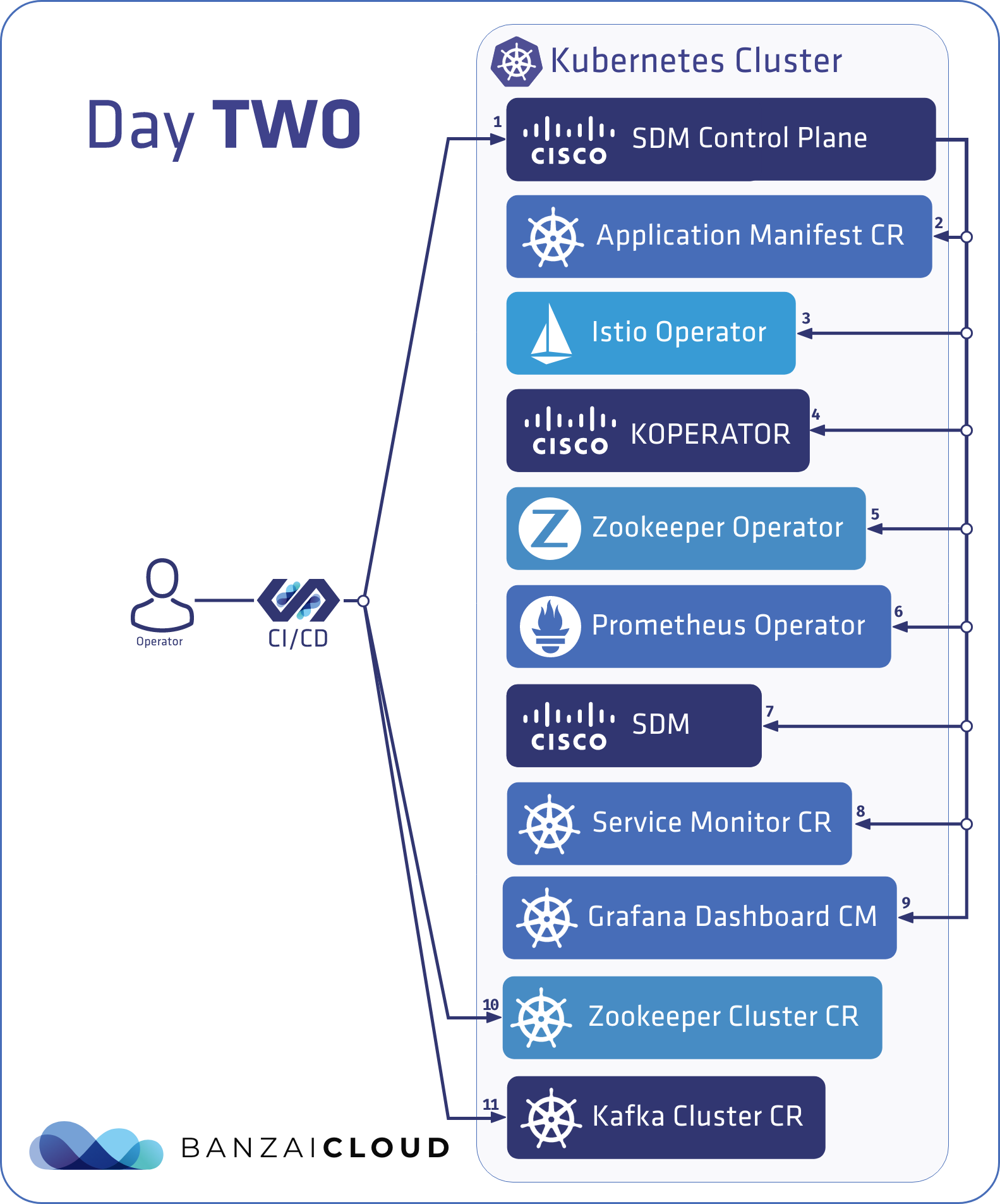

Day Two - Operator mode

Here, let’s assume that instead of the reconcile flow being executed on the user’s machine, it runs on the Kubernetes cluster as an operator that watches the ApplicationManifest custom resource. Any change made to the watched custom resource triggers the same reconcile flow as in the previously described modes. To use operator mode, deploy the Supertubes Control Plane into the Kubernetes cluster with Helm:

helm repo add banzaicloud-stable https://kubernetes-charts.banzaicloud.com/

helm install banzaicloud-stable/supertubes-control-plane
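All three modes run the same reconcile flow; only the place where it runs differs. As a rough sketch (not the actual Supertubes implementation), a reconcile pass can be thought of as comparing the desired settings in the ApplicationManifest with what is currently deployed, and deriving the actions to take. The function name `plan_actions` and the dictionary shapes are assumptions made for this example.

```python
from typing import Dict, List, Tuple


def plan_actions(desired: Dict[str, dict], deployed: Dict[str, dict]) -> List[Tuple[str, str]]:
    """Compare desired component settings with the deployed ones.

    desired:  component name -> {"enabled": bool, "values": dict}
    deployed: component name -> values the component currently runs with
    """
    actions = []
    for name, spec in desired.items():
        enabled = spec.get("enabled", True)  # components default to enabled
        values = spec.get("values", {})
        if enabled and name not in deployed:
            actions.append(("deploy", name))
        elif enabled and deployed[name] != values:
            actions.append(("reconfigure", name))
        elif not enabled and name in deployed:
            actions.append(("remove", name))
    return actions


# Hypothetical desired and observed state.
desired = {
    "istioOperator": {"enabled": True},
    "kafkaOperator": {"enabled": True},
    "prometheusOperator": {
        "enabled": True,
        "values": {"grafana": {"adminPassword": "my-new-password"}},
    },
    "kafkaMinion": {"enabled": False},
}
deployed = {
    "istioOperator": {},
    "prometheusOperator": {},
    "kafkaMinion": {},
}
print(plan_actions(desired, deployed))
```

In this model, running the function again once the deployed state matches the desired state yields an empty list, which is why the same flow can safely be triggered on every change to the custom resource.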

ApplicationManifest custom resource

The ApplicationManifest custom resource holds the desired component deployment and configuration settings.

    apiVersion: supertubes.banzaicloud.io/v1beta1
    kind: ApplicationManifest
    metadata:
      name: supertubes-apps
    spec:
      istioOperator: # Istio operator and Istio mesh related settings.
        enabled: true # Whether to deploy or remove Istio operator component. Defaults to true.
        # Whether to create an Istio mesh. Defaults to true. For an Istio mesh with
        # custom settings set this to false and create a https://github.com/banzaicloud/istio-operator/blob/release-1.5/config/samples/istio_v1beta1_istio.yaml custom resource with your settings.
        createDefaultMesh: true
        namespace: istio-system # The namespace to deploy Istio operator into. Defaults to istio-system.
        # Settings override in YAML format. For the list of overridable settings see https://github.com/banzaicloud/istio-operator/blob/release-1.5/deploy/charts/istio-operator/values.yaml
        valuesOverride:
      kafkaOperator: # Koperator related settings.
        enabled: true # Whether to deploy or remove Koperator component. Defaults to true.
        namespace: kafka # The namespace to deploy Koperator into. Defaults to kafka.
        valuesOverride:
      supertubes: # Supertubes backend related settings.
        enabled: true # Whether to deploy or remove Supertubes backend component. Defaults to true.
        namespace: supertubes-system # The namespace to deploy Supertubes backend into. Defaults to supertubes-system.
        # Settings override in YAML format. For the list of overridable settings see https://banzaicloud.com/docs/supertubes/overview/
        valuesOverride:
      monitoring: # Monitoring related settings
        grafanaDashboards: # Grafana dashboards related settings
          enabled: true # Whether to deploy ConfigMaps with Grafana dashboards for the components.
          label: # The label to apply to the Grafana dashboard ConfigMaps. It defaults to "app.kubernetes.io/supertubes_managed_grafana_dashboard"
        prometheusOperator: # Prometheus operator related settings
          enabled: true # Whether to deploy or remove Prometheus operator component. Defaults to true.
          namespace: supertubes-system # The namespace to deploy Prometheus operator into. Defaults to supertubes-system.
          # Settings override in YAML format. For the list of overridable settings see Prometheus operator Helm chart version 8.11.2
          valuesOverride:
      kafkaMinion: # Kafka Minion related settings
        enabled: true # Whether to deploy Kafka Minion for all Kafka clusters.
        # Settings override in YAML format. For the list of overridable settings see Kafka Minion Helm chart at https://github.com/banzaicloud/kafka-minion-helm-chart
        valuesOverride:
      zookeeperOperator: # Zookeeper operator and Zookeeper cluster related settings
        enabled: true # Whether to deploy or remove Zookeeper operator component. Defaults to true.
        # Whether to create a default 3 ensemble Zookeeper cluster. Defaults to true. For a Zookeeper cluster with custom settings set this to false and create a https://github.com/pravega/zookeeper-operator/tree/v0.2.6#deploy-a-sample-zookeeper-cluster custom resource with your settings.
        createDefaultCluster: true
        namespace: zookeeper # The namespace to deploy Zookeeper operator into. Defaults to zookeeper.
        # Settings override in YAML format. For the list of overridable settings see https://github.com/pravega/zookeeper-operator/blob/v0.2.6/charts/zookeeper-operator/values.yaml
        valuesOverride:

The settings applied to a component are the result of merging, in this order, the default settings, valuesOverride, and the managed settings.

The managed settings are settings that users cannot change, since setting them incorrectly may cause malfunctions. Let’s take Kafka Minion, which is used to gather consumer-related Kafka metrics, as an example.

    kafkaMinion:
      valuesOverride: |-
        resources:
          limits:
            memory: 256Mi
        kafka:
          brokers: kafka-1:9092,kafka-2:9092,kafka-3:9092

The value for kafka.brokers specified in valuesOverride has no effect, because Supertubes computes the broker list dynamically and updates it automatically whenever the list of brokers changes due to an upscale or downscale.
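This precedence can be illustrated with a small sketch. It assumes a recursive merge in which later layers win, with the managed settings applied last. The default values and the computed broker addresses below are invented for the example and are not Supertubes’ real defaults.

```python
def deep_merge(base: dict, overlay: dict) -> dict:
    """Return a new dict where values from `overlay` take precedence over `base`."""
    merged = dict(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = value
    return merged


def effective_settings(defaults: dict, values_override: dict, managed: dict) -> dict:
    """Merge the three layers; managed settings are applied last so they always win."""
    return deep_merge(deep_merge(defaults, values_override), managed)


# Hypothetical defaults for Kafka Minion.
defaults = {"resources": {"limits": {"memory": "128Mi", "cpu": "100m"}}}
# The valuesOverride from the Kafka Minion example above, as a dict.
values_override = {
    "resources": {"limits": {"memory": "256Mi"}},
    "kafka": {"brokers": "kafka-1:9092,kafka-2:9092,kafka-3:9092"},
}
# Hypothetical value computed from the current broker list.
managed = {"kafka": {"brokers": "kafka-0.kafka:9092,kafka-1.kafka:9092"}}

settings = effective_settings(defaults, values_override, managed)
print(settings)
```

The memory limit from valuesOverride replaces the default, while the user-supplied kafka.brokers is replaced by the managed value.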

You might be wondering why we allow the Koperator and the Supertubes backend to be removed, since most functionality is useless without these core components. The reason is that we want to be able to handle rare and unforeseen events that might require temporarily removing the core components.

The ApplicationManifest custom resource is the owner of the deployed components, so if it is removed, the Kubernetes garbage collector removes all deployed components.
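Kubernetes expresses this ownership through owner references in each object’s metadata, and the garbage collector deletes objects whose owners no longer exist. The toy model below illustrates the cascading effect. The object names are hypothetical, and the real garbage collector is considerably more involved.

```python
def cascade_delete(owners_by_object: dict, deleted: str) -> set:
    """Return the objects that remain after `deleted` and its dependents are removed.

    owners_by_object maps an object name to the list of objects that own it.
    An object is collected once all of its owners are gone.
    """
    gone = {deleted}
    changed = True
    while changed:
        changed = False
        for name, owners in owners_by_object.items():
            if name not in gone and owners and all(o in gone for o in owners):
                gone.add(name)
                changed = True
    return set(owners_by_object) - gone


# Hypothetical objects; an empty owner list means the object has no owner.
objects = {
    "applicationmanifest-sample": [],
    "kafka-operator-deployment": ["applicationmanifest-sample"],
    "kafka-operator-replicaset": ["kafka-operator-deployment"],
    "unrelated-configmap": [],
}
print(cascade_delete(objects, "applicationmanifest-sample"))
```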

Try it out

Let’s try out the operator mode through an example.

Install the Supertubes control plane

helm repo add banzaicloud-stable https://kubernetes-charts.banzaicloud.com/

helm install banzaicloud-stable/supertubes-control-plane

Deploy ApplicationManifest custom resource

Deploy an ApplicationManifest that enables all components:

    kubectl apply -f - <<EOF
    apiVersion: supertubes.banzaicloud.io/v1beta1
    kind: ApplicationManifest
    metadata:
      name: applicationmanifest-sample
    spec:
      istioOperator:
        enabled: true
        createDefaultMesh: true
        namespace: istio-system
      kafkaOperator:
        enabled: true
        namespace: kafka
      supertubes:
        enabled: true
        namespace: supertubes-system
      monitoring:
        grafanaDashboards:
          enabled: true
        prometheusOperator:
          enabled: true
          namespace: supertubes-system
      kafkaMinion:
        enabled: true
      zookeeperOperator:
        enabled: true
        createDefaultCluster: true
        namespace: zookeeper
    EOF

Check the status

The status of the ApplicationManifest provides information on the progress and status of the deployment of the enabled components, as well as the overall status of the reconcile flow:

    ...
    status:
      components:
        istioOperator:
          meshStatus: Available
          status: Reconciling
      status: Reconciling

    ...
    status:
      components:
        istioOperator:
          meshStatus: Available
          status: Available
        kafkaOperator:
          status: Available
        monitoring:
          status: Available
        supertubes:
          status: Available
        zookeeperOperator:
          clusterStatus: Available
          status: Available
      status: Succeeded
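Judging from the two snapshots above, the overall status stays at Reconciling until every enabled component reports Available. A minimal sketch of that aggregation rule follows. It is an assumption based on this output alone, and the real operator may track further states, such as failures.

```python
def overall_status(enabled_components, component_statuses):
    """Derive the top-level status from per-component statuses.

    A component that has not reported yet counts as still reconciling.
    """
    for name in enabled_components:
        if component_statuses.get(name) != "Available":
            return "Reconciling"
    return "Succeeded"


enabled = ["istioOperator", "kafkaOperator", "monitoring", "supertubes", "zookeeperOperator"]

# First snapshot: only the Istio operator has reported, and it is still reconciling.
in_progress = {"istioOperator": "Reconciling"}
# Second snapshot: every component is Available.
finished = {name: "Available" for name in enabled}

print(overall_status(enabled, in_progress))
print(overall_status(enabled, finished))
```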

Update the settings of one of the components

Let’s set a new password for Grafana:

    kubectl apply -f - <<EOF
    apiVersion: supertubes.banzaicloud.io/v1beta1
    kind: ApplicationManifest
    metadata:
      name: applicationmanifest-sample
    spec:
      istioOperator:
        enabled: true
        createDefaultMesh: true
        namespace: istio-system
      kafkaOperator:
        enabled: true
        namespace: kafka
      supertubes:
        enabled: true
        namespace: supertubes-system
      monitoring:
        grafanaDashboards:
          enabled: true
        prometheusOperator:
          enabled: true
          namespace: supertubes-system
          valuesOverride: |-
            grafana:
              adminPassword: my-new-password
      kafkaMinion:
        enabled: true
      zookeeperOperator:
        enabled: true
        createDefaultCluster: true
        namespace: zookeeper
    EOF

In the status section, we can see that the status of monitoring has changed to Reconciling:

    ...
    status:
      components:
        istioOperator:
          meshStatus: Available
          status: Available
        kafkaOperator:
          status: Available
        monitoring:
          status: Reconciling
        supertubes:
          status: Available
        zookeeperOperator:
          clusterStatus: Available
          status: Available
      status: Reconciling

Once the new configuration has been applied successfully, the status changes back to Available:

    ...
    status:
      components:
        istioOperator:
          meshStatus: Available
          status: Available
        kafkaOperator:
          status: Available
        monitoring:
          status: Available
        supertubes:
          status: Available
        zookeeperOperator:
          clusterStatus: Available
          status: Available
      status: Succeeded

Supertubes roadmap

Although Supertubes is already running in production, we are constantly working on improving it. A long list of features is already available (for example, complete Kafka ACL coverage and integration with Kubernetes RBAC), and the upcoming releases will add:

- Support for broker volume expansion (a problem we’ve seen our customers struggle with)

- An observability and management UI that leverages Istio telemetry data and offers the ability to drill down to the root cause

- Envoy protocol filter-based audits as an extension of the Envoy Kafka protocol filter

About Supertubes

Banzai Cloud Supertubes (Supertubes) is the automation tool for setting up and operating production-ready Apache Kafka clusters on Kubernetes, leveraging a Cloud-Native technology stack. Supertubes includes Zookeeper, the Koperator, Envoy, Istio and many other components that are installed, configured, and managed to operate a production-ready Kafka cluster on Kubernetes. Some of the key features are fine-grained broker configuration, scaling with rebalancing, graceful rolling upgrades, alert-based graceful scaling, monitoring, out-of-the-box mTLS with automatic certificate renewal, Kubernetes RBAC integration with Kafka ACLs, and multiple options for disaster recovery.