Security series:

- Authentication and authorization of Pipeline users with OAuth2 and Vault
- Dynamic credentials with Vault using Kubernetes Service Accounts
- Dynamic SSH with Vault and Pipeline
- Secure Kubernetes Deployments with Vault and Pipeline
- Policy enforcement on K8s with Pipeline
- The Vault swiss-army knife
- The Banzai Cloud Vault Operator
- Vault unseal flow with KMS
- Kubernetes secret management with Pipeline
- Container vulnerability scans with Pipeline
- Kubernetes API proxy with Pipeline

Pipeline is quickly moving towards its as-a-Service milestone, after which the Pipeline PaaS will be available to early adopters and as a hosted service (current deployments are all self-hosted). In a previous blog post we showcased how Pipeline uses OAuth2 to authenticate and authorize our Pipeline PaaS users through JWT tokens stored and leased by Vault. In this post we’re going to talk about static and dynamic credentials and their pros and cons, and show how we’ve managed to get rid of static, baked-in credentials with the help of Vault Dynamic Secrets and Kubernetes Service Accounts.

Secrets Engines in Vault

Before taking a deep dive into dynamic credentials, let’s discuss their building blocks in Vault, called secrets engines. Secrets engines are components that store, generate or encrypt data. The best way to think about them is as functions: a secrets engine is given a set of data, takes an action based on that data, and returns a result.

Some secrets engines simply store and read data, like the kv secrets engine or cubbyhole (a specialized, ephemeral kv store whose lifetime is tied to the TTL of the token that owns it). Other secrets engines, like aws and database, connect to external services that require authentication and generate dynamic credentials for them on demand. A third type of secrets engine neither stores data nor talks to any kind of backend; instead, it generates certificates (pki) or encrypts data (transit) in a stateless manner.
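To make the "data in, action, result out" view concrete, here is a minimal Go sketch of the simplest category, an engine that only stores and reads data. The `SecretsEngine` interface and the `kvEngine` type are hypothetical illustrations and are not Vault’s real plugin interface.

```go
package main

import "errors"

// SecretsEngine is a hypothetical, simplified interface: an engine receives
// an operation, a path and some data, acts on them, and returns a result.
// It is NOT Vault's real plugin interface.
type SecretsEngine interface {
	Handle(op, path string, data map[string]string) (map[string]string, error)
}

// kvEngine models the simplest category: it only stores and reads data.
type kvEngine struct {
	store map[string]map[string]string
}

func newKVEngine() *kvEngine {
	return &kvEngine{store: make(map[string]map[string]string)}
}

// Handle implements SecretsEngine for "write" and "read" operations.
func (e *kvEngine) Handle(op, path string, data map[string]string) (map[string]string, error) {
	switch op {
	case "write":
		// Store a copy so later changes to the caller's map do not leak in.
		cp := make(map[string]string, len(data))
		for k, v := range data {
			cp[k] = v
		}
		e.store[path] = cp
		return nil, nil
	case "read":
		v, ok := e.store[path]
		if !ok {
			return nil, errors.New("no secret at " + path)
		}
		return v, nil
	default:
		return nil, errors.New("unsupported operation: " + op)
	}
}
```

An engine like aws or database would implement the same shape, but its "read" would create a credential in an external system instead of returning stored data.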

Each secrets engine is mounted at a path in Vault. When a request arrives, Vault’s router forwards anything with the corresponding path prefix to that secrets engine; for further implementation details, see how this is accomplished in Vault’s router. For end users, secrets engines behave similarly to virtual filesystems such as FUSE, and anyone can extend Vault with new secrets engines by writing Vault plugins.
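The prefix routing described above can be sketched as a longest-prefix match over mount paths. `routeMount` is a hypothetical helper for illustration only; Vault’s own router is more involved, but the idea of selecting the matching mount and handing the rest of the path to its engine is the same.

```go
package main

import "strings"

// routeMount picks the longest mount path that prefixes the request path and
// returns it together with the remainder the mounted engine would receive.
// Hypothetical helper for illustration only.
func routeMount(mounts []string, path string) (mount, rest string, ok bool) {
	for _, m := range mounts {
		// If several mounts match, keep the longest (most specific) one.
		if strings.HasPrefix(path, m) && len(m) > len(mount) {
			mount, ok = m, true
		}
	}
	if !ok {
		return "", "", false
	}
	return mount, strings.TrimPrefix(path, mount), true
}
```

For example, with the mounts `cubbyhole/`, `secret/`, `sys/` and `database/`, a request for `database/creds/pipeline` is routed to the `database/` engine, which only sees `creds/pipeline`.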

Vault is easy to configure once you know how you want to use it, but to get started it ships with a development server that hides most of the configuration details, so let’s fire that up (this assumes you have the vault binary in your PATH):

```
➜ ~ vault server -dev &
➜ ~ export VAULT_ADDR=http://localhost:8200
```

Let’s check what kind of secrets engines are enabled by default:

```
➜ ~ vault secrets list
Path          Type         Description
----          ----         -----------
cubbyhole/    cubbyhole    per-token private secret storage
identity/     identity     identity store
secret/       kv           key/value secret storage
sys/          system       system endpoints used for control, policy and debugging
```

Of these four engines, cubbyhole, identity and sys are always enabled, while secret is mounted automatically because we are running the development server. Besides secret and cubbyhole, sys and identity serve Vault’s internal purposes.

Let’s enable the database secrets engine so that Vault can issue unique database credentials:

```
➜ ~ vault secrets enable database
Success! Enabled the database secrets engine at: database/
```

Dynamic database credentials

Connecting to a database almost always requires passwords or certificates, which have to be passed to the application code through configuration. We plan to use dynamic secrets with Pipeline and in virtually all of our supported applications and spotguides. First of all, handling credentials manually and storing them in config files and the like is not a secure solution. Second, we’ve often encountered issues when users generate credentials and pass them into our Helm charts during deployments. For these reasons, we will benefit greatly from using dynamic database credentials.

The advantages of using dynamic secrets are described in this excellent blog post by Armon Dadgar. I don’t think it’s necessary to repeat everything he said, but in a nutshell: to harden security, an application needs a dedicated credential for each service it requests. This credential belongs only to the requesting application and has a fixed expiry time. Because the credential is dedicated, it’s possible to track down which application accessed the service and when, and it’s easy to revoke the credential because it’s managed in a central place (Vault).

The HashiCorp blog post describes an excellent way to use dynamic secrets with applications that can’t integrate with Vault natively, via consul-template and envconsul. We chose a different path and integrated with Vault directly in code, instead of configuring another container to talk with Vault and pass down the secrets via configuration files or environment variables. That approach ultimately means the credential shows up in the filesystem, whereas in our case it stays in memory only.

Since Pipeline runs on Kubernetes, we can use Kubernetes Service Account-based authentication to first obtain a Vault token, which we later exchange for a MySQL credential (username/password) based on our configured Vault role.
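As a rough sketch of the first step, the pod reads its service account JWT and sends it, together with a role name, to Vault’s Kubernetes auth method. The sketch assumes the auth method is mounted at the default `kubernetes/` path; the helper names are hypothetical, and the code only builds the login request and parses the response, leaving the HTTP call itself to the caller.

```go
package main

import (
	"bytes"
	"encoding/json"
	"errors"
	"net/http"
	"os"
	"strings"
)

// Default location where Kubernetes mounts the service account JWT into a pod.
const serviceAccountTokenPath = "/var/run/secrets/kubernetes.io/serviceaccount/token"

// readServiceAccountToken loads the pod's service account JWT.
func readServiceAccountToken() (string, error) {
	b, err := os.ReadFile(serviceAccountTokenPath)
	if err != nil {
		return "", err
	}
	return string(bytes.TrimSpace(b)), nil
}

// newKubernetesLoginRequest builds a login request for Vault's Kubernetes
// auth method, assuming it is mounted at the default "kubernetes/" path.
func newKubernetesLoginRequest(vaultAddr, role, jwt string) (*http.Request, error) {
	payload, err := json.Marshal(map[string]string{"role": role, "jwt": jwt})
	if err != nil {
		return nil, err
	}
	url := strings.TrimSuffix(vaultAddr, "/") + "/v1/auth/kubernetes/login"
	req, err := http.NewRequest(http.MethodPost, url, bytes.NewReader(payload))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/json")
	return req, nil
}

// parseClientToken extracts the Vault token from a login response body.
func parseClientToken(body []byte) (string, error) {
	var resp struct {
		Auth *struct {
			ClientToken string `json:"client_token"`
		} `json:"auth"`
	}
	if err := json.Unmarshal(body, &resp); err != nil {
		return "", err
	}
	if resp.Auth == nil || resp.Auth.ClientToken == "" {
		return "", errors.New("response contains no client token")
	}
	return resp.Auth.ClientToken, nil
}
```

Inside a pod, one would pass the result of `readServiceAccountToken` to `newKubernetesLoginRequest`, send the request with `http.DefaultClient.Do`, and feed the response body to `parseClientToken`. The returned token is then used to read the database credential.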

Please see the diagram below for further details about this sequence of events:

A working example

You’ll have to configure Vault to access the MySQL database and define a role for Pipeline, which grants local access to the pipeline database:

Start a fresh MySQL instance on your machine:

```shell
docker run -it -p 3306:3306 --rm -e MYSQL_ROOT_PASSWORD=rootpassword mariadb
```

Create a database inside the MySQL instance:

```sql
CREATE DATABASE IF NOT EXISTS pipeline;
```

Configure the database secrets engine we enabled earlier:

```shell
vault write database/config/my-mysql-database \
    plugin_name=mysql-database-plugin \
    connection_url="root:rootpassword@tcp(127.0.0.1:3306)/" \
    allowed_roles="pipeline"

vault write database/roles/pipeline \
    db_name=my-mysql-database \
    creation_statements="GRANT ALL ON pipeline.* TO '{{name}}'@'localhost' IDENTIFIED BY '{{password}}';" \
    default_ttl="10m" \
    max_ttl="24h"
```

Let’s start by dissecting the DynamicSecretDataSource function in the bank-vaults library.

First of all, we have to create a Vault client:

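```go
import (
	// ...
	"github.com/banzaicloud/bank-vaults/vault"
	vaultapi "github.com/hashicorp/vault/api" // used for the lease renewer below
	"github.com/pkg/errors"                   // provides errors.Wrap
	// ...
)

// ...

// vaultRole is used both for Vault authentication and as the database role name.
vaultClient, err := vault.NewClient(vaultRole)
if err != nil {
	return "", errors.Wrap(err, "failed to establish vault connection")
}
```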

This Vault client differs from the stock Vault client in that it automatically detects if it’s running in Kubernetes and, if it is, gets a Vault token based on its defined role parameter, renewing the token automatically.
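How Bank-Vaults detects Kubernetes is not shown here. One common heuristic, sketched below under that assumption, is to look for the `KUBERNETES_SERVICE_HOST` environment variable that Kubernetes injects into containers, together with the mounted service account token. `runningInKubernetes` and `inCluster` are hypothetical names.

```go
package main

import "os"

// Default path of the service account JWT mounted into pods.
const saTokenPath = "/var/run/secrets/kubernetes.io/serviceaccount/token"

// runningInKubernetes is a hypothetical heuristic: the process is assumed to
// run in a pod if the Kubernetes service env var is set and the service
// account token is mounted. getenv and fileExists are injected for testing.
func runningInKubernetes(getenv func(string) string, fileExists func(string) bool) bool {
	return getenv("KUBERNETES_SERVICE_HOST") != "" && fileExists(saTokenPath)
}

// inCluster wires the heuristic to the real environment and filesystem.
func inCluster() bool {
	return runningInKubernetes(os.Getenv, func(p string) bool {
		_, err := os.Stat(p)
		return err == nil
	})
}
```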

Read the ephemeral database credential from Vault (this creates a “temporary” user in MySQL):

```go
// Reading database/creds/<role> makes Vault create a new, leased MySQL user.
vaultCredsEndpoint := "database/creds/" + vaultRole
secret, err := vaultClient.Vault().Logical().Read(vaultCredsEndpoint)
if err != nil {
	return "", errors.Wrap(err, "failed to read db credentials")
}
```
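The secret returned above carries a lease ID, a lease duration in seconds, and the generated `username` and `password` in its data. If you call Vault’s HTTP API directly instead of using the Go client, the JSON response can be decoded as in this sketch, which also assembles a go-sql-driver/mysql style DSN for the local setup described earlier. `dbCreds`, `parseDBCreds` and `mysqlDSN` are hypothetical helpers, and the address and database name are assumptions.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// dbCreds holds the fields we need from a database/creds/<role> response.
type dbCreds struct {
	LeaseID       string `json:"lease_id"`
	LeaseDuration int    `json:"lease_duration"` // in seconds
	Renewable     bool   `json:"renewable"`
	Data          struct {
		Username string `json:"username"`
		Password string `json:"password"`
	} `json:"data"`
}

// parseDBCreds decodes the raw JSON response and checks that it carries a credential.
func parseDBCreds(body []byte) (*dbCreds, error) {
	var c dbCreds
	if err := json.Unmarshal(body, &c); err != nil {
		return nil, err
	}
	if c.Data.Username == "" || c.Data.Password == "" {
		return nil, errors.New("response has no username/password")
	}
	return &c, nil
}

// mysqlDSN builds a go-sql-driver/mysql style data source name.
func mysqlDSN(c *dbCreds, addr, dbName string) string {
	return fmt.Sprintf("%s:%s@tcp(%s)/%s", c.Data.Username, c.Data.Password, addr, dbName)
}
```

The DSN only lives in process memory, which matches the in-memory approach described earlier.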

Since this is a “leased” secret from Vault, its lease must be renewed periodically. Otherwise it will be revoked, and our database connection will break when Vault removes the temporary user from MySQL:

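```go
// Keep the lease alive; without renewal Vault revokes the MySQL user when the TTL expires.
secretRenewer, err := vaultClient.Vault().NewRenewer(&vaultapi.RenewerInput{Secret: secret})
if err != nil {
	vaultClient.Close()
	return "", errors.Wrap(err, "failed to start db credential renewer")
}

// Renew blocks while it keeps renewing the lease, so run it in its own goroutine.
go secretRenewer.Renew()
```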

The library will make sure that the leased database secret is always refreshed close to the end of the TTL interval.

Learn from the code

As you can see, with this solution Pipeline is able to connect to MySQL simply by virtue of running under a configured Kubernetes Service Account, without anyone having to type a single username or password while configuring the application.

The code implementing the dynamic secret allocation for database connections and the Vault configuration described above can be found in our open source project, Bank-Vaults.

This piece of code shows our exact usage in Pipeline.

We hope this post on security has been useful. The next post in this series will discuss topics such as how to configure Vault for our forthcoming production environment, how to store cloud provider credentials for our users, and how to unseal Vault in the middle of the night without much fuss.

Learn more about Bank-Vaults:

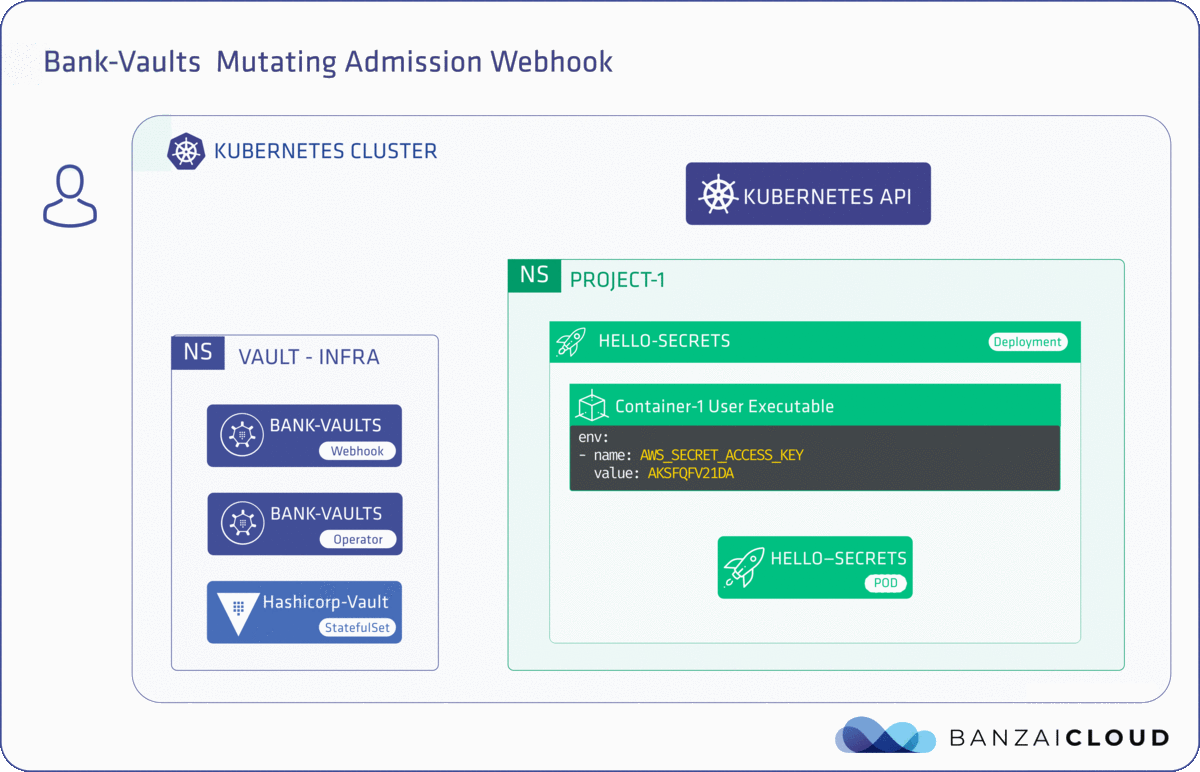

- Secret injection webhook improvements

- Backing up Vault with Velero

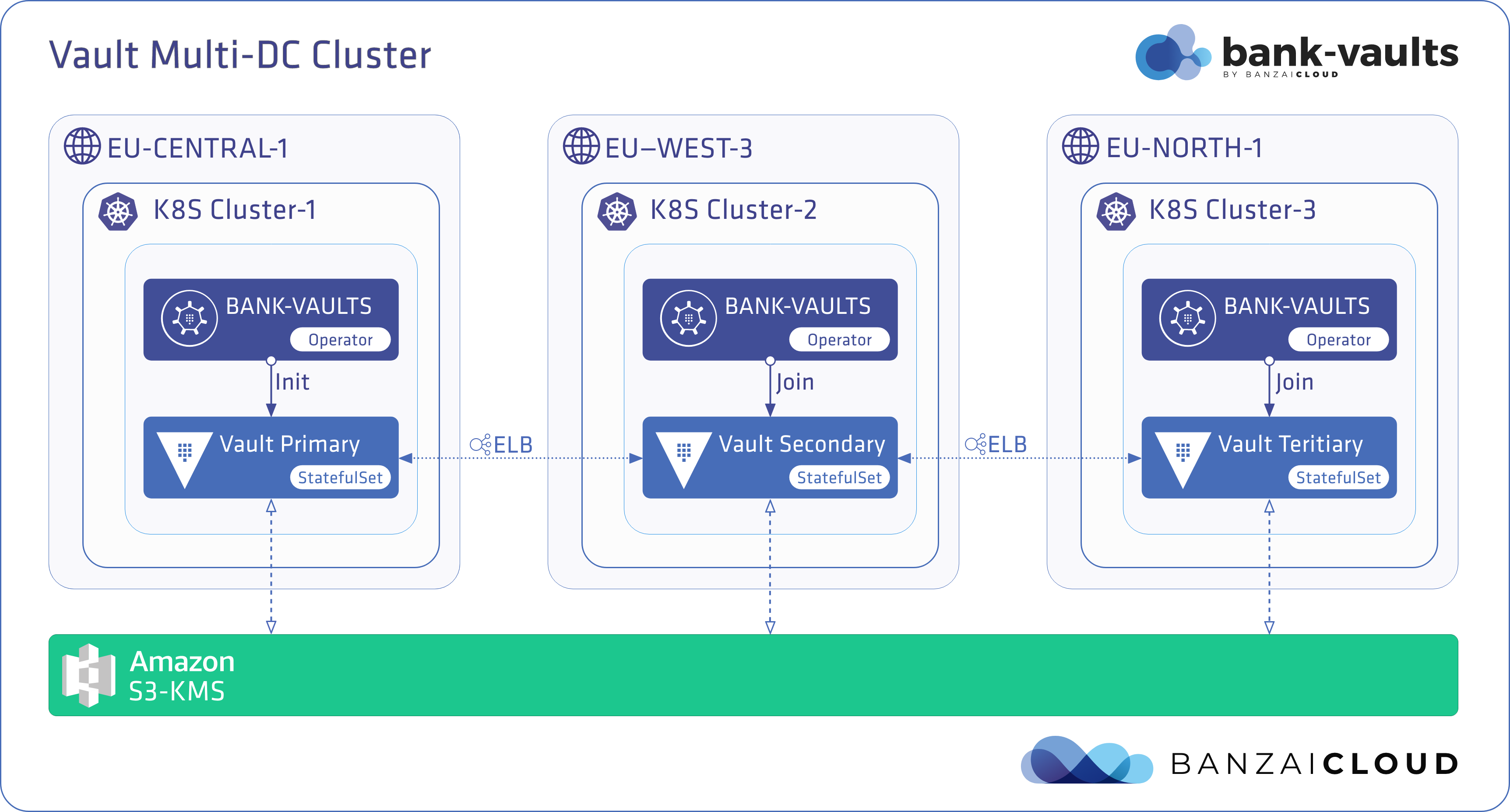

- Vault replication across multiple datacenters

- Vault secret injection webhook and Istio

- Mutate any kind of k8s resources

- HSM support

- Injecting dynamic configuration with templates

- OIDC issuer discovery for Kubernetes service accounts
