Note: The Spotguides feature mentioned in this post is outdated and not available anymore. In case you are interested in a similar feature, contact us for details.

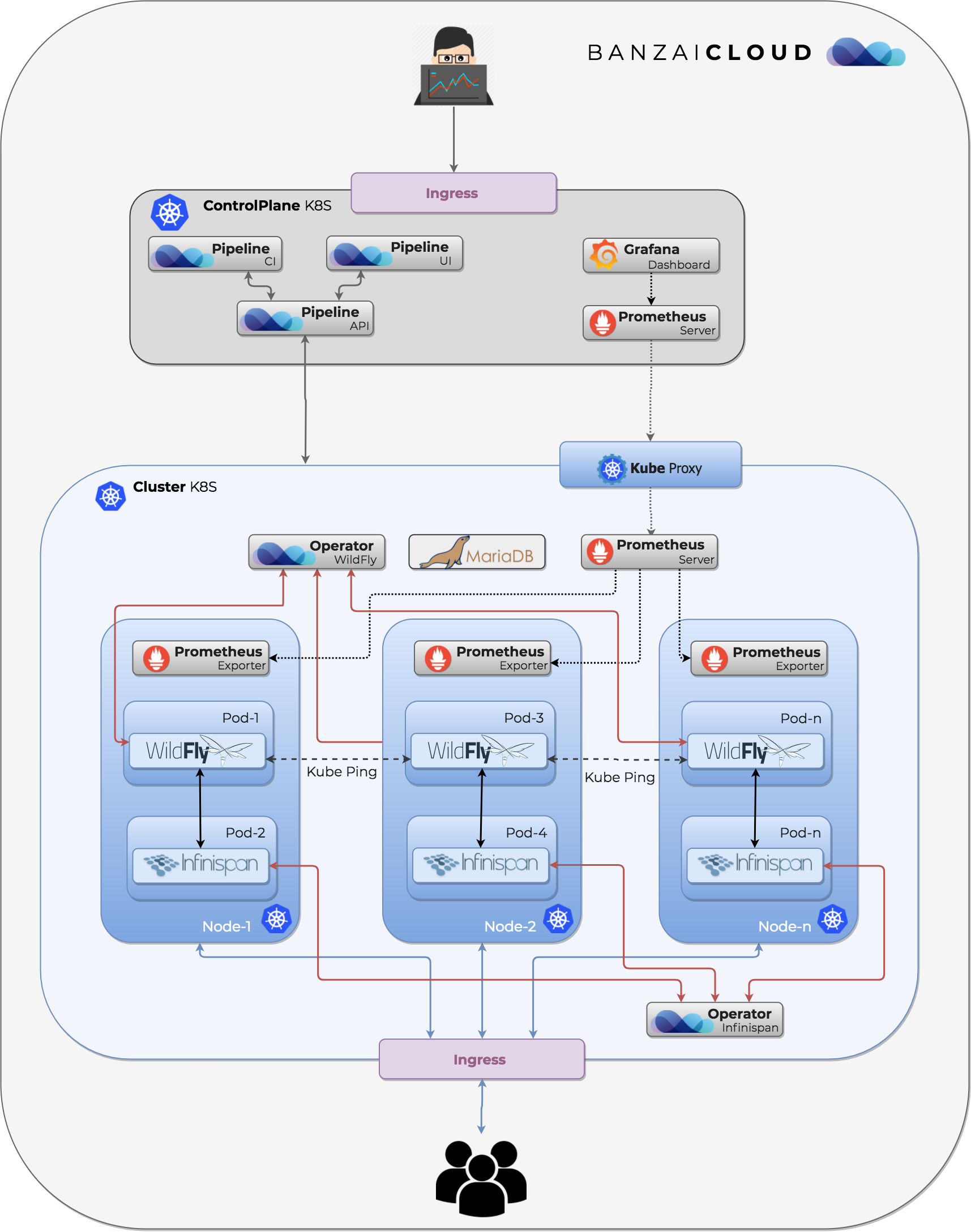

A good number of years ago, back at the beginning of this century, most of us here at Banzai Cloud were in the Java Enterprise business, building application servers (BEA Weblogic and JBoss) and JEE applications. Those days are gone: the technology stack and landscape have changed dramatically, and monolithic applications are out of fashion, yet lots of them are still running in production. Because of our background, we have a personal investment in helping to shift Java Enterprise Edition business applications towards microservices, managed deployments, Kubernetes, and the cloud using Pipeline.

We have a fully automated migration path for JEE (and for general monolithic Java) applications, which we will be blogging about and open sourcing, so stay tuned. This post covers the first and easiest step.

The first step in converting J2EE/JEE legacy applications to a suite of microservices is usually to package the whole application server and JEE application within a container, then to run multiple such containers in the cloud or on Kubernetes. The advantage is that little or no code change is required and, as you will soon see with our example application, you can quickly have a cluster of servers up and running your application, providing comfortable JEE services like session replication, EJB, Java Messaging, etc.

These Java containers need to be properly sized.

For an application server, we’ve picked the Wildfly App Server, a very popular open source JEE server that offers full-stack JEE services while also being modular. You can start up servers in standalone mode, after which they discover each other, then let Kubernetes resize your cluster based on different metrics and resource requests. Note that this practice should work with other application servers, provided they offer some mechanism for discovery.

WildFly, formerly known as JBoss AS, or simply JBoss, is an open source application server authored by JBoss, now developed by Red Hat.

tl;dr:

- Our goal is to demonstrate that, with little or no code or descriptor change, you can easily port a standard JEE application to a Kubernetes cluster. For this purpose we picked the Petstore Application for Java EE 7, which covers the JEE specification, and we want to deploy it to several Wildfly App Servers running in distributed mode on Kubernetes. For deployment we’ll use a Kubernetes operator for Wildfly, which gives users the ability to create and manage Wildfly applications just like built-in Kubernetes resources. We need to add some additional resources to the default Wildfly images to support distributed mode on Kubernetes, and to make some minor modifications to the Petstore app.
- We open sourced a Wildfly operator.
- We are wiring Pipeline’s CI/CD component to automatically build a Kubernetes-ready JEE app from code. This will be similar to the already supported Spark, Zeppelin, Spring Boot, NodeJS, and many other frameworks.

Note: The Pipeline CI/CD module mentioned in this post is outdated and not available anymore. You can integrate Pipeline to your CI/CD solution using the Pipeline API. Contact us for details.

- We strive to size these Java containers correctly.

Wildfly Docker image

We want to start up the WildFly application server in standalone mode, but we also want session replication, Infinispan caches, the EJB layer and JMS to work, so we need to form an HA cluster. The foundation of HA clustering in Wildfly is JGroups. By default, cluster member discovery is based on a multicast protocol, which is generally not available in the cloud. Fortunately, the KUBE_PING Kubernetes discovery protocol for JGroups can help us out: it discovers nodes by asking Kubernetes for a list of IP addresses of selected cluster nodes, using label and namespace selectors. JGroups instances configured to use KUBE_PING find every Pod running a Wildfly server and join together into a cluster. KUBE_PING is not included in the default Wildfly image, so we have added it along with our own default standalone.xml config to use as a base for clustering.
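To get a feel for what KUBE_PING asks Kubernetes for, the same kind of lookup can be approximated from the command line. This is only a sketch and not how JGroups implements discovery: the helper name `list_member_ips` is made up for illustration, and the `default` namespace and `app=my-label` selector in the usage comment are assumptions (the label matches the example resource used later in this post).

```shell
# Hypothetical helper: print the IPs of all pods that match a label
# selector in a namespace, similar in spirit to the member list that
# KUBE_PING requests from the Kubernetes API.
#   $1 = namespace, $2 = label selector
list_member_ips() {
  kubectl get pods \
    --namespace "$1" \
    --selector "$2" \
    --output jsonpath='{.items[*].status.podIP}'
}

# Example (assumed namespace and label):
#   list_member_ips default app=my-label
```

If the list is empty or misses a pod, the label or namespace selector is a likely place to start looking when nodes fail to join the cluster.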

Modifications required to Petstore app

To achieve session replication across nodes, it is necessary to apply a small temporary fix to LoggingInterceptor, and to add a distributable tag to web.xml, which enables clustering of web applications. Other than that, we’ve added only the resources necessary to build the Wildfly image. You can check out our modifications in the master-k8s branch of our fork, the petstore-ee7-kubernetes repository on GitHub.
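The distributable tag is the standard `<distributable/>` element of the servlet deployment descriptor. As a rough sketch, a descriptor can be checked for it before building the image; the helper name `is_distributable` and the file path in the usage comment are assumptions for illustration, not part of the Petstore project.

```shell
# Hypothetical helper: succeed if the given web.xml contains the
# <distributable/> element that marks a web application as clusterable.
#   $1 = path to web.xml
is_distributable() {
  grep -Eq '<distributable[[:space:]]*/?>' "$1"
}

# Example (assumed path inside the application sources):
#   is_distributable src/main/webapp/WEB-INF/web.xml && echo "clustering enabled"
```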

Wildfly operator

Wildfly Operator lets you describe and deploy your JEE application on the Wildfly server by creating a WildflyAppServer custom resource in Kubernetes. Once you deploy a WildflyAppServer resource, the operator starts up a WildFly server cluster with the given number of nodes, running from the provided container image in standalone mode. The JEE application to deploy must be in the provided image, in the /opt/jboss/wildfly/standalone/deployments folder. The operator also starts a load balancer service, so that the Wildfly Management Console and the deployed web application are reachable from outside. You can use the default standalone Full HA configuration for Kubernetes, or you can provide your own in a ConfigMap. In the latter case, you’ll have to specify the name of the ConfigMap and the key containing the standalone.xml configuration.

The default Wildfly username/password is admin/wildfly. You can set up your own in a Kubernetes Secret, which should have the same name as your WildflyAppServer resource. The Wildfly Operator is built on the new Kubernetes Operator SDK; see the Operator SDK project for more details.

Steps to starting up WildflyAppServer nodes on Kubernetes

- Cluster creation

  You may use your Minikube cluster, or quickly provision a cluster in the cloud (AWS / Azure / Google Cloud) with Pipeline. After your cluster is created, don’t forget to set `KUBECONFIG` to be able to use `helm` in the next step.

- Install the MariaDB chart

  In the Banzai Cloud Helm repository we have a ready-to-use chart to deploy MariaDB. If you’re using Minikube you have to add this repo:

  ```shell
  helm repo add banzaicloud-stable http://kubernetes-charts.banzaicloud.com
  # Helm 2 syntax: -n sets the release name
  helm install -n demo \
    --set Release.Name=demo \
    --set mariadbUser=petstore \
    --set mariadbPassword=petstore \
    --set mariadbDatabase=petstore \
    banzaicloud-stable/mariadb
  ```

- Download Wildfly operator definitions and deploy:

  ```shell
  # Download the raw manifests, then create the RBAC rules and the operator
  wget https://raw.githubusercontent.com/banzaicloud/wildfly-operator/master/deploy/rbac.yaml
  wget https://raw.githubusercontent.com/banzaicloud/wildfly-operator/master/deploy/operator.yaml
  kubectl create -f rbac.yaml
  kubectl create -f operator.yaml
  ```

- Deploy Petstore Application

  This time we’re going to use the default configuration and the default user/password, so we only need to pass the MariaDB connection properties to `dataSourceConfig`. The resource definition below will start two instances of `WildflyAppServer` in standalone mode, with the Petstore application deployed.

  ```shell
  cat > example-app.yaml <<EOF
  apiVersion: "wildfly.banzaicloud.com/v1alpha1"
  kind: "WildflyAppServer"
  metadata:
    name: "wildfly-example"
    labels:
      app: my-label
  spec:
    nodeCount: 2
    image: "banzaicloud/wildfly-jee-petstore:0.1.6"
    applicationPath: "applicationPetstore"
    labels:
      app: my-label
    dataSourceConfig:
      mariadb:
        hostName: "demo-mariadb"
        databaseName: "petstore"
        jdniName: "java:jboss/datasources/ExampleDS"
        user: "petstore"
        password: "petstore"
  EOF
  kubectl apply -f example-app.yaml
  ```

- Get Management Console and Application URLs

  ```shell
  kubectl describe WildflyAppServer
  ```

  In this example the Management Console is reachable at http://35.232.66.116:9990, while the Petstore application is at http://35.232.66.116:8080/applicationPetstore.

  ```
  Status:
    External Addresses:
      Application:  35.232.66.116:8080
      Management:   35.232.66.116:9990
    Nodes:
      wildfly-example-84b5986dbf-gxhbv
      wildfly-example-84b5986dbf-zvwml
  ```

Now you can resize your Wildfly cluster by updating `spec.nodeCount` with `kubectl edit WildflyAppServer wildfly-example`. You can also manually delete pods while checking that your Petstore application is still available and that your HTTP session is still alive, i.e. you are still logged in.
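For scripted resizing, the same field can be changed without opening an editor. The following is a minimal sketch using a JSON merge patch; the helper name `scale_wildfly` is hypothetical, and it assumes the operator reacts to a patched `spec.nodeCount` the same way it reacts to an interactive edit.

```shell
# Hypothetical helper: set spec.nodeCount on a WildflyAppServer resource
# with a JSON merge patch instead of an interactive edit.
#   $1 = resource name, $2 = desired node count
scale_wildfly() {
  name="$1"
  count="$2"
  kubectl patch WildflyAppServer "$name" \
    --type merge \
    --patch "{\"spec\":{\"nodeCount\":${count}}}"
}

# Example: grow the cluster from this post to three nodes.
#   scale_wildfly wildfly-example 3
```

A merge patch is used here because custom resources do not support the strategic merge patch type that `kubectl patch` applies by default.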

Our next goal is to provide full spotguide support for standard JEE applications, enabling deployment of these applications with minimal code change, with logging, monitoring and autoscaling out of the box using Pipeline, so stay tuned. This would be a first step towards converting your enterprise applications to a microservices-oriented architecture in a semi-automated way, where you would get automatic recommendations on how to split your monolith into several smaller services.